FIGURE 14.1 Polly Klaas.


“Well, I never heard it before,” said the Mock Turtle; “but it sounds uncommon nonsense.” ~ Lewis Carroll, Alice in Wonderland

Chapter 14

Consciousness, Free Will, and the Law

OUTLINE

Anatomical Orientation

Consciousness

Neurons, Neuronal Groups, and Conscious Experience

The Emergence of the Brain Interpreter in the Human Species

Abandoning the Concept of Free Will

The Law

ON OCTOBER 1, 1993, 12-year-old Polly Klaas (Figure 14.1) had two girlfriends over to her suburban home in Petaluma, California, for a slumber party. At about 10:30 p.m., with her mother and sister asleep down the hall, Polly opened her bedroom door to get her friends’ sleeping bags from the living room. An unknown man with a knife was standing in her doorway. He made the girls lie down on their stomachs, tied them up, put pillowcases over their heads, and kidnapped Polly. Fifteen minutes later, the two friends freed themselves and ran to wake Polly’s mother, who made a frantic 911 call. A massive ground search was launched to find Polly and her abductor—who, it was later found, had left his palm print behind in Polly’s room. Over the course of 2 months, 4,000 volunteers helped with the search.

The year 1993 was early in the history of the Internet. The system was not used for information sharing to the extent that it is today. While local businesses donated thousands of posters and paid for and mailed 54 million flyers, two local residents contacted the police and suggested digitizing Polly’s missing child poster and using the Internet to disseminate the information about her. This approach had never been taken before. Thanks to all these efforts, Polly’s plight became widely publicized and known nationally and internationally. Two months later, a twice-convicted kidnapper with a history of assaults against women, Richard Allen Davis, was arrested on a parole violation. He had been paroled 5 months earlier after serving half of a 16-year sentence for kidnapping, assault, and robbery. He had spent 18 of the previous 21 years in and out of prison. After his release, he had been placed in a halfway house, had a job doing sheet-metal work, was keeping his parole officer appointments, and was passing his drug tests. As soon as he had enough money to buy a car, however, things changed. He stopped showing up at work, disappeared from the halfway house, and was in violation of his parole. When Davis’s prints were found to match the palm print left behind in Polly’s room, he was charged with her abduction. Four days after his arrest, he led police to a place about 50 miles north of Petaluma and showed them Polly’s half-dressed, decomposed body, lying under a blackberry bush and covered with a piece of plywood. Davis admitted to strangling her twice, once with a cloth garrote and again with a rope to be sure she was dead. He was later identified by several residents as having been seen in the park across the street from Polly’s house or in the neighborhood during the 2 months before her abduction.


Davis was tried and found guilty of the first-degree murder of Polly Klaas with special circumstances, which included robbery, burglary, kidnapping, and a lewd act on a child. This verdict made him eligible for the death sentence in California, which the jury recommended. Polly’s father stated, “It doesn’t bring our daughter back into our lives, but it gets one monster off the streets,” and agreed with the jury, saying that, “Richard Allen Davis deserves to die for what he did to my child” (Kennedy, 1996).

Incapacitation, retributive punishment, and rehabilitation are the three choices society has for dealing with criminal behavior. The judge does the sentencing, and in this case, the jury’s recommendation was followed. Richard Allen Davis is currently on California’s death row. When society considers public safety, it is faced with the decision about which perspective those making and enforcing the laws should take: retribution, an approach focused on punishing the individual and bestowing “just deserts,” or consequentialism, a utilitarian approach holding that what is right is what has the best consequences for society.

Polly’s kidnapping and murder were a national and international story that sparked widespread outrage. Not only was a child taken from the supposed safety of her home while her mother was present, but the perpetrator was a violent repeat offender who had been released early from prison and again was free to prey upon innocent victims. Although early release was common, most of the public was unaware of its scope. Following the Polly Klaas case, people demanded a change. Many thought that Davis should not have been paroled, that he was still a threat. They also thought that certain behavior warranted longer incarceration. The response was swift. In 1993, Washington State passed the first three-strikes law, mandating that criminals convicted of serious offenses on three occasions be sentenced to life in prison without the possibility of parole. The next year, California followed suit: 72% of voters supported that state’s version of the three-strikes law, which mandated a sentence of 25 years to life for the third felony conviction. Several states have enacted similar habitual offender laws designed to counter criminal recidivism through physical incapacitation via imprisonment.

Throughout this book, we have come to see that our essence, who we are and what we do, is the result of our brain processes. We are born with an intricate brain, slowly developing under genetic control, with refinements being made under the influence of the environment and experience. The brain has particular skill sets, with constraints, and a capacity to generalize. All of these traits, which evolved under natural selection, are the foundation for a myriad of distinct cognitive abilities represented in different parts of the brain. We have seen that our brains possess thousands, perhaps millions, of discrete processing centers and networks, commonly referred to as modules, working outside of our conscious awareness and biasing our responses to life’s daily challenges. In short, the brain has distributed systems running in parallel. It also has multiple control systems. What makes some of these brain findings difficult to accept, however, is that we feel unified and in control: We feel that we are calling the shots and can consciously control all our actions. We do not feel at the mercy of multiple systems battling it out in our heads. So what is this unified feeling of consciousness, and how does it come about? The question of what exactly consciousness is and what processes are contributing to it remains the holy grail of neuroscience. What are the neural correlates of consciousness? Are we in conscious control or not? Are all animals equally conscious, or are there degrees of consciousness? We begin the chapter by looking at these questions.

As neuroscience comes to an increasingly physicalist understanding of brain processing, some people’s notions about free will are being challenged. This deterministic view of behavior disputes long-standing beliefs about what it means for people to be responsible for their actions. Some scholars assert the extreme view that humans have no conscious control over their behavior, and thus, they are never responsible for any of their actions. These ideas challenge the very foundational rules regulating how we live together in social groups. Yet research has shown that both accountability and what we believe influence our behavior. Can a mental state affect the physical processing of our brain? After we examine the neuroscience of consciousness, we will tackle the issue of free will and personal responsibility. In so doing, we will see whether our mental states do, indeed, influence our neuronal processes.

Philosopher Gary Watson pointed out that we shape the rules that we decide to live by. From a legal perspective, we are the law because we make the law. Our emotional reactions contribute to the laws we make. If we come to understand that our retributive responses to antisocial behavior are innate and have been honed by evolution, can or should we try to amend or ignore them and not let them affect the laws we create? Or are these reactions the sculptors of a civilized society? Do we ignore them to our peril? Is accountability what keeps us civilized, and should we be held accountable for our behavior? We close the chapter by looking at these questions.

ANATOMICAL ORIENTATION

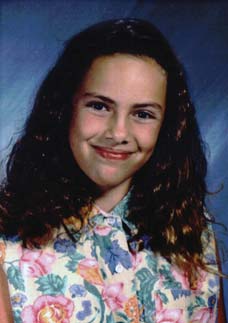

The anatomy of consciousness

The cerebral cortex, the thalamus, the brainstem, and the hypothalamus are largely responsible for the conscious mind.

Anatomical Orientation

The conscious mind primarily depends on three brain structures: the brainstem, including the hypothalamus; the thalamus; and the cerebral cortex (see the Anatomical Orientation box). When we look at the anatomical regions that contribute to consciousness, it is helpful to distinguish wakefulness from simple awareness and from more complex states. Neurologist Antonio Damasio has done this for us. First, he makes the point that wakefulness is necessary for consciousness (except in dream sleep), but consciousness is not necessary for wakefulness. For example, patients in a vegetative state may be awake, but not conscious. Next, he divides consciousness into two categories: core consciousness and extended consciousness (Damasio, 1998). Core consciousness (or awareness) is what goes on when the consciousness switch is flipped “on.” The organism is alive, awake, alert, and aware of one moment, now, and one place, here. It is not concerned with the future or the past. Core consciousness is the foundation for building increasingly complex levels of consciousness, which Damasio calls extended consciousness. Extended consciousness provides an organism with an elaborate sense of self. It places the self in individual historic time, includes thoughts of the past and future, and depends on the gradual buildup of an autobiographical self from memories and expected future experiences. Thus, consciousness has nested layers of organizational complexity (Damasio & Meyer, 2008).

The Brainstem

The brain regions needed to modulate wakefulness, and to flip the consciousness “on” switch, are located in the evolutionarily oldest part of the brain, the brainstem. The primary job of brainstem nuclei is homeostatic regulation of the body and brain. This is performed mainly by nuclei in the medulla oblongata along with some input from the pons. Disconnect this portion of the brainstem, and the body dies (and the brain along with it). This is true for all mammals. Above the medulla are the nuclei of the pons and the mesencephalon. Within the pons are the reticular formation and the locus coeruleus (LC). The reticular formation is a heterogeneous collection of nuclei contributing to a number of neural circuits involved with motor control, cardiovascular control, pain modulation, and the filtering out of irrelevant sensory stimuli. Some nuclei influence the entire cortex via direct cortical connections, and some do so through neurons that make up the neural circuits of the reticular activating system (RAS). The RAS has extensive connections to the cortex via two pathways. The dorsal pathway courses through the intralaminar nucleus of the thalamus to the cortex, and the ventral pathway zips through the hypothalamus and the basal forebrain and on to the cortex. The RAS is involved with arousal, regulating sleep–wake cycles, and mediating attention. Damage or disruption to the RAS can result in coma. Depending on the location, damage to the pons could result in locked-in syndrome, coma, a vegetative state, or death.

Arousal is also influenced by the outputs of the LC in the pons, which is the main site of norepinephrine production in the brain. The LC has extensive connections throughout the brain and, when active, prevents sleep by activating the cortex. With cell bodies located in the brainstem, it has projections that follow a route similar to that of the RAS up through the thalamus.

From the spinal cord, the brainstem receives afferent input carrying pain, interoceptive, somatosensory, and proprioceptive information. It also receives vestibular information from the ear and afferent signals from the thalamus, hypothalamus, amygdala, cingulate gyrus, insula, and prefrontal cortex. Thus, information about the state of the organism in its current milieu, along with ongoing changes in the organism’s state as it interacts with objects and the environment, is all mediated via the brainstem.

The Thalamus

The neurons that connect the brainstem with the intralaminar nuclei (ILN) of the thalamus play a key role in core consciousness. The thalamus has two ILN, one on the right side and one on the left. Small and strategically placed bilateral lesions to the ILN in the thalamus turn core consciousness off forever, although a lesion in one alone will not. Likewise, if the neurons connecting the thalamic ILN and the brainstem are severed or blocked, so that the ILN do not receive input signals, core consciousness is lost.

We know from previous chapters that the thalamus is a well-connected structure. As a result, it has many roles relating to consciousness. First, all sensory input, both about the body and about the surrounding world (except smell, as we learned in Chapter 5), passes through the thalamus. This brain structure is also important to arousal, processing information from the RAS that arouses the cortex or contributes to sleep. The thalamus also has neuronal connections linking it to specific regions all over the cortex. Those regions send connections straight back to the thalamus, thus forming connection loops. These circuits contribute to consciousness by coordinating activity throughout the cortex. Lesions anywhere from the brainstem up to the cortex can disrupt core consciousness.

The Cerebral Cortex

In concert with the brainstem and thalamus, the cerebral cortex maintains wakefulness and contributes to selective attention. Extended consciousness begins with contributions from the cortex that help generate the core of self. These contributions are records from the memory bank of past activities, emotions, and experiences. Damage to the cortex may result in the loss of a specific ability, but not loss of consciousness itself. We have seen examples of these deficits in previous chapters. For instance, in Chapter 7, we came across patients with unilateral lesions to their parietal cortex: These people were not conscious of half of the space around them; that is, they suffered neglect.


Consciousness

The problem of consciousness, otherwise known as the mind–brain problem, was originally the realm of philosophers. The basic question is, how can a purely physical system (the body and brain) construct conscious intelligence (the mind)? In seemingly typical human fashion, philosophers have adopted dichotomous perspectives: dualism and materialism. Dualism, famously expounded by Descartes, states that mind and brain are two distinct and separate phenomena, and conscious experience is nonphysical and beyond the scope of the physical sciences. Materialism asserts that both mind and body are physical mediums and that by understanding the physical workings of the body and brain well enough, an understanding of the mind will follow. Within these philosophies, views differ on the specifics, but each side ignores an inconvenient problem. Dualism tends to ignore biological findings, and materialism overlooks the reality of subjective experience.

Notice that we have been throwing the word consciousness around without having defined it. Unfortunately, this has been a common problem and has led to much confusion in the literature. In both the 1986 and 1995 editions of the International Dictionary of Psychology, the psychologist Stuart Sutherland defined consciousness as follows:

Consciousness: The having of perceptions, thoughts, and feelings; awareness. The term is impossible to define except in terms that are unintelligible without a grasp of what consciousness means. Many fall into the trap of equating consciousness with self-consciousness—to be conscious it is only necessary to be aware of the external world. Consciousness is a fascinating but elusive phenomenon: it is impossible to specify what it is, what it does, or why it evolved. Nothing worth reading has been written on it.

Harvard psychologist Steve Pinker also was confused by the different uses of the word: Some said that only man is conscious; others said that consciousness refers to the ability to recognize oneself in a mirror; some argued that consciousness is a recent invention by man or that it is learned from one’s culture. All these viewpoints provoked him to make this observation:

Something about the topic of consciousness makes people, like the White Queen in Through the Looking Glass, believe six impossible things before breakfast. Could most animals really be unconscious—sleepwalkers, zombies, automata, out cold? Hath not a dog senses, affections, passions? If you prick them, do they not feel pain? And was Moses really unable to taste salt or see red or enjoy sex? Do children learn to become conscious in the same way that they learn to wear baseball caps turned around? People who write about consciousness are not crazy, so they must have something different in mind when they use the word. (Pinker, 1997, p. 133)

In reviewing the work of the linguist Ray Jackendoff of Brandeis University and the philosopher Ned Block at New York University, Pinker pulled together a framework for thinking about the problem of consciousness in his book How the Mind Works (1997). The proposal for ending this consciousness confusion consists of breaking the problem of consciousness into three issues: self-knowledge, access to information, and sentience. Pinker summarized and embellished the three views as follows:

Self-knowledge: Among the long list of people and objects that an intelligent being can have accurate information about is the being itself. As Pinker said, “I can not only feel pain and see red, but think to myself, ‘Hey, here I am, Steve Pinker, feeling pain and seeing red!’” Pinker says that self-knowledge is no more mysterious than any other topic in perception or memory. He does not believe that “navel-gazing” has anything to do with consciousness in the sense of being alive, awake, and aware. It is, however, what most academic discussions have in mind when they bandy about the word consciousness.

Access to information: Access awareness is the ability to report on the content of mental experience without the capacity to report on how the content was built up by all the neurons, neurotransmitters, and so forth, in the nervous system. The nervous system has two modes of information processing: conscious processing and unconscious processing. Conscious processing can be accessed by the systems underlying verbal reports, rational thought, and deliberate decision making and includes the product of vision and the contents of short-term memory. Unconscious processing, which cannot be accessed, includes autonomic (gut-level) responses, the internal operations of vision, language, motor control, and repressed desires or memories (if there are any).

Sentience: Pinker considers sentience to be the most interesting meaning of consciousness. It refers to subjective experience, phenomenal awareness, raw feelings, and the first-person viewpoint—what it is like to be or do something. Sentient experiences are called qualia by philosophers and are the elephant in the room ignored by the materialists. For instance, philosophers are always wondering what another person’s experience is like when they both look at the same color. In a paper spotlighting qualia, philosopher Thomas Nagel famously asked, “What is it like to be a bat?” (1974), which makes the point that if you have to ask, you will never know. Explaining sentience is known as the hard problem of consciousness. Some think it will never be explained.

By breaking the problem of consciousness into these three parts, cognitive neuroscience can be brought to bear on the topic of consciousness. Through the lens of cognitive neuroscience, much can be said about access to information and self-knowledge, but the topic of sentience remains elusive.

FIGURE 14.2 Blindsight.

Conscious Versus Unconscious Processing and the Access of Information

We have seen throughout this book that the vast majority of mental processes that control and contribute to our conscious experience happen outside of our conscious awareness. An enormous amount of research in cognitive science clearly shows that we are conscious only of the content of our mental life, not of what generates the content. For instance, we are aware of the products of memory and perceptual processing, not of the processes that produced them. Thus, when considering conscious processes, it is also necessary to consider unconscious processes and how the two interact. A statement about conscious processing involves conjunction—putting together awareness of the stimulus with the identity, or the location, or the orientation, or some other feature of the stimulus. A statement about unconscious processing involves disjunction—separating awareness of the stimulus from the features of the stimulus such that even when unaware of the stimulus, participants can still respond to stimulus features at an above-chance level.

When Ned Block originally drew distinctions between sentience and access, he suggested that the phenomenon of blindsight provided an example where one existed without the other. Blindsight, a term coined by Larry Weiskrantz at Oxford University (1974; 1986), refers to the phenomenon that patients suffering a lesion in their visual cortex can respond to visual stimuli presented in the blind part of their visual field (Figure 14.2). Most interestingly, these activities happen outside the realm of consciousness. Patients will deny that they can do a task, yet their performance is clearly above that of chance. Such patients have access to information but do not experience it.

Weiskrantz believed that subcortical and parallel pathways and centers could now be studied in the human brain. A vast primate literature had already developed on the subject. Monkeys with occipital lesions not only can localize objects in space but also can make color, luminance, orientation, and pattern discriminations. It hardly seemed surprising that humans could use visually presented information not accessible to consciousness. Subcortical networks with interhemispheric connections provided a plausible anatomy on which the behavioral results could rest.

Since blindsight demonstrates vision outside the realm of conscious awareness, this phenomenon has often been invoked as support for the view that perception happens in the absence of sensation, for sensations are presumed to be our experiences of impinging stimuli. Because the primary visual cortex processes sensory inputs, advocates of the secondary pathway view have found it useful to deny the involvement of the primary visual pathway in blindsight. Certainly, it would be easy to argue that perceptual decisions or cognitive activities routinely result from processes outside of conscious awareness. But it would be difficult to argue that such processes do not involve primary sensory systems.

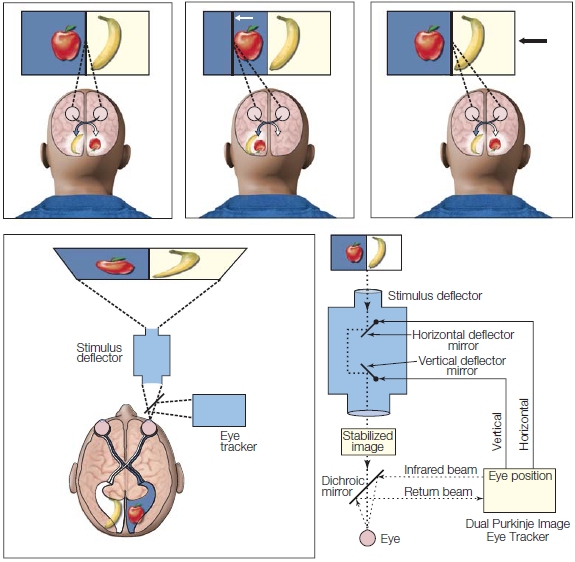

Evidence supports the notion that the primary sensory systems are still involved. Involvement of the damaged primary pathway in blindsight has been demonstrated by Mark Wessinger and Robert Fendrich at Dartmouth College (Fendrich et al., 1992). They investigated this fascinating phenomenon using a dual Purkinje image eye tracker that was augmented with an image stabilizer, allowing for the sustained presentation of information in discrete parts of the visual field (Figure 14.3). Armed with this piece of equipment and with the cooperation of C.L.T., a robust 55-year-old outdoorsman who had suffered a right occipital stroke 6 years before his examination, they began to tease apart the various explanations for blindsight.

FIGURE 14.3 Schematic of the Purkinje image eye tracker.

The eye tracker compensates for a subject’s eye movements by moving the image in the visual field in the same direction as the eyes, thus stabilizing the image on the retina.

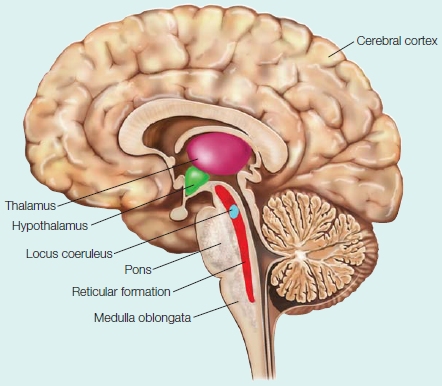

Standard perimetry indicated that C.L.T. had a left homonymous hemianopia with lower-quadrant macular sparing. Yet the eye tracker found small regions of residual vision (Figure 14.4). C.L.T.’s scotoma was explored carefully, using high-contrast, retinally stabilized stimuli and an interval, two-alternative, forced-choice procedure. This procedure requires that a stimulus be presented on every trial and that the participant respond on every trial, even though he denies having seen a stimulus. Such a design is more sensitive to subtle influences of the stimulus on the participant’s responses. C.L.T. also indicated his confidence on every trial. The investigators found regions of above-chance performance surrounded by regions of chance performance within C.L.T.’s blind field. Simply stated, they found islands of blindsight.

Magnetic resonance imaging (MRI) reconstructions revealed a lesion that damaged the calcarine cortex, which is consistent with C.L.T.’s clinical blindness. But MRI also demonstrated some spared tissue in the region of the calcarine fissure. We assume that this tissue mediates C.L.T.’s central vision with awareness. Given this, it seems reasonable that similar tissue mediates C.L.T.’s islands of blindsight. More important, both positron emission tomography (PET) and functional magnetic resonance imaging (fMRI) conclusively demonstrated that these regions are metabolically active—these areas are alive and processing information! Thus, the most parsimonious explanation for C.L.T.’s blindsight is that it is directed by spared, albeit severely dysfunctional, remnants of the primary visual pathway rather than by a more general secondary visual system.

Before it can be asserted that blindsight is due to subcortical or extrastriate structures, we first must be extremely careful to rule out the possibility of spared striate cortex. With careful perimetric mapping, it is possible to discover regions of vision within a scotoma that would go undetected with conventional perimetry. Through such discoveries, we can learn more about consciousness.

FIGURE 14.4 Results of stabilized image perimetry in left visual hemifield.

Results of stabilized image perimetry in C.L.T.’s left visual hemifield. Each test location is represented by a circle. The number in a circle represents the percentage of correct detections. The number under the circle indicates the number of trials at each location. White circles are unimpaired detection, green circles are impaired detection that was above the level of chance, and purple circles indicate detection that was no better than chance.
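The caption reports, for each test location, the percentage of correct detections and the number of trials. Whether a given percentage counts as above chance depends on how many trials it rests on. The Python sketch below shows one standard way to make that call for a two-alternative forced-choice task, where pure guessing yields 50% correct: an exact one-sided binomial test. The 0.05 criterion, the function names, and the example counts are illustrative assumptions, not the analysis or data reported for C.L.T.

```python
from math import comb


def binomial_tail_p(correct: int, trials: int, chance: float = 0.5) -> float:
    """Exact one-sided binomial p-value: the probability of getting at least
    `correct` responses right out of `trials` if every response were a guess
    that succeeds with probability `chance` (0.5 in a two-alternative task)."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


def above_chance(correct: int, trials: int, alpha: float = 0.05) -> bool:
    """True if performance at a location is unlikely to arise from guessing
    alone (illustrative criterion: one-sided p < alpha)."""
    return binomial_tail_p(correct, trials) < alpha


# Hypothetical test locations: (correct detections, trials)
for correct, trials in [(8, 10), (55, 100), (70, 100)]:
    p = binomial_tail_p(correct, trials)
    print(f"{correct}/{trials} correct: p = {p:.4f}, "
          f"above chance: {above_chance(correct, trials)}")
```

Under this criterion, 8 correct out of 10 trials (80%) gives p = 56/1024, about 0.055, and so does not clear the 0.05 bar, whereas 70 correct out of 100 (70%) does. A lower percentage backed by many trials can therefore be stronger evidence of residual vision than a higher percentage from only a few trials.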

Reports of vision without awareness in other neurological populations can also inform us about consciousness. It is commonplace to design demanding perceptual tasks on which both neurological and nonneurological participants routinely report low confidence but perform at a level above chance. Yet it is unnecessary to propose secondary visual systems to account for such reports, since the primary visual system is intact and fully functional. For example, patients with unilateral neglect (see Chapter 7) as a result of right-hemisphere damage are unable to name stimuli entering their left visual field. The conscious brain cannot access this information. When asked to judge whether two lateralized visual stimuli, one in each visual field, are the same or different (Figure 14.5), however, these same patients can do so. When they are questioned on the nature of the stimuli after a trial, they easily name the stimulus in the right visual field but deny having seen the stimulus in the neglected left field. In short, patients with parietal lobe damage but spared visual cortex can make perceptual judgments outside of conscious awareness. Their failure to consciously access information for comparing the stimuli should not be attributed to processing within a secondary visual system, because their geniculostriate pathway is still intact. They lost the function of a chunk of parietal cortex, and because of that loss, they lost a chunk of conscious awareness.

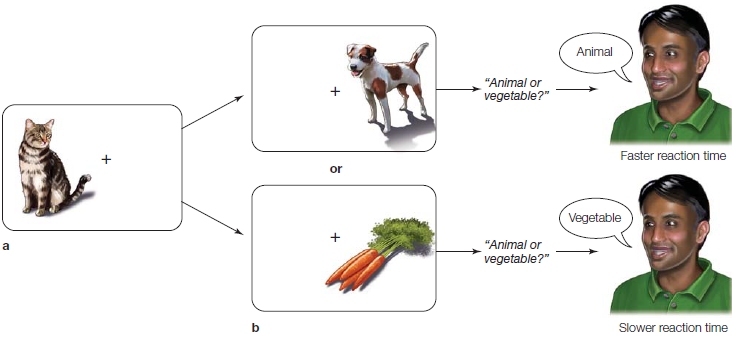

The Extent of Subconscious Processing

A variety of reports extended these initial observations that information presented in the extinguished visual field can be used for decision making. In fact, quite complex information can be processed outside of conscious awareness (Figure 14.6). In one study of neglect patients with right-hemisphere damage, a picture of a fruit or an animal was quickly presented to the left visual field. Subsequently, a picture of the same item or of an item in the same category was presented to the right visual field. In another condition, the pictures presented in each field had nothing to do with each other (Volpe et al., 1979). All patients in the study denied that a stimulus had been presented in the left visual field. When the two pictures were related, however, patients responded faster than they did when the pictures were unrelated. The reaction time to the unrelated pictures did not increase. In short, high-level information was being exchanged between processing systems, outside the realm of conscious awareness.

The vast staging for our mental activities happens largely without our monitoring. The stages of this production can be identified in many experimental venues. The study of blindsight and neglect yields important insights. First, it underlines a general feature of human cognition: Many perceptual and cognitive activities can and do go on outside the realm of conscious awareness. We can access information of which we are not sentient. Further, this feature does not necessarily depend on subcortical or secondary processing systems: More than likely, unconscious processes related to cognitive, perceptual, and sensory-motor activities happen at the level of the cortex. To help understand how consciousness and unconsciousness interact within the cortex, it is necessary to investigate both conscious and unconscious processes in the intact, healthy brain.

FIGURE 14.5 The same–different paradigm presented to patients with neglect.

Richard Nisbett and Lee Ross (1980) at the University of Michigan clearly made this point. In a clever experiment, using the tried-and-true technique of learning word pairs, they first exposed participants to word associations like ocean–moon. The idea was that participants might subsequently say “Tide” when asked to free-associate to the word detergent. That is exactly what they did, but they did not know why. When asked, they might say, “Oh, my mother always used Tide to do the laundry.” As we know from Chapter 4, that was their left-brain interpreter system coming up with an answer from the information that was available to it.

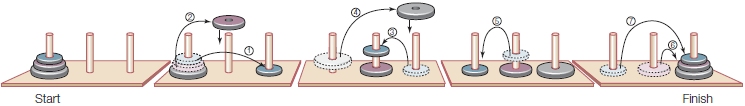

Now, any student will commonly and quickly declare that he is fully aware of how he solves a problem even when he really does not know. Students solve the famous Tower of Hanoi problem (Figure 14.7) all the time. When researchers listen to the running discourse of students articulating what they are doing and why they are doing it, the result can be used to write a computer program to solve the problem. The participant calls on facts known from short- and long-term memory. These events are accessible to consciousness and can be used to build a theory for their action. Yet no one is aware of how the events became established in short- or long-term memory. Problem solving is going on at two different levels, the conscious and the unconscious, but we are aware of only one.

FIGURE 14.6 Category discrimination test presented to patients with right-sided neglect.

(a) A picture of an item, such as a cat, was flashed to the left visual field. (b) A picture of the same item or a related item, such as a dog, was presented to the right visual field, and the participant was asked to discriminate the category that the second item belonged to. If the items were related by category, the time needed to categorize the second item was shorter.

FIGURE 14.7 The Tower of Hanoi problem.

The task is to move the stack of rings to another tower, one ring at a time, without ever putting a larger ring on top of a smaller ring. It can be done in seven steps, and after much practice, students learn the task. After they have solved it, however, their explanations for how they solved it can be quite bizarre.
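The seven-step figure can be checked with a short recursive sketch. It assumes three rings numbered 1 (smallest) to 3 (largest) and pegs labeled A, B, and C; those labels and the function names `hanoi_moves` and `is_legal` are hypothetical. This is the standard recursive solution, not the program researchers derived from students' verbal reports: To move n rings, move the n − 1 smaller rings onto the spare peg, move the largest ring, and then restack the smaller rings on top of it, for 2^n − 1 moves in total.

```python
def hanoi_moves(n, source="A", target="C", spare="B"):
    """Return the (ring, from_peg, to_peg) moves that transfer n rings
    from source to target using the standard recursive strategy."""
    if n == 0:
        return []
    # Park the n-1 smaller rings on the spare peg, move the largest ring,
    # then restack the smaller rings on top of it.
    return (hanoi_moves(n - 1, source, spare, target)
            + [(n, source, target)]
            + hanoi_moves(n - 1, spare, target, source))


def is_legal(moves, n, source="A", target="C", spare="B"):
    """Replay the moves and check that no ring ever lands on a smaller one."""
    # Each peg is a list with the top ring at the end; ring n is the largest.
    pegs = {source: list(range(n, 0, -1)), target: [], spare: []}
    for ring, frm, to in moves:
        if not pegs[frm] or pegs[frm][-1] != ring:
            return False  # the named ring is not on top of its peg
        if pegs[to] and pegs[to][-1] < ring:
            return False  # a larger ring would sit on a smaller one
        pegs[to].append(pegs[frm].pop())
    return pegs[target] == list(range(n, 0, -1))


moves = hanoi_moves(3)
print(len(moves))  # 7
for move in moves:
    print(move)
print(is_legal(moves, 3))  # True
```

Running it prints the move count followed by each individual move; `is_legal` replays the sequence to confirm that the rule against placing a larger ring on a smaller one is never broken.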

Cognitive psychologists also have examined the extent and kind of information that can be processed unconsciously. Freud staked out the most complex range, where the unconscious was hot and wet: Deep emotional conflicts are fought there, and their resolution slowly makes its way to conscious experience. Other psychologists placed more stringent constraints on what can be processed. Many researchers maintain that only low-level stimuli (like the lines forming the letters of a word, not the word itself) can be processed unconsciously. Over the last century, these matters have been examined time and again; only recently has unconscious processing been examined in a cognitive neuroscience setting.

The classic approach was to use the technique of subliminal perception. Here a picture of a girl either throwing a cake at someone, or simply presenting the cake in a friendly manner, is flashed quickly. A neutral picture of the girl is presented subsequently, and the participant proves to be biased in judging the girl’s personality based on the subliminal exposures he received (Figure 14.8). Hundreds of such demonstrations have been recounted, although they are not easy to replicate. Many psychologists maintain that elements of the picture are captured subconsciously and that this result is sufficient to bias judgment.

FIGURE 14.8 Testing subliminal perception.

A participant is quickly shown just one picture of a girl, similar to the images in the top row, in such a way that the participant is not consciously aware of the picture’s content. The participant is then shown a neutral picture (bottom row) and is asked to describe the girl’s character. Judgments of the girl’s character have been found to be biased by the previous subthreshold presentation.

Cognitive psychologists have sought to reaffirm the role of unconscious processing through various experimental paradigms. A leader in this effort has been Tony Marcel of Cambridge University (1983a, 1983b). Marcel used a masking paradigm in which the brief presentation of either a blank screen or a word was followed quickly by a masking stimulus consisting of a crosshatch of letters. One of two tasks followed presentation of the masking stimulus. In a detection task, participants merely had to decide whether a word had been presented. On this task, participants performed at chance: They simply could not tell whether a word had been presented. If the task became a lexical decision task, however, the subliminally presented stimulus had effects. Here, following presentation of the masking stimulus, a string of letters was presented and participants had to specify whether the string formed a word. Marcel cleverly manipulated the subthreshold words so that some were related to the letter string and some were not. If the subthreshold word had been processed at least to the lexical level, related words should elicit faster response times, and this is exactly what Marcel found.

FIGURE 14.9 Picture-to-word priming paradigm.

(a) During the study, either extended and unmasked (top) or brief and masked (bottom) presentations were used. (b) During the test, participants were asked to complete word stems (kan and bic were the word stems presented in this example). Priming performance was identical between extended and brief presentations. (c) Afterward, participants were asked if they remembered seeing the words as pictures. Here performance differed—participants usually remembered seeing the extended presentations but regularly denied having seen the brief presentations.

Since then, investigations of conscious and unconscious processing of pictures and words have been combined successfully into a single cross-form priming paradigm. This paradigm involves presenting pictures for study and word stems for the test (Figure 14.9). Using both extended and brief periods of presentation, the investigators showed that such picture-to-word priming can occur with or without awareness. The brief presentation time was set psychophysically at the identification threshold, and a pattern mask was used to halt conscious processing. Apparently not all processing was halted, however, because priming occurred equally well under both conditions. Given that participants denied seeing the briefly presented stimuli, unconscious processing of the pictures must have enabled them to complete the word stems. In other words, they were extracting conceptual information from the pictures, even without consciously seeing them. How often does this happen in everyday life? Considering the complexity of the visual world, and how rapidly our eyes look around, briefly fixating from object to object (about 100–200 ms), this situation probably happens quite often! These data further underscore the need to consider both conscious and unconscious processes when developing a theory of consciousness.

Gaining Access to Consciousness

As cognitive neuroscientists make further attempts to understand the links between conscious and unconscious processing, it becomes clear that these phenomena remain elusive. We now know that obtaining evidence of subliminal perception depends on whether subjective or objective criteria set the threshold. When the criteria are subjective (i.e., introspective reports from each participant), priming effects are evident. When criteria are set objectively by requiring a forced choice as to whether a participant saw any visual information, no priming effects are seen. Among other things, these studies point out the gray area between conscious and unconscious. Thresholds clearly vary with the criteria.

Pinker (1997) presented an enticing analysis of how evolutionary pressures gave rise to access-consciousness. The general insight is that information has costs and benefits. He argued that at least three dimensions must be considered: the cost of space to store and process information, the cost of time to process and retrieve it, and the cost of resources (energy in the form of glucose) to process it. The point is that any complex organism is made up of matter, which is subject to the laws of thermodynamics, so there are restrictions on the information it can access. To operate optimally within these constraints, only information relevant to the problem at hand should be allowed into consciousness, which seems to be how the brain is organized.

Access-consciousness has four obvious features that Pinker recounted. First, it is brimming with sensations: the shocking pink sunset, the fragrance of jasmine, the stinging of a stubbed toe. Second, we are able to move information into and out of our awareness and into and out of short-term memory by turning our attentional spotlight on it. Third, this information always comes with salience, some kind of emotional coloring. Finally, there is the “I” that calls the shots on what to do with the information as it comes into the field of awareness.

Jackendoff (1987) argued that for perception, access is limited to the intermediate stages of information processing. Luckily, we do not ponder the elements that go into a percept, only the output. Consider the patient described in Chapter 6, who could not recognize objects but could recognize faces, indicating that his face-processing system was intact. When this patient was shown a picture that arranged pieces of vegetables to look like a face, he immediately said he saw the face but was totally unable to state that the eyes were garlic cloves and the nose a turnip. He had access only to the output of the module.

Concerning attention and its role in access, the work of Anne Treisman (1991) at Princeton University reveals that unconscious parallel processing can go only so far. Treisman proposed a candidate for the border between conscious and unconscious processes. In her famous popout experiments that we discussed in Chapter 7, a participant picks a prespecified object from a field of others. The notion is that each point in the visual field is processed for color, shape, and motion, outside of conscious awareness. The attention system then picks up elements and puts them together with other elements to make the desired percept. Treisman showed, for example, that when we are attending to a point in space and processing the color and form of that location, elements at unattended points seem to be floating. We can tell the color and shape, but we make mistakes about what color goes with what shape. Attention is needed to conjoin the results of the separate unconscious processes. The illusory conjunctions of stimulus features are first-glimpse evidence for how the attentional system combines elements into whole percepts.

We have discussed emotional salience in Chapter 10, and we will get to the “I” process in a bit. Before turning to such musings, let’s consider an often overlooked aspect of consciousness: the ability to move from conscious, controlled processing to unconscious, automatic processing. Such “movement” from conscious to unconscious is necessary when we are learning complex motor tasks such as riding a bike or driving a car, as well as for complex cognitive tasks such as verb generation and reading.

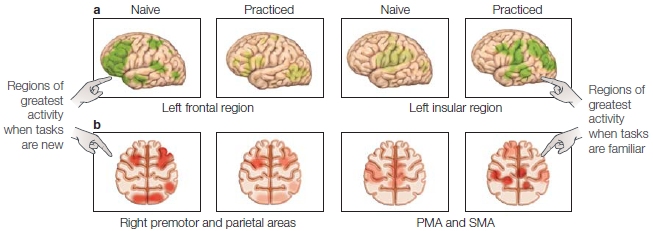

At Washington University in St. Louis, Marcus Raichle and Steven Petersen, two pioneers in the brain imaging field, proposed a “scaffolding to storage” framework to account for this movement (Petersen et al., 1998). According to their framework, we must initially use conscious processing during practice while developing complex skills (or memories); this activity can be considered the scaffolding process. During this time, the memory is being consolidated, or the skill is being developed and honed. Once the task is learned, brain activity and brain involvement change. This change can be likened to the removal of the scaffolding: Support structures disengage, and more permanent structures take over as the tasks are “stored” for use.

Petersen and Raichle demonstrated this scaffolding to storage movement in the awake, behaving human brain. Using PET, they had participants perform either a verb generation task, which was compared with simply reading verbs, or a maze-tracing task, which was compared with tracing a square. They clearly demonstrated that early, unlearned, conscious processing uses a very different network of brain regions than does later, learned, unconscious processing (Figure 14.10). They hypothesized that during learning, a scaffolding set of regions is used to handle novel task demands. Following learning, a different set of regions is involved, perhaps regions specific to the storage or representation of the particular skill or memory. Further, once this movement from conscious to unconscious has occurred (once the scaffolding is removed), it is sometimes difficult to reinitiate conscious processing. A classic example is learning to drive with a clutch. Early on, you have to consciously practice the steps of releasing the gas pedal while depressing the clutch, moving the shift lever, and slowly releasing the clutch while applying pressure to the gas pedal again, all without stalling the car. After a few jerky attempts, you know the procedure well: The process has been stored, but it is rather difficult to separate the steps.

Similar processes occur in learning other complex skills. Chris Chabris, a cognitive psychologist at Harvard University, has studied chess players as they progress from the novice to the master level (Chabris & Hamilton, 1992). During lightning chess, masters play many games simultaneously and very fast. They seem to play by intuition as they make move after move, and in essence that is what they are doing, although it is “learned intuition.” They intuitively know, without really knowing how they know, what the next best move is. For novices, such lightning play is not possible. They have to painstakingly examine the pieces and moves one by one (OK, if I move my knight over there, she will take my bishop; no, that won’t work. Let’s see, if I move the rook... no, then she will move her bishop and then I can take her knight... whoops, that will put me in check... hmmm). But after many hours of practice and hard work, as the novices develop into chess masters, they see and react to the chessboard differently. They begin to view and play the board as a series of groups or clumps of pieces and moves, as opposed to separate pieces with serial moves. Chabris’s research has shown that during early stages of learning, the talking, language-based left brain consciously controls the game. With experience, however, as the different moves and possible groupings are learned, the perceptual, feature-based right brain takes over.

FIGURE 14.10 Activated areas of the brain change as tasks are practiced.

Based on positron emission tomography (PET) images, these eight panels show that practicing a task results in a shift in which regions of the brain are most active. (a) When confronted with a new verb generation task, areas in the left frontal region, such as the prefrontal cortex, are activated (green areas in leftmost panel). As the task is practiced, blood flow to these areas decreases (as depicted by the fainter color in the adjacent panel). In contrast, the insula is less active during naïve verb generation. With practice, however, activation in the insula increases, suggesting that with practice, activity in the insula replaces activity previously observed in the frontal regions. (b) An analogous shift in activity is observed elsewhere in the brain during a motor learning maze-tracing task. Activity in the premotor and parietal areas seen early in the maze task (red areas in leftmost panel) subsides with practice (fainter red in the adjacent panel) while increases in blood flow are then seen in the primary and supplementary motor areas as a result of practice.

For example, International Grandmaster and two-time U.S. chess champion Patrick Wolff, who at age 20 defeated world chess champion Garry Kasparov in 25 moves, was given 5 seconds to look at a picture of a chessboard with all the pieces set in a pattern that made chess sense. He was then asked to reproduce it, and he quickly and accurately did so, getting 25 of 27 pieces in the correct position. Even a good player would place only about five pieces correctly. In a different trial, however, with the same board and the same number of pieces, but with the pieces in positions that didn’t make chess sense, he got only a few pieces right, just like a person who doesn’t play chess. Wolff’s accuracy in the first trial came from his right brain automatically matching patterns that it had learned from years of playing chess.

Although neuroscientists may know that Wolff’s right-brain pattern perception mechanism is all coded, runs automatically, and is the source of this capacity, he did not. When he was asked about his ability, his left-brain interpreter struggled for an explanation: “You sort of get it by trying to, to understand what’s going on quickly and of course you chunk things, right?... I mean obviously, these pawns, just, but, but it, I mean, you chunk things in a normal way, like I mean one person might think this is sort of a structure, but actually I would think this is more, all the pawns like this....” When asked, the speaking left brain of the master chess player can assure us that it can explain how the moves are made, but it fails miserably to do so—as often happens when you try, for example, to explain how to use a clutch to someone who doesn’t drive a car with a standard transmission.

The transition from controlled, conscious processing to automatic, unconscious processing is analogous to the implementation of a computer program. Early stages require multiple interactions among many brain processes, including consciousness, as the program is written, tested, and prepared for compilation. Once the process is well under way, the program is compiled, tested, recompiled, retested, and so on. Eventually, as the program begins to run and unconscious processing begins to take over, the scaffolding is removed, and the executable file is uploaded for general use.

This theory seems to imply that once conscious processing has effectively allowed us to move a task to the realm of the unconscious, we no longer need conscious processing. This transition would allow us to perform that task unconsciously and allow our limited conscious processing to turn to another task. We could unconsciously ride our bikes and talk at the same time.

One evolutionary goal of consciousness may be to improve the efficiency of unconscious processing. The ability to relegate learned tasks and memories to unconsciousness allows us to devote our limited conscious resources to recognizing and adapting to changes and novel situations in the environment, thus increasing our chances of survival.

Sentience

Neurologist Antonio Damasio (2011) defines consciousness as a mind state in which the regular flow of mental images (defined as mental patterns in any of the sensory modalities) has been enriched by subjectivity, meaning mental images that represent body states. He suggests that various parts of the body continuously signal the brain and are signaled back by the brain in a perpetual resonant loop. Mental images about the self—that is, the body—are different from other mental images. They are connected to the body, and as such they are “felt.” Because these images are felt, an organism is able to sense that the contents of its thoughts are its own: They are formulated in the perspective of the organism, and the organism can act on those thoughts. This form of self-awareness, however, is not meta self-awareness, or being aware that one is aware of oneself. Sentience does not imply that an organism knows it is sentient.

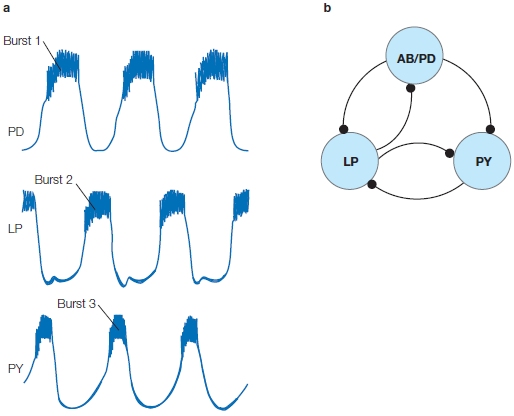

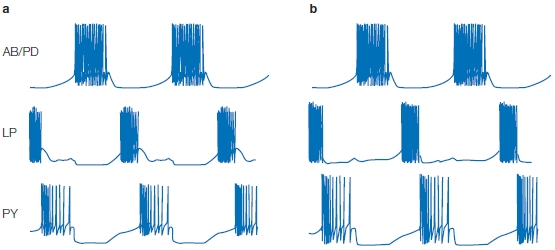

Neurons, Neuronal Groups, and Conscious Experience

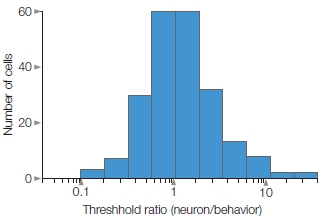

Neuroscientists interested in higher cognitive functions have been extraordinarily innovative in analyzing how the nervous system enables perceptual activities. Recording from single neurons in the visual system, they have tracked the flow of visual information and how it becomes encoded and decoded during a perceptual activity. They have also directly manipulated the information and influenced an animal’s decision processes. One of the leaders in this approach to understanding the mind is William Newsome at Stanford University.

Newsome has studied how neural events in area MT of the monkey cortex, which is actively involved in motion detection, correlate with the actual perceptual event (Newsome et al., 1989). One of his first findings was striking: The animal’s psychophysical capacity to discriminate motion could be predicted from the response pattern of a single neuron (Figure 14.11). In other words, a single neuron in area MT was as sensitive to changes in the visual display as was the monkey.

FIGURE 14.11 Motion discrimination can be predicted by a single-neuron response pattern.

Motion stimuli, with varying levels of coherent motion, were presented to rhesus monkeys trained in a task to discriminate the direction of motion. The monkey’s decision regarding the direction of apparent motion and the responses of 60 single middle temporal visual area (MT) cells (which are selective for direction of motion) were recorded and compared to the stimulus coherence on each trial. On average, individual cells in MT were as sensitive as the entire monkey. In subsequent work, the firing rate of single cells predicted (albeit weakly) the monkey’s choice on a trial-by-trial basis.

This finding stirred the research community because it raised a fundamental question about how the brain does its job. Newsome’s observation challenged the common view that the signal averaging that surely goes on in the nervous system eliminated the noise carried by individual neurons. From this view, the decision-making capacity of pooled neurons should be superior to the sensitivity of single neurons. Yet Newsome did not side with those who believe that a single neuron is the source for any one behavioral act. It is well known that killing a single neuron, or even hundreds of them, will not impair an animal’s ability to perform a task, so a single neuron’s behavior must be redundant.

An even more tantalizing finding, which is of particular interest to the study of conscious experience, is that altering the response rate of these same neurons by careful microstimulation can tilt the animal’s decision on a perceptual task toward the direction of motion that the stimulated neurons prefer. Maximum effects are seen during the interval when the animal is thinking about the task. Newsome and his colleagues (Salzman et al., 1990; Celebrini & Newsome, 1995), in effect, inserted an artificial signal into the monkey’s nervous system and influenced how it thinks.

Based on this discovery, can the site of the microstimulation be considered the place where the decision is made? Researchers are not convinced that this is the way to think about the problem. Instead, it’s believed they have tapped into part of a neural loop involved with this particular perceptual discrimination. They argue that stimulation at different sites in the loop creates different perceptual subjective experiences. For example, let’s say that the stimulus was moving upward and the response was as if the stimulus were moving downward. If this were your brain, you might think you saw downward motion if the stimulation occurred early in the loop. If, however, the stimulation occurred late in the loop and merely found you choosing the downward response instead of the upward one, your sensation would be quite different. Why, you might ask yourself, did I do that?

This question raises the issue of the timing of consciousness. When do we become conscious of our thoughts, intentions, and actions? Do we consciously choose to act, and then consciously initiate an act? Or is an act initiated unconsciously, and only afterward do we consciously think we initiated it?

Benjamin Libet (1996), an eminent neuroscientist-philosopher, researched this question for nearly 35 years. In a groundbreaking and often controversial series of experiments, he investigated the neural time factors in conscious and unconscious processing. These experiments are the basis for his backward referral hypothesis. Libet and colleagues (Libet et al., 1979) concluded that awareness of a neural event is delayed approximately 500 milliseconds after the onset of the stimulating event and, more important, that this awareness is referred back in time to the onset of the stimulating event. To put it another way, you think that you were aware of the stimulus from its onset and are unaware of the time gap. Surprisingly, brain activity related to an action increased as much as 300 ms before the moment at which participants reported consciously intending to act. Using more sophisticated fMRI techniques, John-Dylan Haynes (Soon et al., 2008) showed that the outcome of a decision can be encoded in brain activity up to 10 seconds before it enters awareness.

Fortunately, backward referral of our consciousness is not so delayed that we act without thinking. Enough time elapses between the awareness of the intent to act and the actual beginning of the act that we can override inappropriately triggered behavior. This ability to detect and correct errors is what Libet believes is the basis for free will.

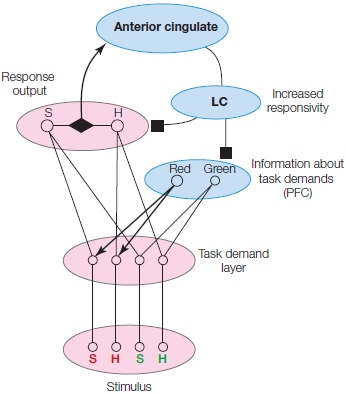

FIGURE 14.12 Model of the conflict monitoring system.

Study participants were presented with two letters (S and/or H), one in red and the other in green. They were cued to respond to the red letter (dark black arrows) and asked whether it was an S or an H. Basic task-related components are in pink, and control-related components are in blue. The anterior cingulate cortex responds to conflict between response units. It then directs the locus coeruleus (labeled LC), which increases the responsivity of multiple processing units (via the squares). Specifically, selective attention is modulated via the prefrontal cortex (PFC), and motor preparation is modulated via the response units. Patients with damage to control-related components, particularly the PFC, have problems in recognizing and correcting their mistakes. The model was suggested by Gehring and Knight (2000).

Whether or not error detection and correction are indeed experimental manifestations of free will, such abilities have been linked to brain regions (Figure 14.12). Not all people can detect and correct errors adequately. In a model piece of brain science linking event-related potentials (ERPs) and patient studies, Robert Knight at the University of California, Berkeley, and William Gehring at the University of Michigan, Ann Arbor, characterized the role of the frontal lobe in checking and correcting errors (Gehring & Knight, 2000). By comparing and contrasting the performance of patients and healthy volunteers on a letter discrimination task, they conclusively demonstrated that the lateral prefrontal cortex was essential for corrective behavior. The task was arranged such that flanking “distracters” often disrupted responses to “targets.” Healthy volunteers showed the expected “corrective” neural activity in the anterior cingulate (see Chapter 12). Patients with lateral prefrontal damage also showed the corrective activity for errors. The patients, however, also showed the same sort of “corrective” activity for non-errors; that is, patients could not distinguish between errors and correct responses. It seems that patients with lateral prefrontal damage no longer have the ability to monitor and integrate their behavior across time. Perhaps they have even lost the ability to learn from their mistakes. It is as if they are trapped in the moment, unable to go back yet unable to decide to go forward. They seem to have lost a wonderful and perhaps uniquely human benefit of consciousness—the ability to escape from the here and now of linear time, or to “time-shift” away.

TAKE-HOME MESSAGES

The Emergence of the Brain Interpreter in the Human Species

The brain’s modular organization has now been well established. The functioning modules do have some kind of physical instantiation, but brain scientists cannot yet specify the exact nature of the neural networks. It is clear that these networks operate mainly outside the realm of awareness, each providing specialized bits of information. Yet, even with the insight that many of our cognitive capacities appear to be automatic domain-specific operations, we feel that we are in control. Despite knowing that these modular systems are beyond our control and fully capable of producing behaviors, mood changes, and cognitive activity, we think we are a unified conscious agent—an “I” with a past, a present, and a future. With all of this apparent independent activity running in parallel, what allows for the sense of conscious unity we possess?

A private narrative appears to take place inside us all the time. It consists partly of the effort to tie together into a coherent whole the diverse activities of thousands of specialized systems that we have inherited through evolution to handle the challenges presented to us each day from both environmental and social situations. Years of research have confirmed that humans have a specialized process to carry out this interpretive synthesis, and, as we discussed in Chapter 4, it is located in the brain’s left hemisphere. This system, called the interpreter, is most likely cortically based and works largely outside of conscious awareness. The interpreter makes sense of all the internal and external information that is bombarding the brain. Asking how one thing relates to another, looking for cause and effect, it offers up hypotheses, makes order out of the chaos of information, and creates a running narrative. The interpreter is the glue that binds together the thousands of bits of information from all over the cortex into a cause-and-effect, “makes sense” narrative: our personal story. It explains why we do the things we do, and why we feel the way we do. Our dispositions, emotional reactions, and past learned behavior are all fodder for the interpreter. If some action, thought, or emotion doesn’t fit in with the rest of the story, the interpreter will rationalize it (I am a really cool, macho guy with tattoos and a Harley and I got a poodle because... ah, um... my great grandmother was French).

The interpreter, however, can use only the information that it receives. For example, a patient with Capgras’ syndrome will recognize a familiar person but will insist that an identical double or an alien has replaced the person and that the patient is looking at an imposter. In this syndrome, it appears that the emotional feelings for the familiar person are disconnected from the representation of that person. A patient will be looking at her husband, but she feels no emotion when she sees him. The interpreter has to explain this phenomenon. It is receiving the information from the face identification module (“That’s Jack, my husband”), but it is not receiving any emotional information. The interpreter, seeking cause and effect, comes up with a solution: “It must not really be Jack, because if it really were Jack I’d feel some emotion, so he is an imposter!”

The interpreter is a system of primary importance to the human brain. Interpreting the cause and effect of both internal and external events enables the formation of beliefs, which are mental constructs that free us from simply responding to stimulus–response aspects of everyday life. When a stimulus, such as a pork roast, is placed in front of your dog, he will scarf it down. When you are faced with such a stimulus, however, even if you are hungry you may not partake if you believe that it is unhealthy, or that you should not eat animal products, or if your religious beliefs forbid it. Your behavior can hinge on a belief.

Looking at the past decades of split-brain research, we find one unalterable fact. Disconnecting the two cerebral hemispheres, an event that finds one half of the cortex no longer interacting in a direct way with the other half, does not typically disrupt the cognitive-verbal intelligence of these patients. The left dominant hemisphere remains the major force in their conscious experience and that force is sustained, it would appear, not by the whole cortex but by specialized circuits within the left hemisphere. In short, the inordinately large human brain does not render its unique contributions simply by being a bigger brain, but by the accumulation of specialized circuits.

We now understand that the brain is a constellation of specialized circuits. We know that beyond early childhood, our sense of being conscious never changes. We know that when we lose function in particular areas of our cortex, we lose awareness of what that area processes. Consciousness is not another system but a felt awareness of the products of processing in various parts of the brain. It reflects the affective component of specialized systems that have evolved to enable human cognitive processes. With an inferential system in place, we have a system that empowers all sorts of mental activity.

Left- and Right-Hemisphere Consciousness

Because of the processing differences between the hemispheres, the quality of consciousness emanating from each hemisphere might be expected to differ radically. Although left-hemisphere consciousness would reflect what we mean by normal conscious experience, right-hemisphere consciousness would vary as a function of the specialized circuits that the right half of our brain possesses. Mind Left, with its complex cognitive machinery, can distinguish between sorrow and pity and appreciate the feelings associated with each state. Mind Right does not have the cognitive apparatus for such distinctions and consequently has a narrower state of awareness. Consider the following examples of reduced capacity in the right hemisphere and the implications they have for consciousness.

Split-brain patients without right-hemisphere language have a limited capacity for responding to patterned stimuli. The capacity ranges from none whatsoever to the ability to make simple matching judgments above the level of chance. Patients with the capacity to make perceptual judgments not involving language were unable to make a simple same–different judgment within the right brain when both the sample and the match were lateralized simultaneously. Thus, when a judgment of sameness was required for two simultaneously presented figures, the right hemisphere failed.

This minimal profile of capacity stands in marked contrast to that of patients with right-hemisphere language. One patient, J.W., who after his surgery initially was unable to access speech from the right hemisphere, years later developed the ability to understand language and had a rich right-hemisphere lexicon (as assessed by the Peabody Picture Vocabulary Test and other special tests). Patients V.P. and P.S. could understand language and speak from each half of the brain. Would this extra skill give the right hemisphere greater ability to think, to interpret the events of the world?

It turns out that the right hemispheres of both patient groups (those with and without right-hemisphere language) are poor at making simple inferences. When shown two pictures, one after the other (e.g., a picture of a match and a picture of a woodpile), the patient (or the right hemisphere) cannot combine the two elements into a causal relation and choose the proper result (i.e., a picture of a burning woodpile as opposed to a picture of a woodpile and a set of matches). In other testing, simple words are presented serially to the right side of the brain. The task is to infer the causal relation between the two lexical elements and choose the correct answer from six possibilities displayed in full view of the participant. A typical trial consists of words like pin and finger being flashed to the right hemisphere, and the correct answer is bleed. Even though the patient (right hemisphere) can always find a close lexical associate of the words used, he cannot make the inference that pin and finger should lead to bleed.

In this light, it is hard to imagine that the left and right hemispheres have similar conscious experiences. The right cannot make inferences, so it has limited awareness. It deals mainly with raw experience in an unembellished way. The left hemisphere, though, is constantly—almost reflexively—labeling experiences, making inferences as to cause, and carrying out a host of other cognitive activities. The left hemisphere is busy differentiating the world, whereas the right is simply monitoring it.

Is Consciousness a Uniquely Human Experience?

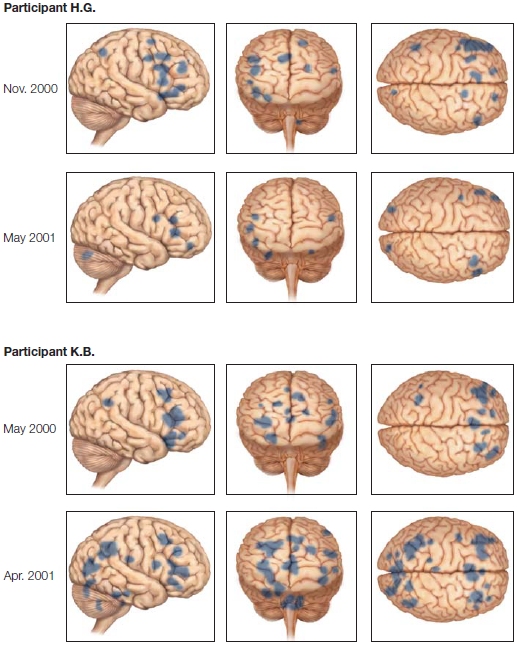

Humans, chimpanzees, and bonobos have a common ancestor, so it is reasonable to assume that we share many perceptual, behavioral, and cognitive skills. If our conscious state has evolved as a product of our brain’s biology, is it possible that our closest relatives also possess this mental attribute, or at least an earlier form of it?

One way to tackle the question of nonhuman primate consciousness would be to compare different species’ brains to those of humans. Comparing the species on a neurological basis has proven to be difficult, though it has been shown that the human prefrontal cortex is much larger in area than that of other primates. Another approach, instead of comparing pure biological elements, is to focus on the behavioral manifestation of the brain in nonhuman primates. This approach parallels that of developmental psychologists, who study the development of self-awareness and theory of mind (see Chapter 13) in children. It draws on the idea that children develop abilities that outwardly indicate conscious awareness of themselves and their environment.

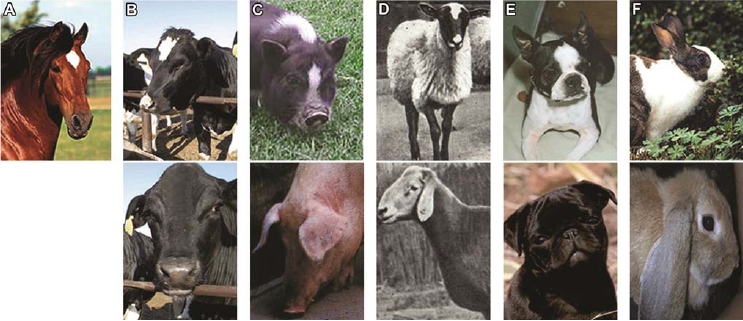

FIGURE 14.13 Evidence for self-awareness in chimpanzees.

When initially presented with a mirror, chimpanzees react to it as if they are confronting another animal. After 5 to 30 minutes, however, chimpanzees will engage in self-exploratory behaviors, indicating that they know they are indeed viewing themselves.

Designing a test to demonstrate self-awareness in animals has proven difficult. In the past, the problem was approached from two angles: mirror self-recognition (MSR) and imitation. Gordon Gallup (1970) designed the MSR test and proposed that if an animal could recognize itself in a mirror, this ability implies the presence of a self-concept and self-awareness (Gallup, 1982). Only a few members of a few species can pass the test. MSR develops in some chimps (Figure 14.13) around puberty and is present to a lesser degree in older chimps (Povinelli et al., 1993). Orangutans also may show MSR, but only the rare gorilla possesses it (Suarez & Gallup, 1981; Swartz, 1997). Children reliably develop MSR by age 2 (Amsterdam, 1972).

Gallup’s suggestion that mirror self-recognition implies the presence of a self-concept and self-awareness has come under attack. For instance, Robert Mitchell (1997), a psychologist at Eastern Kentucky University, questioned what degree of self-awareness is demonstrated by recognizing oneself in the mirror. He points out that MSR requires only an awareness of the body, rather than any abstract concept of self. There is no need to invoke anything more than the matching of sensation to visual perception; people do not require attitudes, values, intentions, emotion, or episodic memory to recognize their body in the mirror. Another problem with the MSR test is that some patients with prosopagnosia, although they have a sense of self, are unable to recognize themselves in a mirror; they think they are seeing someone else. So although the MSR test can indicate a degree of self-awareness, it is of limited value in evaluating just how self-aware an animal is. It does not answer the question of whether an animal is aware only of its visible self or also of unobservable features.

Imitation provides another approach. If we can imitate another’s actions, then we are capable of distinguishing between our own actions and the other person’s. The ability to imitate is used as evidence for self-recognition in developmental studies of children. Despite extensive searching, scant evidence has been found that other animals imitate. Most of the evidence in primates points to the ability to reproduce the result of an action, not to imitate the action itself (Tennie et al., 2006, 2010).

Another avenue has been an extensive search for evidence of theory of mind. In 2008, Josep Call and Michael Tomasello from the Max Planck Institute for Evolutionary Anthropology reviewed the 30 years of research since Premack and Woodruff first asked whether chimpanzees have a theory of mind. Call and Tomasello concluded:

There is solid evidence from several different experimental paradigms that chimpanzees understand the goals and intentions of others, as well as the perception and knowledge of others. Nevertheless, despite several seemingly valid attempts, there is currently no evidence that chimpanzees understand false beliefs. Our conclusion for the moment is, thus, that chimpanzees understand others in terms of a perception–goal psychology, as opposed to a full-fledged, human-like belief–desire psychology.

What chimpanzees do not do is share intentionality (such as their beliefs and desires) with others, perhaps as a result of their different theory-of-mind capacity. Children, on the other hand, do so from about 18 months of age (for a review, see Tomasello, 2005). Tomasello and Malinda Carpenter suggest that this ability to share intentionality is singularly important in children’s early cognitive development and is at the root of human cooperation. Chimpanzees can follow someone else’s gaze, deceive, engage in group activities, and learn from observing, but they do it all on an individual, competitive basis. Shared intentionality in children transforms gaze following into joint attention, social manipulation into cooperative communication, group activity into collaboration, and social learning into instructed learning.

That chimpanzees do not have the same conscious abilities makes perfect sense. They evolved under different conditions from those of the hominid line. They have always called the tropical forest home and have not had to adapt to many changes. Because they have changed very little since their lineage diverged from the common ancestor shared with humans, they are known as a conservative species. In contrast, many species have come and gone along the hominid lineage between Homo sapiens and the common ancestor. The human ancestors that left the tropical forest had to deal with very different environments when they migrated to woodlands, savanna, and beyond. Faced with adapting to radically different environments and social situations, they, unlike the chimpanzee lineage, underwent many evolutionary changes, one of which may well be shared intentionality.


Abandoning the Concept of Free Will

Even after some visual illusions have been explained to us, we still see them (an example is Roger Shepard’s “Turning the Tables” illusion; see http://www.michaelbach.de/ot/sze_usshepardTables/index.html). Why does this happen? Our visual system contains hardwired adaptations that under standard viewing conditions allow us to view the world accurately. Knowing that we can tweak the interpretation of the visual scene by some artificial manipulations does not prevent our brain from manufacturing the illusion. It happens automatically. The same holds true for the human interpreter. Using its capacity for seeking cause and effect, the interpreter provides the narrative, which creates the illusion of a unified self and, with it, the sense that we have agency and “freely” make decisions about our actions. The illusion of a unified self calling the shots is so powerful that, just as with some visual illusions, no amount of analysis will change the feeling that we are in control, acting willfully and with purpose. Does what we have learned about the deterministic brain mechanisms that control our cognition undermine the concept of a self, freely willing actions? At the personal psychological level, we do not believe that we are pawns in the brain’s chess game. Are we? Are our cherished concepts of free will and personal responsibility an illusion, a sham?

One goal of this section is to challenge the concept of free will while leaving the concept of personal responsibility intact. The idea is that a mechanistic concept of how the mind works eliminates the need for the concept of free will. Responsibility, in contrast, is a property of human social interactions, not a process found in the brain. Thus, no matter how mechanistic and deterministic our views of brain function become, the idea of personal responsibility will remain intact. In what follows, we view brain–mind interactions as a multilayered system (see Doyle & Csete, 2011) plunked down in another layer, the social world. The laws of the higher social layer, which include personal responsibility, constrain the lower layer (people) of which the social layer is made (Gazzaniga, 2013).

Another goal is to suggest that mental states emerge from stimulus-driven (bottom-up) neural activity that is constrained by goal-directed (top-down) neural activity. That is, a belief can constrain behavior. This view challenges the traditional idea that brain activity precedes conscious thought and that brain-generated beliefs do not constrain brain activity. The concept of bidirectional causation makes it clear that, to understand the nature of brain-enabled conscious experience, we must decode and understand the interactions among the hierarchical levels (layers) of the brain (Mesulam, 1998). These interactions are both anatomical (e.g., molecules, genes, cells, ensembles, mini-columns, columns, areas, lobes) and functional (e.g., unimodal, multimodal, and transmodal mental processing). Each brain layer animates the other, just as software animates hardware and vice versa. Phenomenal awareness, our feeling of free will, arises at the point of interaction between the layers, not in the staging areas within a single layer. The freedom represented in a choice not to eat a jelly doughnut comes from an interaction between a belief at the mental layer (about health and weight) and the reward systems for calorie-laden food at the neuronal layer. In the battle to initiate an action, the stimulus-driven pull sometimes loses out to a goal-directed belief: The belief at the mental layer can trump the pull of the doughnut’s yummy taste. Yet the top layer was engendered by the bottom layer and does not function alone or without it.

If this concept is correct, then we are not living after the fact; we are living in real time. And there’s more: This view also implies that everything our mechanistic brain generates and changes (such as hypotheses, beliefs, and so forth) as we go about our business can influence later actions. Thus, what we call freedom is actually the gaining of more options that our mechanistic brain can choose from as we relentlessly explore our environment. Taken together, these ideas suggest that the concept of personal responsibility remains intact, and that brain-generated beliefs add further richness to our lives. They can free us from the sense of inevitability that comes with a deterministic view of the world (Gazzaniga, 2013).

Philosophical discussions about free will have gone on at least since the days of ancient Greece. Those philosophers, however, were handicapped by a lack of empirical information about how the brain functions. Today, we have a huge informational advantage over our predecessors that arguably makes past discussions obsolete. In the rest of the chapter, we examine the issues of determinism, free will, and responsibility in light of this modern knowledge.

Determinism and Physics