
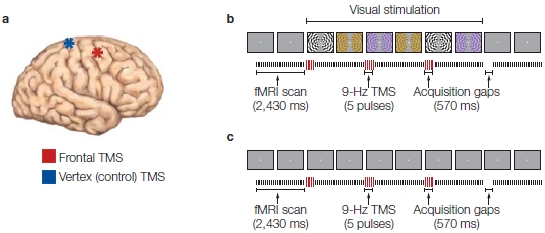

If everything seems under control, you’re just not going fast enough.

~ Mario Andretti


Chapter 12

Cognitive Control

OUTLINE

What Is Cognitive Control?

The Anatomy Behind Cognitive Control

Cognitive Control Deficits

Goal-Oriented Behavior

Decision Making

Goal Planning

Goal-Based Cognitive Control

Ensuring That Goal-Oriented Behaviors Succeed

THE ATTENDING PHYSICIAN in the neurology clinic had dealt with a lot of bizarre complaints during his 20 years of practice, but he was about to hear something new. W.R., a well-dressed man accompanied by his brother, complained to the neurologist that “he had lost his ego” (Knight & Grabowecky, 1995).

W.R. had been a focused kid, deciding while still a teenager that he wanted to become a lawyer. He remained focused on this goal in college, building up a nice GPA and résumé. His life was well balanced: He found time for tennis, parties, and numerous girlfriends. After graduation, things continued just as planned. W.R. was admitted to the law school of his choice and completed the program with a solid, if not stellar, academic record. After earning his degree, however, his life suddenly seemed to change course. He no longer found himself driven to secure a job with a top law firm. Indeed, 4 years had passed and he still had not taken the bar exam or even looked for a job in the legal profession. Instead, he was an instructor at a tennis club.

His family was extremely disturbed to see the changes in W.R.’s fortunes. After law school, they thought he was experiencing an early midlife crisis that was not atypical of the times. They hoped that he would find satisfaction in his passion for tennis or that he would eventually resume his pursuit of a career in law. Neither had occurred. Indeed, he even gave up playing tennis. His opponents became frustrated because, shortly after commencing a match, W.R. would project an aura of nonchalance, forgetting to keep track of the score or even whose turn it was for service. Unable to support himself financially, he hit up his brother with increasingly frequent requests for “temporary” loans. As time passed, his family found it more and more difficult to tolerate W.R.’s behavior.

It was clear to the neurologist that W.R. was a highly intelligent man. He could clearly recount the many details of his life history, and he was cognizant that something was amiss. He realized that he had become a burden to his family, expressing repeatedly that he wished he could pull things together. He simply could not take the necessary steps, however, to find a job or get a place to live. His brother noted another radical change in W.R. Although he had been sexually active throughout his college years and had even lived with a woman, he had not been on a date for a number of years and seemed to have lost all interest in romantic pursuits. W.R. sheepishly agreed. He had little regard for his own future, for his successes, even for his own happiness. Though aware that his life had drifted off course, he just was not able to make the plans to effect any changes.

If this had been the whole story, the neurologist might have thought that a psychiatrist was a better option to treat a “lost ego.” Four years previously, however, during his senior year in law school, W.R. had suffered a seizure after staying up all night drinking coffee and studying for an exam. An extensive neurological examination done at the time, which included positron emission tomography (PET) and computed tomography (CT) scans, failed to identify the cause of the seizure. The neurologist was suspicious, however, given the claims of a lost ego combined with the patient’s obvious distractibility.

A CT scan that day confirmed the physician’s worst fears. W.R. had an astrocytoma. Not only was the tumor extremely large, but it had followed an unusual course. Traversing along the fibers of the corpus callosum, it had extensively invaded the lateral prefrontal cortex in the left hemisphere and a considerable portion of the right frontal lobe. This tumor had very likely caused the initial seizure, even though it was not detected at the time. Over the previous 4 years, it had slowly spread.

The next day, the neurologist informed W.R. and his brother of the diagnosis. Unfortunately, containment of the tumor was not possible. They could try radiation, but the prognosis was poor: W.R. was unlikely to live more than a year. His brother was devastated, shedding tears upon hearing the news. He had to face the loss of W.R., and he also felt guilty over the frustration he had felt with W.R.’s cavalier lifestyle over the previous 4 years. W.R., on the other hand, remained relatively passive and detached. Though he understood that the tumor was the culprit behind the dramatic life changes he had experienced, he was not angry or upset. Instead, he appeared unconcerned. He understood the seriousness of his condition; but the news, as with so many of his recent life events, failed to evoke a clear response or a resolve to take some action. W.R.’s self-diagnosis seemed to be right on target: He had lost his ego and, with it, the ability to take command of his own life.

Leaving the question of “the self” to Chapter 14, we can discern from W.R.’s actions, or rather inaction, that he had lost the ability to engage in goal-oriented behavior. Although he could handle the daily chores required to groom and feed himself, these actions were performed out of habit, without the context of an overriding goal, such as being prepared and full of energy to tussle in the legal system. He had few plans beyond satisfying his immediate needs, and even these seemed minimal. He could step back and see that things were not going as well for him as others hoped. But on a day-to-day basis, the signals that he was not making progress just seemed to pass him by.

What Is Cognitive Control?

In this chapter, our focus turns to the cognitive processes that permit us to perform more complex aspects of behavior. Cognitive control, or what is sometimes referred to as executive function, allows us to use our perceptions, knowledge, and goals to bias the selection of actions and thoughts from a multitude of possibilities. Cognitive control processes allow us to override automatic thoughts and behavior and step out of the realm of habitual responses. They give us cognitive flexibility, letting us think and act in novel and creative ways. By being able to suppress some thoughts and activate others, we can simulate plans and consider the consequences of those plans. We can plan for the future and troubleshoot problems. Cognitive control is essential for purposeful goal-oriented behavior and decision making.

As we will see, the successful completion of goal-oriented behavior faces many challenges, and cognitive control is necessary to meet them. All of us must develop a plan of action that draws on our personal experiences, yet is tailored to the current environment. Such actions must be flexible and adaptive to accommodate unforeseen changes and events. We must monitor our actions to stay on target and attain that goal. Sometimes we need to constrain our own desires and follow rules to conform to social conventions. We may need to inhibit a habitual response in order to attain a goal. Although you might want to stop at the doughnut store when heading to work in the morning, cognitive control mechanisms can override that sugary urge, allowing you to stop by the café for a healthier breakfast.

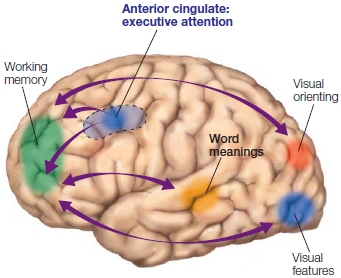

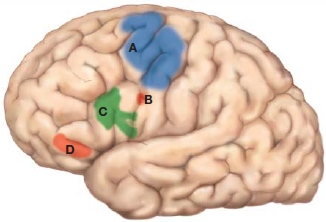

The study of cognitive control brings us to a part of the cerebral cortex that has received little attention in preceding chapters—the prefrontal cortex. In this chapter, we concentrate on two prefrontal control systems (see the Anatomical Orientation box). The first, which includes the lateral prefrontal cortex and frontal pole, supports goal-oriented behavior. This control system works in concert with more posterior regions of the cortex to constitute a working memory system that recruits and selects task-relevant information. This system is involved with planning, simulating consequences, and initiating, inhibiting, and shifting behavior. The second control system, which includes the medial frontal cortex, plays an essential role in guiding and monitoring behavior. It works in tandem with the prefrontal cortex, monitoring ongoing activity to modulate the degree of cognitive control needed to keep behavior in line with goals. Before we get into these functions, we review some anatomy and consider cognitive control deficits that are seen in patients with frontal lobe dysfunction. We then focus on goal-oriented behavior and decision making, two complicated processes that rely on cognitive control mechanisms to work properly.

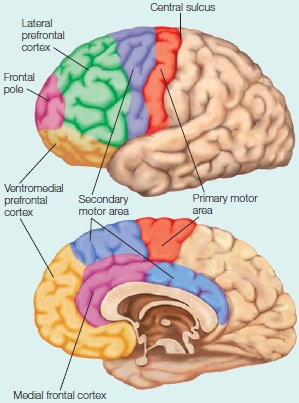

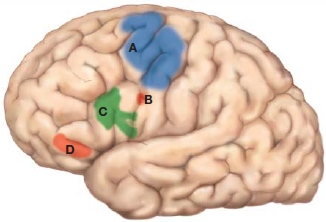

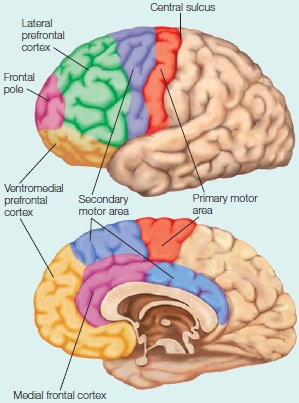

Anatomy of cognitive control

The prefrontal cortex includes all of the areas in front of the primary and secondary motor areas. The four subdivisions of prefrontal cortex are the lateral prefrontal cortex, ventromedial prefrontal cortex, frontal pole, and medial frontal cortex. The most ventral part of the ventromedial prefrontal cortex is frequently referred to as the orbitofrontal cortex, named for its position above the bony orbits of the eyes.

The Anatomy Behind Cognitive Control

As might be suspected of any complex process, cognitive control requires the integrated function of many different parts of the brain. This chapter highlights the frontal lobes, and in particular, prefrontal cortex. The discussion, however, also requires references to other cortical and subcortical areas that are massively interconnected with the frontal cortex, forming the networks that enable goal-oriented behavior. This network includes the parietal lobe and the basal ganglia, regions that were discussed in previous chapters when we considered the neural mechanisms for attention and action selection.

Subdivisions of the Frontal Lobes

The frontal lobes comprise about a third of the cerebral cortex in humans. The posterior border with the parietal lobe is marked by the central sulcus. The frontal and temporal lobes are separated by the lateral fissure.

As we learned in Chapter 8, the most posterior part of the frontal lobe is the primary motor cortex, encompassing the gyrus in front of the central sulcus and extending into the central sulcus itself. Anterior and ventral to the motor cortex are the secondary motor areas, including the lateral premotor cortex and the supplementary motor area. The remainder of the frontal lobe is termed the prefrontal cortex (PFC). In humans, the prefrontal cortex makes up half of the entire frontal lobe; the proportion is considerably smaller in non-primate species (Figure 12.1). We will refer to four regions of prefrontal cortex in this chapter: the lateral prefrontal cortex (LPFC), the frontal polar region (FP), the orbitofrontal cortex (OFC, sometimes referred to as the ventromedial zone), and the medial frontal cortex (MFC).

The frontal cortex is present in all mammalian species. In human evolution, however, it has expanded tremendously, especially in the more anterior aspects of prefrontal cortex. Interestingly, when compared to other primate species, the expansion of prefrontal cortex in the human brain is more pronounced in the white matter (the axonal tracts) than in the gray matter (the cell bodies; Schoenemann et al., 2005). This finding suggests that the cognitive capabilities that are uniquely human may be more a result of how our brains are connected rather than due to an increase in the number of neurons.

Because the development of functional capabilities parallels phylogenetic trends, the frontal lobe’s expansion is related to the emergence of the complex cognitive capabilities that are especially pronounced in humans. What’s more, as investigators frequently note, “Ontogeny recapitulates phylogeny.” Compared to the rest of the brain, prefrontal cortex matures late in terms of the development of neural density patterns and white matter tracts. Correspondingly, cognitive control processes appear relatively late in development, as evident in the “me-oriented” behavior of the infant and the rebellious teenager.

Networks Underlying Cognitive Control

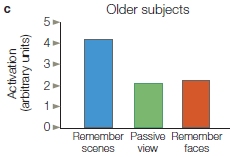

FIGURE 12.1 A comparison of prefrontal cortex in different species.

The purple region indicates prefrontal cortex in six mammalian species. Although the brains are not drawn to scale, the figure makes clear that the PFC spans a much larger percentage of the overall cortex in the chimpanzee and human.

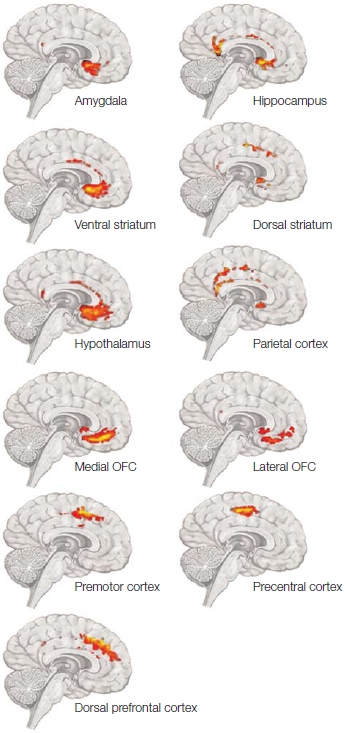

The prefrontal cortex coordinates processing across wide regions of the central nervous system (CNS). It contains a massively connected network that links the brain’s motor, perceptual, and limbic regions (Goldman-Rakic, 1995; Passingham, 1993). Extensive projections connect the prefrontal cortex to almost all regions of the parietal and temporal cortex, and even prestriate regions of the occipital cortex. The largest input comes from the thalamus, which connects the prefrontal cortex with subcortical structures including the basal ganglia, cerebellum, and various brainstem nuclei. Indeed, almost all cortical and subcortical areas influence the prefrontal cortex either through direct projections or indirectly via a few synapses. The prefrontal cortex also sends reciprocal connections to most areas that project to it, and to premotor and motor areas. It also has many projections to the contralateral hemisphere—projections to homologous prefrontal areas via the corpus callosum as well as bilateral projections to premotor and subcortical regions.

When we arrive at the discussion on decision making, which plays a prominent role in this chapter, we consider a finer-grained analysis of the dopamine system. This system includes the ventral tegmental area, a brainstem nucleus, the basal ganglia, and the dorsal and ventral striata (singular: striatum).

Cognitive Control Deficits

Patients with frontal lobe lesions like W.R., the wayward lawyer, present a paradox. From a superficial look at their everyday behavior, it is frequently difficult to detect a neurological disorder. They seem fine: They do not display obvious disorders in any of their perceptual abilities, they can execute motor actions, and their speech is fluent and coherent. These patients are unimpaired on conventional neuropsychological tests of intelligence and knowledge. They generally score within the normal range on IQ tests. Their memory for previously learned facts is fine, and they do well on most tests of long-term memory. With more sensitive and specific tests, however, it becomes clear that frontal lesions can disrupt different aspects of normal cognition and memory, producing an array of problems. Such patients may persist in a response even after being told that it is incorrect; this behavior is known as perseveration. These patients may be apathetic, distractible, or impulsive. They may be unable to make decisions, unable to plan actions, unable to understand the consequences of their actions, impaired in their ability to organize and segregate the timing of events in memory, unable to remember the source of their memories, and unable to follow rules, including a disregard of social conventions (discussed in the next chapter). The fact that the deficits seem to vary with the location of the patient’s lesion suggests that the neural substrates within the prefrontal cortex subserve different processes. As we’ll see, those processes are involved with cognitive control.

Ironically, patients with frontal lobe lesions are aware of their deteriorating social situation, have the intellectual capabilities to generate ideas that may alleviate their problems, and may be able to tell you the pros and cons of each idea. Yet their efforts to prioritize and organize these ideas into a plan and put them into play are haphazard at best. Similarly, they are not amnesic: They can recite a list of rules from memory but may be unable to follow them.

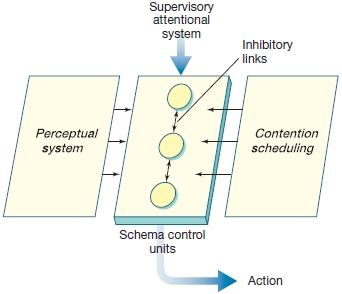

A demonstration of how these problems are manifest in everyday behavior was given by Tim Shallice (Shallice & Burgess, 1991). He asked three patients with frontal lesions from head trauma to go to the local shopping center to make a few purchases (e.g., a loaf of bread), keep an appointment, or collect information such as the exchange rate of the rupee. These chores presented a real problem for the patients. For instance, one patient failed to purchase soap because the store she visited did not carry her favorite brand; another wandered outside the designated shopping center in pursuit of an item that could easily be found within the designated region. All became embroiled in social complications. One succeeded in obtaining the newspaper but was pursued by the merchant for failing to pay! In a related experiment, patients were asked to work on three tasks for 15 minutes. Whereas control participants successfully juggled their schedule to ensure that they made enough progress on each task, the patients got bogged down on one or two tasks.

Lesion studies in animals have revealed a similar paradox. Unilateral lesions of prefrontal cortex also tend to produce relatively mild deficits. When the lesions are bilateral, however, dramatic changes can be observed. Consider the observations of Leonardo Bianchi (1922), an Italian psychiatrist of the early 20th century:

The monkey which used to jump on to the window-ledge, to call out to his companions, after the operation jumps to the ledge again, but does not call out. The sight of the window determines the reflex of the jump, but the purpose is now lacking, for it is no longer represented in the focal point of consciousness. ... Another monkey sees the handle of the door and grasps it, but the mental process stops at the sight of the bright colour of the handle. The animal does not attempt to turn it so as to open the door.... Evidently there are lacking all those other images that are necessary for the determination of a series of movements coordinated towards one end.

As with W.R., the monkeys demonstrate a loss of goal-oriented behavior.

The behavior of these monkeys underscores an important aspect of goal-oriented behavior. Following the lesions, the behavior is stimulus driven. The animal sees the ledge and jumps up; another sees the door and grasps the handle, but that is the end of it. They no longer appear to have a purpose for their actions. The sight of the door is no longer a sufficient cue to remind the animal of the food and other animals that can be found beyond it. The question is, what is the deficit? Is it a problem with motivation, attention, memory, or something else? Insightfully, Bianchi thought it was a problem with lack of representation in the “focal point of consciousness,” what we now think of as working memory.

A classic demonstration of this tendency for stimulus-driven behavior among humans with frontal lobe injuries is evident from the clinical observations of François Lhermitte of the Hôpital de la Salpêtrière in Paris (Lhermitte, 1983; Lhermitte et al., 1986). Lhermitte invited a patient to meet him in his office. At the entrance to the room, he had placed a hammer, a nail, and a picture. Upon entering the room and seeing these objects, the patient spontaneously used the hammer and nail to hang the picture on the wall. In a more extreme example, Lhermitte put a hypodermic needle on his desk, dropped his trousers, and turned his back to his patient. Whereas most people in this situation would consider filing ethical charges, the frontal lobe patient was unfazed. He simply picked up the needle and gave his doctor a healthy jab in the buttocks! Lhermitte coined the term utilization behavior to characterize this extreme dependency on prototypical responses for guiding behavior. The patients with frontal lobe damage retained knowledge about prototypical uses of objects such as a hammer or needle, saw the stimulus, and responded. They were not able to inhibit their response or flexibly change it according to the context in which they found themselves. Their cognitive control mechanisms were out of whack.

TAKE-HOME MESSAGES

- Cognitive control refers to mental abilities that involve planning, controlling, and regulating the flow of information processing.

- Prefrontal cortex includes four major components: lateral prefrontal cortex, frontal pole, medial frontal cortex, and ventromedial prefrontal cortex. All are associated with cognitive control.

- The ability to make goal-directed decisions is impaired in patients with frontal cortex lesions, even if their general intellectual capabilities remain unaffected.

Goal-Oriented Behavior

Our actions are not aimless, nor are they entirely automatic—dictated by events and stimuli immediately at hand. We choose to act because we want to accomplish a goal or gratify a personal need.

Researchers distinguish between two fundamental types of actions. Goal-oriented actions are based on the assessment of an expected reward or value and the knowledge that there is a causal relationship between the action and the reward (action–outcome). Most of our actions are of this type. We turn on the radio when getting into the car so that we can catch the news on the drive home. We put money into the soda machine to purchase a favorite beverage. We resist going out to the movies the night before an exam to get in some extra studying, with the hope that this effort will lead to the desired grade.

In contrast to goal-oriented actions stand habitual actions. A habit is defined as an action that is no longer under the control of a reward, but is stimulus driven; as such, we can consider it automatic. The habitual commuter might find herself flipping on the car radio without even thinking about the expected outcome. The action is triggered simply by the context. It becomes obvious that this is a habit when our commuter reaches to switch on the radio, even though she knows it is broken. Habit-driven actions occur in the presence of certain stimuli that trigger the retrieval of well-learned associations. These associations can be useful, allowing us to rapidly select a response (Bunge, 2004), such as stopping quickly at a red light. They can also develop into persistent bad habits, however, such as eating junk food when bored or lighting up a cigarette when anxious. Habitual responses make addictions difficult to break.

The distinction between goal-oriented behavior and habits is graded. Though the current context is likely to dictate our choice of actions and may even be sufficient to trigger a habitual-like response, we are also capable of being flexible. The soda machine might beckon invitingly, but if we are on a health kick, we might walk on past or choose to purchase a bottle of water. These are situations in which cognitive control comes into play.

Cognitive control provides the interface through which goals influence behavior. Goal-oriented behaviors require processes that enable us to maintain our goal, focus on the information that is relevant to achieving that goal, ignore or inhibit irrelevant information, monitor our progress toward the goal, and shift flexibly from one subgoal to another in a coordinated way.

Cognitive Control Requires Working Memory

As we learned in Chapter 9, working memory, a type of short-term memory, is the transient representation of task-relevant information, what Patricia Goldman-Rakic has called the “blackboard of the mind.” These representations may be from the distant past, or they may be closely related to something that is currently in the environment or has been experienced recently. Working memory refers to the temporary maintenance of this information, providing an interface between perception, long-term memory, and action and thus enabling goal-oriented behavior and decision making.

Working memory is critical for animals whose behavior is not exclusively stimulus driven. What is immediately in front of us surely influences our behavior, but we are not automatons. We can (usually) hold off eating until all the guests sitting around the table have been served. This capacity demonstrates that we can represent information that is not immediately evident, in this case social rules, in addition to reacting to stimuli that currently dominate our perceptual pathways (the fragrant food and conversation). We can mind our dinner manners (stored knowledge) by choosing to respond to some stimuli (the conversation) while ignoring other stimuli (the food). This process requires integrating current perceptual information with stored knowledge from long-term memory.

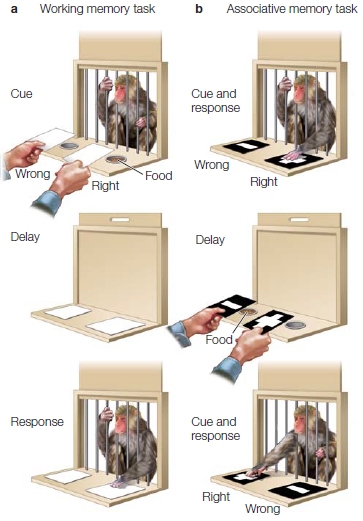

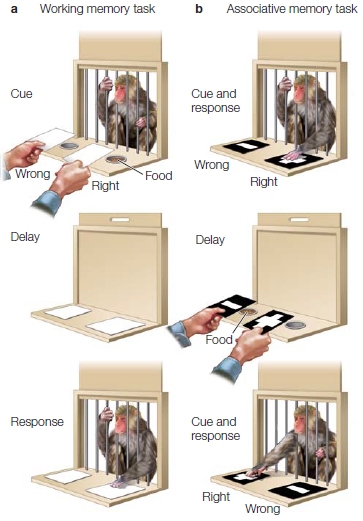

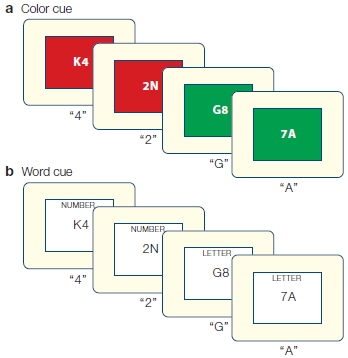

Prefrontal Cortex Is Necessary for Working Memory but Not Associative Memory

The lateral prefrontal cortex appears to be an important interface between current perceptual information and stored knowledge and thus constitutes a major component of the working memory system. Prefrontal cortex is necessary for cognitive control. Its importance in working memory was first demonstrated in animal studies using a variety of delayed-response tasks. In the simplest version, sketched in Figure 12.2, a monkey is situated within reach of two food wells. At the start of a trial, the monkey observes the experimenter placing a food morsel in one of the two wells (perception). Then the two wells are covered, and a curtain is lowered to prevent the monkey from reaching toward either well. After a delay period, the curtain is raised and the monkey is allowed to choose one of the two wells and recover the food. Although this appears to be a simple task, it demands one critical cognitive capability: The animal must continue to represent the location of the unseen food during the delay period (working memory). Monkeys with lesions of the lateral prefrontal cortex do poorly on the task.

The problem for these animals does not reflect a general deficit in forming associations. In an experiment to test associative memory, the food wells are covered with distinctive visual cues: The well with the food has a plus sign, and the empty well has a minus sign. In this condition, the researcher may shift the food morsel’s location during the delay period, but the associated visual cue, the food cover, is relocated along with the food. Prefrontal lesions do not disrupt performance in this task.

These two tasks clarify the concept of working memory (Goldman-Rakic, 1992). In the delayed-response task (see Figure 12.2a), the animal must remember the currently baited location during the delay period. In contrast, in the associative learning condition (see Figure 12.2b), it is only necessary for the visual cue to reactivate a long-term association of which cue is associated with the reward. The reappearance of the two visual cues can trigger recall and guide the animal’s performance.
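The logic separating the two tasks can be made concrete with a toy simulation. This is a minimal sketch under simplifying assumptions of our own, not a model from the studies cited: each trial has two wells, the bait (or the plus-sign cover) is placed at random, and a hypothetical agent either can or cannot hold the baited location across the delay, and either does or does not know which cue predicts reward. The function names and parameters are illustrative only.

```python
import random
from typing import Callable

SIDES = ("left", "right")


def delayed_response_trial(rng: random.Random, maintains_location: bool) -> bool:
    """One trial of the working memory task (Figure 12.2a)."""
    baited = rng.choice(SIDES)  # experimenter baits one well at random
    # Delay: wells covered and curtain lowered, so no external cue marks the food.
    if maintains_location:
        choice = baited  # location held in working memory across the delay
    else:
        choice = rng.choice(SIDES)  # nothing to go on, so the agent guesses
    return choice == baited


def associative_trial(rng: random.Random, knows_rewarded_cue: bool) -> bool:
    """One trial of the associative memory task (Figure 12.2b)."""
    plus_side = rng.choice(SIDES)  # the '+' cover and the food move together at random
    # At response time the covers are visible, so a stored cue-reward link suffices.
    if knows_rewarded_cue:
        choice = plus_side
    else:
        choice = rng.choice(SIDES)
    return choice == plus_side


def accuracy(trial: Callable[[random.Random, bool], bool], ability: bool,
             n_trials: int = 10_000, seed: int = 0) -> float:
    """Proportion of correct choices over many simulated trials."""
    rng = random.Random(seed)
    return sum(trial(rng, ability) for _ in range(n_trials)) / n_trials


if __name__ == "__main__":
    # Hypothetical "lesioned" agent: long-term associations intact, no maintenance.
    print("delayed response:", accuracy(delayed_response_trial, False))  # near 0.5
    print("associative:", accuracy(associative_trial, True))  # 1.0
```

An agent that lacks only the ability to maintain the baited location falls to chance on the delayed-response task yet stays perfect on the associative task, mirroring the dissociation described above.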

Studies of patients with prefrontal lesions have also emphasized the role of this region in working memory. One example comes from studies of recency memory, the ability to organize and segregate the timing or order of events in memory (Milner, 1995). In a recency discrimination task, participants are presented with a series of study cards and every so often are asked which of two pictures was seen more recently. For example, one of the pictures might have been on a study card presented 4 trials previously, and the other, on a study card shown 32 trials back. For a control task, the procedure is modified: The test card contains two pictures, but only one of the two pictures was presented earlier. Following the same instructions, the participant should choose that picture because, by definition, it is the one seen more recently. Note, though, that the task is really one of recognition memory. There is no need to evaluate the temporal position of the two choices.

Patients with frontal lobe lesions perform as well as control participants on the recognition memory task, but they have a selective deficit in recency judgments. The recognition task can be performed by evaluating whether one of the stimuli was recently presented or, perhaps more relevant, whether one of the stimuli is novel. The recency task, though, requires working memory in the sense that the patient must also keep track of the relationship between recently presented stimuli. This is not to suggest that the person could construct a full timeline of all of the stimuli; that would certainly exceed the capacity of working memory. But to compare the relative timing of two items, the frontal lobes are required to maintain the representations of those items at the time of the probe. When the frontal lobes are damaged, this temporal structure is lost.
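The difference between the two test conditions can be sketched in code. In this illustrative sketch (the function names and the `pic0`…`pic39` labels are hypothetical), the control task is answered from an unordered set of studied items, which carries no temporal information, whereas the recency task needs some record of when each item appeared. For simplicity the sketch stores every item's last position; as noted above, a real participant need only maintain the relative order of the two probed items.

```python
from typing import Dict, Optional, Sequence


def last_seen(study: Sequence[str]) -> Dict[str, int]:
    """Map each studied picture to the index of the study card where it last appeared."""
    return {item: idx for idx, item in enumerate(study)}


def recognition_choice(study: Sequence[str], a: str, b: str) -> Optional[str]:
    """Control task: only one probe was studied, so 'seen before?' is enough."""
    seen = set(study)  # an unordered store: no temporal information at all
    if (a in seen) == (b in seen):
        return None  # familiarity alone cannot decide when both (or neither) were studied
    return a if a in seen else b


def recency_choice(study: Sequence[str], a: str, b: str) -> str:
    """Recency task: both probes were studied, so their relative order is needed."""
    order = last_seen(study)  # an ordered store: keeps when each item was seen
    return a if order[a] > order[b] else b


if __name__ == "__main__":
    study = [f"pic{i}" for i in range(40)]  # 40 study cards, then the test card
    # At test, pic36 was 4 cards back and pic8 was 32 cards back.
    print(recency_choice(study, "pic8", "pic36"))        # pic36
    print(recognition_choice(study, "new_pic", "pic8"))  # pic8
    print(recognition_choice(study, "pic8", "pic36"))    # None
```

The last call shows why the two tasks dissociate: a familiarity-only store answers the control question but returns no answer when both pictures are familiar.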

FIGURE 12.2 Prefrontal lesions impair working memory performance.

(a) In the working memory task, the monkey observes one well being baited with food. After a delay period, the animal retrieves the food. The location of the food is determined randomly. (b) In the associative memory task, the food reward is always associated with one of the two visual cues. The location of the cues (and food) is determined randomly. Working memory is required in the first task because, at the time the animal responds, no external cues indicate the location of the food. Long-term memory is required in the second task because the animal must remember which visual cue is associated with the reward.

A breakdown in the temporal structure of working memory may account for more bizarre aspects of frontal lobe syndrome. For example, Wilder Penfield described a patient who was troubled by her inability to prepare her family’s evening meal. She could remember the ingredients for dishes and perform all of the actions to make the dish, but unless someone was there to tell her the proper sequence step by step, she could not organize her actions into a proper temporal sequence and could not prepare a meal (Jasper, 1995).

Another, albeit indirect, demonstration of the importance of prefrontal cortex in working memory comes from developmental studies. Adele Diamond of the University of Pennsylvania (1990) pointed out that a common marker of conceptual intelligence, Piaget’s Object Permanence Test, is logically similar to the delayed-response task. In this task, a child observes the experimenter hiding a reward in one of two locations. After a delay of a few seconds, the child is encouraged to find the reward. Children younger than 1 year are unable to accomplish this task. At this age, the frontal lobes are still maturing. Diamond maintained that the ability to succeed in tasks such as the Object Permanence Test parallels the development of the frontal lobes. Before this development takes place, the child acts as though the object is “out of sight, out of mind.” As the frontal lobes mature, the child can be guided by representations of objects and no longer requires their presence.

It seems likely that many species must have some ability to recognize object permanence. A species would not have survived for long if its members did not understand that a predator that had stepped behind a particular bush was still there. The difference between species may be in the capacity of the working memory, how long information can be maintained in working memory, and the ability to maintain attention (see the box How the Brain Works: Working Memory, Learning, and Intelligence).

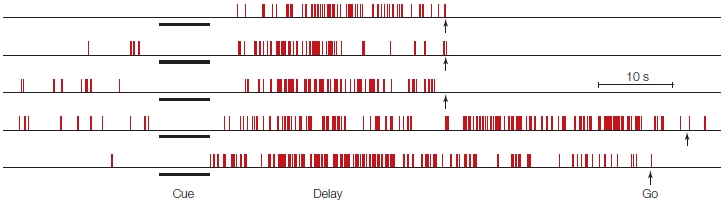

Physiological Correlates of Working Memory

A working memory system requires a mechanism to access stored information and a way to keep it active. The prefrontal cortex can perform both operations. In the delayed-response studies described earlier, single-cell recordings from the prefrontal cortex of monkeys (see Figure 12.3) showed that these neurons become active during the delayed-response task and show sustained activity throughout the delay period (Fuster, 1989). For some cells, activation doesn’t commence until after the delay begins, and it can be maintained for up to 1 minute. These cells provide a neural correlate for keeping a representation active after the triggering stimulus is no longer visible. The cells provide a continuous record of the response required for the animal to obtain the reward.

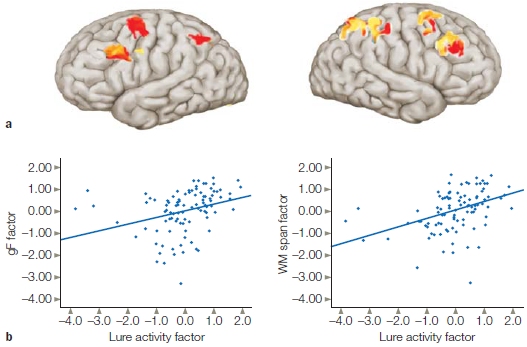

HOW THE BRAIN WORKS

Working Memory, Learning, and Intelligence

Humans are obsessed with identifying why people differ in what we call “intelligence.” We have looked at anatomical measures such as brain size, prefrontal cortex size, amount of grey matter, and amount of white matter (connectivity). These measures have all been shown to account for some of the variation observed on tests of intelligence. Another approach is to consider differences among types of intelligence. For example, we can compare crystallized intelligence and fluid intelligence. Crystallized intelligence refers to our knowledge, things like vocabulary and experience. Fluid intelligence refers to the ability to engage in creative abstract thinking, to recognize patterns, and to solve problems.

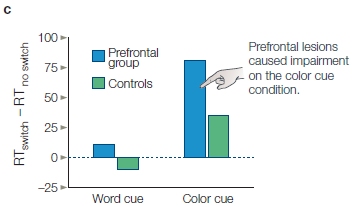

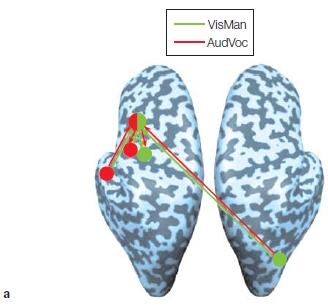

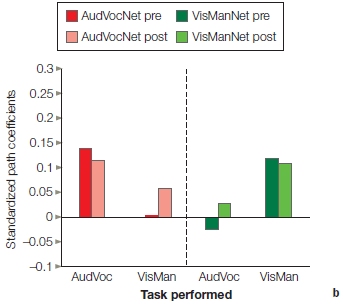

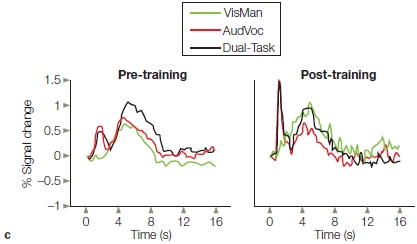

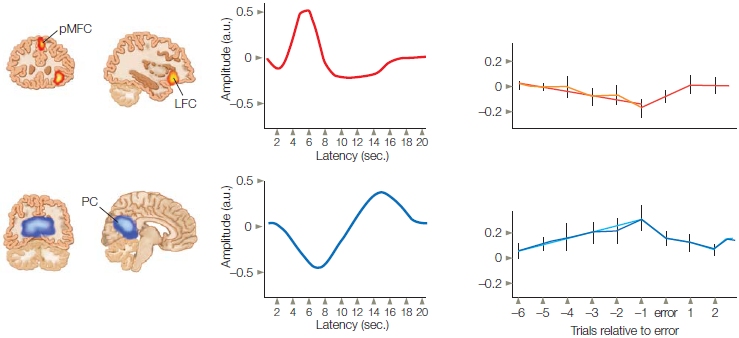

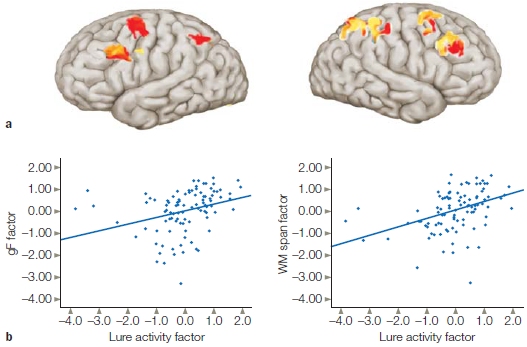

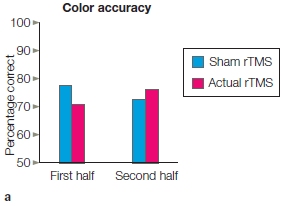

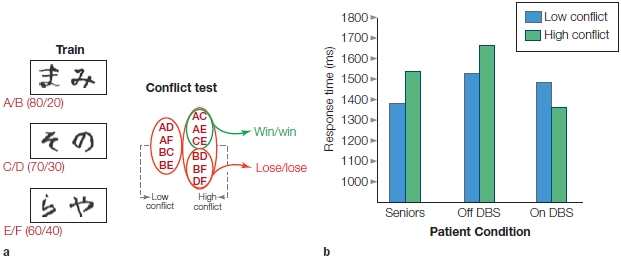

Fluid intelligence is closely linked to working memory. A child’s working memory at 5 years old turns out to be a better predictor of academic success than is IQ (Alloway & Alloway, 2010). Observations like these have inspired research on the neural mechanisms behind differences in fluid intelligence. One study (Burgess et al., 2011) investigated whether the relationship between fluid intelligence and working memory is mediated by interference control, the ability to suppress irrelevant information. The researchers used fMRI while participants performed a classic working memory n-back task. Participants were presented with either word or face stimuli and were to respond when a stimulus matched the one presented three items back. The researchers were curious about the neural response to “lures,” stimuli that had been presented recently but not exactly 3 items back (e.g., 2-back or 4-back). They assumed that participants would tend to respond to the lures and would need to exert interference control to suppress these responses. Indeed, an impressive positive correlation was found between the magnitude of the BOLD response to the lures in PFC (and parietal cortex) and fluid intelligence (Figure 1). The researchers concluded that a key component of fluid intelligence is the ability to maintain focus on task-relevant information in working memory.
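To make the lure logic concrete, here is a minimal sketch of how trials in a 3-back stream could be labeled as targets, lures, or nonlures. It assumes, following the examples in the text, that a lure is a repeat at a lag one item shorter or longer than n (2-back or 4-back when n = 3); the function name, the `window` parameter, and the letter stimuli are hypothetical illustrations, not the scoring procedure of the original study.

```python
from typing import List


def classify_trials(stream: List[str], n: int = 3, window: int = 1) -> List[str]:
    """Label each item in an n-back stream as 'target', 'lure', or 'nonlure'.

    Assumption (hypothetical): a lure repeats an item at a lag within `window`
    of n (e.g., 2-back or 4-back for n = 3) without being an exact n-back match.
    """
    labels = []
    for i, item in enumerate(stream):
        # A target matches the item exactly n positions earlier.
        if i >= n and stream[i - n] == item:
            labels.append("target")
            continue
        # Nearby lags (excluding n itself) define potential lures.
        lure_lags = [lag for lag in range(max(1, n - window), n + window + 1) if lag != n]
        if any(i - lag >= 0 and stream[i - lag] == item for lag in lure_lags):
            labels.append("lure")  # familiar item: tempting false alarm
        else:
            labels.append("nonlure")
    return labels


print(classify_trials(["A", "B", "C", "A", "B", "D", "B"], n=3))
```

The final "B" repeats an item seen two positions back, so it is familiar but not a 3-back match; on trials like this, a correct "no" requires suppressing the tendency to respond.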

As we shall see in this chapter, the neurotransmitter dopamine plays an important role in learning. Dopamine receptors are abundant in PFC and are thought to serve a modulatory function, sharpening the response of PFC neurons. It might be hypothesized that having more dopamine would predict better learning performance. Unlike fun, however, you can have too much dopamine. Various studies have shown that the efficacy of dopamine follows an inverted U-shaped function when performance is plotted as a function of dopamine levels. As dopamine levels increase, learning performance improves, but only to a point. Beyond some level, further increases in dopamine reduce performance.

The inverted U-shaped function can help explain some of the paradoxical effects of L-dopa therapy in Parkinson’s disease. In these patients, the reduction in dopamine levels is most pronounced in dorsal (motor) striatum, at least in the early stages of the disease; dopamine levels in ventral striatum and the cerebral cortex are less affected. L-dopa treatment boosts dopamine levels back to normal in the dorsal striatum and thus improves motor function. The same treatment, however, produces an overdose effect in the ventral striatum and frontal lobe. This can result in impaired performance on tasks that depend on ventral striato-frontal circuitry such as reversal learning, where you have to change your behavior to gain a reward (Graef & Heekeren, 2010).
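The Parkinson’s example can be illustrated with a toy inverted-U curve. In this sketch the Gaussian shape, the optimum, the baseline levels, and the size of the L-dopa boost are all hypothetical numbers chosen only to show the qualitative pattern: the same uniform increase moves a depleted region toward the peak and pushes an already near-optimal region past it.

```python
import math


def performance(dopamine: float, optimum: float = 1.0, width: float = 0.5) -> float:
    """Hypothetical inverted-U: performance peaks at `optimum`, falls off on both sides."""
    return math.exp(-((dopamine - optimum) ** 2) / (2 * width ** 2))


# Hypothetical baseline dopamine levels (arbitrary units) in early Parkinson's disease:
# dorsal striatum is depleted; ventral striatum and frontal cortex are near normal.
baseline = {"dorsal striatum": 0.4, "ventral striatum / PFC": 1.0}
LDOPA_BOOST = 0.6  # hypothetical; applied equally to every region

for region, level in baseline.items():
    before = performance(level)
    after = performance(level + LDOPA_BOOST)
    print(f"{region}: {before:.2f} -> {after:.2f}")
```

Under these assumptions, the dorsal striatum moves up the curve (improved motor function), while the ventral striato-frontal circuit slides down the far side (the "overdose" effect on tasks such as reversal learning).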

Genetic data show that different alleles can affect dopamine levels, which in turn affect PFC function. The catechol-O-methyltransferase (COMT) gene codes for an enzyme that breaks down dopamine. Different alleles of COMT result in different levels of enzyme activity. People with the allele that lowers the rate of dopamine breakdown have higher dopamine levels, especially in the PFC. Interestingly, this allele has been implicated in an increased risk for schizophrenia and other neuropsychiatric phenotypes.


FIGURE 1 Correlation of control network activity and measures of fluid intelligence.

Participants performed a working memory task. Trials were divided into lure trials, in which a nonmatching stimulus had been seen recently (and thus could produce a false alarm), and no-lure trials, in which the nonmatching stimulus had not been seen. (a) Regions in prefrontal and parietal cortex that had increased BOLD response on lure trials compared to no-lure trials. (b) Correlation between individual scores on the Lure activity factor (Lure–No Lure) and measures of fluid intelligence (left) or a measure of working memory span from a different task (right).


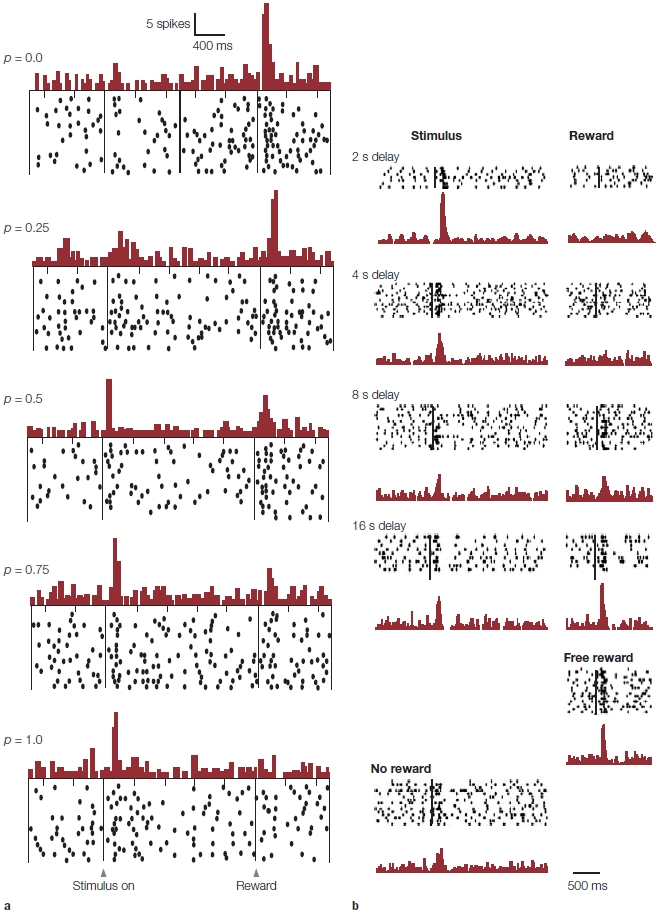

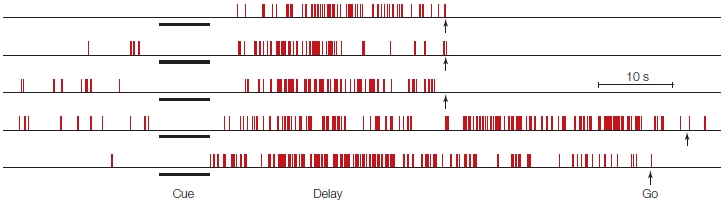

FIGURE 12.3 Prefrontal neurons can show sustained activity during delayed-response tasks.

Each row represents a single trial. The cue indicated the location for a forthcoming response. The monkey was trained to withhold the response until a “Go” signal (arrows) appeared. Each vertical tick represents an action potential. This cell did not respond during the cue interval. Rather, its activity increased when the cue was turned off, and activity persisted until the response.

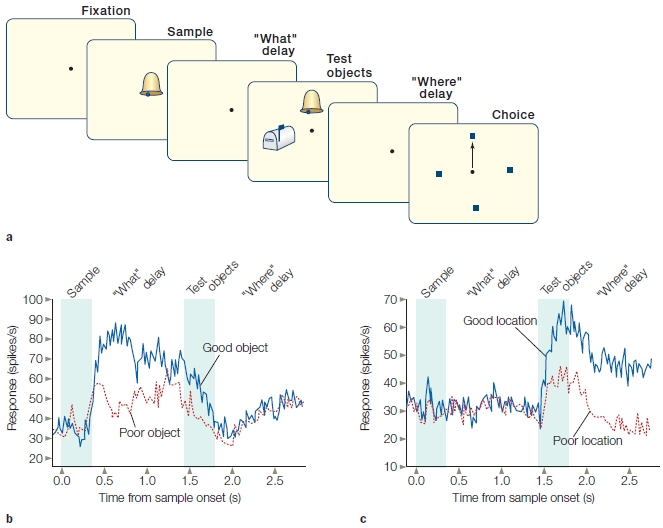

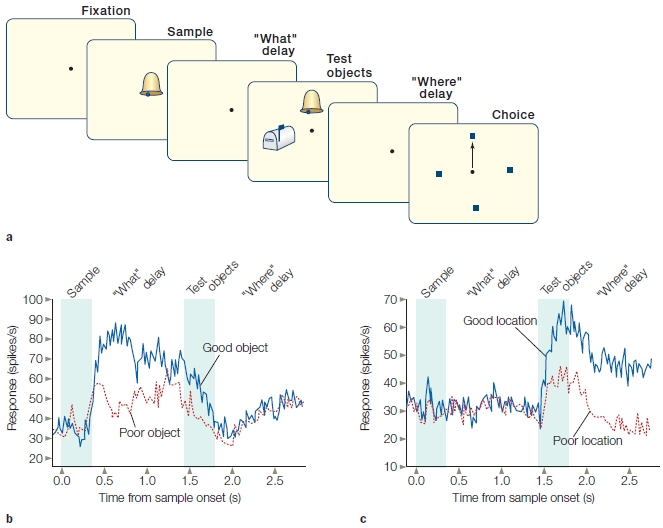

FIGURE 12.4 Coding of “what” and “where” information in single neurons of the prefrontal cortex in the macaque.

(a) Sequence of events in a single trial. See text for details. (b) Firing profile of a neuron that shows a preference for one object over another during the “what” delay. The neural activity is low once the response location is cued. (c) Firing profile of a neuron that shows a preference for one location. This neuron was not activated during the “what” delay.

Lateral prefrontal cortex (LPFC) cells could simply be providing a generic signal that supports representations in other cortical areas. Alternatively, they could be coding specific stimulus features. To differentiate between these possibilities, Earl Miller and his colleagues (Rao et al., 1997) trained monkeys on a working memory task that required successive coding of two stimulus attributes: identity and location. Figure 12.4a depicts the sequence of events in each trial. A sample stimulus is presented, and the animal must remember the identity of this object for a 1-s delay period in which the screen is blank. Then two objects are shown, one of which matches the sample. The position of the matching stimulus indicates the target location for a forthcoming response. The response, however, must be withheld until the end of a second delay. Within the lateral prefrontal cortex, cells characterized as “what,” “where,” and “what–where” were observed (Figure 12.4). “What” cells responded to specific objects, and this response was sustained over the delay period. “Where” cells showed selectivity to certain locations. In addition, about half of the cells were “what–where” cells, responding to specific combinations of “what” and “where” information. A cell of this type exhibited an increase in firing rate during the first delay period when the target was its preferred stimulus, and the same cell continued to fire during the second delay period if the response was directed to a specific location.
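The “what,” “where,” and “what–where” categories rest on a simple comparison: does a cell’s delay activity differ across objects (first delay), across locations (second delay), or both? The sketch below expresses that logic with a fixed firing-rate threshold. The threshold, the function names, and the example rates are hypothetical; published analyses typically rely on statistical tests rather than a single cutoff.

```python
from statistics import mean
from typing import Dict, List


def is_selective(rates_by_condition: Dict[str, List[float]], min_diff: float) -> bool:
    """True if mean firing rates (spikes/s) differ across conditions by at least min_diff."""
    means = [mean(rates) for rates in rates_by_condition.values()]
    return max(means) - min(means) >= min_diff


def classify_cell(delay1_by_object: Dict[str, List[float]],
                  delay2_by_location: Dict[str, List[float]],
                  min_diff: float = 5.0) -> str:
    """Hypothetical rule: 'what' = object-selective in delay 1,
    'where' = location-selective in delay 2, 'what-where' = both."""
    what = is_selective(delay1_by_object, min_diff)
    where = is_selective(delay2_by_location, min_diff)
    if what and where:
        return "what-where"
    if what:
        return "what"
    if where:
        return "where"
    return "nonselective"


# Hypothetical trial-by-trial rates for one cell.
print(classify_cell({"obj1": [20, 22], "obj2": [5, 6]},
                    {"left": [30, 32], "right": [8, 9]}))
```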

These results indicate that, in terms of stimulus attributes, cells in the prefrontal cortex exhibit task-specific selectivity. What’s more, the activity of these PFC cells depends on the monkey using that information to obtain a response; that is, the activity of the PFC cells is task-dependent. If the animal only has to passively view the stimuli, then the response of these cells is minimal right after the stimulus is presented and entirely absent during the delay period. Moreover, the response of these cells is malleable: If the task conditions change, the same cells become responsive to a new set of stimuli (Freedman et al., 2001).

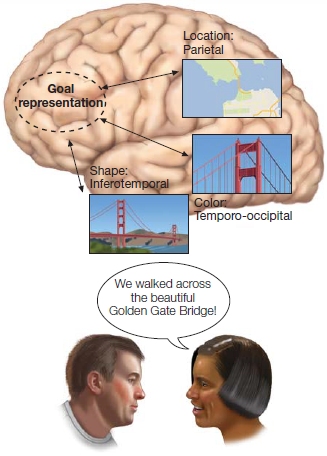

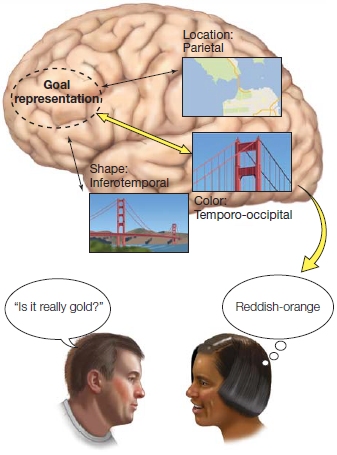

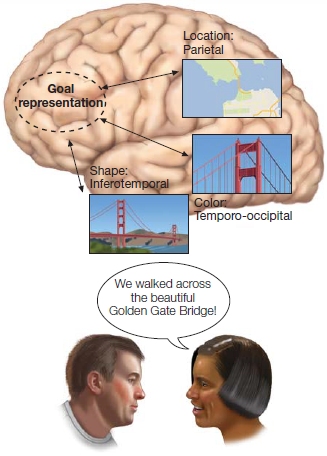

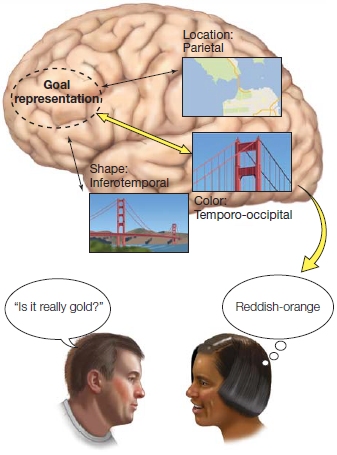

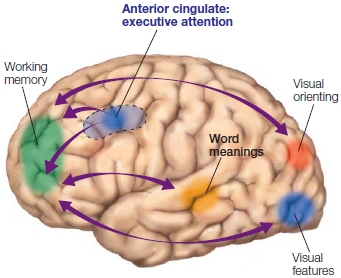

FIGURE 12.5 Working memory arises from the interaction of goal representations and the activation and maintenance of long-term knowledge.

In this example, the woman’s goal is to tell her friend about the highlights of her recent trip to San Francisco. Her knowledge of the Golden Gate Bridge requires activation of a distributed network of cortical regions that underlie the representation of long-term memory.

These cellular responses by themselves do not tell us what is represented by this protracted activity. It could be that long-term representations are stored in the prefrontal cortex, and the activity reflects the need to keep these representations active during the delay. Patients with frontal lobe lesions do not have deficits in long-term memory, however, so this hypothesis is unlikely. An alternative hypothesis is that prefrontal activation reflects a representation of the task goal and, as such, serves as an interface with task-relevant long-term representations in other neural regions (Figure 12.5). This latter hypothesis jibes nicely with the fact that the prefrontal cortex is extensively connected with postsensory regions of the temporal and parietal cortex. When a stimulus is perceived, interactions between prefrontal cortex and posterior brain regions can sustain a representation of it that facilitates goal-oriented behavior.

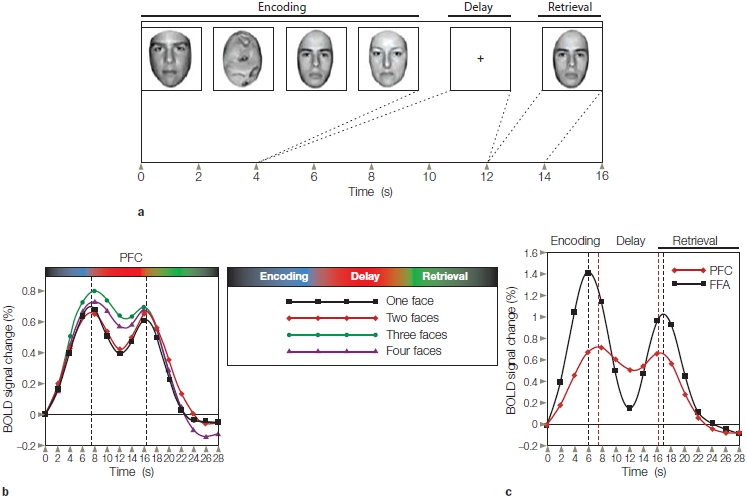

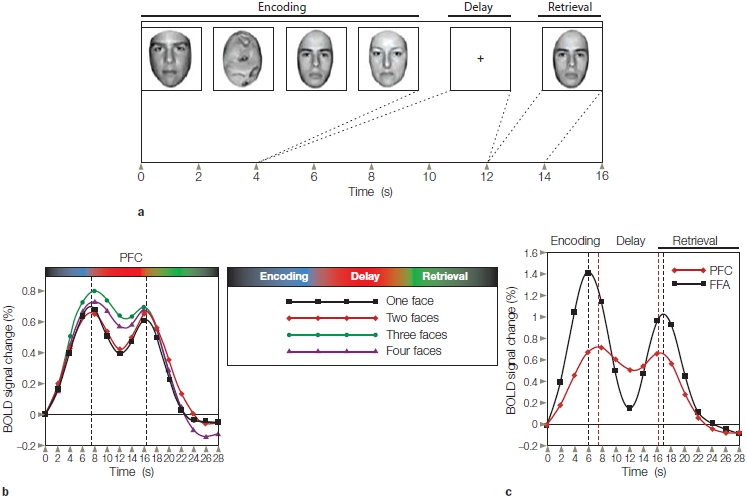

This alternative hypothesis has been examined in many functional imaging studies. In one representative study, researchers used a variant of a delayed-response task (Figure 12.6a). On each trial, four stimuli were presented successively for 1 s each during an encoding interval. The stimuli were either intact faces or scrambled faces, and participants were instructed to remember only the intact faces. By varying the number of intact faces presented during the encoding interval, the researchers manipulated the processing demands on working memory. After an 8-s delay, a face stimulus (the probe) was presented, and the participant had to decide whether the probe matched one of the faces presented during the encoding period. The BOLD response in lateral prefrontal cortex bilaterally began to rise with the onset of the encoding period and was maintained across the delay period even though the screen was blank (Figure 12.6b). This prefrontal response was sensitive to the demands on working memory: The sustained response during the delay period was greater when the participant had to remember three or four intact faces than when the participant had to remember just one or two.
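The load manipulation can be expressed as a small trial generator. This sketch assumes a 50% chance that the probe matches a studied face and draws faces from a hypothetical pool of identifiers; the 1-s stimulus duration and 8-s delay from the study are noted in comments but not simulated. The point it captures is that every trial shows four stimuli, while only the number of intact faces (the memory load) varies.

```python
import random
from typing import List, Optional, Tuple

# ("intact", face_id) or ("scrambled", None); each shown for 1 s in the study.
Stimulus = Tuple[str, Optional[str]]


def make_trial(load: int, face_pool: List[str], rng: random.Random,
               n_stimuli: int = 4) -> Tuple[List[Stimulus], str, bool]:
    """Build one trial: `load` intact faces among `n_stimuli` items, then a probe
    (shown after an 8-s delay in the study). Returns (sequence, probe, is_match)."""
    if not 1 <= load <= n_stimuli:
        raise ValueError("load must be between 1 and n_stimuli")
    faces = rng.sample(face_pool, load + 1)  # extra face serves as a nonmatching probe
    memory_set, foil = faces[:load], faces[load]
    sequence: List[Stimulus] = [("intact", f) for f in memory_set]
    sequence += [("scrambled", None)] * (n_stimuli - load)
    rng.shuffle(sequence)  # intact and scrambled faces interleaved
    is_match = rng.random() < 0.5  # hypothetical 50% match rate
    probe = rng.choice(memory_set) if is_match else foil
    return sequence, probe, is_match


pool = [f"face_{i}" for i in range(20)]  # hypothetical face identifiers
seq, probe, is_match = make_trial(load=3, face_pool=pool, rng=random.Random(0))
print(seq, probe, is_match)
```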

FIGURE 12.6 Functional MRI study of working memory.

(a) In a delayed-response task, a set of intact faces or scrambled faces is presented during an encoding period. After a delay period, a probe stimulus is presented and the participant indicates if that face was part of the memory set. (b) The BOLD response in lateral prefrontal cortex (PFC) rises during the encoding phase and remains high during the delay period. The magnitude of this effect is related to the number of faces that must be maintained in working memory. (c) The BOLD response in the lateral prefrontal cortex and the fusiform face area (FFA) rises during the encoding and retrieval periods. The black dotted and red dotted lines indicate the peak of activation in the FFA and PFC. During encoding, the peak is earlier in the FFA; during retrieval, the peak is earlier in the PFC.

By using faces, the experimenters could also compare activation in the prefrontal cortex with that observed in the fusiform face area (FFA), the inferior temporal (also called the inferotemporal) lobe region discussed in Chapter 6. The BOLD responses for these two regions are shown in Figure 12.6c, where the data are combined over the different memory loads. When the stimuli were presented, either during the encoding phase or as the memory probe, the BOLD response was much stronger in the FFA than in the prefrontal cortex. During the delay period, as noted already, the prefrontal response remained high. Note, however, that although the FFA BOLD response dropped substantially during the delay period, it did not drop to baseline, suggesting that this area continued to be active during the delay. By contrast, the BOLD response in other perceptual areas of the inferior temporal cortex went below baseline, the so-called rebound effect. Thus, although the sustained response in the FFA is small, it is considerably higher than what would be observed with nonfacial stimuli.

The timing of the peak activation in the prefrontal cortex and the FFA is also intriguing. During encoding, the peak response is slightly earlier in the FFA as compared to the prefrontal cortex. In contrast, during memory retrieval the peak response is slightly earlier in the prefrontal cortex. Although this study does not allow us to make causal inferences, the results are consistent with the general tenets of the model sketched in Figure 12.5. Lateral prefrontal cortex is critical for working memory by sustaining a representation of the task goal (to remember faces) and working in concert with inferotemporal cortex to sustain information across the delay period that is relevant for achieving that goal.


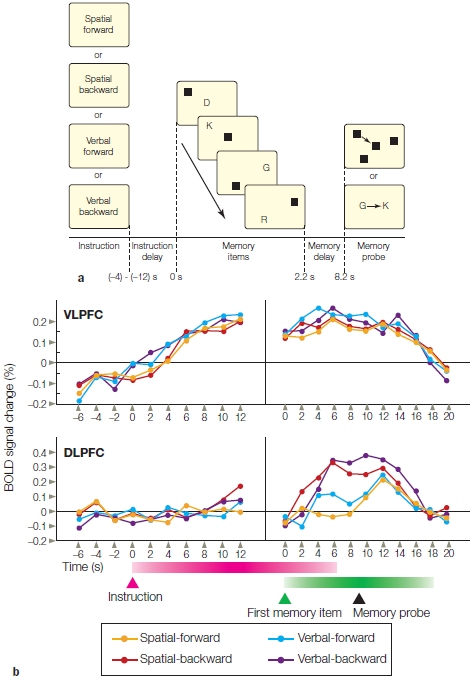

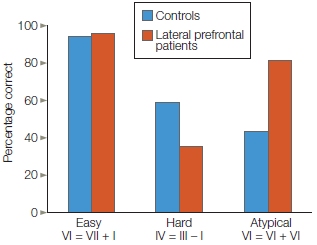

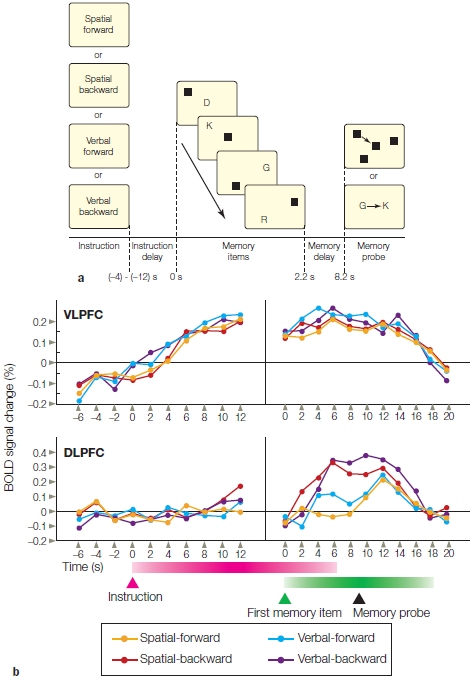

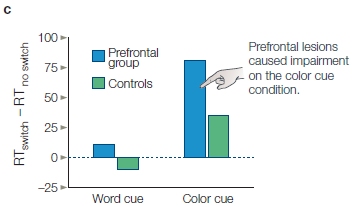

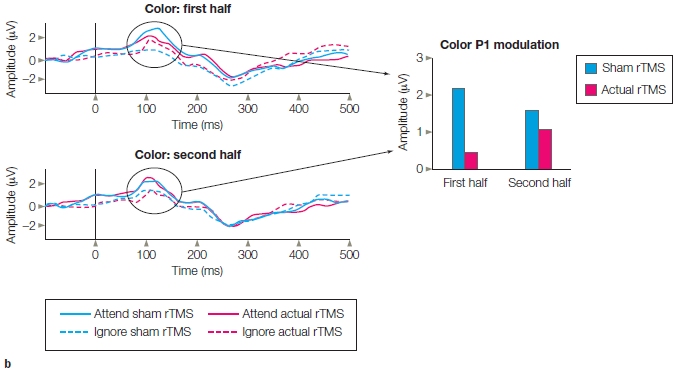

FIGURE 12.7 Subregions of the prefrontal cortex are sensitive to either contents or processing requirements of working memory.

(a) An instruction cue indicates the task required for the forthcoming trial. Following a delay period, a series of pictures containing letters and squares at various locations is presented. The participant must remember the order of the instruction-relevant stimuli to respond after the memory probe is presented. (b) Ventrolateral PFC is activated in a consistent fashion for all four tasks. Dorsolateral prefrontal cortex is more active when the stimuli must be remembered in reverse order, independent of whether the set is composed of locations or letters.


Processing Differences Across Prefrontal Cortex

Working memory is necessary for keeping task-relevant information active as well as manipulating that information to accomplish behavioral goals. Think about what happens when you reach for your wallet to pay a bill after dinner at a restaurant. Besides the listed price, you have to remember to add a tip, drawing on your long-term knowledge of the appropriate behavior in restaurants. With this goal in mind, you then do some fancy mental arithmetic (if your cell phone calculator isn’t handy). Michael Petrides (2000) proposed a model of working memory in which information held in posterior cortex is activated, retrieved, and maintained by the ventrolateral PFC (e.g., the standard percentage for a tip) and then manipulated together with other relevant information (e.g., the price of the dinner) in more dorsal regions of lateral PFC, enabling successful attainment of the goal.

Let’s consider the experimental setup shown in Figure 12.7a. Memory was probed in two different ways. When the judgment required that the items be remembered in the forward direction, study participants had to internally maintain a representation of the stimuli using a natural scheme in which the items could be rehearsed in the order presented. The backward conditions were more challenging because the representations of the items had to be both remembered and manipulated. As Figure 12.7b shows, the BOLD response was similar in the ventral prefrontal region (ventrolateral PFC) for all conditions. In contrast, the response in dorsolateral PFC was higher for the two backward conditions.
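The difference between the forward and backward conditions can be stated as a scoring rule: forward recall only requires reproducing the held sequence, whereas backward recall requires transforming it. This minimal sketch assumes the participant reports the whole sequence; the actual probe format in Figure 12.7 may differ, and the function names and letter items are hypothetical (the items could equally be locations).

```python
from typing import List, Sequence


def expected_order(items: Sequence[str], direction: str) -> List[str]:
    """Return the order in which the remembered items must be reported."""
    if direction == "forward":
        # Maintenance only: rehearse items in the order they were presented.
        return list(items)
    if direction == "backward":
        # Maintenance plus manipulation: the held sequence must be reordered.
        return list(reversed(items))
    raise ValueError("direction must be 'forward' or 'backward'")


def is_correct(presented: Sequence[str], reported: Sequence[str], direction: str) -> bool:
    """Hypothetical scoring: the full report must match the required order."""
    return list(reported) == expected_order(presented, direction)


print(expected_order(["F", "K", "R"], "backward"))
```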

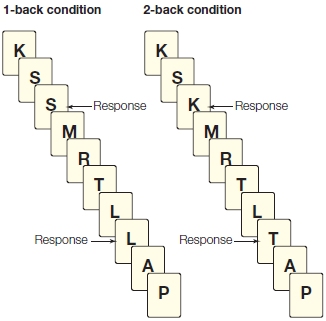

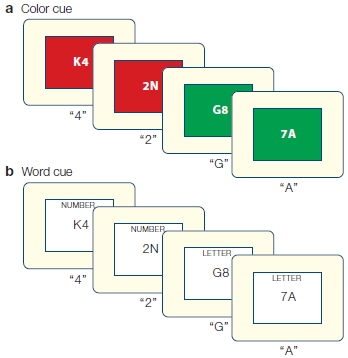

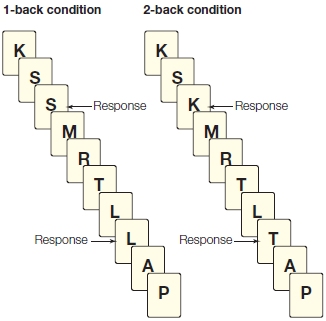

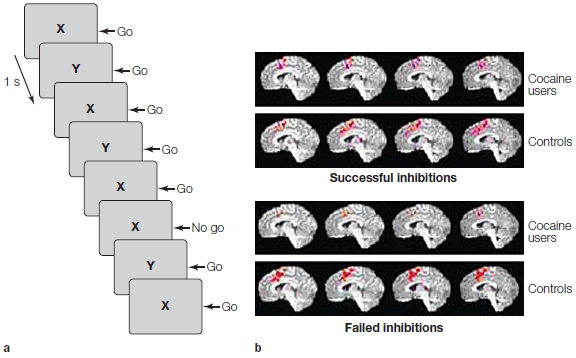

FIGURE 12.8 n-back tasks.

In n-back tasks, responses are required only when a stimulus matches one shown n trials earlier. The contents of working memory must be manipulated constantly in this task because the target is updated on each trial.

A similar pattern is observed in many imaging studies, indicating that dorsolateral prefrontal cortex is critical when the contents of working memory must be manipulated. One favorite testing variant for studying manipulations in working memory is the n-back task (Figure 12.8). Here the display consists of a continuous stream of stimuli. Participants are instructed to push a button when they detect a repeated stimulus. In the simplest version (n = 1), responses are made when the same stimulus is presented on two successive trials. In more complicated versions, n can equal 2 or more. With n-back tasks, it is not sufficient simply to maintain a representation of recently presented items; the working memory buffer must be updated continually to keep track of what the current stimulus must be compared to. Tasks such as n-back tasks require both the maintenance and the manipulation of information in working memory. Activation in the lateral prefrontal cortex increases as n-back task difficulty is increased, a response consistent with the idea that this region is critical for the manipulation operation.
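The updating demand of the n-back task can be captured with a fixed-size buffer: on every trial, the new item is compared with the item exactly n positions back, and then the buffer is updated so the oldest item drops out. This sketch uses Python’s `collections.deque` with `maxlen` as a stand-in for the working memory buffer; it is an illustration of the task logic, not a model of how PFC implements it.

```python
from collections import deque
from typing import Iterable, List


def nback_responses(stream: Iterable[str], n: int) -> List[bool]:
    """Return True for each item that matches the item n positions earlier."""
    if n < 1:
        raise ValueError("n must be at least 1")
    buffer = deque(maxlen=n)  # holds only the last n items
    responses = []
    for item in stream:
        # Once the buffer is full, buffer[0] is the item exactly n back.
        responses.append(len(buffer) == n and buffer[0] == item)
        buffer.append(item)  # update: the oldest item is discarded automatically
    return responses


print(nback_responses("ABAB", n=2))
```

Raising n enlarges the buffer that must be maintained and updated on every trial, which parallels the increase in lateral prefrontal activation with task difficulty.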

The n-back tasks capture an essential aspect of prefrontal function, emphasizing the active part of working memory. The recency task that patients with frontal lobe lesions fail also reflects the updating aspect of working memory. That task requires the participants to remember whether something is familiar as well as the context in which the stimulus was previously encountered.

Hierarchical Organization of Prefrontal Cortex

The maintain–manipulate distinction offers a processing-based account of differences in PFC function along a ventral–dorsal gradient. This idea was primarily based on activation differences in more posterior regions of PFC. But what about the most anterior region, the frontal pole? The study comparing verbal and spatial working memory provides one hint. The frontal pole was the one region that was recruited upon presentation of the instruction cue in all four conditions (shown in Figure 12.7) and that maintained a high level of activity throughout the trial. It has been proposed that the frontal pole is essential for integrating the specific contents of mental activity into a general framework. Consider that the participants in the scanner had to remember to study the test items and other details, such as staying awake, not moving, and responding quickly. They also had to remember the big picture, the context of the test: They had volunteered to participate in an fMRI study with hopes of doing well so that they could volunteer to work in the lab and run their own cool experiments in the future.

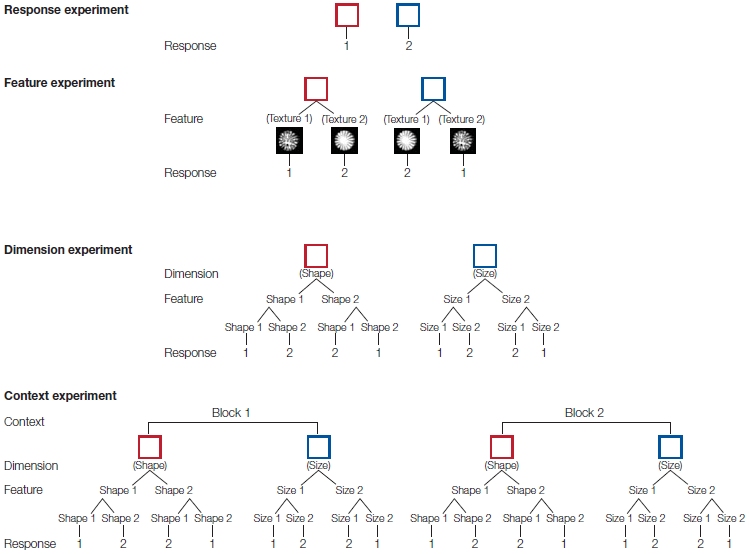

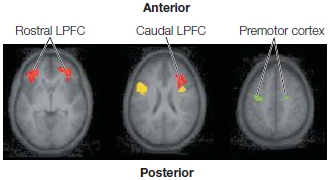

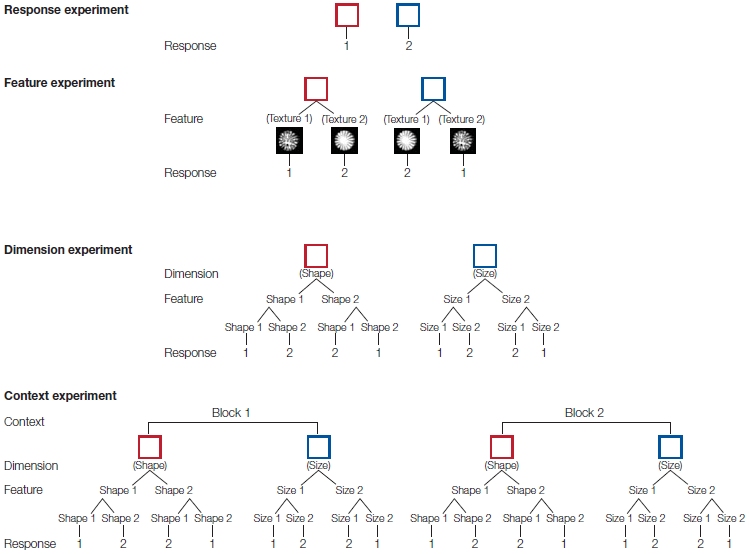

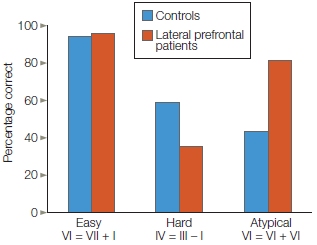

Work focused on the anterior–posterior gradient across the PFC suggests that activation patterns follow a crude hierarchy: For the simplest of working memory tasks, the activation may be limited to more posterior prefrontal regions or even secondary motor areas. For example, if the task requires the participant to press one key upon seeing a flower and another upon seeing an automobile, then these relatively simple stimulus–response rules can be sustained by the ventral prefrontal cortex and lateral premotor cortex. If the stimulus–response rule, however, is defined not by the objects themselves but by a color surrounding the object, then the frontal pole is also recruited (Bunge, 2004). When such contingencies are made even more challenging by changing the rules from one block of trials to the next, activation extends even farther in the anterior direction (Figure 12.9). These complex experiments demonstrate how goal-oriented behavior can require the integration of multiple pieces of information.
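The three levels of rule complexity can be written as nested lookups: a fixed stimulus–response mapping, a mapping selected by a contextual cue, and a context-to-mapping assignment that itself reverses from block to block. The stimuli, colors, key names, and the odd/even block rule in this sketch are hypothetical; it shows only how each level adds one more piece of information that must be integrated before a response can be chosen.

```python
from typing import Dict

# Level 1 (hypothetical): a fixed stimulus-response mapping.
SIMPLE_MAP: Dict[str, str] = {"flower": "left_key", "automobile": "right_key"}


def respond_simple(stimulus: str) -> str:
    return SIMPLE_MAP[stimulus]


# Level 2: the mapping in force depends on a contextual cue (surrounding color).
CONTEXT_MAPS: Dict[str, Dict[str, str]] = {
    "red": {"flower": "left_key", "automobile": "right_key"},
    "blue": {"flower": "right_key", "automobile": "left_key"},
}


def respond_contextual(stimulus: str, color: str) -> str:
    return CONTEXT_MAPS[color][stimulus]


# Level 3: which color signals which mapping reverses from block to block
# (hypothetical rule: reversed on odd-numbered blocks).
SWAP = {"red": "blue", "blue": "red"}


def respond_episodic(stimulus: str, color: str, block: int) -> str:
    effective_color = color if block % 2 == 0 else SWAP[color]
    return respond_contextual(stimulus, effective_color)


print(respond_episodic("flower", "red", block=1))
```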

As a heuristic, we can think of PFC function as organized along three separate axes (see O’Reilly, 2010):

- A ventral–dorsal gradient organized in terms of maintenance and manipulation as well as in a manner that reflects general organizational principles observed in more posterior cortex, such as the ventral and dorsal visual pathways for “what” versus “how.”

- An anterior–posterior gradient that varies in abstraction, where the more abstract representations engage the most anterior regions (e.g., frontal pole) and the least abstract engage more posterior regions of the frontal lobes. In the extreme, we might think of the most posterior part of the frontal lobe, the primary motor cortex, as the point where abstract intentions are translated into concrete movement.

- A lateral–medial gradient related to the degree to which working memory is influenced by information in the environment (more lateral) or information related to personal history and emotional states (more medial). In this view, lateral regions of PFC integrate external information that is relevant for current goal-oriented behavior, whereas more medial regions allow information related to motivation and potential reward to influence goal-oriented behavior.

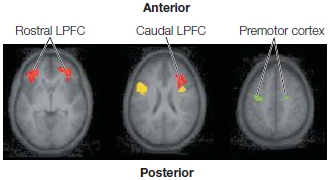

FIGURE 12.9 Hierarchical organization of the prefrontal cortex.

Prefrontal activation in an fMRI study increased along a posterior–anterior gradient as the experimental task became more complex. Activation in the premotor cortex shown in green was related to the number of stimulus–response mappings that had to be maintained. Activation in caudal LPFC shown in yellow was related to the contextual demands of the task. For example, a response to a letter might be made if the color of the letter was green, but not if it was white. Activation in rostral LPFC shown in red was related to variation in the instructions from one scanning run to the next. For example, the rules in one run might be reversed in the next run.

For example, suppose it is the hottest day of summer and you are at the lake. You think, “It would be great to have a frosty, cold drink.” This idea starts off as an abstract desire, but then is transformed into a concrete idea as you remember root beer floats from summer days past. This transformation entails a spread in activation from the most anterior regions of PFC to medial regions as orbitofrontal cortex helps with the recall of the high value you associate with your previous encounters with root beer floats. More posterior regions become active as you begin to develop an action plan. You become committed to the root beer float, and that goal becomes the center of working memory and thus engages lateral prefrontal cortex. You think about how good these drinks are at A&W restaurants, drawing on links from more ventral regions of PFC to long-term memories associated with their floats. You also draw on dorsal regions that will be essential for forming a plan of action to drive to the A&W. It’s a complicated plan, one that no other species would come close to accomplishing. Luckily for you, however, your PFC network is buzzing along now, highly motivated, with the ability to establish the sequence of actions required to accomplish your goal. Reward is just down the road.

TAKE-HOME MESSAGES

- Goal-oriented behaviors allow humans and other animals to interact in the world in a purposeful way.

- A goal-oriented action is based on the assessment of an expected reward and the knowledge that there is a causal relationship between the action and the reward. Goal-oriented behavior requires the retrieval, selection, and manipulation of task-relevant information.

- A habit is a response to a stimulus that is no longer based on a reward.

- Working memory consists of transient representations of task-relevant information. The prefrontal cortex (especially the lateral prefrontal cortex) is a key component in a working memory network.

- Physiological studies in primates show that cells in the prefrontal cortex remain active even when the stimulus is no longer present in delayed-response tasks. A similar picture is observed in functional imaging studies with humans. These studies also demonstrate that working memory requires the interaction of the prefrontal cortex with other brain regions.

- Various frameworks have been proposed to understand functional specialization within the prefrontal cortex. Three gradients have been described to account for processing differences in prefrontal cortex: anterior–posterior, ventral–dorsal, and lateral–medial.

Decision Making

Go back to the hot summer day when you thought, “Hmm ... that frosty, cold drink is worth looking for. I’m going to get one.” That type of goal-oriented behavior begins with a decision to pursue the goal. We might think of the brain as a decision-making device whose perceptual, memory, and motor capabilities evolved to support decisions that determine actions. Our brains start making decisions as soon as our eyes flutter open in the morning: Do I get up now, or stay cozy and warm for another hour? Should I surf my email or look over my homework before class? Do I skip class to take off for a weekend ski trip? Though humans tend to focus on complex decisions such as who gets their vote in the next election, all animals need to make decisions. Even an earthworm decides when to leave a patch of lawn and move on to greener pastures.

Rational observers, such as economists and mathematicians, tend to be puzzled when they consider human behavior. To them, our behavior frequently appears inconsistent or irrational, not based on what seems to be a sensible evaluation of the circumstances and options. For instance, why would someone who is concerned about eating healthy food eat a jelly doughnut? Why would someone who is paying so much money for tuition skip classes? (That is a question even your non-economist parents might ask.) And why are people willing to spend large sums of money to insure themselves against low-risk events (e.g., buying fire insurance even though the odds are overwhelmingly small that they will ever use it), yet are willing to engage in high-risk behaviors (e.g., driving after consuming alcohol)?

The field of neuroeconomics has emerged as an interdisciplinary enterprise with the goal of explaining the neural mechanisms underlying decision making. Economists want to understand how and why we make the choices we do. Many of their ideas can be tested both with behavioral studies and, as in all of cognitive neuroscience, with data from cellular activity, neuroimaging, or lesion studies. This work also helps us understand the functional organization of the brain.

Theories about our decision-making processes are either normative or descriptive. Normative decision theories define how people ought to make decisions that yield the optimal choice. Very often, however, such theories fail to predict what people actually choose. Descriptive decision theories attempt to describe what people actually do, not what they should do. Our inconsistent, sometimes suboptimal choices present less of a mystery to evolutionary psychologists. Our modular brain has been sculpted by evolution to optimize reproduction and survival in a world that differed quite a bit from the one we currently occupy. In that world, you might never pass up the easy pickings of a jelly doughnut (that is, something sweet and full of fat), and you would not engage in exercise solely for the sake of burning off valuable calories; conserving energy would likely be a much more powerful factor. Our current brains reflect this past, drawing on the mechanisms that were essential for survival in a world before readily available junk food. Many of these mechanisms, as with all brain functions, putter along below our consciousness. We are unaware that many of our decisions are made following simple, efficient rules (heuristics) that were sculpted and hard-coded by evolution. The results of these decisions may not seem rational, at least within the context of our current, highly mechanized world. But they may seem more rational if looked at from an evolutionary perspective.

Consistent with this point of view, the evidence indicates that we reach decisions in many different ways. As we touched on earlier, decisions can be goal oriented or habitual. The distinction is that goal-oriented decisions are based on the assessment of expected reward, whereas habits, by definition, are actions taken that are no longer under the control of reward: We simply execute them because the context triggers the action. A somewhat similar scheme classifies decisions as either action–outcome decisions or stimulus–response decisions. With an action–outcome decision, the decision involves some form of evaluation (not necessarily conscious) of the expected outcomes. If we repeat that action and the outcome is consistent, the process becomes habitual; that is, it becomes a stimulus–response decision. Another distinction can be made between decisions that are model-free or model-based. Model-based means that the agent has an internal representation of some aspect of the world and uses this model to evaluate different actions. For example, a cognitive map would be a model of the spatial layout of the world, allowing you to choose an alternative path if you find the road blocked as you set off for the A&W restaurant. Model-free means that you just have an input–output mapping, similar to stimulus–response decisions. Here you know that to get to the A&W, you simply look for the tall tower at the center of town, knowing that the A&W is right next door. Decisions that involve other people are known as social decisions. Dealing with other individuals tends to make things much more complicated, a topic we will return to in Chapters 13 and 14.
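The A&W example contrasts two ways of choosing a route. In this sketch the town layout, place names, and blocked road are hypothetical. The model-free strategy is a cached route that is simply executed; the model-based strategy searches an internal map (here with breadth-first search, one standard graph search among many) and can therefore route around an obstacle the cached route never anticipated.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical "cognitive map": each location lists its neighboring locations.
TOWN: Dict[str, List[str]] = {
    "lake": ["highway", "back_road"],
    "highway": ["lake", "tower"],
    "back_road": ["lake", "main_street"],
    "main_street": ["back_road", "tower"],
    "tower": ["highway", "main_street", "a_and_w"],
    "a_and_w": ["tower"],
}

# Model-free: a cached input-output habit ("head for the tall tower").
CACHED_ROUTE = ["lake", "highway", "tower", "a_and_w"]


def route_is_open(route: List[str], blocked: Set[Tuple[str, str]]) -> bool:
    """Check every road segment of a fixed route against the blocked roads."""
    return all((a, b) not in blocked and (b, a) not in blocked
               for a, b in zip(route, route[1:]))


def plan_route(graph: Dict[str, List[str]], start: str, goal: str,
               blocked: Set[Tuple[str, str]]) -> Optional[List[str]]:
    """Model-based: breadth-first search over the map, skipping blocked roads."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        here = path[-1]
        if here == goal:
            return path
        for nxt in graph[here]:
            if nxt in visited or (here, nxt) in blocked or (nxt, here) in blocked:
                continue
            visited.add(nxt)
            frontier.append(path + [nxt])
    return None  # no open route exists


roadwork = {("highway", "tower")}
print(route_is_open(CACHED_ROUTE, roadwork))           # the habit fails
print(plan_route(TOWN, "lake", "a_and_w", roadwork))   # the model finds a detour
```

The trade-off is the usual one: the cached route costs almost nothing to use but breaks when the world changes, whereas planning is slower but flexible.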

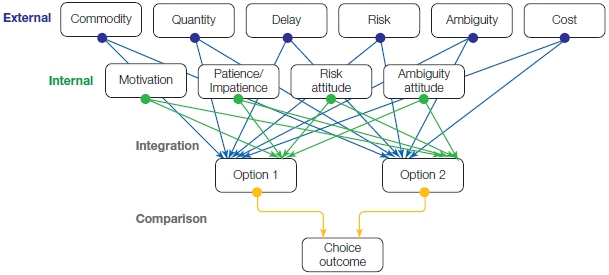

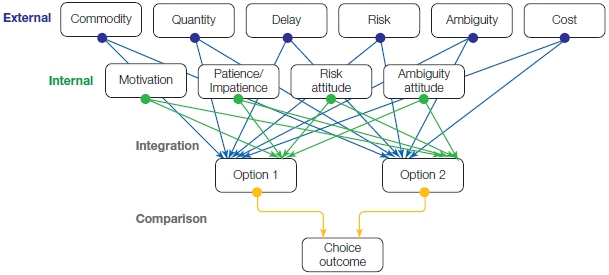

FIGURE 12.10 Decisions require the integration and evaluation of multiple factors.

In this example, the person is asked to choose between two objects, each of which has an inferred value (offer values). The values involve some weighted combination of multiple sources of information. Some sources are external to the agent: What will I gain (commodity), how much reward will be obtained, will I get the reward right away, and how certain am I to obtain the reward? Other factors are internal to the agent: Am I feeling motivated, am I willing to wait for the reward, is the risk worth it?

Is It Worth It? Value and Decision Making

A cornerstone idea in economic models of decision making is that before we make a decision, we first compute the value of each of the options and then make some sort of comparison of the different values (Padoa-Schioppa, 2011). Decision making in this framework is about making choices that will maximize value. For example, we want to obtain the highest possible reward or payoff (Figure 12.10). It is not enough, however, to just think about the possible reward level. We also have to consider the likelihood of receiving the reward, as well as the costs required to obtain that reward. Although many lottery players dream of winning the $1,000,000 prize, some may forgo a chance at the big money and buy a ticket with a maximum payoff of $100, knowing that their odds are much higher.
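The lottery example can be made concrete with an expected-value calculation, where value is the probability-weighted payoff minus the cost of playing. The odds and the $1 ticket price below are hypothetical numbers, not real lottery figures. Exact fractions are used so the arithmetic is not blurred by floating-point rounding.

```python
from fractions import Fraction


def expected_value(payoff, probability, cost=Fraction(0)):
    """Probability-weighted payoff minus the cost of obtaining it."""
    return payoff * probability - cost


TICKET_PRICE = Fraction(1)  # hypothetical: both tickets cost $1
P_BIG = Fraction(1, 10_000_000)  # hypothetical odds of the $1,000,000 prize
P_SMALL = Fraction(1, 500)  # hypothetical odds of the $100 prize

big = expected_value(1_000_000, P_BIG, TICKET_PRICE)
small = expected_value(100, P_SMALL, TICKET_PRICE)
odds_ratio = P_SMALL / P_BIG  # how many times more often the small ticket wins

print(big, small, odds_ratio)
```

With these made-up numbers, both tickets lose money on average, but the $100 ticket loses slightly less and wins 20,000 times as often. A pure expected-value calculation still leaves out the internal factors in Figure 12.10, such as willingness to take risks.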

Components of Value

To understand the neural processes involved in decision making, we need to understand how the brain computes value and processes rewards. Some rewards, such as food, water, or sex, are primary reinforcers: They have a direct benefit for survival fitness. Their value, or our response to these reinforcers, is to some extent hardwired in our genetic code. But reward value is also flexible and shaped by experience. If you are truly starving, an item of disgust—say, a dead mouse—suddenly takes on reinforcing properties. Secondary reinforcers, such as money and status, are rewards that have no intrinsic value themselves but become rewarding through their association with other forms of reinforcement.

Reward value is not a simple calculation. Value has various components, both external and internal, that are integrated to form an overall subjective worth. Consider this scenario: You are out fishing along the shoreline and thinking about whether to walk around the lake to an out-of-the-way fishing hole. Do you stay put or pack up your gear? Establishing the value of these options requires considering several factors, all of which contribute to the representation of value:

Payoff: What kind and how much reward do the options offer? At the current spot, you might land a small trout or perhaps a bream. At the other spot, you’ve caught a few largemouth bass.

Probability: How likely are you to attain the reward? You might remember that the current spot almost always yields a few catches, whereas you’ve most often come back empty-handed from the secret hole.

Effort or cost: If you stay put, you can start casting right away. Getting to the fishing hole on the other side of the lake will take an hour of scrambling up and down the hillside. One form of cost that has been widely studied is temporal discounting. How long are you willing to wait for a reward? You may not catch large fish at the current spot, but you could feel that satisfying tug 30 minutes sooner if you stay where you are.

Context: This factor involves external things, like the time of day, as well as internal things, such as whether you are hungry or tired, or looking forward to an afternoon outing with some friends. Context also includes novelty—you might be the type who values an adventure and the possibility of finding an even better fishing hole on your way to the other side of the lake, or you might be feeling cautious, eager to go with a proven winner.

Preference: You may just like one fishing spot better than another for its aesthetics or a fond memory.
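A toy subjective-value function shows one way these components might combine. This is a sketch under stated assumptions. All numbers are hypothetical, and effort and preference enter as simple additive terms. Delay is handled with a hyperbolic discount, V = A/(1 + kD), which is a form common in the temporal-discounting literature but not one this section specifies. Context is represented only by changing the discount rate `k` and the hypothetical `effort_weight`.

```python
from dataclasses import dataclass

@dataclass
class Spot:
    name: str
    payoff: float       # subjective size of the expected catch (arbitrary units)
    probability: float  # chance of catching anything at all
    delay_min: float    # minutes before you can start casting
    effort: float       # physical cost of getting there (arbitrary units)
    preference: float   # idiosyncratic liking for the spot (arbitrary units)

def subjective_value(spot: Spot, k: float = 0.05, effort_weight: float = 1.0) -> float:
    """Toy value: k and effort_weight stand in for context (impatience, fatigue)."""
    expected_payoff = spot.payoff * spot.probability
    discounted = expected_payoff / (1.0 + k * spot.delay_min)  # hyperbolic discounting (assumed form)
    return discounted - effort_weight * spot.effort + spot.preference

stay = Spot("current spot", payoff=2.0, probability=0.9, delay_min=0, effort=0.0, preference=0.0)
hike = Spot("secret hole", payoff=8.0, probability=0.3, delay_min=60, effort=0.5, preference=0.2)

contexts = [("tired and impatient", {"k": 0.05, "effort_weight": 1.0}),
            ("rested and patient", {"k": 0.005, "effort_weight": 0.2})]
for label, params in contexts:
    best = max((stay, hike), key=lambda s: subjective_value(s, **params))
    print(f"{label}: go to the {best.name}")
```

With identical payoffs and probabilities, only the context parameters change. That alone is enough to flip the choice from staying put to making the hike, which illustrates how the same person can choose differently from hour to hour.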

As you can see, many factors contribute to subjective value, and they can vary immensely from person to person and from hour to hour. Given such variation, it is not surprising that people are highly inconsistent in their decision-making behavior. A choice that seems irrational in another person might not be, if we could peek into that person’s up-to-date representation of the value of the current options.

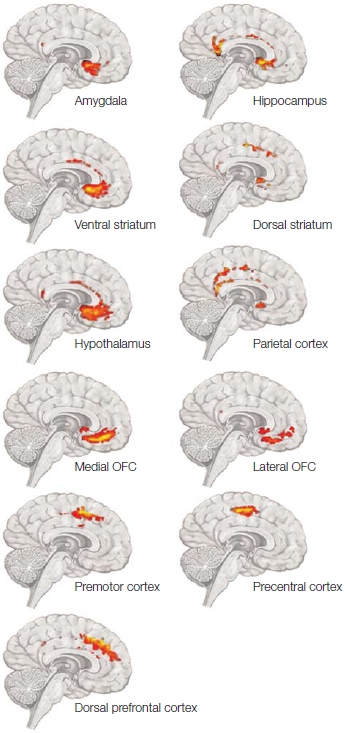

Representation of Value

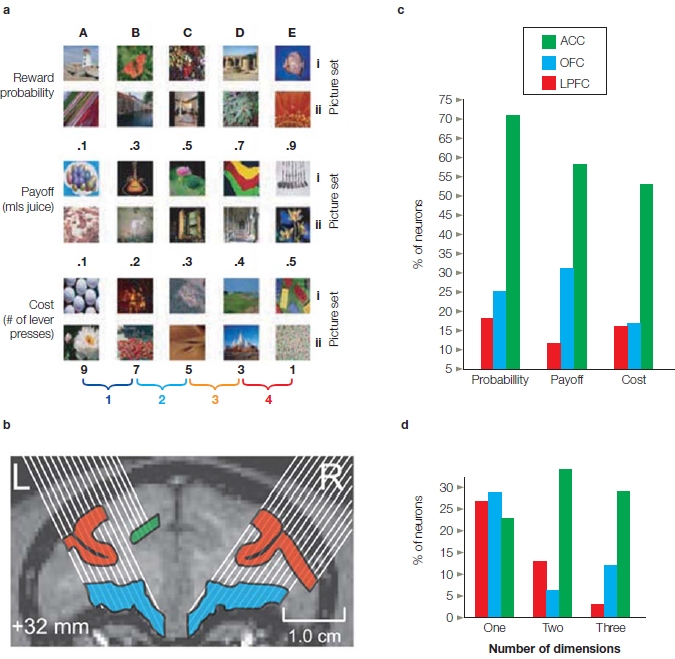

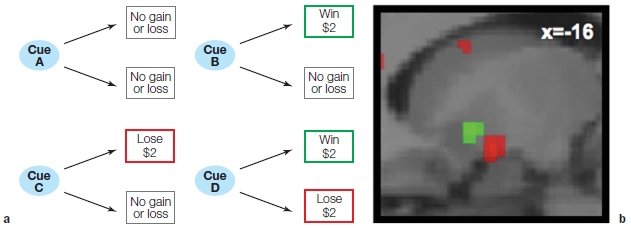

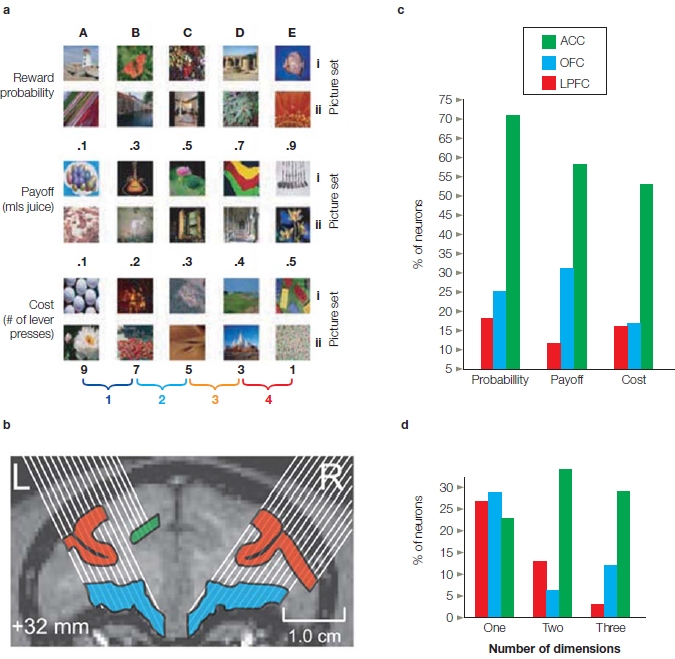

How and where is value represented in the brain? Is it all in one place or represented separately? Multiple single-cell studies and fMRI studies have been conducted to examine this question. Jon Wallis and his colleagues (Kennerley et al., 2009) looked at value representation in the frontal lobes of monkey brains. They targeted this region because damage to the frontal lobes is associated with impairments in decision making and goal-oriented behavior. While the monkey performed decision-making tasks, the investigators used multiple electrodes to record from cells in three regions: the anterior cingulate cortex (ACC), the lateral prefrontal cortex (LPFC), and the orbitofrontal cortex (OFC). Besides comparing cellular activity in different locations, the experimenters manipulated cost, probability, and payoff. The key question was whether the different areas would show selectivity for particular dimensions. For instance, would OFC be selective for payoff, LPFC for probability, and ACC for effort? Or would they find an area that coded overall “value,” independent of the particular variable?

The monkey’s decision involved choosing between two pictures (Figure 12.11). Each picture was associated with a specific value on one of three dimensions: the cost of obtaining the payoff, the amount of payoff, or the probability of payoff. For instance, the guitar was always associated with a payoff of three drops of juice, and the flower was always associated with a two-drop juice payoff. On any given trial, choice value was manipulated along a single dimension while the other two dimensions were held constant. So, for example, when cost (the number of lever presses necessary to gain a reward) varied between the pictures, the probability and the payoff (a specific amount of juice) were constant. Each area contained cells that responded to each dimension, as well as cells that responded to multiple dimensions. Interestingly, there was no clear segregation between the representations of the three dimensions of value. There were some notable differences, however. First, many cells, especially in ACC but also in OFC, responded to all three dimensions. A pattern like this suggests that these cells represent an overall measure of value. In contrast, LPFC cells usually encoded just one decision variable. Second, cells that represented only one variable usually coded payoff or probability; cells that responded to effort usually responded to another variable as well. The value signal in the cells’ activity generally preceded the signal associated with motor preparation. This suggests that choice value is computed before the response is selected, an observation that makes intuitive sense.

FIGURE 12.11 Cellular representation of value in the prefrontal cortex.

(a) Stimuli used to test choice behavior in the monkey. On each trial, two neighboring stimuli from one row were presented and the animal chose one with a keypress. The stimuli varied in either probability of reward, amount of payoff, or number of required keypresses. Each row shows items in ascending order of value. (b) Simultaneous recordings were made from multiple electrodes that were positioned in lateral prefrontal cortex, orbitofrontal cortex, or the anterior cingulate cortex. (c) Percentage of neurons in one monkey whose response rate varied with each dimension. Note that some neurons increased their firing rate with increasing value and others decreased their firing rate with increasing value. (d) The percentage of neurons responding to one, two, or three dimensions.
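Panel (d) summarizes neurons by how many value dimensions each one encodes. The tally itself is simple bookkeeping, sketched below on hypothetical toy labels rather than the recorded data. The function name `dimension_breakdown` and the toy cells are illustrative assumptions. The statistical test that decides whether a cell "encodes" a dimension is not modeled here.

```python
from collections import Counter

# Hypothetical toy labels, NOT recorded data: for each cell, the set of value
# dimensions whose variation significantly changed its firing rate.
cells_by_area = {
    "ACC":  [{"payoff", "probability", "effort"}, {"payoff", "effort"},
             {"probability"}, {"payoff", "probability", "effort"}],
    "OFC":  [{"payoff"}, {"payoff", "probability", "effort"}, {"probability"}],
    "LPFC": [{"payoff"}, {"probability"}, {"payoff", "effort"}, set()],
}

def dimension_breakdown(cells):
    """Percentage of value-selective cells encoding exactly 1, 2, or 3 dimensions."""
    selective = [c for c in cells if c]  # ignore cells that encode no dimension
    if not selective:
        return {1: 0.0, 2: 0.0, 3: 0.0}
    counts = Counter(len(c) for c in selective)
    return {n: 100.0 * counts[n] / len(selective) for n in (1, 2, 3)}

for area, cells in cells_by_area.items():
    breakdown = dimension_breakdown(cells)
    print(area, {n: round(p, 1) for n, p in breakdown.items()})
```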

Similar studies with human participants have been conducted with fMRI. Here the emphasis has been on how different dimensions preferentially activate different neural regions. For example, in one study, OFC activation was closely tied to variation in payoff, whereas activation in the striatum of the basal ganglia was related to effort (Croxson et al., 2009). In another study, lateral PFC activation was associated with the probability of reward, whereas the delay between the time of the action and the payoff was correlated with activity in medial PFC and lateral parietal lobe (Peters & Büchel, 2009).

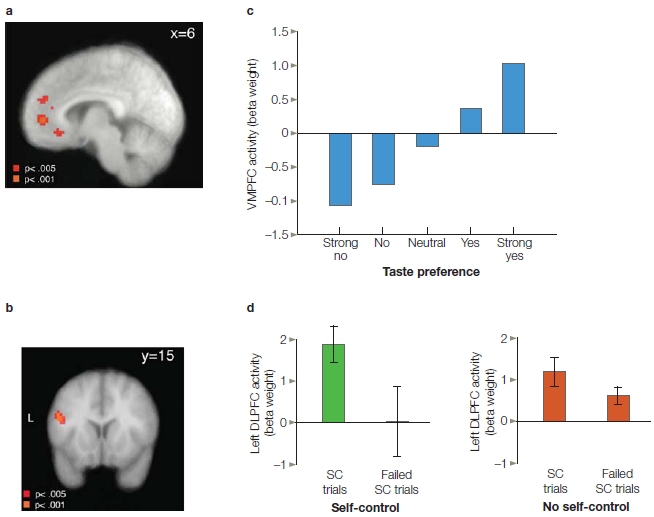

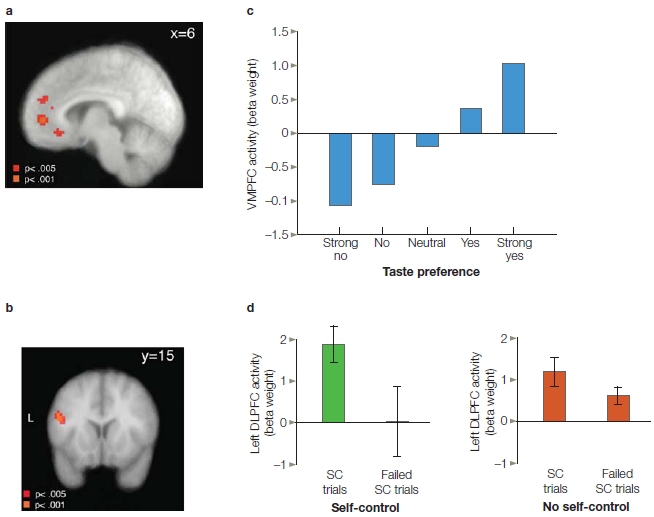

What happens in the all-too-common situation when a payoff has high short-term value but adverse long-term value? For example, what happens when dieters must choose between a tasty but unhealthy treat (like the jelly doughnut) and a healthy but perhaps less tasty one (the yogurt breakfast)? Interestingly, activity in the ventromedial prefrontal cortex (VMPFC), including OFC, was correlated with taste preference, regardless of whether the item was healthy (Figure 12.12). In contrast, activity in the dorsolateral prefrontal cortex (DLPFC) was associated with the degree of control (Hare et al., 2009). Activity here was greater on trials in which a preferred but unhealthy item was refused than on trials in which that item was selected. Moreover, this difference was much greater in people who were judged to be better at exercising self-control. It may be that the VMPFC originally evolved to forecast the short-term value of stimuli. Over evolutionary time, structures such as the DLPFC began to modulate more primitive, or primary, value signals, providing humans with the ability to incorporate long-term considerations into value representations. These findings also suggest that a fundamental difference between successful and failed self-control might be the extent to which the DLPFC can modulate the value signal encoded in the VMPFC (see Chapter 13 for a related take on VMPFC).
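The modulation account can be sketched as a toy model. In it, the value signal always includes taste, and a hypothetical DLPFC control weight between 0 and 1 determines how much of the health rating is folded in. The linear form, the rating scale, and the zero decision reference are assumptions made for illustration. They are not a reconstruction of the analysis in Hare et al. (2009).

```python
def value_signal(taste: float, health: float, control: float) -> float:
    """Toy VMPFC-like value: taste always counts; a hypothetical DLPFC control
    weight (0 = none, 1 = full) scales how much the health rating is added in."""
    if not 0.0 <= control <= 1.0:
        raise ValueError("control must lie between 0 and 1")
    return taste + control * health

def choose(taste: float, health: float, control: float, reference: float = 0.0) -> str:
    """Eat the item if its modulated value beats a neutral reference, else refuse it."""
    return "eat" if value_signal(taste, health, control) > reference else "refuse"

doughnut = {"taste": 2.0, "health": -3.0}  # hypothetical ratings: tasty but unhealthy
for control in (0.2, 0.9):
    print(f"control {control}: {choose(**doughnut, control=control)}")
```

The same tasty item is accepted under weak control and refused under strong control, which matches the pattern the DLPFC-modulation account predicts. Nothing here models how the control weight itself is set.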

Overall, the neurophysiological and neuroimaging studies indicate that OFC plays a key role in the representation of value. More lateral regions of the PFC are important for some form of modulatory control on these representations or the actions associated with them. We have seen one difference between the neurophysiological and neuroimaging results: The former emphasize a distributed picture of value representation, and the latter emphasize specialization within components of a decision-making network. The discrepancy, though, is likely due to the differential sensitivity of the two methods. The fine-grained spatial resolution of neurophysiology allows us to ask if individual cells are sensitive to particular dimensions. In contrast, fMRI studies generally provide relative answers, asking if an area is more responsive to variation in one dimension compared to another dimension.

FIGURE 12.12 Dissociation of VMPFC and DLPFC during a food selection task.

(a) Regions in VMPFC that showed a positive relationship between the BOLD response and food preference. This signal provides a representation of value. (b) Region in DLPFC in which the BOLD response was related to self-control. The signal was stronger on trials in which the person exhibited self-control (did not choose a highly rated but nutritionally poor food) than on trials in which they failed to exhibit self-control. The difference was especially pronounced in participants who, based on survey data, were rated as having good self-control. (c) Beta values in VMPFC increased with preference. (d) Region of left DLPFC showing greater activity on successful self-control trials in the self-control group than in the no-self-control group. Both groups showed greater activity in DLPFC for successful versus failed self-control trials. SC = self-control.

More Than One Type of Decision System?

The laboratory is an artificial environment. Many of the experimental paradigms used to study decision making involve conditions in which the participant has ready access to the different choices and at least some information about the potential rewards and costs. Thus, participants are in a position where they can calculate and compare values. In the natural environment, especially the one our ancestors roamed about in, this situation is the exception and not the norm. Rather, we frequently face situations where we must choose between an option with a known value and one or more options of unknown value (Rushworth et al., 2012). The classic example here is foraging: Animals have to make decisions about where to seek food and water, precious commodities that tend to occur in restricted locations and for only a short time. Foraging requires decisions such as, “Do I keep eating/hunting/fishing here or move on to (what may or may not be) greener pastures, birdier bushes, or fishier water holes?” In other words, do I continue to exploit the resources at hand or set out to explore in hopes of finding a richer niche? Here the animal must calculate the value of the current option, the richness of the overall environment, and the costs of exploration.

Worms, bees, wasps, spiders, fish, birds, seals, monkeys, and human subsistence foragers all obey a basic principle in their foraging behavior. This principle is referred to as the marginal value theorem (Charnov, 1974). The animal exploits a foraging patch until its intake rate falls below the average intake rate for the overall environment. At that point, the animal becomes exploratory. Because this behavior is so consistent across so many species, scientists have hypothesized that this tendency may be deeply encoded in our genes. Indeed, biologists have identified a specific set of genes that influence how worms decide when it is time to start looking for “greener lawns” (Bendesky et al., 2011).
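The marginal value theorem can be illustrated numerically. The sketch below assumes a hypothetical patch whose cumulative yield follows an exponential diminishing-returns curve, plus a fixed travel time between patches. It grid-searches for the residence time that maximizes long-run intake. It then checks that, at that time, the in-patch intake rate has fallen to the environment's average rate, as the theorem states. The gain function and its parameters (`G`, `TAU`) are assumptions, not values from Charnov (1974).

```python
import math

G, TAU = 10.0, 5.0  # hypothetical patch: total food available, depletion time constant (min)

def gain(t: float) -> float:
    """Cumulative food gathered after t minutes in a patch (diminishing returns)."""
    return G * (1.0 - math.exp(-t / TAU))

def intake_rate(t: float) -> float:
    """Instantaneous intake rate after t minutes: the derivative of gain()."""
    return (G / TAU) * math.exp(-t / TAU)

def optimal_leave_time(travel: float, dt: float = 0.01, t_max: float = 100.0):
    """Grid-search the residence time that maximizes the long-run intake rate."""
    best_t, best_rate = dt, 0.0
    for i in range(1, int(t_max / dt) + 1):
        t = i * dt
        rate = gain(t) / (t + travel)  # food per minute over one stay-plus-travel cycle
        if rate > best_rate:
            best_t, best_rate = t, rate
    return best_t, best_rate

for travel in (2.0, 10.0):
    t_star, env_rate = optimal_leave_time(travel)
    print(f"travel {travel:4.1f} min: leave after {t_star:.2f} min; "
          f"in-patch rate {intake_rate(t_star):.3f} vs environment average {env_rate:.3f}")
```

With these numbers, the optimal stay is roughly 3.9 minutes for a 2-minute trip and roughly 7.5 minutes for a 10-minute trip. The costlier it is to reach a new patch, the longer the forager should keep exploiting the current one.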

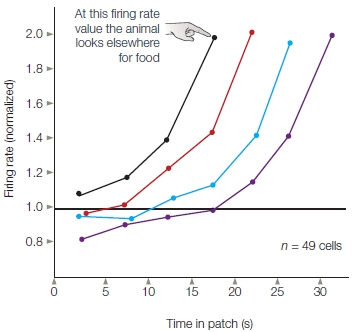

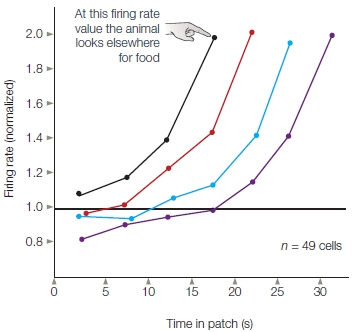

Benjamin Hayden (2011) and his colleagues investigated the neural mechanisms that might be involved in foraging-like decisions. They hypothesized that such decisions require a decision variable, a representation that specifies the current value of leaving a patch, even if the alternative choice is relatively unknown. When this variable reaches a threshold, a signal is generated indicating that it is time to look for greener pastures. A number of factors influence how soon this threshold is reached: the current expected payoff, the expected benefits and costs for traveling to a new patch, and the uncertainty of obtaining reward at the next location. For instance, in our fishing example, if it takes two hours instead of one to go around the lake to a better fishing spot, you are less likely to move.
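The threshold idea can be sketched with a hypothetical decision variable. In this toy version, the value of leaving grows by the amount the patch has been depleted and is reduced by the travel cost. The forager leaves on the first trial at which that value reaches a fixed threshold. The linear update, the function and parameter names, and the threshold value are all assumptions. The sketch also ignores uncertainty about the next patch.

```python
def trials_before_leaving(start_reward: float, depletion: float, travel_cost: float,
                          threshold: float = 1.0, max_trials: int = 1000) -> int:
    """Return the trial on which a hypothetical 'value of leaving' signal reaches threshold."""
    if depletion <= 0:
        raise ValueError("depletion must be positive, or the forager never leaves")
    reward = start_reward
    for trial in range(1, max_trials + 1):
        reward -= depletion  # each stay depletes the patch
        # decision variable: how far the patch has dropped below a fresh patch,
        # minus what it would cost to travel to one
        value_of_leaving = (start_reward - reward) - travel_cost
        if value_of_leaving >= threshold:
            return trial
    return max_trials

for cost in (1.0, 2.0):
    print(f"travel cost {cost}: leave after trial {trials_before_leaving(10.0, 0.5, cost)}")
```

Suppose a starting reward of 10 units is depleted by 0.5 per trial. A travel cost of 1 then produces a departure after trial 4, and doubling the travel cost to 2 delays departure to trial 6. This mirrors the fishing example, in which a longer walk makes moving less attractive.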

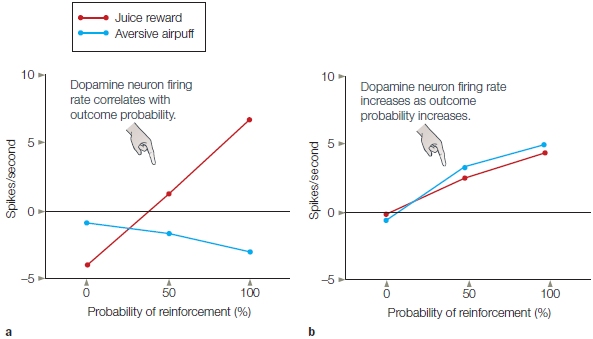

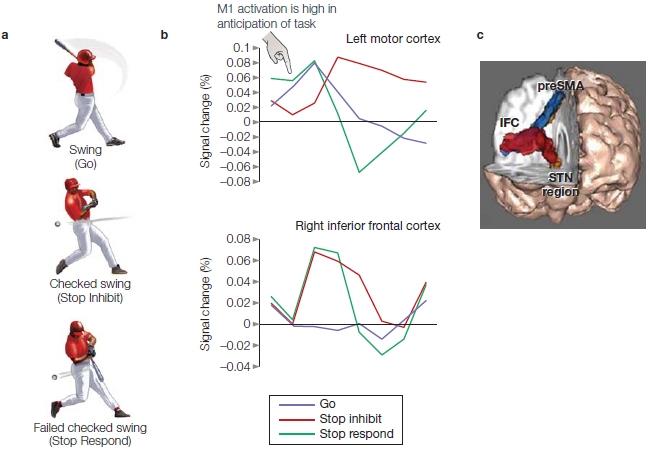

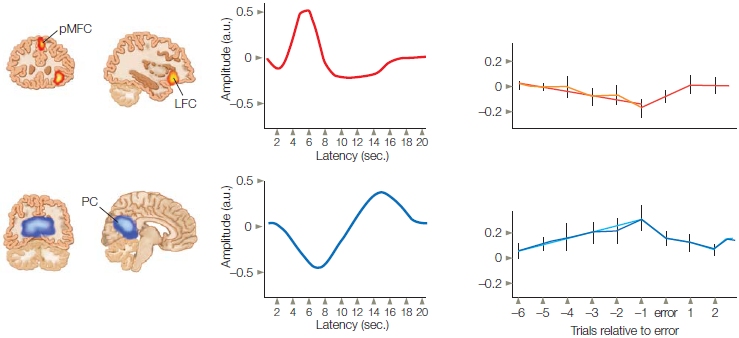

FIGURE 12.13 Neural activity in ACC is correlated with decision by monkeys to change to a new “patch” in a sequential foraging task. Data were sorted according to the amount of time the animal stayed in one patch (from shortest to longest: black, red, blue, purple). For each duration, the animal switched to a new patch when the firing rate of the ACC neurons was double the normal level of activity.

Hayden recorded from cells in the ACC of monkeys, choosing this region because it has been linked to the monitoring of actions and their outcomes (which we discuss later in the chapter). The animals performed a virtual foraging task in which they chose one of two targets. One stimulus was followed by a reward after a short delay, but the amount decreased with each successive trial (equivalent to remaining in a patch and depleting the food supply by eating it). The other stimulus allowed the animals to change the outcome contingencies. They received no reward on that trial, but after waiting for a variable period of time (the cost of exploration), the choices were presented again and the payoff for the rewarded stimulus was reset to its original value (a greener patch). Consistent with the marginal value theorem, the monkeys were less likely to choose the rewarded stimulus as the amount of reward decreased, and they stayed in a patch longer when the waiting time was longer. What’s more, cellular activity in ACC was highly predictive of how long the animal would continue to “forage” by choosing the rewarding stimulus. Most interestingly, the cells showed the property of a threshold: When the firing rate was greater than 20 spikes per second, the animal left the patch (Figure 12.13).

The hypothesis that the ACC plays a critical role in foraging-like decisions is further supported by fMRI studies with humans (Kolling et al., 2012). When participants made choices about where to sample in a virtual world, the BOLD response in ACC correlated positively with search value (explore) and negatively with encounter value (exploit), regardless of which choice the participants made. In this condition, ventromedial regions of the PFC did not signal overall value. If, however, the experimenters modified the task so that participants were engaged in a comparison decision, activation in VMPFC reflected the value of the chosen option. Taken together, these studies suggest that ACC signals exert a type of control by promoting a particular behavior: exploring the environment for alternatives that may be better than the current course of action (Rushworth et al., 2012).

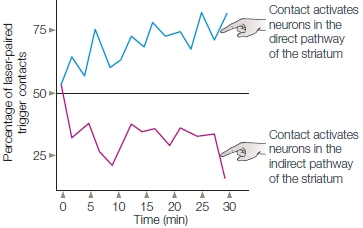

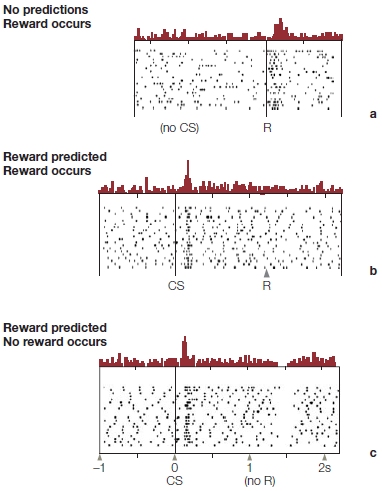

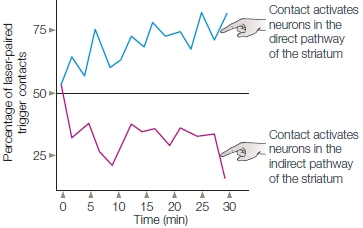

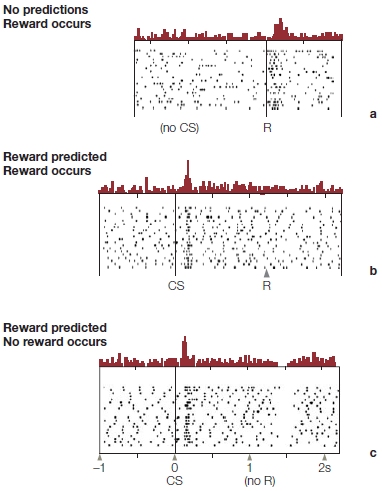

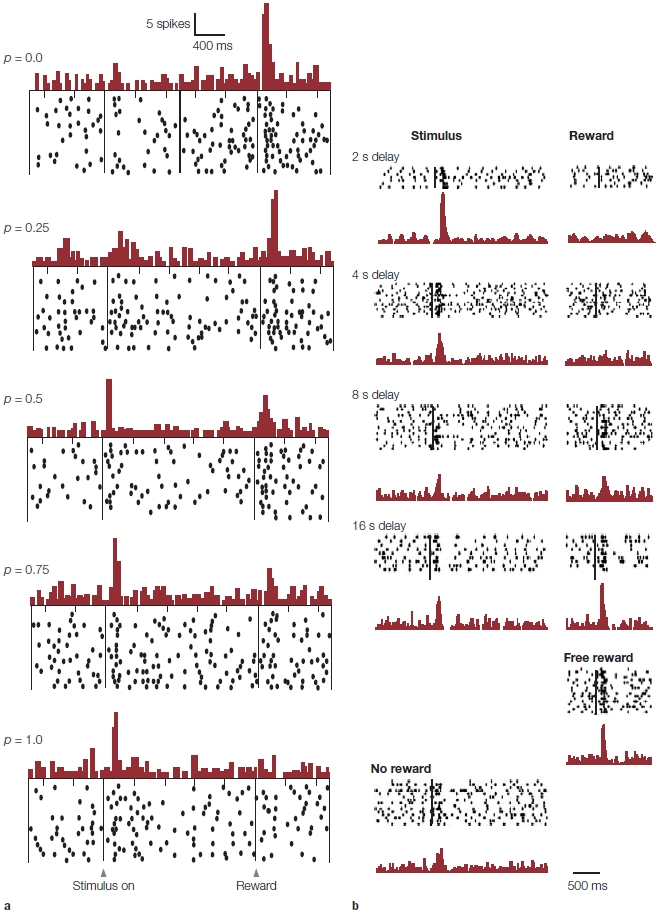

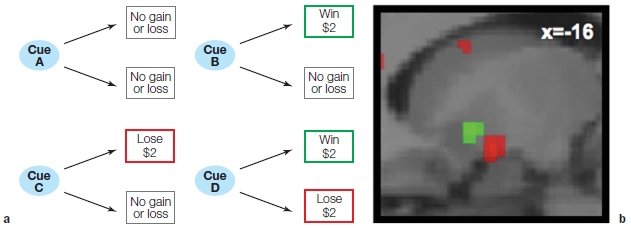

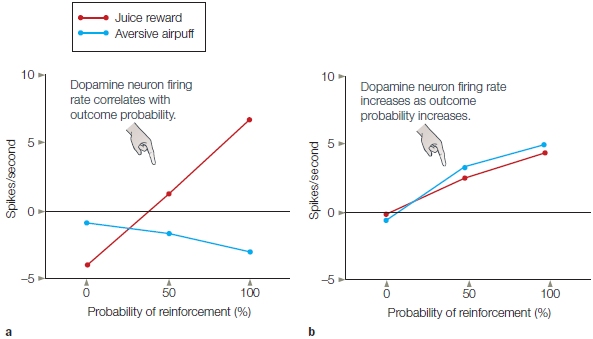

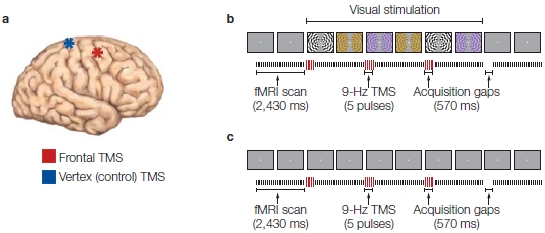

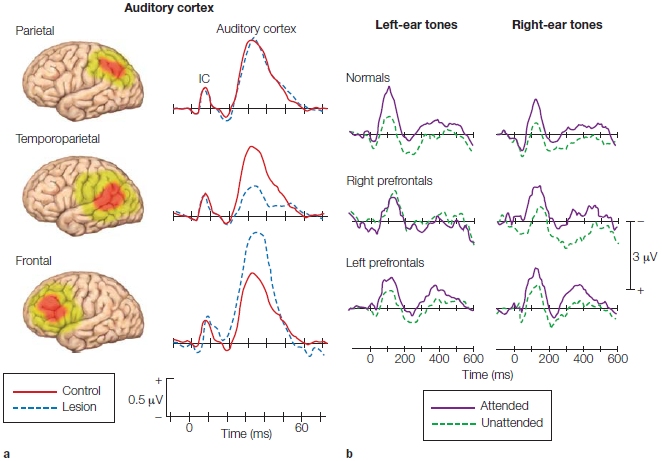

Dopamine Activity and Reward Processing