The anatomy of language

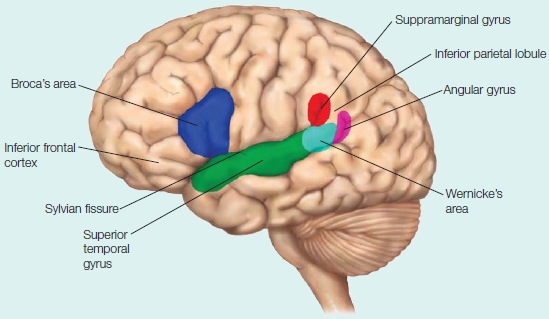

Language processing is located primarily in the left hemisphere. Many regions located on and around the Sylvian fissure form a language processing network.

“Language is to the mind more than light is to the eye.” ~ William Gibson

Chapter 11

Language

OUTLINE

The Anatomy of Language

Brain Damage and Language Deficits

The Fundamentals of Language in the Human Brain

Language Comprehension

Neural Models of Language Comprehension

Neural Models of Speech Production

Evolution of Language

H.W., a World War II veteran, was 60, robust and physically fit, and running his multimillion-dollar business when he suffered a massive left-hemisphere stroke. After partially recovering, H.W. was left with a slight right-sided hemiparesis (muscle weakness) and a slight deficit in face recognition. His intellectual abilities were unaffected, and in a test of visuospatial reasoning, he ranked between the 91st and 95th percentiles for his age. When he decided to return to the helm of his company, he enlisted the help of two of his sons because he had been left with a difficult language problem: He couldn’t name most objects.

H.W. suffered from a severe anomia, the inability to find the words to label things in the world. Testing revealed that H.W. could retrieve adjectives better than verbs, and that his retrieval of nouns was the most severely affected. He could understand both spoken and written language; his problem was naming objects, not speaking per se. As the case of H.W. shows, anomia can be strikingly discrete. In one test where he was shown 60 items and asked to name them, H.W. could name only one item, a house. He was also impaired in word repetition tests, oral reading of words and phrases, and generating numbers. Although he suffered what most would consider a devastating brain injury, H.W. was able to compensate for his loss. He could hold highly intellectual conversations through a combination of circumlocutions, pointing, pantomiming, and drawing the first letter of the word he wanted to say. For instance, in response to a question about where he grew up, he replied to researcher Margaret Funnell:

| Speaker | Utterance |
|---|---|
| H.W.: | It was, uh... leave out of here and where’s the next legally place down from here (gestures down). |
| M.F.: | Down? Massachusetts. |
| H.W.: | Next one (gestures down again). |
| M.F.: | Connecticut. |
| H.W.: | Yes. And that’s where I was. And at that time, the closest people to me were this far away (holds up five fingers). |
| M.F.: | Five miles? |
| H.W.: | Yes, okay, and, and everybody worked outside but I also, I went to school at a regular school. And when you were in school, you didn’t go to school by people brought you to school, you went there by going this way (uses his arms to pantomime walking). |
| M.F.: | By walking. |
| H.W.: | And to go all the way from there to where you where you went to school was actually, was, uh, uh (counts in a whisper) twelve. |
| M.F.: | Twelve miles? |
| H.W.: | Yes, and in those years you went there by going this way (pantomimes walking). When it was warm, I, I found an old one of these (uses his arms to pantomime bicycling). |
| M.F.: | Bicycle. |
| H.W.: | And I, I fixed it so it would work and I would use that when it was warm and when it got cold you just, you do this (pantomimes walking). |

(Funnell et al., 1996, p. 180)

Though H.W. was unable to produce nouns to describe aspects of his childhood, he did use proper grammatical structures and was able to pantomime the words he wanted. He was acutely aware of his deficits.

H.W.’s problem was not one of object knowledge. He knew what an object was and what it was used for. He simply could not produce the word. He also knew that when he saw the word he wanted, he would recognize it. To demonstrate this, researchers gave him a description of something and asked how sure he was that he could pick the correct word for it from a list of 10 words. For example, when asked whether he knew the name of the automobile instrument that measures mileage, he said he was 100% sure he would recognize the word.

H.W.’s case shows that retrieving object knowledge is not the same as retrieving the linguistic label (the name of the object). You may have experienced this yourself: Sometimes when you try to say someone’s name, you can’t come up with the correct one, but when someone tries to help you by mentioning a bunch of names, you know for sure which ones are not correct. This experience is called the tip-of-the-tongue phenomenon. H.W.’s problems further illustrate that the ability to produce speech is not the same as the ability to comprehend language, and indeed the networks involved in language comprehension and language production differ.

Of all the higher functions humans possess, language is perhaps the most specialized and refined and may well be what most clearly distinguishes our species. Although animals have sophisticated systems for communication, the abilities of even the most prolific of our primate relatives are far inferior to those of humans. Because there is no animal homolog for human language, language is less well understood than sensation, memory, or motor control. Human language arises from the abilities of the brain and thus is called a natural language. It can be written, spoken, and gestured. It uses symbolic coding of information to communicate both concrete information and abstract ideas. Human language can convey information about the past, the present, and our plans for the future. Language allows humans to pass information between social partners. We also can gain information from those who are not present as well as from those who are no longer alive. Thus, we can learn from our own experiences and from those of previous generations (if we are willing to).

In this chapter, we concentrate on the cognitive neuroscience of language: how language arises from the structure and function of the human brain. Our current understanding began with the 19th-century researchers who investigated the topic through the study of patients with language deficits. Their findings produced the “classical model” of language, which emphasized that specific brain regions performed specific tasks, such as language comprehension and language production.

In the 1960s, researchers developed a renewed interest in studying patients with language deficits to understand the neural structures that enable language. At the same time, psycholinguistics, a branch of psychology and linguistics, used a different approach, concentrating on the cognitive processes underlying language. Cognitive neuroscience incorporates these neuropsychological and psycholinguistic approaches to investigate how humans comprehend and produce language. The development of new tools, such as ERP recordings and high-resolution functional and structural neuroimaging (Chapter 3), has accelerated our discovery of the brain bases of language, creating a revolution in cognitive neuroscience. The classical model of language is now being replaced by a new language systems approach, in which investigators are identifying brain networks that support human language and revealing the computational processes they enable.

In this chapter, we discuss how the brain derives meaning from both auditory speech input and visual language input, and how it in turn produces spoken and written language output to communicate meaning to others. We begin with a quick anatomical overview and then describe what we have learned from patients with language deficits. Next, with the help of psycholinguistic and cognitive neuroscience methods, we look at what we know about language comprehension and production and some current neuroanatomical models. Finally, we consider how this miraculous human mental faculty may have arisen through the course of primate evolution.

ANATOMICAL ORIENTATION

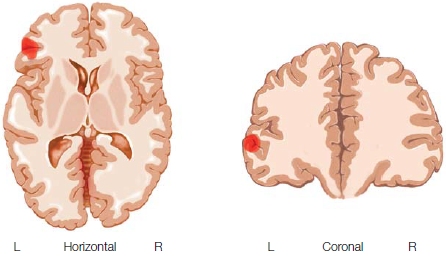

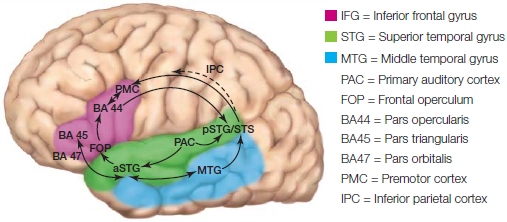

The anatomy of language

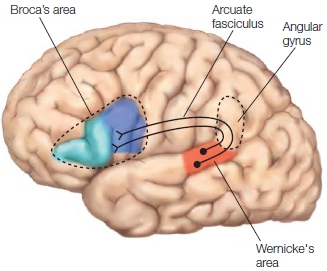

Language processing is located primarily in the left hemisphere. Many regions located on and around the Sylvian fissure form a language processing network.

The Anatomy of Language

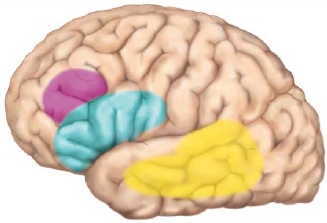

Split-brain patients, as well as patients with lateralized, focal brain lesions, have taught us that a great deal of language processing is lateralized to the left-hemisphere regions surrounding the Sylvian fissure. Neuroimaging data, cortical stimulation mapping, and electrical and magnetic brain recording methods are revealing the details of the neuroanatomy of language. Language areas include the left temporal cortex, including Wernicke’s area in the posterior superior temporal gyrus; portions of the left anterior temporal cortex; the inferior parietal lobe, which includes the supramarginal gyrus and the angular gyrus; the left inferior frontal cortex, which includes Broca’s area; and the left insular cortex (see the Anatomical Orientation box). Collectively, these brain areas and their interconnections form the left perisylvian language network of the human brain, so called because they surround the Sylvian fissure (Hagoort, 2013).

The left hemisphere may do the lion’s share of language processing, but the right hemisphere does make some contributions. The right superior temporal sulcus plays a role in processing the rhythm of language (prosody), and the right prefrontal cortex, middle temporal gyrus, and posterior cingulate activate when sentences have metaphorical meaning.

Language production, perception (think lip reading and sign language), and comprehension also involve both motor movement and timing. Thus, all the cortical (premotor cortex, motor cortex, and supplementary motor area, or SMA) and subcortical (thalamus, basal ganglia, and cerebellum) structures involved with motor movement and timing that we discussed in Chapter 8 make key contributions to our ability to communicate (Kotz & Schwartze, 2010).

TAKE-HOME MESSAGES

Brain Damage and Language Deficits

Before the advent of neuroimaging, most of what was discerned about language processing came from studying patients who had brain lesions that resulted in aphasia. Aphasia is a broad term referring to the collective deficits in language comprehension and production that accompany neurological damage, even when the articulatory mechanisms are intact. Aphasia may also be accompanied by speech problems caused by the loss of control over articulatory muscles, known as dysarthria, and by deficits in the motor planning of articulations, called speech apraxia. Aphasia is extremely common following brain damage. Approximately 40% of all strokes (usually those located in the left hemisphere) produce some aphasia, though it may be transient. In many patients, however, the aphasic symptoms persist, causing lasting problems in producing or understanding spoken and written language.

Broca’s Aphasia

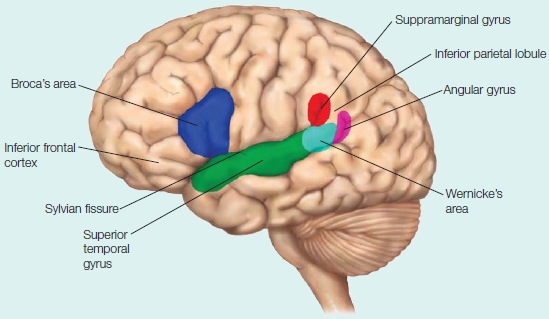

FIGURE 11.1 Broca’s area.

(a) The preserved brain of Leborgne (Broca’s patient “Tan”), which is maintained in a Paris museum. (b) Shading identifies the area in the left hemisphere known as Broca’s area.

Broca’s aphasia, also known as anterior aphasia, nonfluent aphasia, expressive aphasia, or agrammatic aphasia, is the oldest and perhaps best-studied form of aphasia. It was first clearly described by the Parisian physician Paul Broca in the 19th century. He performed an autopsy on a patient who, for several years before his death, could speak only a single word: “tan.” Broca observed that the patient had a brain lesion in the posterior portion of the left inferior frontal gyrus, a region made up of the pars triangularis and pars opercularis and now referred to as Broca’s area (Figure 11.1). After studying many patients with language problems, Broca also concluded that the brain areas that produce speech are localized in the left hemisphere.

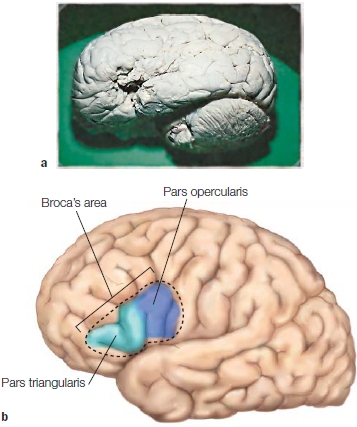

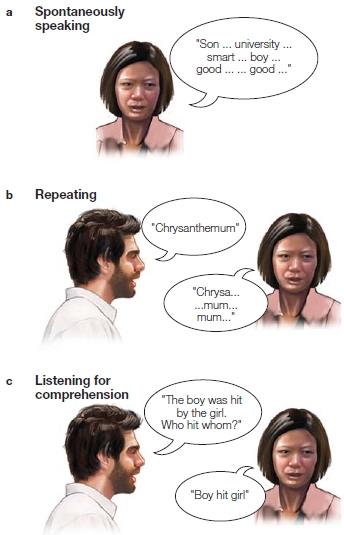

In the most severe forms of Broca’s aphasia, single-utterance patterns of speech, such as that of Broca’s original patient, are often observed. The variability is large, however, and may include unintelligible mutterings, single syllables or words, short simple phrases, sentences that mostly lack function words or grammatical markers, or idioms such as “Barking up the wrong tree.” Sometimes the ability to sing normally is undisturbed, as might be the ability to recite phrases and prose, or to count. The speech of Broca’s aphasics is often telegraphic, coming in uneven bursts, and very effortful (Figure 11.2a). Finding the appropriate word or combination of words and then executing the pronunciation is compromised. This condition is often accompanied by apraxia of speech (Figure 11.2b). Broca’s aphasics are aware of their errors and have a low tolerance for frustration.

Broca’s notion that these aphasics had only a disorder in speech production, however, is not correct. They can also have comprehension deficits related to syntax (rules governing how words are put together in a sentence). Often only the most basic and overlearned grammatical forms are produced and comprehended, a deficit known as agrammatic aphasia. For example, consider the following sentences: “The boy kicked the girl” and “The boy was kicked by the girl.” The first sentence can be understood from word order, and Broca’s aphasics understand such sentences fairly well. But the second sentence has a more complicated grammar, and in such cases Broca’s aphasics would misunderstand who kicked whom (Figure 11.2c).

When Broca first described this disorder, he related it to damage to the cortical region now known as Broca’s area (see Figure 11.1b). Challenges to the idea that Broca’s area was responsible for speech deficits seen in aphasia have been raised since Broca’s time. For example, aphasiologist Nina Dronkers (1996) at the University of California, Davis, reported 22 patients with lesions in Broca’s area, as defined by neuroimaging, but only 10 of these patients had Broca’s aphasia.

Broca never dissected the brain of his original patient, Leborgne, and therefore could not determine whether there was damage to structures beneath the visible surface of the brain. Leborgne’s brain was preserved and is now housed in the Musée Dupuytren in Paris (as is Broca’s brain). Recent high-resolution MRI scans showed that Leborgne’s lesions extended into regions underlying the superficial cortical zone of Broca’s area and included the insular cortex and portions of the basal ganglia (Dronkers et al., 2007). This finding suggested that damage to the classic frontal cortical region known as Broca’s area may not be solely responsible for the speech production deficits of Broca’s aphasics.

FIGURE 11.2 Speech problems in Broca’s aphasia.

Broca’s aphasics can have various problems when they speak or when they try to comprehend or repeat the linguistic input provided by the clinician. (a) The speech output of this patient is slow and effortful, and it lacks function words. It resembles a telegram. (b) Broca’s aphasics also may have accompanying problems with speech articulation because of deficits in regulation of the articulatory apparatus (e.g., muscles of the tongue). (c) Finally, these patients sometimes have a hard time understanding reversible sentences, where a full understanding of the sentence depends on correct syntactic assignment of the thematic roles (e.g., who hit whom?).

Wernicke’s Aphasia

Wernicke’s aphasia, also known as posterior aphasia or receptive aphasia, was first fully described by the German physician Carl Wernicke and is primarily a disorder of language comprehension. Patients with this syndrome have difficulty understanding spoken or written language and sometimes cannot understand language at all. Although their speech is fluent, with normal prosody and grammar, what they say is nonsensical.

In performing autopsies on his patients who showed language comprehension problems, Wernicke discovered damage in the posterior regions of the superior temporal gyrus, which has since become known as Wernicke’s area (Figure 11.3). Because auditory processing occurs nearby (anteriorly) in the superior temporal cortex within Heschl’s gyri, Wernicke deduced that this more posterior region participated in the auditory storage of words, serving as an auditory memory area for words. This view is no longer widely held. As with Broca’s aphasia and Broca’s area, the relationship between brain lesion and language deficit in Wernicke’s aphasia is inconsistent: Lesions that spare Wernicke’s area can also lead to comprehension deficits.

More recent studies have revealed that dense and persistent Wernicke’s aphasia occurs only if there is damage to Wernicke’s area and the surrounding cortex of the posterior temporal lobe, or damage to the underlying white matter that connects temporal lobe language areas to other brain regions. Thus, although Wernicke’s area remains at the center of a posterior region of the brain whose functioning is required for normal comprehension, lesions confined to Wernicke’s area produce only temporary Wernicke’s aphasia. It appears that damage to this area alone does not cause the lasting syndrome. Instead, secondary damage due to tissue swelling in surrounding regions contributes to the most severe problems. When the swelling around the lesioned cortex subsides, comprehension improves.

FIGURE 11.3 Lateral view of the left hemisphere language areas and dorsal connections.

Wernicke’s area is shown shaded in red. The arcuate fasciculus is the bundle of axons that connects Wernicke’s and Broca’s areas. It originates in Wernicke’s area, goes through the angular gyrus, and terminates on neurons in Broca’s area.

Conduction Aphasia

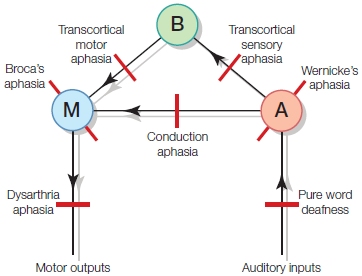

In the 1880s, Ludwig Lichtheim introduced the idea of a third region that stored conceptual information about words, rather than the words themselves. Once a word was retrieved from word storage, he proposed, the word information was sent to the concept area, which supplied everything associated with the word. Lichtheim first described the classical localizationist model (Figure 11.4), in which linguistic information, namely word storage (A = Wernicke’s area) and speech planning (M = Broca’s area), and conceptual information stores (B) are located in separate brain regions interconnected by white matter tracts. The white matter tract that runs from Wernicke’s area to Broca’s area is the arcuate fasciculus. Wernicke predicted that a certain type of aphasia should result from damage to its fibers. It was not until the late 1950s, when neurologist Norman Geschwind became interested in aphasia and the neurological basis of language, that Wernicke’s connection idea resurfaced (Geschwind, 1967). Disconnection syndromes, such as conduction aphasia, have been observed with damage to the arcuate fasciculus (see Figure 11.3).

Conduction aphasics can understand words that they hear or see and can hear their own speech errors, but they cannot repair them. They have problems producing spontaneous speech as well as repeating speech, and sometimes they use words incorrectly. Recall that H.W. was impaired in word-repetition tasks. Similar symptoms, however, are also evident with lesions to the insula and portions of the auditory cortex. One explanation for this similarity may be that damage to other nerve fibers is not detected, or that connections between Wernicke’s area and Broca’s area are not as strong as connections between the more widely spread anterior and posterior language areas outside these regions. Indeed, we now realize that the emphasis should not really be on Broca’s and Wernicke’s areas, but on the brain regions currently understood to be better correlated with the syndromes of Broca’s aphasia and Wernicke’s aphasia. Considered in this way, a lesion to the area surrounding the insula could disconnect comprehension from production areas.

We could predict from the model in Figure 11.4 that damage to the connections between the conceptual representation area (B) and Wernicke’s area (A) would harm the ability to comprehend spoken input but not the ability to repeat what was heard (this is known as transcortical sensory aphasia). Such problems do result from lesions in the region of the supramarginal and angular gyri. These patients retain the ability to repeat what they have heard, and even to correct grammatical errors when they repeat it, but they are unable to understand its meaning. These findings have been interpreted as evidence that this aphasia may come from losing access to semantic information (the meaning of a word) without losing syntactic (grammatical) or phonological abilities. A third disconnection syndrome, transcortical motor aphasia, results from a disconnection between the concept centers (B) and Broca’s area (M) while the pathway between Wernicke’s area and Broca’s area remains intact. This condition produces symptoms similar to Broca’s aphasia, yet with the preserved ability to repeat heard phrases. Indeed, these patients may compulsively repeat phrases, a behavior known as echolalia. Finally, global aphasia is a devastating syndrome that results in the inability to both produce and comprehend language. Typically, this type of aphasia is associated with extensive left-hemisphere damage, including Broca’s area, Wernicke’s area, and regions between them.

FIGURE 11.4 Lichtheim’s classical model of language processing.

The area that stores permanent information about word sounds is represented by A. The speech planning and programming area is represented by M. Conceptual information is stored in area B. The arrows indicate the direction of information flow. This model formed the basis of predictions that lesions in the three main areas, or in the connections between the areas, or the inputs to or outputs from these areas, could account for seven main aphasic syndromes. The locations of possible lesions are indicated by the red line segments. A = Wernicke’s area. B = conceptual information stores. M = Broca’s area.
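Lichtheim’s predictions can be made concrete by treating the model as a small directed graph and asking which abilities still have an intact route after a lesion. The Python sketch below is a deliberate simplification, not Lichtheim’s own formalism: the node labels "in" and "out" (auditory input and articulatory output), the three routes, and the function name `predict` are illustrative assumptions, and each ability is treated as simply intact or lost.

```python
from typing import Dict, FrozenSet, Tuple

# Nodes follow Figure 11.4: A = Wernicke's area (word sounds), B = concepts,
# M = Broca's area (speech planning). "in" and "out" are assumed stand-ins
# for auditory input and articulatory output.
Edge = Tuple[str, str]

# Each ability is assumed to need every node and connection on its route.
ROUTES: Dict[str, Tuple[str, ...]] = {
    "comprehension": ("in", "A", "B"),
    "repetition": ("in", "A", "M", "out"),
    "spontaneous speech": ("B", "M", "out"),
}


def predict(lesioned_nodes: FrozenSet[str] = frozenset(),
            lesioned_edges: FrozenSet[Edge] = frozenset()) -> Dict[str, bool]:
    """Return True for each ability whose route avoids every lesion."""
    result = {}
    for ability, route in ROUTES.items():
        nodes_ok = not any(node in lesioned_nodes for node in route)
        edges_ok = not any(edge in lesioned_edges
                           for edge in zip(route, route[1:]))
        result[ability] = nodes_ok and edges_ok
    return result


if __name__ == "__main__":
    cases = {
        "Broca's aphasia (lesion M)": predict(lesioned_nodes=frozenset({"M"})),
        "Wernicke's aphasia (lesion A)": predict(lesioned_nodes=frozenset({"A"})),
        "conduction aphasia (cut A-M)": predict(lesioned_edges=frozenset({("A", "M")})),
        "transcortical sensory aphasia (cut A-B)": predict(lesioned_edges=frozenset({("A", "B")})),
        "transcortical motor aphasia (cut B-M)": predict(lesioned_edges=frozenset({("B", "M")})),
        "global aphasia (lesion A and M)": predict(lesioned_nodes=frozenset({"A", "M"})),
    }
    for name, abilities in cases.items():
        print(f"{name}: {abilities}")
```

Cutting the A-B connection abolishes comprehension but leaves repetition intact, while cutting A-M spares comprehension but abolishes repetition, matching the transcortical sensory and conduction patterns. The sketch omits the input and output lesions of the full model and cannot capture the nonsensical content of fluent Wernicke’s aphasia output.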

Although the classical localizationist model could account for many findings, it could not explain all of the neurological observations, nor can it explain current neuroimaging findings. Studies in patients with specific aphasic syndromes have revealed that the classical model’s assumption that only Broca’s and Wernicke’s areas are associated with Broca’s aphasia and Wernicke’s aphasia, respectively, is incorrect. Part of the problem is that the original lesion localizations were not very precise. Another part of the problem lies in the classification of the syndromes themselves: Both Broca’s and Wernicke’s aphasias are associated with a mixed bag of symptoms and do not present as purely production and purely comprehension deficits, respectively. As we have seen, Broca’s aphasics may have both apraxia of speech and problems with comprehension, which involve quite different processes. It is not at all surprising that this variety of language functions is supported by more than Broca’s area. The tide has turned away from a purely localizationist view, and scientists have begun to assume that language emerges from a network of brain regions and their connections. You may also have noticed that the original models of language were mostly concerned with the recognition and production of individual words, whereas, as we discuss later in the chapter, language entails much more than that.

Information about language deficits following brain damage and studies in split-brain patients (see Chapter 4) have provided a wealth of information about the organization of human language in the brain, specifically identifying a left hemisphere language system. Language, however, is a vastly complicated cognitive system. To understand it, we need to know much more than merely the gross functional anatomy of language. We need to learn a bit about language itself.

TAKE-HOME MESSAGES

The Fundamentals of Language in the Human Brain

Words and the Representation of Their Meaning

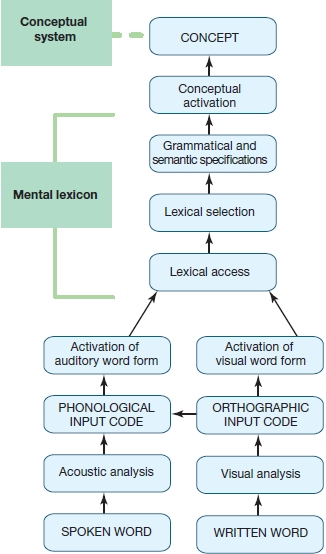

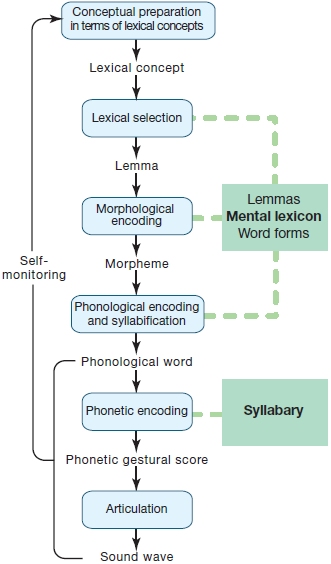

Let’s begin with some simple questions. How does the brain cope with spoken, signed, and written input to derive meaning? And how does the brain produce spoken, signed, and written output to communicate meaning to others? We can tackle these questions by laying out the aspects of language we need to consider in this chapter. First, the brain must store words and concepts. One of the central concepts in word (lexical) representation is the mental lexicon, a mental store of information about words that includes semantic information (the words’ meanings), syntactic information (how the words are combined to form sentences), and the details of word forms (their spellings and sound patterns). Most theories agree that the mental lexicon plays a central role in language. Some theories, however, propose a single mental lexicon for both language comprehension and production, whereas other models distinguish between input and output lexica. In addition, any model must account for the representation of orthographic (vision-based) and phonological (sound-based) forms. The principal concept, though, is that a store (or stores) of information about words exists in the brain. Words that we hear, or see signed or written, must of course first be analyzed perceptually.

Once words are perceptually analyzed, three general functions are hypothesized: lexical access, lexical selection, and lexical integration. Lexical access refers to the stage(s) of processing in which the output of perceptual analysis activates word-form representations in the mental lexicon, including their semantic and syntactic attributes. Lexical selection is the next stage, in which the lexical representation in the mental lexicon that best matches the input is identified (selected). Finally, to understand the whole message, lexical integration combines words into the full sentence, discourse, or larger context. Grammar and syntax are the rules by which lexical items are organized in a particular language to produce the intended meaning. We must consider not only how we comprehend language but also how we produce it, whether as spoken utterances, signs, or writing. First things first, though: We begin by considering the mental lexicon, the brain’s store of words and concepts, and ask how it might be organized and how it might be represented in the brain.
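As a rough illustration of the first two stages, the Python sketch below represents lexical entries as records holding a word form plus stand-ins for semantic and syntactic information, activates candidate entries (access), and picks the best match (selection). The entry fields, the tiny lexicon, and both matching rules (a shared first letter for access, letter-by-letter overlap for selection) are hypothetical simplifications chosen for clarity; they are not claims about how the brain computes.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LexicalEntry:
    """Hypothetical entry in a toy mental lexicon."""
    form: str      # word form (spelling)
    meaning: str   # stand-in for semantic information
    category: str  # stand-in for syntactic information


LEXICON = [
    LexicalEntry("cat", "small domesticated feline", "noun"),
    LexicalEntry("cap", "soft head covering", "noun"),
    LexicalEntry("car", "road vehicle with an engine", "noun"),
    LexicalEntry("run", "move quickly on foot", "verb"),
]


def lexical_access(percept: str, lexicon: List[LexicalEntry]) -> List[LexicalEntry]:
    """Access: activate every entry whose form begins with the percept's first letter."""
    return [entry for entry in lexicon if entry.form[:1] == percept[:1]]


def letter_overlap(a: str, b: str) -> int:
    """Count positions at which two strings share the same letter."""
    return sum(x == y for x, y in zip(a, b))


def lexical_selection(percept: str, candidates: List[LexicalEntry]) -> LexicalEntry:
    """Selection: choose the activated entry whose form best matches the percept."""
    return max(candidates, key=lambda entry: letter_overlap(percept, entry.form))


candidates = lexical_access("car", LEXICON)      # cat, cap, and car are activated
selected = lexical_selection("car", candidates)  # car wins with 3 matching letters
print(selected.form, selected.category, selected.meaning)
```

Once selected, the entry’s syntactic and semantic fields would be handed on to lexical integration, which this sketch does not attempt.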

A normal adult speaker has passive knowledge of about 50,000 words and yet can easily recognize and produce about three words per second. Given this speed and the size of the database, the mental lexicon must be organized in a highly efficient manner. It cannot be merely the equivalent of a dictionary. If, for example, the mental lexicon were organized in simple alphabetical order, it might take longer to find words in the middle of the alphabet, such as the ones starting with K, L, O, or U, than to find a word starting with an A or a Z. Fortunately, this is not the case.
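To make the alphabetical-order argument concrete, the Python sketch below assumes a hypothetical search that scans a sorted word list from both ends at once and counts the steps needed to reach a target. The word list is an arbitrary toy example. Words near the middle of the list take the most steps, whereas a hash-based lookup such as a Python `dict` does not depend on a word’s alphabetical position.

```python
from typing import List

# Toy "dictionary" in alphabetical order (hypothetical example words).
WORDS: List[str] = sorted(["apple", "kite", "lamp", "orange", "umbrella", "zebra"])


def two_ended_scan_steps(word: str, sorted_words: List[str]) -> int:
    """Count steps for a scan that checks one item from each end per step."""
    lo, hi = 0, len(sorted_words) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        if sorted_words[lo] == word or sorted_words[hi] == word:
            return steps
        lo += 1
        hi -= 1
    raise KeyError(word)


for w in WORDS:
    print(w, two_ended_scan_steps(w, WORDS))

# A hash table finds an entry without scanning, regardless of alphabetical position.
index = {w: position for position, w in enumerate(WORDS)}
print("lamp" in index)
```

Running it reports 1 step for apple and zebra but 3 steps for lamp and orange, the words in the middle of the list.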

Instead, the mental lexicon has other organizational principles that help us quickly get from the spoken or written input to the representations of words. First is the representational unit in the mental lexicon, called the morpheme, which is the smallest meaningful unit in a language. As an example, consider the words frost, defrost, and defroster. The root of these words, frost, forms one morpheme; the prefix “de” in defrost changes the meaning of the word frost and is a morpheme as well; and finally, the word defroster consists of three morphemes (adding the morpheme “er”). An example of a word with many morphemes comes from a 2007 New York Times article on language by William Safire, who used the word editorializing. Can you figure out how many morphemes are in this word? A second organizational principle is that more frequently used words are accessed more quickly than less frequently used words; for instance, the word people is more readily available than the word fledgling.
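The frost/defrost/defroster example can be mimicked with a tiny rule-based segmenter. The Python sketch below assumes hypothetical inventories of one root (frost), one prefix (de), and one suffix (er), and recursively peels affixes off a word until a known root remains; real morphological analysis needs far larger inventories and spelling rules.

```python
from typing import List, Optional

# Hypothetical, deliberately tiny morpheme inventories.
ROOTS = {"frost"}
PREFIXES = ("de",)
SUFFIXES = ("er",)


def segment(word: str) -> Optional[List[str]]:
    """Split word into known morphemes, or return None if no parse exists."""
    if word in ROOTS:
        return [word]
    for prefix in PREFIXES:
        if word.startswith(prefix):
            rest = segment(word[len(prefix):])
            if rest is not None:
                return [prefix] + rest
    for suffix in SUFFIXES:
        if word.endswith(suffix):
            rest = segment(word[:-len(suffix)])
            if rest is not None:
                return rest + [suffix]
    return None


for w in ("frost", "defrost", "defroster"):
    parts = segment(w)
    print(w, parts, len(parts))
```

With these inventories, frost yields one morpheme, defrost two, and defroster three, as in the text.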

A third organizing principle is the lexical neighborhood, which consists of those words that differ from any single word by only one phoneme or one letter (e.g., bat, cat, hat, sat). A phoneme is the smallest unit of sound that makes a difference to meaning. In English, the sounds for the letters L and R are two phonemes (the words late and rate mean different things), but in the Japanese language, no meaningful distinction is made between L and R, so they are represented by only one phoneme. Behavioral studies have shown that words having more neighbors are identified more slowly during language comprehension than words with few neighbors (e.g., bat has many neighbors, but sword does not). The idea is that there may be competition between the brain representations of different words during word recognition—and this phenomenon tells us something about the organization of our mental lexicon. Specifically, words with many overlapping phonemes or letters must be organized together in the brain, such that when incoming words access one word representation, others are also initially accessed, and selection among candidate words must occur, which takes time.
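The notion of a lexical neighborhood is easy to compute for written words. The Python sketch below counts orthographic neighbors only, treats a neighbor as a word formed by substituting exactly one letter (as in bat, cat, hat, sat), and uses a small hypothetical lexicon; phoneme-based neighborhoods would need phonetic transcriptions instead of spellings.

```python
from string import ascii_lowercase
from typing import Set

# Small hypothetical lexicon for illustration.
LEXICON: Set[str] = {"bat", "cat", "hat", "sat", "bit", "bag", "sword", "words"}


def neighbors(word: str, lexicon: Set[str]) -> Set[str]:
    """Return lexicon words that differ from word by exactly one letter substitution."""
    found = set()
    for i, original in enumerate(word):
        for letter in ascii_lowercase:
            if letter == original:
                continue
            candidate = word[:i] + letter + word[i + 1:]
            if candidate in lexicon:
                found.add(candidate)
    return found


print(sorted(neighbors("bat", LEXICON)))
print(sorted(neighbors("sword", LEXICON)))
```

Here bat has five neighbors, whereas sword has none (words contains the same letters but is not a one-letter substitution), mirroring the bat-versus-sword contrast in the text.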

A fourth organizing factor for the mental lexicon is the semantic (meaning) relationships between words. Support for the idea that representations in the mental lexicon are organized according to meaningful relationships between words comes from semantic priming studies that use a lexical (word) decision task. In a semantic priming study, participants are presented with pairs of words. The first member of the word pair, the prime, is a word; the second member, the target, can be a real word (truck), a nonword (like rtukc), or a pseudoword (a word that follows the phonological rules of a language but is not a real word, like trulk). If the target is a real word, it can be related or unrelated in meaning to the prime. For the task, participants must decide as quickly and accurately as possible whether the target is a word (i.e., make a lexical decision) and press a button to indicate their decision. Participants are faster and more accurate at making the lexical decision when the target is preceded by a related prime (e.g., the prime car for the target truck) than by an unrelated prime (e.g., the prime sunny for the target truck). Similar patterns are found when participants are simply asked to read the target aloud and only real words are presented: Naming latencies are shorter for words related to the prime than for unrelated words. What does this pattern of facilitated response speed tell us about the organization of the mental lexicon? It reveals that words related in meaning must somehow be organized together in the brain, such that activation of the representation of one word also activates words that are related in meaning. This makes words easier to recognize when they follow a related word that primes their meaning.

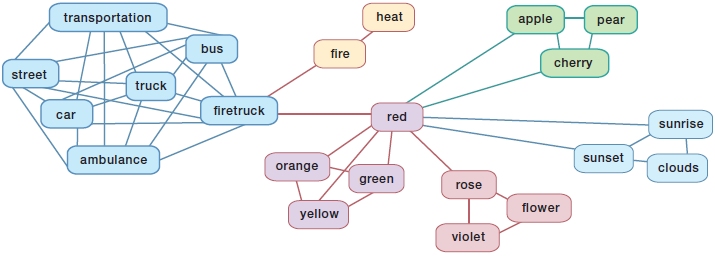

Models of the Mental Lexicon

Several models have been proposed to explain the effects of semantic priming during word recognition. In an influential model proposed by Collins and Loftus (1975), word meanings are represented in a semantic network in which words, represented by conceptual nodes, are connected with each other. Figure 11.5 shows an example of a semantic network. The strength of the connection and the distance between the nodes are determined by the semantic relations or associative relations between the words. For example, the node that represents the word car will be close to and have a strong connection with the node that represents the word truck.

A major component of this model is the assumption that activation spreads from one conceptual node to others, and nodes that are closer together will benefit more from this spreading activation than will distant nodes. If we hear “car,” the node that represents the word car in the semantic network will be activated. In addition, words like truck and bus that are closely related to the meaning of car, and are therefore nearby and well connected in the semantic network, will also receive a considerable amount of activation. In contrast, a word like rose most likely will receive no activation at all when we hear “car.” This model predicts that hearing “car” should facilitate recognition of the word truck but not of the word rose, and this is indeed what is observed.
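Collins and Loftus described spreading activation verbally; the Python sketch below is one hypothetical way to compute it, not their model. It assumes symmetric weighted links, a multiplicative decay of 0.5 per link, and a cutoff below which activation is ignored; all words, weights, and parameter values are invented for illustration, loosely following Figure 11.5.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical links and strengths, loosely modeled on Figure 11.5.
EDGES: List[Tuple[str, str, float]] = [
    ("car", "truck", 0.8),
    ("car", "bus", 0.7),
    ("truck", "firetruck", 0.6),
    ("firetruck", "fire", 0.7),
    ("rose", "flower", 0.8),
]


def build_graph(edges: List[Tuple[str, str, float]]) -> Dict[str, Dict[str, float]]:
    """Build a symmetric weighted adjacency map."""
    graph: Dict[str, Dict[str, float]] = defaultdict(dict)
    for a, b, weight in edges:
        graph[a][b] = weight
        graph[b][a] = weight
    return graph


def spread_activation(graph, source, decay=0.5, threshold=0.01):
    """Propagate activation outward; each link passes on activation * weight * decay."""
    activation = {source: 1.0}
    frontier = [source]
    while frontier:
        next_frontier = []
        for node in frontier:
            for neighbor, weight in graph[node].items():
                passed = activation[node] * weight * decay
                # Keep only the strongest activation a node receives; ignore tiny amounts.
                if passed > threshold and passed > activation.get(neighbor, 0.0):
                    activation[neighbor] = passed
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return activation


activation = spread_activation(build_graph(EDGES), "car")
for word in ("truck", "bus", "firetruck", "fire", "rose"):
    print(word, round(activation.get(word, 0.0), 3))
```

With these settings, truck and bus receive substantial activation, firetruck and fire receive progressively less, and rose receives none because it is not connected to car.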

Although the semantic-network model that Collins and Loftus proposed has been extremely influential, the way that word meanings are organized is still a matter of dispute and investigation. There are many other models and ideas of how conceptual knowledge is represented. Some models propose that words that co-occur in our language prime each other (e.g., cottage and cheese), and others suggest that concepts are represented by their semantic features or semantic properties. For example, the word dog has several semantic features, such as “is animate,” “has four legs,” and “barks,” and these features are assumed to be represented in the conceptual network. Such models face the problem of activation: How many features have to be activated for a person to recognize a dog? For example, dogs can be trained not to bark, yet we still recognize a silent dog as a dog, and we can identify a barking dog that we cannot see. Furthermore, it is not exactly clear how many features would have to be stored. For example, a table could be made of wood or glass, and in both cases we would recognize it as a table. Does this mean that we have to store the features “is made of wood” and “is made of glass” with the table concept? In addition, some words are more “prototypical” examples of a semantic category than others, as reflected in our recognition and production of these words. When we are asked to generate bird names, for example, the word robin comes to mind as one of the first examples, but a word like ostrich might not come up at all, depending on where we grew up or have lived.
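The activation problem for feature-based models can be made concrete with a toy matcher. In this hypothetical sketch, a concept is recognized when the proportion of its stored features present in the input reaches a threshold; the feature lists and the 0.6 threshold are assumptions chosen for illustration.

```python
# Hypothetical feature sets; some features echo the examples in the text.
CONCEPTS = {
    "dog": {"is animate", "has four legs", "barks", "has fur", "wags tail"},
    "cat": {"is animate", "has four legs", "meows", "has fur", "purrs"},
    "table": {"is inanimate", "has four legs", "has flat top"},
}

def match_scores(observed):
    """Proportion of each concept's stored features present in the input."""
    return {name: len(feats & observed) / len(feats) for name, feats in CONCEPTS.items()}

def recognize(observed, threshold=0.6):
    """Return the best-matching concept if its score reaches `threshold`, else None.

    The threshold is an arbitrary assumption; choosing it is exactly the
    'how many features must be active?' problem raised in the text.
    """
    scores = match_scores(observed)
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None

silent_dog = {"is animate", "has four legs", "has fur", "wags tail"}
unseen_barking = {"barks"}
print(recognize(silent_dog), recognize(unseen_barking))
```

A silent but visible dog matches 4 of 5 stored dog features and is recognized, whereas the sound of barking alone matches only 1 of 5 and is rejected, even though people identify an unseen barking dog easily. A single fixed threshold struggles with both cases, which is the difficulty the text describes.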

FIGURE 11.5 Semantic network.

Words that have strong associative or semantic relations are closer together in the network (e.g., car and truck) than are words that have no such relation (e.g., car and clouds). Semantically related words are colored similarly in the figure, and associatively related words (e.g., firetruck–fire) are closely connected.

In sum, how word meanings are represented remains a matter of intense investigation. Whatever the answer, everyone agrees that a mental store of word meanings is crucial to normal language comprehension and production. Evidence from patients with brain damage and from functional brain-imaging studies is revealing how the mental lexicon and conceptual knowledge may be organized.

Neural Substrates of the Mental Lexicon

Through observations of deficits in patients’ language abilities, we can infer a number of things about the functional organization of the mental lexicon. Different types of neurological problems create deficits in understanding and producing the appropriate meaning of a word or concept, as we described earlier. Patients with Wernicke’s aphasia make errors in speech production that are known as semantic paraphasias. For example, they might use the word horse when they mean cow. Patients with deep dyslexia make similar errors in reading: They might read the word horse where cow is written.

Patients with progressive semantic dementia initially show impairments in the conceptual system, while other mental and language abilities are spared. For example, these patients can still understand and produce the syntactic structure of sentences. This impairment has been associated with progressive damage to the temporal lobes, mostly on the left side of the brain, although the superior regions of the temporal lobe that are important for hearing and speech processing are spared (these areas are discussed later, in the subsection on spoken input). Patients with semantic dementia have difficulty assigning objects to a semantic category. In addition, they often name a category when asked to name a picture: a picture of a horse elicits “animal,” and a picture of a robin elicits “bird.” Neurological evidence from a variety of disorders supports the semantic-network idea, because related meanings are substituted, confused, or lumped together, just as we would predict if a system of interconnected nodes that specifies meaning relations were degraded.

In the 1970s and early 1980s, Elizabeth Warrington and her colleagues performed groundbreaking studies on the organization of conceptual knowledge in the brain, beginning with studies of perceptual deficits in patients with unilateral cerebral lesions. We discussed these studies in some detail in Chapter 6, where we considered category-specific agnosias and how they might reflect the organization of semantic (conceptual) memory, so we will only summarize them here. Warrington and her colleagues found that semantic memory problems fell along the lines of semantic categories. Some of these studies were done on patients who would now be classified as having semantic dementia.

Since these original observations by Warrington, many cases of patients with category-specific deficits have been reported, and there appears to be a striking correspondence between the sites of lesions and the type of semantic deficit. The patients whose impairment involved living things had lesions that included the inferior and medial temporal cortex, and often these lesions were located anteriorly. The anterior inferotemporal cortex is located close to areas of the brain that are crucial for visual object perception, and the medial temporal lobe contains important relay projections from association cortex to the hippocampus, a structure that, as you might remember from Chapter 9, has an important function in the encoding of information in long-term memory. Furthermore, the inferotemporal cortex is the end station for “what” information, or the object recognition stream, in vision (see Chapter 6).

Less is known about the localization of lesions in patients who show greater impairment for human-made things, simply because fewer of these patients have been identified and studied. But left frontal and parietal areas appear to be involved in this kind of semantic deficit. These areas are close to or overlap with areas of the brain that are important for sensorimotor functions, and so they are likely to be involved in the representation of actions that can be undertaken when human-made artifacts such as tools are being used.

Correlations between the type of semantic deficit and the area of brain lesion are consistent with a hypothesis by Warrington and her colleagues about the organization of semantic information. They have suggested that the patients’ problems are reflections of the types of information stored with different words in the semantic network. Whereas the biological categories (fruits, foods, animals) rely more on physical properties or visual features (e.g., what is the color of an apple?), human-made objects are identified by their functional properties (e.g., how do we use a hammer?).
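The logic of this sensory/functional account can be illustrated with a toy lesion simulation, loosely in the spirit of the Farah and McClelland (1991) computational model but not a reproduction of it. The feature counts are hypothetical, chosen only so that living things have more visual than functional features and human-made objects the reverse, as the hypothesis assumes.

```python
# Hypothetical feature counts per concept: (domain, visual features, functional features).
# The asymmetry is an illustrative assumption in the spirit of the sensory/functional
# hypothesis, not values taken from any study.
CONCEPTS = {
    "apple":  ("living", 8, 2),
    "tiger":  ("living", 9, 1),
    "hammer": ("nonliving", 3, 6),
    "knife":  ("nonliving", 3, 5),
}

def surviving_fraction(visual, functional, visual_loss, functional_loss):
    """Fraction of a concept's features left after a lesion removes the given
    proportions of visual and functional semantic features."""
    remaining = visual * (1 - visual_loss) + functional * (1 - functional_loss)
    return remaining / (visual + functional)

def category_means(visual_loss=0.0, functional_loss=0.0):
    """Average surviving fraction of features for each domain."""
    by_domain = {}
    for domain, v, f in CONCEPTS.values():
        by_domain.setdefault(domain, []).append(
            surviving_fraction(v, f, visual_loss, functional_loss))
    return {d: sum(xs) / len(xs) for d, xs in by_domain.items()}

print(category_means(visual_loss=0.6))      # living things hit harder
print(category_means(functional_loss=0.6))  # human-made objects hit harder
```

Removing 60% of visual features leaves living things with less of their representation than human-made objects (about 0.49 versus 0.79 of their features on average), and removing 60% of functional features reverses the pattern. As Caramazza’s critique emphasizes, such a dissociation is only informative if the categories are otherwise matched, which this toy simply assumes.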

This hypothesis by Warrington and colleagues has been both supported and challenged. The computational model by Martha Farah and James McClelland (1991), which has been discussed in Chapter 6, supported Warrington’s model. A challenge to Warrington’s proposal comes from observations by Alfonso Caramazza and others (e.g., Caramazza & Shelton, 1998) that the studies in patients did not always use well-controlled linguistic materials. For example, when comparing living things versus human-made things, some studies did not control the stimulus materials to ensure that the objects tested in each category were matched on things like visual complexity, visual similarity across objects, frequency of use, and the familiarity of objects. If these variables differ widely between the categories, then clear-cut conclusions about differences in their representation in a semantic network cannot be drawn. Caramazza has proposed an alternative theory in which the semantic network is organized along lines of the conceptual categories of animacy and inanimacy. He argues that the selective damage that has been observed in brain-damaged patients, as in the studies of Warrington and others, genuinely reflects “evolutionarily adapted domain-specific knowledge systems that are subserved by distinct neural mechanisms” (Caramazza & Shelton, 1998, p. 1).

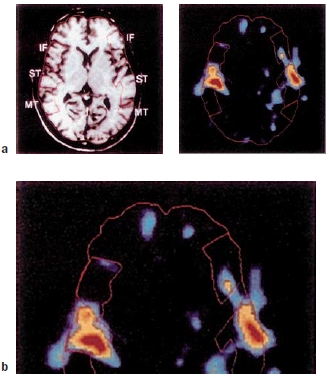

In the 1990s, studies using imaging techniques in neurologically unimpaired human participants looked further into the organization of semantic representations. Alex Martin and his colleagues (1996) at the National Institute of Mental Health (NIMH) conducted studies using PET imaging and functional magnetic resonance imaging (fMRI). Their findings reveal how the intriguing dissociations in neurological patients that we just described can be identified in neurologically normal brains. When participants read the names of or answered questions about animals, or when they named pictures of animals, the more lateral aspects of the fusiform gyrus (on the brain’s ventral surface) and the superior temporal sulcus were activated. But naming animals also activated a brain area associated with the early stages of visual processing—namely, the left medial occipital lobe. In contrast, identifying and naming tools were associated with activation in the more medial aspect of the fusiform gyrus, the left middle temporal gyrus, and the left premotor area, a region that is also activated by imagining hand movements. These findings are consistent with the idea that in our brains, conceptual representations of living things versus human-made tools rely on separable neuronal circuits engaged in processing of perceptual versus functional information.

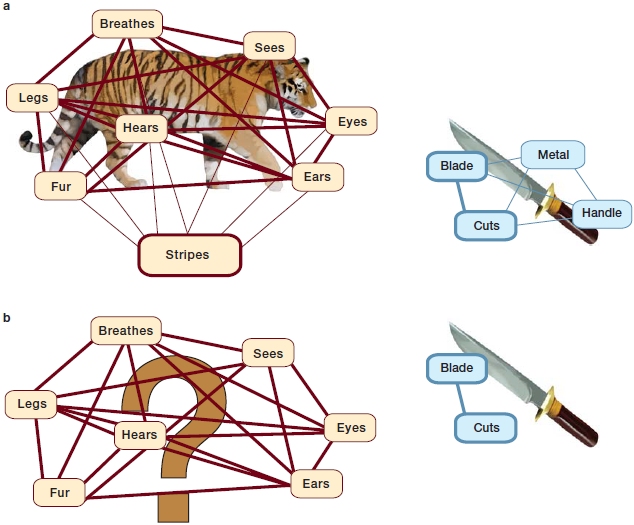

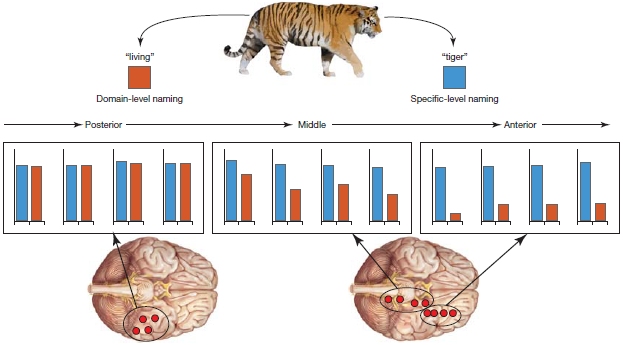

More recently, studies of the representation of conceptual information have indicated that a network connects the posterior fusiform gyrus in the inferior temporal lobe to the left anterior temporal lobe. Lorraine Tyler and her colleagues at the University of Cambridge (Taylor et al., 2011) have studied how concepts of living and nonliving things are represented and processed, both in patients with lesions to the anterior temporal lobes and in unimpaired participants using fMRI, EEG, and MEG measures. In these studies, participants are typically asked to name pictures of living (e.g., tiger) and nonliving (e.g., knife) things, and the level at which the objects must be named is varied: participants name the pictures either at the specific level (e.g., tiger or knife) or at the domain level (e.g., living or nonliving). Tyler and colleagues suggest that naming at the specific level requires retrieval and integration of more detailed semantic information than naming at the domain level. For example, whereas naming a picture at the domain level requires activation of only a subset of features (e.g., for animals: has-legs, has-fur, has-eyes), naming at the specific level requires retrieval and integration of additional, more precise features (e.g., to distinguish a tiger from a panther, a feature such as “has-stripes” must also be retrieved and integrated). Interestingly, as can be seen in Figure 11.6, nonliving things can be represented by only a few features (e.g., knife), whereas living things are represented by many features (e.g., tiger). Thus, it may be more difficult to select the feature that distinguishes one living thing from another (e.g., a tiger from a panther: has-stripes vs. has-spots) than the feature that distinguishes one nonliving thing from another (e.g., a knife from a spoon: cuts vs. scoops; Figure 11.6b). This model suggests that the dissociation between naming of nonliving and living things in patients with category-specific deficits may also reflect how difficult it is to retrieve the features that distinguish one thing from another.
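Distinctiveness can be quantified in several ways; one simple measure, assumed here, scores a feature as 1 divided by the number of concepts that share it. The tiny feature norms below are hypothetical, built around the tiger/panther and knife/spoon examples in the text.

```python
# Tiny hypothetical feature norms; real studies use large property-norm sets.
NORMS = {
    "tiger":   {"has legs", "has fur", "has eyes", "has tail", "has stripes"},
    "panther": {"has legs", "has fur", "has eyes", "has tail", "has spots"},
    "lion":    {"has legs", "has fur", "has eyes", "has tail", "has mane"},
    "knife":   {"has handle", "is metal", "cuts"},
    "spoon":   {"has handle", "is metal", "scoops"},
}

def distinctiveness(feature):
    """1 / number of concepts sharing the feature (1.0 means unique to one concept)."""
    n = sum(feature in feats for feats in NORMS.values())
    return 1.0 / n

def proportion_distinctive(concept):
    """Share of a concept's features that belong to it alone."""
    feats = NORMS[concept]
    return sum(distinctiveness(f) == 1.0 for f in feats) / len(feats)

for name in ("tiger", "knife"):
    print(name, proportion_distinctive(name))
```

Here only 1 of the tiger’s 5 features (has stripes) is unique to it, compared with 1 of the knife’s 3 (cuts), so the tiger’s identity rests on a smaller share of distinctive features. Domain-level naming could rely on the widely shared features alone.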

Tyler and colleagues observed that patients with lesions to the anterior temporal lobes cannot reliably name living things at the specific level, indicating that the retrieval and integration of more detailed semantic information is impaired. Functional MRI studies in unimpaired participants showed greater activation in the anterior temporal lobe with specific-level naming of living things than with domain-level naming (Figure 11.7).

Finally, studies with MEG and EEG have revealed interesting details about the timing of the activation of conceptual knowledge. Activation of the perceptual features occurs in primary cortices within the first 100 ms after a picture is presented; activation of more detailed semantic representations occurs in the posterior and anterior ventral–lateral cortex between 150 and 250 ms; and starting around 300 ms, participants are able to name the specific object that is depicted in the picture, which requires the retrieval and integration of detailed semantic information that is unique to the specific object.

FIGURE 11.6 Hypothetical conceptual structures for tiger and knife.

(a) One model suggests that living things are represented by many features that are not distinct, whereas nonliving things can be represented by only a few features that are distinct. In this hypothetical concept structure, the thickness of the straight lines correlates with the strength of the features, and the thickness of the boxes’ borders correlates with the distinctness of the features. Although the tiger has many features, it has fewer features that distinguish it from other living things, whereas the knife has more distinct features that separate it from other possible objects. (b) Following brain damage resulting in aphasia, patients find it harder to identify the distinctive feature(s) for living things (lower left panel) than for nonliving objects.


Language Comprehension

Perceptual Analyses of the Linguistic Input

In understanding spoken language and understanding written language, the brain uses some of the same processes, but there are also some striking differences in how spoken and written inputs are analyzed. When attempting to understand spoken words (Figure 11.8), the listener has to decode the acoustic input. The result of this acoustic analysis is translated into a phonological code because, as discussed above, that is how the lexical representations of auditory word forms are stored in the mental lexicon. After the acoustic input has been translated into a phonological format, the lexical representations in the mental lexicon that match the auditory input can be accessed (lexical access), and the best match can then be selected (lexical selection). The selected word includes the grammatical and semantic information stored with it in the mental lexicon, which helps to specify how the word can be used in the given language. Finally, the word’s meaning (its stored lexical-semantic information) activates the corresponding conceptual information.

The process of reading words shares at least the last two steps of linguistic analysis (i.e., lexical and meaning activation) with auditory comprehension, but, due to the different input modality, it differs at the earlier processing steps, as illustrated in Figure 11.8. Given that the perceptual input is different, what are these earlier stages in reading? The first analysis step requires that the reader identify orthographic units (written symbols that represent the sounds or words of a language) from the visual input. These orthographic units may then be directly mapped onto orthographic (vision-based) word forms in the mental lexicon, or alternatively, the identified orthographic units might be translated into phonological units, which in turn activate the phonological word form in the mental lexicon as described for auditory comprehension.
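The stages in Figure 11.8 can be expressed as a chain of functions. The following Python sketch is purely schematic: the three-word lexicon, its phonological transcriptions, and the letter-to-sound rules are hypothetical, acoustic analysis is assumed to have already produced a phonological code, and information flows strictly bottom up, as in the figure.

```python
# Toy lexicon keyed by phonological form; each entry stores an orthographic form,
# grammatical information, and a meaning. All entries are hypothetical.
LEXICON = {
    "kat": {"spelling": "cat", "category": "noun", "meaning": "small domesticated feline"},
    "kap": {"spelling": "cap", "category": "noun", "meaning": "soft hat with a visor"},
    "tap": {"spelling": "tap", "category": "verb", "meaning": "strike lightly"},
}
# Orthographic word forms, for the route that maps print directly onto the lexicon.
BY_SPELLING = {entry["spelling"]: phon for phon, entry in LEXICON.items()}
# Hypothetical letter-to-sound rules, for the route that recodes print into sound.
LETTER_TO_PHONEME = {"c": "k", "a": "a", "t": "t", "p": "p"}

def lexical_access(phonological_form):
    """Return stored forms matching the input (exact match, for simplicity)."""
    return [p for p in LEXICON if p == phonological_form]

def comprehend_spoken(phonemes):
    """Input is assumed to be the phonological code produced by acoustic analysis."""
    candidates = lexical_access(phonemes)   # lexical access
    if not candidates:
        return None
    selected = candidates[0]                # lexical selection (trivial here)
    return LEXICON[selected]                # grammatical and semantic information

def comprehend_written(letters, route="direct"):
    if route == "direct":
        phon = BY_SPELLING.get(letters)     # orthographic word form -> entry
    else:
        try:                                # orthographic units -> phonological units
            phon = "".join(LETTER_TO_PHONEME[ch] for ch in letters)
        except KeyError:
            return None
    return comprehend_spoken(phon) if phon else None

print(comprehend_written("cat")["meaning"])
print(comprehend_written("cat", route="phonological")["meaning"])
```

Both reading routes reach the same lexical entry for cat: the direct route through the orthographic word form, the other by recoding letters into sounds first and then sharing the later steps with auditory comprehension.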

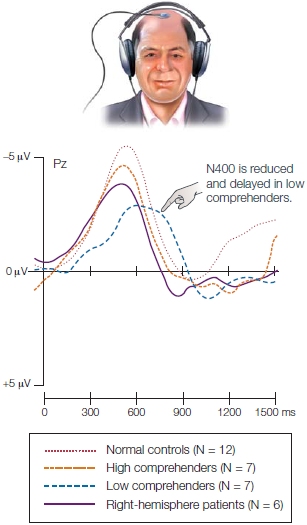

FIGURE 11.7 The anterior temporal lobes are involved in naming living things.

When identifying the tiger at the less complex domain level (living things), activity was restricted to more posterior occipitotemporal sites (red bars). Naming the same object stimulus at the specific level (blue bars) was associated with activity in both posterior occipitotemporal and anteromedial temporal lobes.

FIGURE 11.8 Schematic representation of the components involved in spoken and written language comprehension.

Inputs can enter via either auditory (spoken word) or visual (written word) modalities. Notice that the information flows from the bottom up in this figure, from perceptual identification to “higher level” word and meaning activation. So-called interactive models of language understanding would predict top-down influences to play a role as well. For example, activation at the word-form level would influence earlier perceptual processes. We could introduce this type of feedback into this schematic representation by making the arrows bidirectional (see “How the Brain Works: Modularity Versus Interactivity”).

In the next few sections, we delve into the processes involved in understanding spoken and written words, and then we consider the understanding of sentences. We begin with auditory processing and then turn to the steps involved in reading, that is, in comprehending visual language input.

Spoken Input: Understanding Speech

The input signal in spoken language is very different from that in written language. Whereas for a reader it is immediately clear that the letters on a page are the physical signals of importance, a listener is confronted with a variety of sounds in the environment and has to identify and distinguish the relevant speech signals from other “noise.”

As introduced earlier, important building blocks of spoken language are phonemes. These are the smallest units of sound that make a difference to meaning; for example, in the words cap and tap the only difference is the first phoneme (/k/ versus /t/). English uses about 40 phonemes; other languages may use more or fewer. Perception of phonemes differs for speakers of different languages. As we mentioned earlier in this chapter, in English, for example, the sounds for the letters L and R are two phonemes (the words late and rate mean different things, and we easily hear that difference). In Japanese, however, L and R are represented by only one phoneme, and adult native speakers of Japanese cannot reliably distinguish them.
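Two sounds are shown to be separate phonemes by finding minimal pairs, words that differ in a single sound, such as cap and tap. A short Python sketch of that test, using simplified, hypothetical transcriptions:

```python
from itertools import combinations

# Words transcribed as tuples of phoneme symbols (simplified, hypothetical transcriptions).
WORDS = {
    "cap": ("k", "æ", "p"),
    "tap": ("t", "æ", "p"),
    "late": ("l", "eɪ", "t"),
    "rate": ("r", "eɪ", "t"),
    "cat": ("k", "æ", "t"),
}

def minimal_pairs(words):
    """Yield (word1, word2, (sound1, sound2)) for transcriptions that differ
    in exactly one position; each pair shows that the two differing sounds
    contrast in meaning, i.e., are separate phonemes."""
    for (w1, p1), (w2, p2) in combinations(words.items(), 2):
        if len(p1) == len(p2):
            diffs = [(a, b) for a, b in zip(p1, p2) if a != b]
            if len(diffs) == 1:
                yield w1, w2, diffs[0]

for pair in minimal_pairs(WORDS):
    print(pair)
```

In a language where L and R are represented by a single phoneme, late and rate would be transcribed identically, and the pair would no longer show a contrast.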

Interestingly, infants can perceptually distinguish between all possible phonemes during their first year of life. Patricia Kuhl and her colleagues at the University of Washington found that, initially, infants could distinguish between any phonemes presented to them; but during the first year of life, their perceptual sensitivities became tuned to the phonemes of the language they experienced (Kuhl et al., 1992). So, for example, Japanese infants can distinguish L from R sounds but lose that ability over time. American infants, on the other hand, retain that ability but lose the ability to distinguish phonemes that are not part of the English language. The babbling and crying sounds that infants produce from ages 6–12 months grow more and more similar to the phonemes that they most frequently hear. By the time babies are one year old, they no longer produce (nor perceive) nonnative phonemes. Learning another language often involves phonemes that don’t occur in a person’s native language, such as the guttural sounds of Dutch or the rolling R of Spanish. Such nonnative sounds can be difficult to learn, especially when we are older and our native phonemes have become automatic, and they can make it challenging or impossible to lose our native accent. Perhaps that was Mark Twain’s problem when he quipped, “In Paris they just simply opened their eyes and stared when we spoke to them in French! We never did succeed in making those idiots understand their own language” (from The Innocents Abroad).

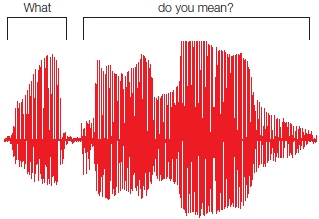

Recognizing that phonemes are important building blocks of spoken language, and that we all become experts in the phonemes of our native tongue, does not eliminate all challenges for the listener. The listener’s brain must resolve a number of additional difficulties with the speech signal, including (a) the variability of the signal (e.g., male vs. female speakers) and (b) the fact that phonemes often do not appear as separate little chunks of information. Unlike written words, auditory speech signals are not clearly segmented, and it can be difficult to discern where one word ends and the next begins. When we speak, we usually spew out about 15 phonemes per second, which adds up to about 180 words a minute. The puzzling thing is that we say these phonemes with no gaps or breaks: that is, there are no pauses between words. Thus, the input signal in spoken language is very different from that in written language, where letters are neatly grouped into words separated by spaces. Two or more spoken words can be slurred together; in other words, speech sounds are often coarticulated. There can also be silences within words. The question of how we divide the continuous auditory signal into separate words is known as the segmentation problem. This is illustrated in Figure 11.9, which shows the speech signal of the question, “What do you mean?”
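The segmentation problem can be illustrated by treating speech as an unbroken string and asking how many ways it can be split into known words. This sketch uses letters as stand-ins for phonemes and a small hypothetical lexicon; real listeners face far more candidate parses, plus coarticulation, which the sketch ignores.

```python
from functools import lru_cache

# Hypothetical mini-lexicon; letters stand in for phonemes to keep the example readable.
LEXICON = {"what", "do", "you", "mean", "me", "an"}

def segmentations(stream, lexicon):
    """Return every way to split an unbroken stream into lexicon words."""
    @lru_cache(maxsize=None)
    def split_from(i):
        if i == len(stream):
            return [[]]                      # one way to parse nothing: no words
        results = []
        for j in range(i + 1, len(stream) + 1):
            word = stream[i:j]
            if word in lexicon:
                for rest in split_from(j):   # parse the remainder after this word
                    results.append([word] + rest)
        return results
    return [" ".join(words) for words in split_from(0)]

print(segmentations("whatdoyoumean", LEXICON))
```

Even this six-word lexicon yields two parses of whatdoyoumean, one of them nonsensical, so the signal alone underdetermines where the words are.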

How do we identify the spoken input, given this variability and the segmentation problem? Fortunately, other clues help us divide the speech stream into meaningful segments. One important clue is prosodic information, which the listener derives from the speech rhythm and the pitch of the speaker’s voice. The speech rhythm comes from variation in the duration of words and the placement of pauses between them. Prosody is apparent in all spoken utterances, but it is perhaps most clearly illustrated when a speaker asks a question or emphasizes something. When asking a question, a speaker raises the pitch of the voice toward the end of the question; and when emphasizing part of an utterance, a speaker raises the loudness of the voice and includes a pause after the critical part of the sentence.

In their research, Anne Cutler and colleagues (Tyler and Cutler, 2009) at the Max Planck Institute for Psycholinguistics in the Netherlands have revealed other clues that can be used to segment the continuous speech stream. These researchers showed that English listeners use syllables that carry an accent or stress (strong syllables) to establish word boundaries: a strong syllable tends to be heard as the beginning of a new word. For example, a word like lettuce, with stress on the first syllable, is usually heard as a single word and not as two words (“let us”). In contrast, a word such as invests, with stress on the last syllable, is usually heard as two words (“in vests”) and not as one word.
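This stress-based heuristic is often called the metrical segmentation strategy. A minimal sketch, assuming syllables and their stress (strong or weak) have already been identified, which real listeners must also work out from the signal:

```python
def metrical_segmentation(syllables):
    """Group (syllable, is_strong) pairs into hypothesized words.

    Sketch of the heuristic described in the text: a strong (stressed)
    syllable is taken to start a new word, and weak syllables attach to
    the preceding word. A weak syllable at the very start opens the first word.
    """
    words = []
    for syllable, is_strong in syllables:
        if is_strong or not words:
            words.append(syllable)
        else:
            words[-1] += syllable
    return words

print(metrical_segmentation([("let", True), ("tuce", False)]))   # ['lettuce']
print(metrical_segmentation([("in", False), ("vests", True)]))   # ['in', 'vests']
```

The sketch reproduces both examples in the text, including the mis-segmentation of invests: because its strong syllable comes second, the heuristic posits a word boundary before it, which is exactly the listener error described.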

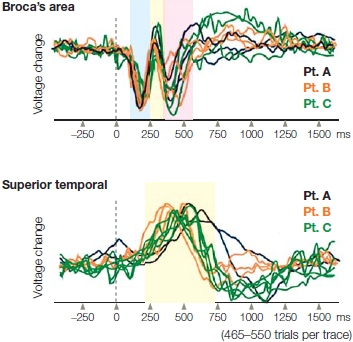

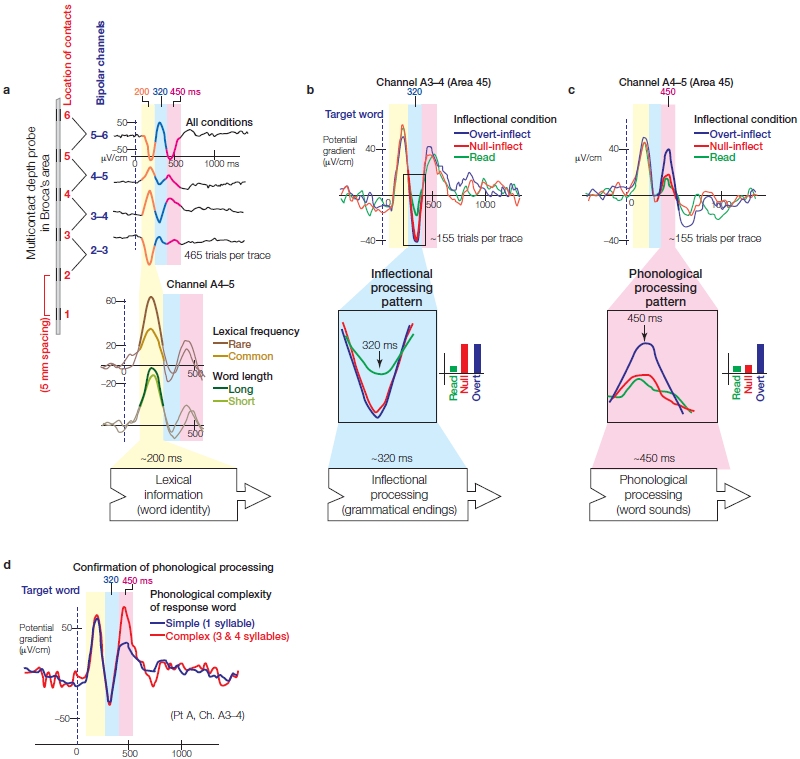

Neural Substrates of Spoken-Word Processing

Now we turn to the questions of where in the brain the processes of understanding speech signals may take place and what neural circuits and systems support them. From animal studies, studies in patients with brain lesions, and imaging and recording (EEG and MEG) studies in humans, we know that the superior temporal cortex is important to sound perception. At the beginning of the 20th century, it was already well understood that patients with bilateral lesions restricted to the superior parts of the temporal lobe had the syndrome of “pure word deafness.” Although they could process other sounds relatively normally, these patients had specific difficulties recognizing speech sounds. Because there was no difficulty in other aspects of language processing, the problem seemed to be restricted primarily to auditory or phonemic deficits, hence the term pure word deafness. With evidence from more recent studies in hand, however, we can begin to determine where in the brain speech and nonspeech sounds are first distinguished.

FIGURE 11.9 Speech waveform for the question, “What do you mean?”

Note that the words do you mean are not physically separated. Even though the physical signal provides few cues to where the spoken words begin and end, the language system is able to parse them into the individual words for comprehension.

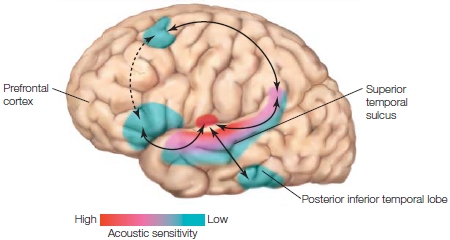

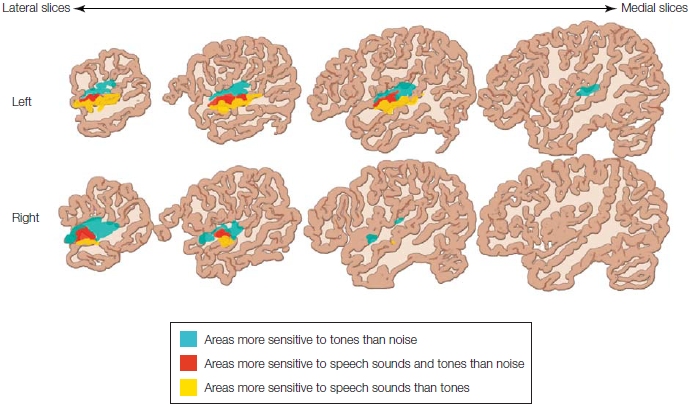

When the speech signal hits the ear, it is first processed by pathways in the brain that are not specialized for speech but that are used for hearing in general. Heschl’s gyri, which are located on the supratemporal plane, superior and medial to the superior temporal gyrus (STG) in each hemisphere, contain the primary auditory cortex, or the area of cortex that processes the auditory input first (see Chapter 2). The areas that surround Heschl’s gyri and extend into the superior temporal sulcus (STS) are collectively known as auditory association cortex. Imaging and recording studies in humans have shown that Heschl’s gyri of both hemispheres are activated by speech and nonspeech sounds (e.g., tones) alike, but that the activation in the STS of both hemispheres is modulated by whether the incoming auditory signal is a speech sound or not. This view is summarized in Figure 11.10, which shows that there is a hierarchy in the sensitivity to speech in our brain (Peelle et al., 2010; Poeppel et al., 2012). As we move farther away from Heschl’s gyrus toward anterior and posterior portions of the STS, the brain becomes less sensitive to changes in nonspeech sounds but more sensitive to speech sounds. Although more left lateralized, the posterior portions of the STS of both hemispheres seem especially relevant to processing of phonological information. It is clear from many studies, however, that the speech perception network extends beyond the STS.

FIGURE 11.10 Brain areas important to speech perception and language comprehension.

As described earlier, Wernicke found that patients with lesions in the left temporoparietal region that included the STG (Wernicke’s area) had difficulty understanding spoken and written language. This observation led to the now-century-old notion that this area is crucial to word comprehension. Even in Wernicke’s original observations, however, the lesions were not restricted to the STG. We can now conclude that the STG alone is probably not the seat of word comprehension.

One study that has contributed to our new understanding of speech perception is an fMRI study done by Jeffrey Binder and colleagues (2000) at the Medical College of Wisconsin. Participants in the study listened to several types of speech and nonspeech sounds: white noise without systematic frequency or amplitude modulations; tones that were frequency modulated between 50 and 2,400 Hz; reversed speech, which was real words played backward; pseudowords, which were pronounceable nonwords made up of the same letters as a real word (for example, sked from desk); and real words.

Figure 11.11 shows the results of the Binder study. Relative to noise, the frequency-modulated tones activated posterior portions of the STG bilaterally. Areas that were more sensitive to the speech sounds than to tones were more ventrolateral, in or near the superior temporal sulcus, and lateralized to the left hemisphere. In the same study, Binder and colleagues showed that these areas are most likely not involved in lexical-semantic aspects of word processing (i.e., the processing of word forms and word meaning), because they were equally activated for words, pseudowords, and reversed speech.

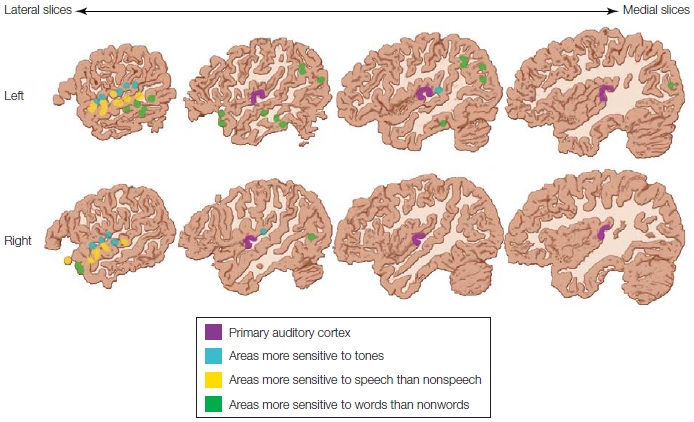

Based on their fMRI findings and the findings of other groups identifying brain regions that become activated in relation to subcomponents of speech processing, Binder and colleagues (2000) proposed a hierarchical model of word recognition (Figure 11.12). In this model, processing proceeds anteriorly in the STG. First, the stream of auditory information proceeds from auditory cortex in Heschl’s gyri to the superior temporal gyrus. In these parts of the brain, no distinction is made between speech and nonspeech sounds, as noted earlier. The first evidence of such a distinction is in the adjacent mid-portion of the superior temporal sulcus, but still, no lexical-semantic information is processed in this area.

Neurophysiological studies now indicate that recognizing whether a speech sound is a word or a pseudoword happens in the first 50–80 ms (MacGregor et al., 2012). This processing tends to be lateralized more to the left hemisphere, where the combinations of the different features of speech sounds are analyzed (pattern recognition). From the superior temporal sulcus, the information proceeds to the final processing stage of word recognition in the middle temporal gyrus and the inferior temporal gyrus, and then to the angular gyrus, posterior to the temporal areas just described (see Chapter 2), as well as to more anterior regions in the temporal pole (Figure 11.10).
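The contrasts in the Binder study can be summarized as a hierarchy in which each stage responds preferentially to a narrower set of sounds. The following is a qualitative Python encoding of the pattern described in the text and in the captions of Figures 11.11 and 11.12; the region labels are paraphrased, and nothing here is quantitative data.

```python
# Qualitative summary (an illustrative encoding, not data): for each stage, the
# stimulus classes that drive it more than the contrast class named in the comment.
HIERARCHY = [
    ("posterior dorsal STG, bilateral", {"tones", "reversed speech", "pseudowords", "words"}),  # vs. noise
    ("STS, left > right", {"reversed speech", "pseudowords", "words"}),                    # speech vs. tones
    ("MTG, ITG, angular gyrus, temporal pole; mostly left", {"words"}),                     # words vs. pseudowords
]

def stages_engaged(stimulus):
    """Regions along the hierarchy that respond preferentially to `stimulus`."""
    return [region for region, preferred in HIERARCHY if stimulus in preferred]

def first_distinguishing_stage(a, b):
    """Earliest stage whose preferential response differs between two stimulus classes."""
    for region, preferred in HIERARCHY:
        if (a in preferred) != (b in preferred):
            return region
    return None  # no stage in this summary separates the two classes

print(first_distinguishing_stage("tones", "pseudowords"))
print(first_distinguishing_stage("words", "pseudowords"))
```

Asking which stage first separates two stimulus classes mirrors the subtraction logic of the study: tones and pseudowords first diverge in the STS, words and pseudowords diverge only at the final, mostly left-lateralized stage, and pseudowords and reversed speech are not separated at all.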

Over the course of the decade following the Binder study, multiple studies were done in an attempt to localize speech recognition processes. In reviewing 100 fMRI studies, Iain DeWitt and Josef Rauschecker (2012) of Georgetown University Medical Center confirmed the findings that the left mid-anterior STG responds preferentially to phonetic sounds of speech. Researchers also have tried to identify areas in the brain that are particularly important for the processing of phonemes. Recent fMRI studies from the lab of Sheila Blumstein at Brown University suggest a network of areas involved in phonological processing during speech perception and production, including the left posterior superior temporal gyrus (activation), the supramarginal gyrus (selection), inferior frontal gyrus (phonological planning), and precentral gyrus (generating motor plans for production; Peramunage et al., 2011).

FIGURE 11.11 Superior temporal cortex activations to speech and nonspeech sounds.

Four sagittal slices are shown for each hemisphere. The posterior areas of the superior temporal gyrus are more active bilaterally for frequency-modulated tones than for simple noise (in blue). Areas that are more active for speech sounds and tones than for noise are indicated in red. Areas that are more sensitive to speech sounds (i.e., reversed words, pseudowords, and words) are located ventrolaterally to this area (in yellow), in or near the superior temporal sulcus. This latter activation is somewhat lateralized to the left hemisphere (top row).


Written Input: Reading Words

Reading is the perception and comprehension of written language. For written input, readers must recognize a visual pattern. Our brain is very good at pattern recognition, but reading is a quite recent invention (writing is only about 5,500 years old). Whereas speech comprehension develops without explicit training, reading requires instruction. Specifically, learning to read requires linking arbitrary visual symbols into meaningful words. The visual symbols used vary across writing systems, which represent words in three main ways: alphabetic, syllabic, and logographic. For example, many Western languages use an alphabetic system, Japanese uses a syllabic system (its kana, alongside logographic kanji), and Chinese uses a logographic system.

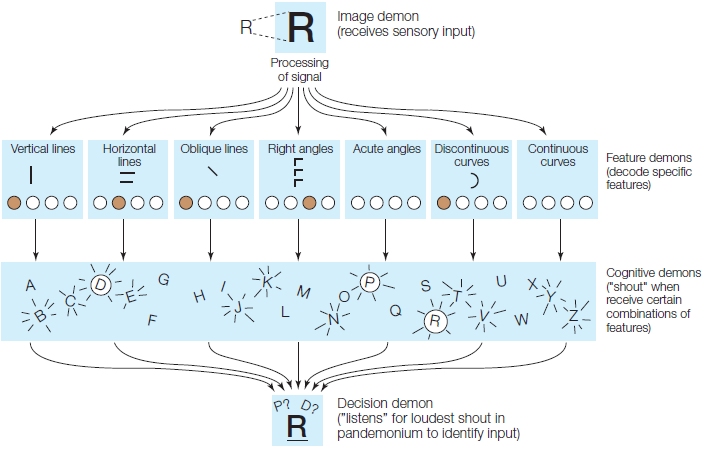

Regardless of the writing system used, readers must be able to analyze the primitive features, or the shapes of the symbols. In the alphabetic system—our focus here—this process involves the visual analysis of horizontal lines, vertical lines, closed curves, open curves, intersections, and other elementary shapes.

In a 1959 paper that was a landmark contribution to the emerging science of artificial intelligence (and to what is now called machine learning), Oliver Selfridge proposed a collection of small components, or demons (his term for a discrete stage or substage of information processing), that together would allow machines to recognize patterns. Demons record events as they occur, recognize patterns in those events, and may trigger subsequent events according to the patterns they recognize. In his model, known as the pandemonium model, the sensory input (R) is temporarily stored as an iconic memory by the so-called image demon. Then 28 feature demons, each sensitive to a particular feature such as curves or horizontal lines, start to decode features in the iconic representation of the sensory input (Figure 11.13). In the next step, cognitive demons representing letters that contain these features are activated. Finally, the decision demon selects the representation that best matches the input. The pandemonium model has been criticized because it consists solely of stimulus-driven (bottom-up) processing and does not allow for feedback (top-down) processing, such as that underlying the word superiority effect (see Chapter 3): humans are better at identifying letters found in words than letters found in nonsense words or even single letters presented alone.
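Selfridge’s bottom-up cascade can be sketched in Python. The feature inventories for the five letters below are simplified assumptions (not Selfridge’s 28 features), the image demon’s output is taken to be a list of already-detected features, and the scoring rule (matched features minus missing ones) is one plausible choice among many.

```python
from collections import Counter

# Hypothetical, simplified feature inventories for a few capital letters.
LETTER_FEATURES = {
    "E": Counter({"vertical": 1, "horizontal": 3}),
    "F": Counter({"vertical": 1, "horizontal": 2}),
    "H": Counter({"vertical": 2, "horizontal": 1}),
    "P": Counter({"vertical": 1, "closed curve": 1}),
    "R": Counter({"vertical": 1, "closed curve": 1, "oblique": 1}),
}

def feature_demons(image_features):
    """Each feature demon reports how many instances of its feature it sees.
    (The image demon's stored input is assumed to be a list of detected features.)"""
    return Counter(image_features)

def cognitive_demons(features):
    """Each letter demon 'shouts' according to its matched features,
    minus the features it expects but does not find."""
    shouts = {}
    for letter, template in LETTER_FEATURES.items():
        matched = sum((template & features).values())   # multiset intersection
        missing = sum((template - features).values())   # expected but absent
        shouts[letter] = matched - missing
    return shouts

def decision_demon(shouts):
    """Pick the loudest cognitive demon; strictly bottom-up, no feedback."""
    return max(shouts, key=shouts.get)

def recognize(image_features):
    return decision_demon(cognitive_demons(feature_demons(image_features)))

print(recognize(["vertical", "closed curve", "oblique"]))  # R
```

Given a vertical line, a closed curve, and an oblique line, the R demon shouts loudest (3), ahead of P (2). Because nothing flows back down from any higher level, word context could never help this system, which is the criticism noted in the text.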

FIGURE 11.12 Regions involved in a hierarchical processing stream for speech processing (see text for explanation).

Heschl’s gyri, which contain the primary auditory cortex, are in purple. Shown in blue are areas of the dorsal superior temporal gyri that are activated more by frequency-modulated tones than by random noise. Yellow areas are clustered in the superior temporal sulcus and are speech-sound specific; they show more activation for speech sounds (words, pseudowords, or reversed speech) than for nonspeech sounds. Green areas include regions of the middle temporal gyrus, inferior temporal gyrus, angular gyrus, and temporal pole and are more active for words than for pseudowords or nonwords. Critically, these “word” areas are lateralized mostly to the left hemisphere.

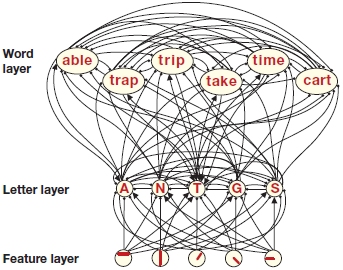

In 1981, James McClelland and David Rumelhart proposed a computational model that has been influential in explaining visual letter recognition. This model assumes three levels of representation: (a) a layer for the features of the letters of words, (b) a layer for letters, and (c) a layer for the representation of words. An important characteristic of this model is that it permits top-down information (i.e., information from the higher cognitive levels, such as the word layer) to influence earlier processes that happen at lower levels of representation (the letter layer and/or the feature layer).

This model contrasts sharply with Selfridge’s model, where the flow of information is strictly bottom up (from the image demon to the feature demons to the cognitive demons and finally to the decision demon). Another important difference between the two models is that, in the McClelland and Rumelhart model, processes can take place in parallel such that several letters can be processed at the same time, whereas in Selfridge’s model, one letter is processed at a time in a serial manner. As Figure 11.14 shows, the model of McClelland and Rumelhart permits both excitatory and inhibitory links between all the layers.

FIGURE 11.13 Selfridge’s (1959) pandemonium model of letter recognition.

For written input, the reader must recognize a pattern that starts with the analysis of the sensory input. The sensory input is stored temporarily in iconic memory by the image demon, and a set of 28 feature demons decodes the iconic representations. The cognitive demons are activated by the representations of letters with these features, and the representation that best matches the input is then selected by the decision demon.

The empirical validity of a model can be tested against real-life behavioral phenomena or against physiological data. McClelland and Rumelhart's connectionist model does an excellent job of reproducing the word superiority effect. That remarkable effect indicates that words are probably not perceived on a letter-by-letter basis. The model can explain the effect because it proposes that top-down information from the word layer can either activate or inhibit letter activations, thereby helping the recognition of letters.
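To make the top-down account of the word superiority effect concrete, here is a heavily simplified sketch in the spirit of McClelland and Rumelhart's model. The five-word vocabulary, all parameter values, and the omission of a separate feature layer (each presented letter simply receives a fixed bottom-up input) are assumptions for illustration; the original model used a much larger vocabulary, different parameters, and additional connections. The activation update follows the general form used in interactive activation networks.

```python
import string
from typing import Dict, List

# Hypothetical toy vocabulary and parameters, chosen for illustration only.
VOCAB = ["WORK", "WORD", "FORK", "CORK", "WEAK"]
ALPHABET = string.ascii_uppercase
LETTER_INPUT = 0.30  # bottom-up evidence for each presented letter
EXCITE = 0.10        # letter -> word excitation (letter consistent with word)
INHIBIT = 0.15       # letter -> word inhibition (letter inconsistent with word)
TOP_DOWN = 0.10      # word -> letter feedback excitation
DECAY, A_MAX, A_MIN, REST = 0.10, 1.0, -0.2, 0.0


def update(a: float, net: float) -> float:
    """Move activation toward A_MAX (net > 0) or A_MIN (net <= 0), decaying toward REST."""
    effect = net * (A_MAX - a) if net > 0 else net * (a - A_MIN)
    return min(A_MAX, max(A_MIN, a + effect - DECAY * (a - REST)))


def run(stimulus: str, steps: int = 20) -> List[Dict[str, float]]:
    """Present a four-letter string; return final letter activations per position."""
    n = len(stimulus)
    letters = [{c: REST for c in ALPHABET} for _ in range(n)]
    words = {w: REST for w in VOCAB}
    for _ in range(steps):
        # Bottom-up: every position is evaluated in parallel for every word.
        word_net = {}
        for w in VOCAB:
            net = 0.0
            for pos in range(n):
                match = letters[pos][w[pos]]
                others = sum(letters[pos].values()) - match
                net += EXCITE * match - INHIBIT * others
            word_net[w] = net
        # Top-down: positively active words excite the letters they contain.
        letter_net = []
        for pos in range(n):
            nets = {}
            for c in ALPHABET:
                feedback = sum(max(words[w], 0.0) for w in VOCAB if w[pos] == c)
                bottom_up = LETTER_INPUT if stimulus[pos] == c else 0.0
                nets[c] = bottom_up + TOP_DOWN * feedback
            letter_net.append(nets)
        # Synchronous update of both layers.
        words = {w: update(words[w], word_net[w]) for w in VOCAB}
        letters = [{c: update(letters[pos][c], letter_net[pos][c]) for c in ALPHABET}
                   for pos in range(n)]
    return letters


word_k = run("WORK")[3]["K"]
nonword_k = run("OWRK")[3]["K"]
print(f"K in WORK: {word_k:.3f}  K in OWRK: {nonword_k:.3f}")
```

In this run, the final K ends up more active after WORK than after OWRK, although it receives identical bottom-up input in both cases. For every word in the toy vocabulary, OWRK has at least as many inconsistent as consistent letters, and with these parameters inhibition outweighs excitation, so no word unit becomes positive and no feedback reaches the letter layer. All four positions are also processed in parallel, unlike the serial processing of Selfridge's model.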

We learned in Chapters 5 and 6 that single-cell recording techniques have enlightened us about the basics of visual feature analysis and how the brain analyzes edges, curves, and so on. Unresolved questions remain, however, because letter and word recognition are not really understood at the cellular level, and recordings in monkeys are not likely to enlighten us about letter and word recognition in humans. Recent studies using PET and fMRI have started to shed some light on where letters are processed in the human brain.

FIGURE 11.14 Fragment of a connectionist network for letter recognition.

Nodes at three different layers represent letter features, letters, and words. Nodes in each layer can influence the activational status of the nodes in the other layers by excitatory (arrows) or inhibitory (lines) connections.

Neural Substrates of Written-Word Processing

The actual identification of orthographic units may take place in occipitotemporal regions of the left hemisphere. It has been known for over 100 years that lesions in this area can give rise to pure alexia, a condition in which patients cannot read words, even though other aspects of language are normal. In early PET imaging studies, Steven Petersen and his colleagues (1990) contrasted words with non-words and found regions of occipital cortex that preferred word strings. They named these regions the visual word form area. In later studies using fMRI in normal participants, Gregory McCarthy at Yale University and his colleagues (Puce et al., 1996) contrasted brain activation in response to letters with activation in response to faces and visual textures. They found that regions of the occipitotemporal cortex were activated preferentially in response to unpronounceable letter strings (Figure 11.15). Interestingly, this finding confirmed results from an earlier study by the same group (Nobre et al., 1994), in which intracranial electrical recordings were made from this brain region in patients who later underwent surgery for intractable epilepsy. In this study, the researchers found a large negative polarity potential at about 200 ms in occipitotemporal regions, in response to the visual presentation of letter strings. This area was not sensitive to other visual stimuli, such as faces, and importantly, it also appeared to be insensitive to lexical or semantic features of words.

FIGURE 11.15 Regions in occipitotemporal cortex were preferentially activated in response to letter strings.

Stimuli were faces (a) or letter strings (b). (c) Left hemisphere coronal slice at the level of the anterior occipital cortex. Faces activated a region of the lateral fusiform gyrus (yellow); letter strings activated a region of the occipitotemporal sulcus (red). (d) Graph shows the corresponding time course of fMRI activations averaged over all alternation cycles for faces (yellow line) and letter strings (pink line).

In a combined ERP and fMRI study that included healthy persons and patients with callosal lesions, Laurent Cohen, Stanislas Dehaene, and their colleagues (2000) investigated the visual word form area. While the participants fixated on a central crosshair, a word or a non-word was flashed to either their right or left visual field. Non-words were consonant strings that violated French orthographic principles and could not be translated into phonology. When a word flashed on the screen, participants were to repeat it out loud; when a non-word flashed, they were to think “rien” (French for “nothing”; this was a French study, after all).

The event-related potentials (ERPs) indicated that initial processing was confined to early visual areas contralateral to the stimulated visual hemifield. Activations then revealed a common processing stage, associated with a precise, reproducible site in the left occipitotemporal sulcus (anterior and lateral to area V4). This site is part of the visual word form area and coincides with the lesion site that causes pure alexia (Cohen et al., 2000). This and later studies showed that the activation was elicited only by visual input (Dehaene et al., 2002) and reflected prelexical forms (before the word form was associated with a meaning), yet was invariant to the location of the stimulus (right or left visual field) and to the case of the word stimulus (Dehaene et al., 2001). These findings also agreed with those of Nobre and colleagues. Finally, the researchers found that processing beyond this point was the same for all word stimuli from either visual field, a result that corresponds to the standard model of word reading. Activation of the visual word form area is reproducible across cultures that use different types of symbols, such as Japanese kana (syllabic) and kanji (logographic; Bolger et al., 2005). This convergent neurological and neuroimaging evidence gives us clues as to how the human brain solves the perceptual problems of letter recognition.


THE COGNITIVE NEUROSCIENTIST’S TOOLKIT

Stimulation Mapping of the Human Brain

Awake, a young man lies on his side on a table, draped with clean, light-green sheets. His head is partially covered by a sheet of cloth, so we can see his face if we wish. On the other side of the cloth is a man wearing a surgical gown and mask. One is a patient; the other is his surgeon. The patient's skull has been cut through, and his left hemisphere is exposed. Rather than being a scene from a sci-fi thriller, this is a routine procedure at the University of Washington Medical School, where George Ojemann and his colleagues (1989) have been using direct cortical stimulation to map the brain’s language areas.

The patient suffers from epilepsy and is about to undergo a surgical procedure to remove the epileptic tissue. Because this epileptic focus is in the left, language-dominant hemisphere, it is first essential to determine where language processes are localized in the patient’s brain. Such localization can be done by electrical stimulation mapping. Electrodes are used to pass a small electrical current through the cortex, momentarily disrupting activity; thus, electrical stimulation can probe where a language process is localized. The patient has to be awake for this test. Language-related areas vary among patients, so these areas must be mapped carefully. During surgery, it is essential to leave the critical language areas intact.

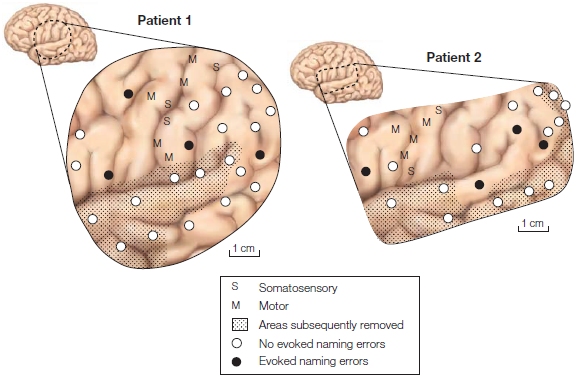

One benefit of this work is that we can learn more about the organization of the human language system (Figure 1). Patients are shown line drawings of everyday objects and are asked to name those objects. During naming, regions of the left perisylvian cortex are stimulated with a weak electrical current. When the patient makes an error in naming or is unable to name the object, the deficit is attributed to the region being stimulated on that trial, and that area of cortex is assumed to be critical for language production and comprehension.
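The inference described here, that a site whose stimulation disrupts naming is critical for language, amounts to tallying naming errors per stimulated site. A minimal sketch, assuming a hypothetical trial log of (site, error) pairs and an arbitrary flagging rule (at least three stimulation trials, with errors on at least half of them); these numbers are illustrative and are not the criteria used by Ojemann's group.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, List, Set, Tuple

Trial = Tuple[str, bool]  # (stimulated site label, naming error on this trial?)


def map_language_sites(trials: Iterable[Trial], min_trials: int = 3,
                       error_threshold: float = 0.5) -> Set[str]:
    """Flag sites whose stimulation disrupted naming on a large share of trials.

    min_trials and error_threshold are illustrative assumptions, not a clinical standard.
    """
    counts: DefaultDict[str, List[int]] = defaultdict(lambda: [0, 0])  # site -> [errors, trials]
    for site, error in trials:
        counts[site][0] += int(error)
        counts[site][1] += 1
    return {site for site, (errors, n) in counts.items()
            if n >= min_trials and errors / n >= error_threshold}


# Hypothetical log: site A disrupts naming on 2 of 3 trials, B on 1 of 3,
# and C was stimulated only once.
log = [("A", True), ("A", True), ("A", False),
       ("B", False), ("B", True), ("B", False), ("C", True)]
print(map_language_sites(log))  # {'A'}
```

Requiring several trials per site guards against attributing a single chance naming failure to the stimulated cortex.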

Stimulation of between 100 and 200 patients revealed that aspects of language representation in the brain are organized in mosaic-like areas of 1 to 2 cm². These mosaics usually include regions in the frontal cortex and posterior temporal cortex. In some patients, however, only frontal or only posterior temporal areas were observed. The correspondence between these sites and the classic Broca’s and Wernicke’s areas was weak: some patients had naming disruption in the classic areas, and others did not. Perhaps the single most intriguing finding is how much the anatomical localizations vary across patients. This variability has implications for across-subject averaging methods, such as PET activation studies: if the critical sites differ from person to person, averaging can blur or dilute effects that are clear in individual brains.
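Why variable localization matters for group averaging can be shown with a toy calculation. It assumes a hypothetical one-dimensional strip of 20 cortical positions and five patients whose 2-position critical sites do not overlap; real cortex and real activation maps are of course far more complex.

```python
from typing import List


def subject_map(center: int, width: int = 2, length: int = 20) -> List[float]:
    """Toy strip of cortex: 1.0 where stimulation disrupts naming, else 0.0."""
    return [1.0 if center <= i < center + width else 0.0 for i in range(length)]


def group_average(maps: List[List[float]]) -> List[float]:
    """Average the individual maps position by position."""
    return [sum(values) / len(maps) for values in zip(*maps)]


centers = [2, 5, 9, 12, 15]  # hypothetical, non-overlapping critical sites
avg = group_average([subject_map(c) for c in centers])
print(max(avg))  # 0.2
```

Every individual map has a peak of 1.0, yet no position in the group average exceeds 0.2, so a method that looks only at the average would see a weak, diffuse effect.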

FIGURE 1 Regions of the brain of two patients studied with cortical stimulation mapping.

During surgery, with the patient awake and lightly anesthetized, the surgeon maps the somatosensory and motor areas by stimulating the cortex and observing the responses. The patient is also shown pictures and asked to name them aloud. Discrete regions of the cortex are stimulated with electrical current during the task. Areas where stimulation induces naming errors are mapped, and those regions are implicated as being involved in language. The surgeon uses this map to avoid removing any brain tissue associated with language. The procedure thus serves both to treat brain tumors or epilepsy and to reveal the cortical organization of language functions.

The Role of Context in Word Recognition

We come now to the point in word comprehension where auditory and visual word comprehension share processing components. Once a phonological or visual representation has been identified as a word, its semantic and syntactic information must be retrieved before it can gain any meaning. Usually words are not processed in isolation, but in the context of other words (sentences, stories, etc.). To understand words in their context, we have to integrate syntactic and semantic properties of the recognized word into a representation of the whole utterance.

At what point during language comprehension do linguistic and nonlinguistic context (e.g., information seen in pictures) influence word processing? Is it possible to retrieve word meanings before words are heard or seen when the word meanings are highly predictable in the context? More specifically, does context influence word processing before or after lexical access and lexical selection are complete?

Consider the following sentence, which ends with a word that has more than one meaning. “The tall man planted a tree on the bank.” Bank can mean both “financial institution” and “side of the river.” Semantic integration of the meaning of the final word bank into the context of the sentence allows us to interpret bank as the “side of the river” and not as a “financial institution.” The relevant question is, when does the sentence’s context influence the activation of the multiple meanings of the word bank? Do both the contextually appropriate meaning of bank (in this case “side of the river”) and the contextually inappropriate meaning (in this case “financial institution”) become briefly activated regardless of the context of the sentence? Or does the sentence context immediately constrain the activation to the contextually appropriate meaning of the word bank?

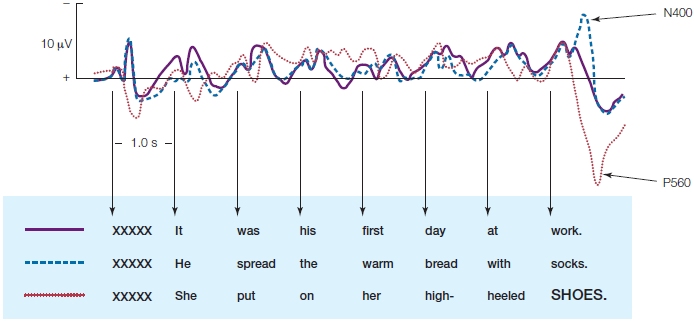

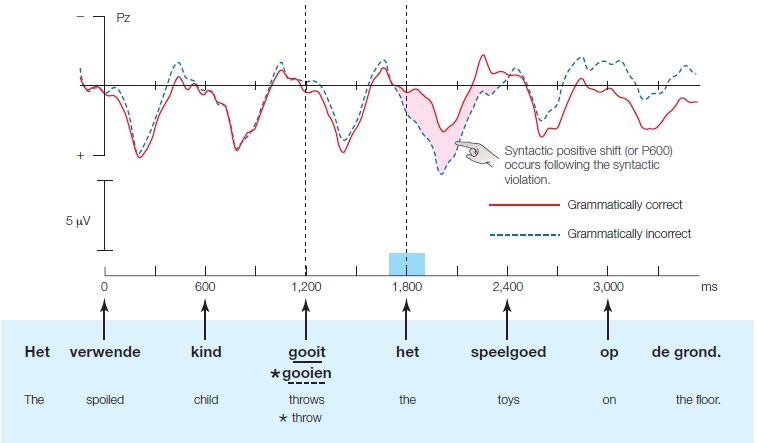

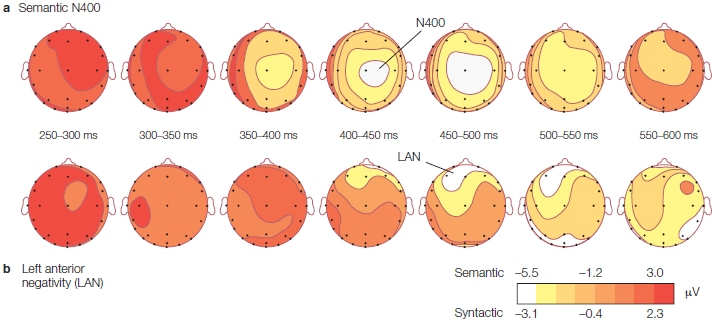

From this example, we can already see that two types of representations play a role in word processing in the context of other words: lower-level representations, those constructed from sensory input (in our example, the word bank itself); and higher-level representations, those constructed from the context preceding the word to be processed (in our example, the sentence preceding the word bank). Contextual representations are crucial to determine in what sense or what grammatical form a word should be used. Without sensory analysis, however, no message representation can take place. The information has to interact at some point. The point where this interaction occurs differs in competing models.
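The two possibilities raised for bank can be contrasted in a toy sketch. The lexicon, the two-stage structure (lexical access, then integration), and the `context_fit` scores are hypothetical simplifications: they only illustrate where context acts in each account, not how either account is realized in the brain.

```python
from typing import Dict, List

# Hypothetical mini-lexicon: an ambiguous word lists its possible meanings.
LEXICON: Dict[str, List[str]] = {"bank": ["financial institution", "side of the river"]}

Stage = Dict[str, float]  # meaning -> activation at one processing stage


def exhaustive_access(word: str, context_fit: Stage) -> List[Stage]:
    """Context acts only after lexical access: every meaning is activated first."""
    access = {meaning: 1.0 for meaning in LEXICON[word]}
    # Integration with the sentence context then suppresses poorly fitting meanings.
    integrated = {m: a * context_fit.get(m, 0.0) for m, a in access.items()}
    return [access, integrated]


def selective_access(word: str, context_fit: Stage) -> List[Stage]:
    """Context constrains access itself: poorly fitting meanings never become active."""
    access = {m: context_fit.get(m, 0.0) for m in LEXICON[word]}
    return [access, dict(access)]


# "The tall man planted a tree on the bank." favors the river reading.
fit = {"side of the river": 1.0, "financial institution": 0.0}
for model in (exhaustive_access, selective_access):
    access, integrated = model("bank", fit)
    print(model.__name__, access["financial institution"], integrated["financial institution"])
```

Both accounts end with the same interpretation; they differ only in whether the contextually inappropriate meaning is briefly active at lexical access, which is exactly the point at which competing models disagree.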