Any emotion, if it is sincere, is involuntary.

~ Mark Twain

When dealing with people, remember you are not dealing with creatures of logic, but creatures of emotion.

~ Dale Carnegie

Chapter 10

Emotion

OUTLINE

What Is an Emotion?

Neural Systems Involved in Emotion Processing

Categorizing Emotions

Theories of Emotion Generation

The Amygdala

Interactions Between Emotion and Other Cognitive Processes

Get a Grip! Cognitive Control of Emotion

Other Areas, Other Emotions

Unique Systems, Common Components

AT AGE 42, the last time that S.M. remembered actually being scared was when she was 10. This was not because she had not been in frightening circumstances; in fact, she had been in plenty. She had been held at both knife- and gun-point, physically accosted by a woman twice her size, and nearly killed in a domestic violence attack, among other experiences (Feinstein et al., 2011).

Oddly enough, S.M. doesn’t really notice that things don’t frighten her. What she did notice, beginning at age 20, were seizures. A CT scan and an MRI revealed that both of S.M.’s amygdalae were severely atrophied (Figure 10.1). Further tests revealed that she had a rare autosomal recessive genetic disorder, Urbach–Wiethe disease, which leads to degeneration of the amygdalae (Adolphs et al., 1994, 1995; Tranel & Hyman, 1990), typically with an onset around 10 years of age. The deterioration of her amygdalae was highly specific; surrounding white matter showed minimal damage. On standard neuropsychological tests, her intelligence scores were in the normal range, and she had no perceptual or motor problems. Something curious popped up, however, when her emotional processing was tested. S.M. was shown a large set of photographs and asked to judge the emotion being expressed by the individuals in the pictures. She accurately identified expressions conveying sadness, anger, disgust, happiness, and surprise. But one facial expression stumped her: fear (see the bottom right photo of Figure 10.5 for a similar example expressing fear). S.M. seemed to know that some emotion was being expressed, and she was capable of recognizing facial identities (Adolphs et al., 1994), but she was not able to recognize fear in facial expressions. She also had another baffling deficit. When asked to draw pictures depicting different emotions, she was able to provide reasonable cartoons of a range of states, except when asked to depict fear. When prodded to try, she scribbled for a few minutes, only to reveal a picture of a baby crawling, but couldn’t say why she had produced this image (Figure 10.2).

FIGURE 10.1 Bilateral amygdala damage in patient S.M.

The white arrows indicate where the amygdalae are located in the right and left hemispheres. Patient S.M. has severe atrophy of the amygdalae, and the brain tissue is now replaced by cerebrospinal fluid (black).

One tantalizing possibility was that S.M. was unable to process the concept of fear. This idea was rejected, however, because she was able to describe situations that would elicit fear, used words describing fear properly (Adolphs et al., 1995), and had no trouble labeling fearful tones in voices (Adolphs & Tranel, 1999). She even stated that she “hated” snakes and spiders and tried to avoid them (Feinstein et al., 2011). Although S.M. was able to describe fear and had indicated she was afraid of snakes, when her researchers objectively investigated whether she also had abnormal fear reactions, they found that her experience of fear was greatly reduced. She was taken to an exotic pet store that had a large collection of snakes and spiders. Contrary to her declarations, she spontaneously went to the snake terrariums and was very curious and captivated. She readily held one, rubbed its scales, touched its tongue, and commented, “This is so cool!” (Feinstein et al., 2011). What’s more, she repeatedly asked if she could touch the larger snakes, some of them venomous. While handling the snake, she reported her fear rating was never more than 2 on a 0–10 scale. Other attempts to elicit fear in S.M., such as going to a haunted house or watching a scary film, received a rating of zero, though she knew that other people would consider the experiences scary. Thus, her inability to experience fear was not the result of misunderstanding the concept of fear or not recognizing it. She did exhibit appropriate behavior when viewing film clips meant to induce all the other emotions, so it wasn’t that she had no emotional experience. Nor was it because she had never experienced fear. She described being cornered by a growling Doberman pinscher when she was a child (before her disease manifested itself), screaming for her mother and crying, along with all the accompanying visceral fear reactions. Perhaps this is why she drew a toddler when asked to depict fear.
It was not for lack of real-life fear-inducing episodes, either. S.M. had experienced those events we described earlier. In fact, her difficulty in detecting and avoiding threatening situations had probably resulted in her being in them more often than most people. These observations appeared to rule out a generalized conceptual deficit: She understood the notion, she just didn’t experience it.

Another interesting facet of S.M.’s behavior is that after being extensively studied for over 20 years, she continues to have no insight into her deficit and is unaware that she still becomes involved in precarious situations. It seems that because she cannot experience fear, she does not avoid them. (It sounds like the interpreter system is not getting any input about feeling fear; see Chapter 4.) What can be surmised about the amygdala and emotional processing from S.M.?

FIGURE 10.2 S.M.’s deficit in comprehending fear is also observed on a production task.

She was asked to draw faces that depicted basic emotions. When prompted to draw a person who was afraid, S.M. hesitated and then produced the picture of the baby. She was, however, not happy with her drawing of “afraid.”

- First, the amygdala must play a critical role in the identification of facial expressions of fear.

- Second, although S.M. fails to experience the emotion of fear, she has little impairment in her comprehension of other emotions.

- Third, her inability to feel a particular emotion, fear, seems to have contributed to her inability to avoid dangerous situations.

It is difficult to understand who we are or how we interact with the world without considering our emotional lives. Under the umbrella of cognitive neuroscience, the study of emotion was slow to emerge because, for a number of reasons, emotion is difficult to study systematically. For a long time, emotion was considered to be subjective to the individual and thus not amenable to empirical analysis. Researchers eventually realized that conscious emotions arise from unconscious processes that can be studied using the tools of psychology and cognitive neuroscience (see a review of the problem in LeDoux, 2000). It has become apparent that emotion is involved in much of cognitive processing. Its involvement ranges from influencing what we remember (Chapter 9), to where we direct our attention (Chapter 7), to the decisions that we make (Chapter 12). Our emotions modulate and bias our own behavior and actions. Underlying all emotion research is a question: Is there a neural system dedicated to emotions, or are they just another form of cognition that is only phenomenologically different (S. Duncan & Barrett, 2007)? The study of emotion is emerging as a critical and exciting research topic.

We begin this chapter with some attempts to define emotion. Next, we review the areas of the brain that are thought to mediate emotion processing. We also survey the theories about emotions and how they are generated. Much of the research on emotion has concentrated on the workings of the amygdala, so we examine this part of the brain in some detail. We also look at the progress made in answering the questions that face emotion researchers:

- What is an emotion?

- Are some emotions basic to everyone?

- How are emotions generated?

- Is emotion processing localized, generalized, or a combination of the two?

- What effect does emotion have on the cognitive processes of perception, attention, learning, memory, and decision making and on our behavior?

- Do these cognitive processes exert any control over our emotions?

We close the chapter with a look at several (especially) complex emotions, including happiness and love.

What Is an Emotion?

People have been struggling with this question for at least several thousand years. Even today, the answer remains unsettled. In the current Handbook of Emotions (3rd ed.), the late philosopher Robert Solomon (2008) devotes an entire chapter to discussing the lack of a good definition of emotion and looking at why it is so difficult to define. How would you define emotion?

Maybe your definition starts with “An emotion is a feeling you get when....” And we already have a problem, because many researchers claim that a feeling is the subjective experience of the emotion, but not the emotion itself. These two events are dissociable and, as we see later in this chapter, they use separate neural systems. Perhaps evolutionary principles can help us with a general definition: Emotions are evolved neurological processes that guide behavior in ways that increase survival and reproduction. How’s that for vague? Here is a definition from Kevin Ochsner and James Gross (2005), two researchers whose work we look at in this chapter:

Current models posit that emotions are valenced responses to external stimuli and/or internal mental representations that

- involve changes across multiple response systems (e.g., experiential, behavioral, peripheral physiological),

- are distinct from moods, in that they often have identifiable objects or triggers,

- can be either unlearned responses to stimuli with intrinsic affective properties (e.g., pulling your hand away when you burn it) or learned responses to stimuli with acquired emotional value (e.g., fear when you see a dog that previously bit you),

- can involve multiple types of appraisal processes that assess the significance of stimuli to current goals, that depend upon different neural systems.

Most psychologists agree that emotion consists of three components:

- a physiological reaction to a stimulus,

- a behavioral response, and

- a feeling.

Neural Systems Involved in Emotion Processing

Many parts of the nervous system are involved in our emotions. When emotions are triggered by an external event or stimulus (as they often are), our sensory systems play a major role. Sometimes emotions are triggered by an episodic memory, in which case our memory systems are involved (see Chapter 9). The physiologic components of emotion (that shiver up the spine, or the racing heart and dry mouth people experience with fear) involve the autonomic nervous system (ANS), a division of the peripheral nervous system. Recall from Chapter 2 that the ANS is made up of the sympathetic and the parasympathetic nervous systems (see Figure 2.17), and its motor and sensory neurons extend to the heart, lungs, gut, bladder, and sexual organs. The two systems work in combination to achieve homeostasis. As a rule of thumb, the sympathetic system promotes “fight or flight” arousal, and the parasympathetic promotes “rest and digest.” The ANS is regulated by the hypothalamus. The hypothalamus also controls the release of hormones from the pituitary gland. Of course, the fight-or-flight response uses the motor system. Arousal is a critical part of many theories on emotion. The arousal system is regulated by the reticular activating system, which is composed of sets of neurons running from the brainstem to the cortex via the rostral intralaminar and thalamic nuclei.

All of the neural systems mentioned so far are important in triggering an emotion or in generating physiological and behavioral responses. Yet where do emotions reside? We turn to that question next.

Early Concepts: The Limbic System as the Emotional Brain

The notion that emotion is separate from cognition and has its own network of brain structures underlying emotional behavior is not new. As we mentioned in Chapter 2, James Papez (pronounced “payps”) proposed a circuit theory of the brain and emotion in 1937, suggesting that emotional responses involve a network of brain regions made up of the hypothalamus, anterior thalamus, cingulate gyrus, and hippocampus. Paul MacLean (1949, 1952) later named these structures the Papez circuit. He then extended this emotional network to include what he called the visceral brain, adding Broca’s limbic lobe and some subcortical nuclei and portions of the basal ganglia. Later, MacLean included the amygdala and the orbitofrontal cortex. He called this extended neural circuit of emotion the limbic system, from the Latin limbus, meaning “rim.” The structures of the limbic system roughly form a rim around the corpus callosum (Anatomical Orientation figure; also see Figure 2.26).

The anatomy of emotion

The limbic system.

FIGURE 10.3 Specific brain regions are hypothesized to be associated with specific emotions.

The rust-colored orbitofrontal cortex is associated with anger, the purple anterior cingulate gyrus with sadness, the blue insula with disgust, and the green amygdala with fear.

MacLean’s early work identifying the limbic system as the “emotional” brain was influential. To this day, studies on the neural basis of emotion include references to the “limbic system” or “limbic” structures. The continued popularity of the term limbic system in more recent work is due primarily to the inclusion of the orbitofrontal cortex and amygdala in that system. As we shall see, these two areas have been the focus of investigation into the neural basis of emotion (Figure 10.3; Damasio, 1994; LeDoux, 1992). The limbic system concept as strictly outlined by MacLean, however, has not been supported over the years (Brodal, 1982; Kotter & Meyer, 1992; LeDoux, 1991; Swanson, 1983). We now know that many brainstem nuclei that are connected to the hypothalamus are not part of the limbic system. Similarly, many brainstem nuclei that are involved in autonomic reactions important to MacLean’s idea of a visceral brain are not part of the limbic system. Although several limbic structures are known to play a role in emotion, it has been impossible to establish criteria for defining which structures and pathways should be included in the limbic system. At the same time, classic limbic areas such as the hippocampus have been shown to be more important for other, nonemotional processes, such as memory (see Chapter 9). With no clear understanding as to why some brain regions and not others are part of the limbic system, MacLean’s concept has proven to be more descriptive and historical than functional in our current understanding of the neural basis of emotion.

Early attempts to identify neural circuits of emotion viewed emotion as a unitary concept that could be localized to one specific circuit, such as the limbic system. Viewing the “emotional brain” as separate from the rest of the brain spawned a locationist view of emotions. The locationist account hypothesizes that all mental states belonging to the same emotion category are produced by activity that is recurrently associated with a specific region in the brain (Figure 10.3). Also, this association is an inherited trait, and homologies are seen in other mammalian species (Panksepp, 1998; for a contrary view, see Lindquist et al., 2012).

Emerging Concepts of Emotional Networks

Over the last several decades, scientific investigations of emotion have become more detailed and complex. By measuring brain responses to emotionally salient stimuli, researchers have revealed a complex interconnected network involved in the analysis of emotional stimuli. This network includes the thalamus, the somatosensory cortex, higher order sensory cortices, the amygdala, the insular cortex (also called the insula), and the medial prefrontal cortex, including the orbitofrontal cortex, ventral striatum, and anterior cingulate cortex (ACC).

Those who study emotion now acknowledge that it is a multifaceted behavior that may vary along a spectrum from basic to more complex: It isn’t captured by one definition or contained within a single neural circuit. Indeed, S.M.’s isolated emotional deficit in fear recognition following bilateral amygdala damage supports the idea that there is no single emotional circuit. Emotion research now focuses on specific types of emotional tasks and on identifying the neural systems underlying specific emotional behaviors. Depending on the emotional task or situation, we can expect different neural systems to be involved. Three possibilities remain open, however. Discrete neural mechanisms and circuits may underlie the different emotion categories. Alternatively, emotions may emerge out of basic operations that are not specific to emotion (the psychological constructionist approach). Or the two may be combined, with some brain systems common to all emotions working alongside separable regions dedicated to processing individual emotions such as fear, anger, and disgust. According to the constructionist approach, the brain does not necessarily function within emotion categories (L. F. Barrett, 2009; S. Duncan & Barrett, 2007; Lindquist et al., 2012; Pessoa, 2008). Instead, the psychological function mediated by an individual brain region is determined, in part, by the network of brain regions it is firing with (A. R. McIntosh, 2004). In this view, each brain network might involve some brain regions that are more or less specialized for emotional processing, along with others that serve many functions, depending on what role a particular emotion plays.
For instance, the dorsomedial prefrontal areas that represent self and others are active across all emotions (Northoff et al., 2005), while brain regions that support attentional vigilance are recruited to detect threat signals; the brain regions that represent the consequence that a stimulus will have for the body are activated for disgust, but not only for disgust. So, just as a definition for emotion is in flux, so too are the anatomical correlates of emotional processing.

TAKE-HOME MESSAGES

- The Papez circuit describes the brain areas that James Papez believed were involved in emotion. They include the hypothalamus, anterior thalamus, cingulate gyrus, and hippocampus. The limbic system includes these structures and the amygdala, orbitofrontal cortex, and portions of the basal ganglia.

- Investigators no longer think there is only one neural circuit of emotion. Rather, depending on the emotional task or situation, we can expect different neural systems to be involved.

Categorizing Emotions

At the core of emotion research is the issue of whether emotions are “psychic entities” that are specific, biologically fundamental, and hardwired with dedicated brain mechanisms (as Darwin supposed). Or, are emotions states of mind that are assembled from more basic, general causes, as William James suggested?

The trouble with the emotions in psychology is that they are regarded too much as absolutely individual things. So long as they are set down as so many eternal and sacred psychic entities, like the old immutable species in natural history, all that can be done with them is reverently to catalogue their separate characters, points, and effects. But if we regard them as products of more general causes (as “species” are now regarded as products of heredity and variation), the mere distinguishing and cataloguing becomes of subsidiary importance. Having the goose which lays the golden eggs, the description of each egg already laid is a minor matter. (James, 1890, p. 449)

James was of the opinion that emotions were not basic, nor were they found in dedicated neural structures, but were the melding of a mélange of psychological ingredients honed by evolution.

As we noted earlier in this chapter, most emotion researchers agree that the response to emotional stimuli is adaptive and composed of three components: a peripheral physiological response (e.g., heart racing), a behavioral response, and the subjective experience (i.e., feelings). What they don’t agree on are the underlying mechanisms. The crux of the disagreement among the different theories of emotion generation involves the timing of these three components and whether cognition plays a role. An emotional stimulus is a stimulus that is highly relevant for the well-being and survival of the observer. Some stimuli, such as predators or dangerous situations, may be threats; others may offer opportunities for betterment, such as food or potential mates. How the status of a stimulus is determined is another issue, as is whether the perception of the emotional stimulus leads to quick automatic processing and stereotyped emotional responses or whether the response is modified by cognition. Next, we discuss the basic versus dimensional categorization of emotion and then look at representatives of the various theories of emotion generation.

Fearful, sad, anxious, elated, disappointed, angry, shameful, disgusted, happy, pleased, excited, and infatuated are some of the terms we use to describe our emotional lives. Unfortunately, our rich language of emotion is difficult to translate into discrete states and variables that can be studied in the laboratory. In an effort to apply some order and uniformity to our definition of emotion, researchers have focused on three primary categories of emotion:

- Basic emotions comprise a closed set of emotions, each with unique characteristics, carved by evolution, and reflected through facial expressions.

- Complex emotions are combinations of basic emotions, some of which may be socially or culturally learned, that can be identified as evolved, long-lasting feelings.

- Dimensions of emotion describe emotions that are fundamentally the same but that differ along one or more dimensions, such as valence (pleasant or unpleasant, positive or negative) and arousal (the intensity of the response, from low to high), in reaction to events or stimuli.

Basic Emotions

We may use delighted, joyful, and gleeful to describe how we feel, but most people would agree that all of these words represent a variation of feeling happy. Central to the hypothesis that basic emotions exist is the idea that emotions reflect an inborn instinct. If a relevant stimulus is present, it will trigger an evolved brain mechanism in the same way, every time. Thus, we often describe basic emotions as being innate and similar in all humans and many animals. As such, basic emotions exist as entities independent of our perception of them. In this view, each emotion produces predictable changes in sensory, perceptual, motor, and physiological functions that can be measured and thus provide evidence that the emotion exists.

Facial Expressions and Basic Emotions For the past 150 years, many investigators have considered facial expressions to be one of those predictable changes. Accordingly, it is believed that research on facial expressions opens an extraordinary window into these basic emotions. This belief is based on the assumption that facial expressions are observable, automatic manifestations that correspond to a person’s inner feelings. Duchenne de Boulogne carried out some of the earliest research on facial expressions. One of his patients was an elderly man who suffered from near-total facial anesthesia. Duchenne developed a technique to electrically stimulate the man’s facial muscles and methodically trigger muscle contractions, and he recorded the results with the newly invented camera (Figure 10.4). He published his findings in The Mechanism of Human Facial Expression (1862). Duchenne believed that facial expressions revealed underlying emotions. Duchenne’s studies influenced Darwin’s work on the evolutionary basis of human emotional behavior, outlined in The Expression of the Emotions in Man and Animals (1873). Darwin had questioned people familiar with different cultures about the emotional lives of these varied cultures. From these discussions, Darwin determined that humans have evolved to have a finite set of basic emotional states, and each state is unique in its adaptive significance and physiological expression. The idea that humans have a finite set of universal, basic emotions was born, and this was the idea that William James protested.

FIGURE 10.4 Duchenne triggering muscle contractions in his patient, who had facial anesthesia.

The study of facial expressions was not taken up again until the 1960s, when Paul Ekman sought evidence for his hypothesis that (a) emotions varied only along a pleasant to unpleasant scale; (b) the relationship between a facial expression and what it signified was learned socially; and (c) the meaning of a particular facial expression varied among cultures. He studied cultures from around the world and discovered that, counter to his early hypothesis, the facial expressions humans use to convey emotion do not vary much from culture to culture. Whether people are from the Bronx, Beijing, or Papua New Guinea, the facial expressions we use to show that we are happy, sad, fearful, disgusted, angry, or surprised are pretty much the same (Ekman & Friesen, 1971; Figure 10.5). From this work, Ekman and others suggested that anger, fear, disgust, sadness, happiness, and surprise are the six basic human facial expressions and that each expression represents a basic emotional state (Table 10.1). Since then, other emotions have been added as potential candidate basic emotions.

Jessica Tracy and David Matsumoto (2008) have provided evidence that might change the rank of pride and shame to that of true basic emotions. They looked at the nonverbal expressions of pride or shame in reaction to winning or losing a judo match at the 2004 Olympic and Paralympic Games in contestants from 37 nations. Among the contestants, some were congenitally blind. Thus, the researchers assumed that in congenitally blind participants, the body language of their behavioral response was not learned culturally. All of the contestants displayed prototypical expressions of pride upon winning (Figure 10.6). Most cultures displayed behaviors associated with shame upon losing, though the response was less pronounced in athletes from highly individualistic cultures. This finding suggested to these researchers that behavior associated with pride and shame is innate and that these two emotions are basic.

FIGURE 10.5 The universal emotional expressions.

The meaning of these facial expressions is similar across all cultures. Can you match the faces to the emotional states of anger, disgust, fear, happiness, sadness, and surprise?

Although considerable debate continues as to whether any single list is adequate to capture the full range of emotional experiences, most scientists accept the idea that all basic emotions share three main characteristics: They are innate, universal, and short-lasting. Table 10.2 lists criteria that some emotion researchers, such as Ekman, believe are common to all basic emotions.

Some basic emotions such as fear and anger have been confirmed in animals, which show dedicated subcortical circuitry for such emotions. Ekman also found that humans have specific physiological reactions for anger, fear, and disgust (see Ekman, 1992, for a review). Consequently, many researchers start with the assumption that everyone, including animals, has a set of basic emotions.

TABLE 10.1 The Well-Established and Possible Basic Emotions According to Ekman (1999)

| Well-established basic emotions | Candidate basic emotions |
| --- | --- |
| Anger | Contempt |
| Fear | Shame |
| Sadness | Guilt |
| Enjoyment | Embarrassment |
| Disgust | Awe |
| Surprise | Amusement |
| | Excitement |
| | Pride in achievement |
| | Relief |
| | Satisfaction |
| | Sensory pleasure |
| | Enjoyment |

Complex Emotions

Even if we accept that basic emotions exist, we are still faced with identifying which emotions are basic and which are complex (Ekman, 1992; Ortigue et al., 2010a). Some commonly recognized emotions, such as jealousy and parental love, are absent from Ekman’s list (see Table 10.1; Ortigue et al., 2010a; Ortigue & Bianchi-Demicheli, 2011). Ekman did not exclude these intense feelings from his list of emotions, but called them “emotion complexes” (see Darwin et al., 1998). He differentiated them from basic emotions as follows: “Parental love, romantic love, envy, or jealousy last for much longer periods—months, years, a lifetime for love and at least hours or days for envy or jealousy” (Darwin et al., 1998, p. 83). Jealousy is one of the most interesting of the complex emotions (Ortigue & Bianchi-Demicheli, 2011). A review of the clinical literature on patients who experienced delusional jealousy following a brain infarct or a traumatic brain injury revealed that delusional jealousy is mediated by more than just the limbic system. A broad network of brain regions is involved, including higher order cortical areas that support social cognition (Chapter 13), theory of mind (Chapter 13), and interpretation of actions performed by others (Chapter 8) (Ortigue & Bianchi-Demicheli, 2011). Clearly, jealousy is a complex emotion.

Similarly, romantic love is far more complicated than researchers initially thought (Ortigue et al., 2010a). (We do have to wonder who ever thought love was not complicated.) Ekman differentiates love from the basic emotions because no universal facial expressions exist for romantic love (see Table 10.1; Sabini & Silver, 2005). As Charles Darwin mentioned, “Although the emotion of love, for instance that of a mother for her infant, is one of the strongest of which the mind is capable, it can hardly be said to have any proper or peculiar means of expression” (Darwin, 1873, p. 215). Indeed, with love we can feel intense feelings and inner thoughts that facial expressions cannot reflect. Love may be described as invisible—though some signs of love, such as kissing and hand-holding, are explicit and obvious (Bianchi-Demicheli et al., 2006, 2010b). The visible manifestations of love, however, are not love per se (Ortigue et al., 2008, 2010b). The recent localization of love in the human brain—within subcortical reward, motivation, and emotion systems as well as higher order cortical brain networks involved in complex cognitive functions and social cognition—reinforces the assumption that love is a complex, goal-directed emotion rather than a basic one (Ortigue et al., 2010a; Bianchi-Demicheli et al., 2006). Complex emotions, such as love and jealousy, are considered to be refined, long-lasting cognitive versions of basic emotions that are culturally specific or individual.

FIGURE 10.6 Athletes from 37 countries exhibit spontaneous pride and shame behaviors.

The graphs compare the mean levels of nonverbal behaviors spontaneously displayed in response to wins and losses by sighted athletes on the top and congenitally blind athletes on the bottom.

TABLE 10.2 Criteria of the Basic Emotions According to Ekman (1994)

- Distinctive universal signals

- Presence in other primates

- Distinctive physiology

- Distinctive universals in antecedent events

- Rapid onset

- Brief duration

- Automatic appraisal

- Unbidden occurrence

NOTE: In 1999, Ekman developed three additional criteria: (1) distinctive appearance developmentally; (2) distinctive thoughts, memories, images; and (3) distinctive subjective experience.

Dimensions of Emotion

Another way of categorizing emotions is to describe them as reactions that vary along a continuum of events in the world, rather than as discrete states. That is, some people hypothesize that emotions are better understood by how arousing or pleasant they may be or by how motivated they make a person feel about approaching or withdrawing from an emotional stimulus.

Valence and Arousal Most researchers agree that emotional reactions to stimuli and events can be characterized by two factors: valence (pleasant–unpleasant or good–bad) and arousal (the intensity of the internal emotional response, high–low; Osgood et al., 1957; Russell, 1979). For instance, most of us would agree that being happy is a pleasant feeling (positive valence) and being angry is an unpleasant feeling (negative valence). If we find a quarter on the sidewalk, however, we would be happy but not really all that aroused. If we were to win $10 million in a lottery, we would be intensely happy (ecstatic) and intensely aroused. Although in both situations we experience something that is pleasant, the intensity of that feeling is certainly different. By using this dimensional approach—tracking valence and arousal—researchers can more concretely assess the emotional reactions elicited by stimuli. Instead of looking for neural correlates of specific emotions, these researchers look for the neural correlates of the dimensions—arousal and valence.
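The dimensional description above can be made concrete with a small sketch. The class name, the numeric scales, and the coordinates assigned to the two examples are all illustrative assumptions, not values from any study; the point is only that two pleasant events can share a valence sign while differing sharply in arousal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AffectiveState:
    """A point in a two-dimensional affect space (hypothetical scales)."""
    label: str
    valence: float  # -1.0 (unpleasant) .. +1.0 (pleasant)
    arousal: float  # 0.0 (calm) .. 1.0 (intense)

    def __post_init__(self):
        # Reject coordinates outside the assumed scales.
        if not -1.0 <= self.valence <= 1.0:
            raise ValueError("valence must lie in [-1, 1]")
        if not 0.0 <= self.arousal <= 1.0:
            raise ValueError("arousal must lie in [0, 1]")


def describe(state: AffectiveState, arousal_cutoff: float = 0.5) -> str:
    """Summarize a state by the sign of its valence and its arousal level."""
    tone = "pleasant" if state.valence >= 0 else "unpleasant"
    level = "high-arousal" if state.arousal >= arousal_cutoff else "low-arousal"
    return f"{level} {tone}"


# Made-up coordinates for the two examples in the text.
quarter = AffectiveState("found a quarter", valence=0.4, arousal=0.2)
lottery = AffectiveState("won the lottery", valence=0.9, arousal=0.95)

print(quarter.label, "->", describe(quarter))
print(lottery.label, "->", describe(lottery))
```

Both events fall on the pleasant side of the valence axis, but only the lottery win crosses the (arbitrary) arousal cutoff, which is the distinction the dimensional approach is meant to capture.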

Approach or Withdraw A second dimensional approach characterizes emotions by the actions and goals that they motivate. Richard Davidson and colleagues (1990) at the University of Wisconsin–Madison suggested that different emotional reactions or states can motivate us to either approach or withdraw from a situation. For example, the positive emotion of happiness may excite a tendency to approach or engage in the eliciting situations, whereas the negative emotions of fear and disgust may motivate us to withdraw from the eliciting situations. Motivation, however, involves more than just valence. Anger, a negative emotion, can motivate approach. Sometimes the motivating stimuli can excite both approach and withdrawal: It is 110 degrees, and for hours you have been traveling across the Australian outback on a bus with no air conditioning. You are hot, sweaty, dirty, and your only desire is to jump into the river you’ve been slowly approaching all day. You are finally dropped off at your campground by the Katherine River, where you see a rope swing dangling invitingly next to the water. You drop your pack and trot to the river, which is stimulating you to approach. As you get closer, you catch a glimpse of a typically Australian sign next to the river’s edge: “Watch out for crocs.” Hmm... the river is no longer as approachable. You want to go in, and yet....

Categorizing emotions as basic, complex, and dimensional does not adequately capture all of our emotional experiences. Think of these categories instead as a framework that we can use in our scientific investigations of emotion. No single approach is correct all of the time, so we must not get drawn into an either-or debate. It is essential, though, to understand how emotion is defined, so that as we analyze specific examples of emotion research, meaningful consensus can emerge from a range of results. Next we examine some of the many theories of how emotions are generated.

TAKE-HOME MESSAGES

- Emotions have been categorized as either basic or complex, or varying along dimensional lines.

- Six basic human facial expressions represent emotional states: anger, fear, disgust, happiness, sadness, and surprise.

- Complex emotions (such as love) may vary conceptually as a function of culture and personal experiences.

- The dimensional approach, instead of describing discrete states of emotion, describes emotions as reactions that vary along a continuum.

Theories of Emotion Generation

As we outlined near the beginning of this chapter, every emotion, following the perception of an emotion-provoking stimulus, has three components. There is a physiological response, a behavioral response, and a feeling. The crux of every theory of emotion generation involves the timing of the physiological reaction (for instance, the racing heart), the behavioral reaction (such as the fight-or-flight response), and the experiential feeling (I’m scared!).

James–Lange Theory

William James proposed that the emotions were the perceptual results of somatovisceral feedback from bodily responses to an emotion-provoking stimulus. He used the example of fear associated with spotting a bear.

Our natural way of thinking about these standard emotions is that the mental perception of some fact excites the mental affection called the emotion, and that this latter state of mind gives rise to the bodily expression. My thesis on the contrary is that the bodily changes follow directly the PERCEPTION of the exciting fact, and that our feeling of the same changes as they occur IS the emotion. Common sense says,... we meet a bear, are frightened and run;... The hypothesis here to be defended says that this order of sequence is incorrect, that the one mental state is not immediately induced by the other, that the bodily manifestations must first be interposed between, and that the more rational statement is that we feel... afraid because we tremble, and not that we... tremble, because we are... fearful, as the case may be. Without the bodily states following on the perception, the latter would be purely cognitive in form, pale, colourless, destitute of emotional warmth. We might then see the bear, and judge it best to run... but we could not actually feel afraid. (James, 1884, p.189)

Thus, in James’s view, you don’t run because you are afraid, you are afraid because you become aware of your bodily change when you run. A similar proposition was suggested by a contemporary of James, Carl Lange, and the theory was dubbed the James–Lange theory.

So Lange and James theorize that

The bear (perception of stimulus) → physiologic reaction (adrenaline released causing increased heart and respiratory rates, sweating, and fight-or-flight response) → automatic, nonconscious interpretation of the physiological response (my heart is beating fast, I am running; I must be afraid) = subjective emotional feeling (scared!).

Thus James and Lange believed that with emotion there is a specific physiological reaction and that people could not feel an emotion without first having a bodily reaction.

Cannon–Bard Theory

James’s proposal caused quite an uproar. A counterproposal was offered several decades later by a pair of physiologists from Harvard, Walter Cannon and Philip Bard. They thought that physiological responses were not distinct enough to distinguish among fear, anger, and sexual attraction, for example. Cannon and Bard also believed that the neuronal and hormonal feedback processes are too slow to precede and account for the emotions. Cannon (who was the first person to describe the fight-or-flight response) thought that the sympathetic nervous system coordinated the reaction while the cortex simultaneously generated the emotional feeling. Cannon found that when he severed the cortex from the brainstem above the hypothalamus and thalamus, cats still had an emotional reaction when provoked. They would growl, bare their teeth, and their hair would stand on end. They had the emotional reaction without cognition. These researchers proposed that an emotional stimulus was processed by the thalamus and sent simultaneously to the neocortex and to the hypothalamus, which produced the peripheral response. Thus the neocortex generated the emotional feeling while the periphery carried out the slower emotional reaction. Returning to the bear-in-the-woods scenario, the Cannon–Bard theory is

The bear → thalamus → (fast) cortex (interpretation: dangerous situation) → scared

The bear → thalamus → (slower) hypothalamus (sympathetic nervous system) → emotional reaction (fight or flight)

Subsequent research, however, refuted some of Cannon’s and Bard’s ideas. For instance, Paul Ekman showed that at least some emotional responses (anger, fear, and disgust) can be differentiated by autonomic activity. The Cannon–Bard theory remains important, however, because it introduced into emotion research the model of parallel processing.

Appraisal Theory

Appraisal theory is a group of theories in which emotional processing is dependent on an interaction between the stimulus properties and their interpretation. The theories differ about what is appraised and the criteria used for this appraisal. Since appraisal is a subjective step, it can account for the differences in how people react. Richard Lazarus proposed a version of appraisal theory in which emotions are a response to the reckoning of the ratio of harm versus benefit in a person’s encounter with something. In this appraisal step, each of us considers personal and environmental variables when deciding the significance of the stimulus for our well-being. Thus, the cause of the emotion is both the stimulus and its significance. The cognitive appraisal comes before the emotional response or feeling. This appraisal step may be automatic and unconscious.

He sees the bear → cognition (A quick risk–benefit appraisal is made: A dangerous wild animal is lumbering toward me, and he is showing his teeth → risk/benefit = high risk/no foreseeable benefit → I am in danger!) → Feels the emotion (he’s scared!) → response (fight or flight).

Singer–Schachter Theory: Cognitive Interpretation of Arousal

You may have read about the experiment in which investigators gave two different groups of participants an injection of adrenaline (Schachter & Singer, 1962). The control group was told that they would experience the symptoms associated with adrenaline, such as a racing heart. The other group was told they had been injected with vitamins and should not experience any side effects. Each of the participants was then placed with a confederate, who was acting in either a euphoric or an angry manner. When later asked how they felt and why, the participants who knowingly received an adrenaline injection attributed their physiological responses to the drug, and those who did not know they had been given adrenaline attributed their symptoms to the environment (the happy or angry confederate) and interpreted their emotion accordingly. The Singer–Schachter theory of emotion generation is based on these findings. The theory is a blend of the James–Lange and appraisal theories. Singer and Schachter proposed that emotional arousal and then reasoning are required to appraise a stimulus before the emotion can be identified.

So they see the bear → physiological reaction (arousal: heart races, ready to run) → cognition (What’s going on? Yikes! We are between a mother and her cub!) = feel the emotion (they’re scared!).
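The attribution logic of the adrenaline experiment can be written as a tiny decision rule. This is a deliberately simplified sketch: the function name and the wording of the returned labels are hypothetical, and the real study measured graded self-reports rather than a two-way outcome.

```python
def attribute_arousal(informed_about_drug: bool, confederate_mood: str) -> str:
    """Return the explanation an aroused participant is assumed to settle on.

    informed_about_drug: True if the participant was told the injection
        would cause symptoms such as a racing heart.
    confederate_mood: how the confederate behaved ("euphoric" or "angry").
    """
    if informed_about_drug:
        # A ready explanation exists, so the arousal is not read as emotion.
        return "symptoms attributed to the injection"
    # No obvious cause, so the situation supplies the emotional label.
    return f"symptoms attributed to the situation: feels {confederate_mood}"


for informed in (True, False):
    for mood in ("euphoric", "angry"):
        print(informed, mood, "->", attribute_arousal(informed, mood))
```

The same physiological state yields different reported emotions depending only on the explanation available, which is the core claim of the theory.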

Constructivist Theories

Constructivist theories suggest that emotion emerges from cognition as molded by our culture and language. A recent and influential constructivist theory is the conceptual act model, proposed by Lisa Barrett. In this theory, emotions are human-made concepts that emerge as we make meaning out of sensory input from the body and from the world. First we form a mental representation of the bodily changes that have been called core affect (Russell, 2003). This representation is then classified according to language-based emotion categories. Barrett suggests that these categories vary with a person’s experience and culture, so there are no empirical criteria for judging an emotion (Barrett, 2006b).

Sensory input (she sees the bear) → physiologic response (her heart races, she feels aroused in a negative way) → her brain calculates all previous bear encounters, episodes of racing heart, degree of arousal, valence, and you name it → categorizes the current reaction in reference to all the past ones and ones suggested by her culture and language → ah, this is an emotion, and I call it fear.

Evolutionary Psychology Approach

Evolutionary psychologists Leda Cosmides and John Tooby proposed that emotions are conductors of an orchestra of cognitive programs that need to be coordinated to produce successful behavior (Cosmides & Tooby, 2000). They suggest that the emotions are an overarching program that directs the cognitive subprograms and their interactions.

From this viewpoint, an emotion is not reducible to any one category of effects, such as effects on physiology, behavioral inclinations, cognitive appraisals, or feeling states, because it involves coordinated, evolved instructions for all of them together. An emotion also involves instructions for other mechanisms distributed throughout the human mental and physical architecture.

They see the bear → possible stalking and ambush situation is detected (a common scenario of evolutionary significance) and automatically activates a hardwired program (that has evolved thanks to being successful in these types of situations) that directs all of the subprograms.

Response: Perception and attention shift automatically; goal and motivations change from a picnic in the woods to stayin’ alive; information-gathering mechanisms are redirected and a change in concepts takes place: looking for the tree as shade for a picnic becomes looking for a tall tree for escape; memory comes on board; communication changes; interpretive systems are activated (did the bear see us? If the answer is no, the people automatically adopt freeze behavior; if it is yes, they scamper); learning systems go on (they may develop a conditioned response to this trail in the future); physiology changes; behavior decision rules are activated (which may be automatic or involuntary) → they run for the tree (whew).

LeDoux’s High Road and Low Road

Joseph LeDoux of New York University has proposed that humans have two emotion systems operating in parallel. One is a neural system for our emotional responses that is separate from a system that generates the conscious feeling of emotion. This emotion-response system is hardwired by evolution to produce fast responses that increase our chances of survival and reproduction. Conscious feelings are irrelevant to these responses and are not hardwired, but learned by experience.

LeDoux sees the bear → fast hardwired fight-or-flight response

LeDoux sees the bear → slow cognition (whoa, that looks suspiciously like an Ursus arctos horribilis, good thing I’ve been keeping in shape) → emotion (feels scared)

LeDoux was one of the first cognitive neuroscientists to study emotions. His research on the role of the amygdala in fear has shown that the amygdala plays a major role in emotional processing in general, not just fear. Researchers know more about the role of the amygdala in emotion than they do about the role of other regions of the brain in emotion.

TAKE-HOME MESSAGES

- Emotions are made up of three psychological components—a physiological response, a behavioral response, and a subjective feeling—that have evolved to allow humans to respond to significant stimuli. The underlying mechanisms and timing of the components are disputed.

- Researchers do not agree on how emotions are generated, and many theories exist.

The Amygdala

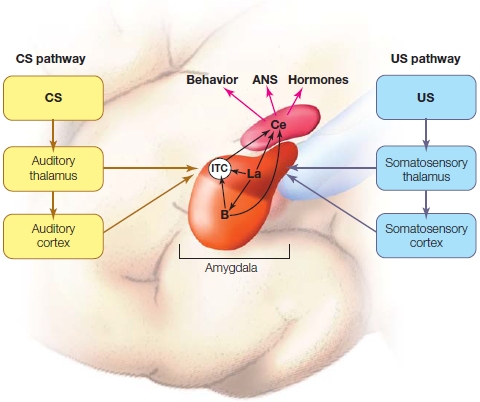

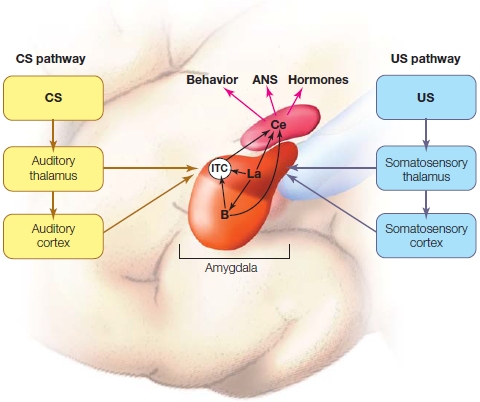

The amygdalae (singular: amygdala) are small, almond-shaped structures in the medial temporal lobe adjacent to the anterior portion of the hippocampus (Figure 10.7a). Each amygdala is an intriguing and complex structure that in primates is a collection of 13 nuclei. There has been some controversy about the concept of “the amygdala” as a single entity, and some neurobiologists consider the amygdala to be neither a structural nor a functional unit (Swanson & Petrovich, 1998). The nuclei can be grouped into three main amygdaloid complexes (Figure 10.7b).

- The largest area is the basolateral nuclear complex, consisting of the lateral, basal, and accessory basal nuclei. The lateral nucleus is the gatekeeper of the amygdala input, receiving inputs from all the sensory systems. The multifaceted basal nucleus is important for mediating instrumental behavior, such as running from bears.

- The centromedial complex consists of the medial nucleus and the central nucleus. The central nucleus is the output region for innate emotional responses including behavioral, autonomic, and endocrine responses. Figure 10.7b depicts some of the inputs and outputs of the lateral (La), basal (B), and central nuclei (Ce).

- The smallest complex is the cortical nucleus, which is also known as the “olfactory part of the amygdala” because its primary input comes from the olfactory bulb and olfactory cortex.

FIGURE 10.7 Location and circuitry of the amygdala.

(a) The left hemisphere amygdala is shown here in its relative position to the lateral brain aspect. It lies deep within the medial temporal lobe adjacent to the anterior aspect of the hippocampus. (b) Inputs and outputs to some of the lateral (La), basal (B), and central nuclei (Ce) of the amygdala. Note that the lateral nucleus is the major site receiving sensory inputs and the central nucleus is thought to be the major output region for the expression of innate emotional responses and the physiological responses associated with them. Output connections of the basal nucleus connect with striatal areas involved in the control of instrumental behaviors.

Structures in the medial temporal lobe were first proposed to be important for emotion in the early 20th century, when Heinrich Klüver and Paul Bucy at the University of Chicago (1939) documented unusual emotional responses in monkeys following damage to this region. One of the prominent characteristics of what later came to be known as Klüver–Bucy syndrome (Weiskrantz, 1956) was a lack of fear manifested by a tendency to approach objects that would normally elicit a fear response. The observed deficit was called psychic blindness because of an inability to recognize the emotional importance of events or objects. In the 1950s, the amygdala was identified as the primary structure underlying these fear-related deficits. When the amygdala of monkeys was lesioned more selectively, monkeys manifested a disproportionate impairment in cautiousness and distrust: They approached novel or frightening objects or potential predators, such as snakes or human strangers. Not just once, they did it again and again, even if they had a bad experience. Once bitten, they were not twice shy. Although humans with amygdala damage do not show all of the classic signs of Klüver–Bucy syndrome, they do exhibit deficits in fear processing, as S.M. demonstrated. She exhibited a lack of cautiousness and distrust (Feinstein et al., 2011), and she too did not learn to avoid what others would term fearful experiences.

While studying the amygdala’s role in fear processing, investigators came to realize that it was important for emotional processing in general, because of its vast connections to many other brain regions. In fact, the amygdala is the veritable Godfather of the forebrain and is its most connected structure. The extensive connections to and from the amygdala reflect its critical roles in learning, memory, and attention in response to emotionally significant stimuli. The amygdala contains receptors for the neurotransmitters glutamate, dopamine, norepinephrine, serotonin, and acetylcholine. It also contains hormone receptors for glucocorticoids and estrogen, and peptide receptors for opioids, oxytocin, vasopressin, corticotropin-releasing factor, and neuropeptide Y. There are many ideas concerning what role the amygdala plays. Luiz Pessoa (2011) boils down the amygdala’s job description by suggesting that it is involved in determining what a stimulus is and what is to be done about it; thus, it is involved in attention, perception, value representation, and decision making. In this vein, Kristen Lindquist and colleagues (2012) have proposed that the amygdala is active when the rest of the brain cannot easily predict what sensations mean, what to do about them, or what value they hold in a given context. The amygdala signals other parts of the brain to keep working until these issues have been figured out (Whalen, 2007). Lindquist’s proposal has been questioned, however, by people who have extensively studied patient S.M. (Feinstein et al., 2011), the woman we met at the beginning of this chapter. S.M. appears to have no deficit in any emotion other than fear. Even without her amygdala, she correctly understands the salience of emotional stimuli, but she has a specific impairment in the induction and experience of fear across a wide range of situations. People who have studied S.M. suggest that the amygdala is a critical brain region for triggering a state of fear in response to encounters with threatening stimuli in the external environment. They hypothesize that the amygdala furnishes connections between sensory and association cortex that are required to represent external stimuli, as well as connections between the brainstem and hypothalamic circuitry, which are necessary for orchestrating the action program of fear. As we’ll see later in this chapter, damage to the lateral amygdala prevents fear conditioning. Without the amygdala, the evolutionary value of fear is lost. For much of the remainder of this chapter, we look at the interplay of emotions and cognitive processes, such as learning, attention, and perception. Although we cannot yet settle the debate on the amygdala’s precise role, we will get a feel for how emotion is involved in various cognitive domains as we learn about the amygdala’s role in emotion processing.

TAKE-HOME MESSAGES

- The amygdala is the most connected structure in the forebrain.

- The amygdala contains receptors for many different neurotransmitters and for various hormones.

- The role that the amygdala plays in emotion is still controversial.

Interactions Between Emotion and Other Cognitive Processes

In previous chapters, we have not addressed how emotion affects the various cognitive processes that have been discussed. We all know from personal experience, however, that this happens. For instance, if we are angry about something, we may find it hard to concentrate on reading a homework assignment. If we are really enjoying what we are doing, we may not notice we are tired or hungry. When we are sad, we may find it difficult to make decisions or carry out any physical activities. In this section, we look at how emotions modulate the information processing involved in cognitive functions such as learning, attention, and decision making.

The Influence of Emotion on Learning

One day, early in the 20th century, Swiss neurologist and psychologist Édouard Claparède greeted his patient and introduced himself. She introduced herself and shook his hand. Not such a great story, until you know that he had done the same thing every day for the previous five years and his patient never remembered him. She had Korsakoff’s syndrome (Chapter 9), characterized by an absence of any short-term memory. One day Claparède concealed a pin in his palm that pricked his patient when they shook hands. The next day, once again, she did not remember him; but when he extended his hand to greet her, she hesitated for the first time. Claparède was the first to provide evidence that two types of learning, implicit and explicit, apparently are associated with two different pathways (Kihlstrom, 1995).

Implicit Emotional Learning

As first noted by Claparède, implicit learning is a type of Pavlovian learning in which a neutral stimulus (the handshake) acquires aversive properties when paired with an aversive event (the pin prick). This process is a classic example of fear conditioning. It is a primary paradigm used to investigate the amygdala’s role in emotional learning. Fear conditioning is a form of classical conditioning in which the unconditioned stimulus is aversive. One advantage of using the fear-conditioning paradigm to investigate emotional learning is that it works essentially in the same way across a wide range of species, from fruit flies to humans. One laboratory version of fear conditioning is illustrated in Figure 10.8.

FIGURE 10.8 Fear conditioning.

(a) Before training, three different stimuli—light (CS), foot shock (US1), and loud noise (US2)—are presented alone, and both the foot shock and the noise elicit a normal startle response in rats. (b) During training, light (CS) and foot shock (US1) are paired to elicit a normal startle response (UR). (c) In tests following training, presentation of light alone now elicits a response (CR), and presentation of the light together with a loud noise but no foot shock elicits a potentiated startle (potentiated CR) because the rat is startled by the loud noise and has associated the light (CS) with the startling foot shock (US).

The light is the conditioned stimulus (CS). In this example, we are going to condition the rat to associate this neutral stimulus with an aversive stimulus. Before training (Figure 10.8a), however, the light is solely a neutral stimulus and does not evoke a response from the rat. In this pretraining stage, the rat will respond with a normal startle response to any innately aversive unconditioned stimulus (US)—for example, a foot shock or a loud noise—that evokes an innate fear response. During training (Figure 10.8b), the light is paired with a shock that is delivered immediately before the light is turned off. The rat has a natural fear response to the shock (usually startle or jump), called the unconditioned response (UR). This stage is referred to as acquisition. After a few pairings of the light (CS) and the shock (US), the rat learns that the light predicts the shock, and eventually the rat exhibits a fear response to the light alone (Figure 10.8c). This anticipatory fear response is the conditioned response (CR).

The CR can be enhanced in the presence of another fearful stimulus or an anxious state, as is illustrated by the potentiated startle reflex exhibited by a rat when it sees the light (the CS) at the same time that it experiences a loud noise (a different US). The CS and resulting CR can become unpaired again if the light (CS) is presented alone, without the shock, for many trials. This phenomenon is called extinction because at this point the CR is considered extinguished (and the rat will again display the same response to light as in Figure 10.8a).
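Acquisition and extinction can be illustrated numerically. The sketch below uses the Rescorla–Wagner learning rule, a standard model of classical conditioning that is not part of this chapter's account; the learning rate and the trial counts are arbitrary assumptions chosen only to make the curve easy to see.

```python
def rescorla_wagner(trials, learning_rate=0.3, start=0.0):
    """Track the associative strength V of the CS across trials.

    trials: iterable of booleans, True when the US (shock) follows the CS
        (light) and False when the light is presented alone.
    Update rule: V <- V + learning_rate * (target - V), where target is 1.0
    on paired trials and 0.0 on CS-alone trials.
    """
    v = start
    history = []
    for us_present in trials:
        target = 1.0 if us_present else 0.0
        v += learning_rate * (target - v)
        history.append(v)
    return history


# Acquisition: ten light + shock pairings.
acquisition = rescorla_wagner([True] * 10)
# Extinction: twenty presentations of the light alone.
extinction = rescorla_wagner([False] * 20, start=acquisition[-1])

print(f"strength after acquisition: {acquisition[-1]:.2f}")
print(f"strength after extinction:  {extinction[-1]:.2f}")
```

In this toy model the association climbs toward its ceiling during pairing and decays toward zero when the light is presented alone. Treating extinction as simple unlearning is a simplification of the model, not a claim about the underlying biology.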

Many responses can be assessed as the CR in this type of fear-learning paradigm, but regardless of the stimulus used or the response evoked, one consistent finding has emerged in rats (and we will soon see that this also holds true in humans): Damage to the amygdala impairs conditioned fear responses. Amygdala lesions block the ability to acquire and express a CR to the neutral CS that is paired with the aversive US.

Two Pathways: The High and Low Roads Using the fear-conditioning paradigm, researchers such as Joseph LeDoux (1996), Mike Davis (1992) of Emory University, and Bruce Kapp and his colleagues (1984) of the University of Vermont have mapped out the neural circuits of fear learning, from stimulus perception to emotional response. As Figure 10.9 shows, the lateral nucleus of the amygdala serves as a region of convergence for information from multiple brain regions, allowing for the formation of associations that underlie fear conditioning. Based on results from single-unit recording studies, it is widely accepted that cells in the superior dorsal lateral amygdala have the ability to rapidly undergo changes that pair the CS to the US. After several trials, however, these cells reset to their starting point; but by then, cells in the inferior dorsal lateral region have undergone a change that maintains the aversive association. This result may be why fear that has seemingly been eliminated can return under stress—because it is retained in the memory of these cells (LeDoux, 2007). The lateral nucleus is connected to the central nucleus of the amygdala. These projections to the central nucleus initiate an emotional response if a stimulus, after being analyzed and placed in the appropriate context, is determined to represent something threatening or potentially dangerous.

FIGURE 10.9 Amygdala pathways and fear conditioning.

Both the CS and US sensory information enter the amygdala through cortical sensory inputs and thalamic inputs to the lateral nucleus. The convergence of this information in the lateral nucleus induces synaptic plasticity, such that after conditioning, the CS information flows through the lateral nucleus and intra-amygdalar connections to the central nucleus just as the US information does. ITC are intercalated cells, which connect the lateral and basal nuclei with the central nucleus.

FIGURE 10.10 The amygdala receives sensory input along two pathways.

When a hiker chances upon a bear, the sensory input activates affective memories through the cortical “high road” and subcortical “low road” projections to the amygdala. Even before these memories reach consciousness, however, they produce autonomic changes, such as increased heart rate and blood pressure, and a startle response such as jumping back. These memories also can influence subsequent actions through the projections to the frontal cortex. The hiker will use this emotion-laden information in choosing his next action: Turn and run, slowly back up, or shout at the bear?

An important aspect of this fear-conditioning circuitry is that information about the fear-inducing stimulus reaches the amygdala through two separate but simultaneous pathways (Figure 10.10; LeDoux, 1996). One goes directly from the thalamus to the amygdala without being filtered by conscious control. Signals sent by this pathway, sometimes called the low road, reach the amygdala rapidly (15 ms in a rat), although the information this pathway sends is crude. At the same time, sensory information about the stimulus is being projected to the amygdala via another cortical pathway, sometimes referred to as the high road. The high road is slower, taking 300 ms in a rat, but the analysis of the stimulus is more thorough and complete. In this pathway, the sensory information projects to the thalamus; then the thalamus sends this information to the sensory cortex for a finer analysis. The sensory cortex projects the results of this analysis to the amygdala. The low road allows for the amygdala to receive information quickly in order to prime, or ready, the amygdala for a rapid response if the information from the high road confirms that the sensory stimulus is the CS. Although it may seem redundant to have two pathways to send information to the amygdala, when it comes to responding to a threatening stimulus, it is adaptive to be both fast and sure. Now we see the basis of LeDoux’s theory of emotion generation (see the earlier section on LeDoux’s high road and low road). After seeing the bear, the person’s faster low road sets in motion the fight-or-flight response, while the slower high road through the cortex provides the learned account of the bear and his foibles.
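The timing relationship between the two pathways can be laid out as a short sketch. Only the two rat latencies come from the text; the function name, the event wording, and the "stand down" outcome for an unconfirmed stimulus are illustrative assumptions.

```python
LOW_ROAD_MS = 15    # thalamus -> amygdala (rat latency given in the text)
HIGH_ROAD_MS = 300  # thalamus -> sensory cortex -> amygdala (rat, from the text)


def amygdala_timeline(coarse_match: bool, detailed_match: bool):
    """Return (time_ms, event) pairs for one stimulus presentation.

    coarse_match: the crude low-road signal resembles the CS.
    detailed_match: the thorough high-road analysis confirms the CS.
    """
    events = []
    if coarse_match:
        events.append((LOW_ROAD_MS, "low road: amygdala primed"))
    if detailed_match:
        events.append((HIGH_ROAD_MS, "high road: CS confirmed, respond"))
    else:
        # Assumed outcome when the finer analysis does not confirm the CS.
        events.append((HIGH_ROAD_MS, "high road: not the CS, stand down"))
    return events


head_start_ms = HIGH_ROAD_MS - LOW_ROAD_MS
print(f"low road head start: {head_start_ms} ms")
for time_ms, event in amygdala_timeline(coarse_match=True, detailed_match=True):
    print(f"{time_ms:>4} ms  {event}")
```

With these figures the crude signal arrives 285 ms before the detailed one, which is the window in which the amygdala can be readied before the cortex has finished its analysis.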

Is the amygdala particularly sensitive to certain categories of stimuli such as animals? Two lines of evidence suggest that it is. The first has to do with what is called biological motion. The visual system extracts subtle movement information from a stimulus that it uses to categorize the stimulus as either animate (having motion characteristic of a biological entity) or inanimate. This ability to recognize biological motion is innate. It has been demonstrated in newborn babies, who will attend to biological motion within the first few days of life (Simion et al., 2008), and it has been identified in other mammals (Blake, 1993). This preferential attention to biological motion is adaptive, alerting us to the presence of other living things. Interestingly, PET studies have shown that the right amygdala is activated when an individual perceives a stimulus exhibiting biological motion (Bonda et al., 1996).

The second line of evidence comes from single-cell recordings from the right amygdala. Neurons in this region have been found to respond preferentially to images of animals. This effect was shown by a group of researchers who did single-cell recordings from the amygdala, hippocampus, and entorhinal cortex in patients who had had electrodes surgically implanted to monitor their epilepsy. The recordings were made as patients looked at images of persons, animals, landmarks, or objects. Neurons in the right amygdala, but not the left, responded preferentially to pictures of animals rather than to pictures of other stimulus categories. There was no difference in the amygdala’s response to threatening or cute animals. This categorical selectivity provides evidence of a domain-specific mechanism for processing this biologically important class of stimuli that includes predators or prey (Mormann et al., 2011).

FIGURE 10.11 Bilateral amygdala lesions in patient S.P.

During a surgical procedure to reduce epileptic seizures, the right amygdala and a large section of the right temporal lobe, including the hippocampus, were removed (circled regions). Pathology in the left amygdala is visible in the white band, indicating regions where cells were damaged by neural disease.

Amygdala’s Effect on Implicit Learning The role of the amygdala in learning to respond to stimuli that have come to represent aversive events through fear conditioning is said to be implicit. This term is used because the learning is expressed indirectly through a behavioral or physiological response, such as autonomic nervous system arousal or potentiated startle. When studying nonhuman animals, we can assess the CR only through indirect, or implicit, means of expression. The rat is startled when the light goes on. In humans, however, we can also assess the response directly, by asking the participants to report if they know that the CS represents a potential aversive consequence (the US). Patients with amygdala damage fail to demonstrate an indirect CR—for instance, they would not shirk Claparède’s handshake. When asked to report the parameters of fear conditioning explicitly or consciously, however, these patients demonstrate no deficit, and might respond with “Oh, the handshake, sure, it will hurt a bit.” Thus, we know that they learned that the stimulus is associated with an aversive event. Damage to the amygdala appears to leave this latter ability intact (A. K. Anderson & Phelps, 2001; Phelps et al., 1998; Bechara et al., 1995; LaBar et al., 1995).

This concept is illustrated by the study of a patient very much like S.M. Patient S.P. also has bilateral amygdala damage (Figure 10.11). To relieve epilepsy, at age 48 S.P. underwent a lobectomy that removed her right amygdala. MRI at that time revealed that her left amygdala was already damaged, most likely from mesial temporal sclerosis, a syndrome that causes neuronal loss in the medial temporal regions of the brain (A. K. Anderson & Phelps, 2001; Phelps et al., 1998). Like S.M., S.P. is unable to recognize fear in the faces of others (Adolphs et al., 1999).

In a study on the role of the amygdala in human fear conditioning, S.P. was shown a picture of a blue square (the CS), which the experimenters periodically presented for 10 s. During the acquisition phase, S.P. was given a mild electrical shock to the wrist (the US) at the end of the 10-s presentation of the blue square (the CS). In measures of skin conductance response (Figure 10.12), S.P.’s performance was as predicted: She showed a normal fear response to the shock (the UR), but no change in response when the blue square (the CS) was presented, even after several acquisition trials. This lack of change in the skin conductance response to the blue square demonstrates that she failed to acquire a CR.


FIGURE 10.12 S.P. showed no skin conductance response to conditioned stimuli.

Unlike control participants, S.P. (red line) showed no response to the blue square (CS) after training but did respond to the shock (the US).


Following the experiment, S.P. was shown her data and that of a control participant, as illustrated in Figure 10.12, and she was asked what she thought. She was somewhat surprised that she showed no change in skin conductance response (the CR) to the blue square (the CS). She reported that she knew after the very first acquisition trial that she was going to get a shock to the wrist when the blue square was presented. She claimed to have figured this out early on and expected the shock whenever she saw the blue square. She was not sure what to make of the fact that her skin conductance response did not reflect what she consciously knew to be true. This dissociation between intact explicit knowledge of the events that occurred during fear conditioning and impaired conditioned responses has been observed in other patients with amygdala damage (Bechara et al., 1995; LaBar et al., 1995).

As discussed in Chapter 9, explicit or declarative memory for events depends on another medial temporal lobe structure: the hippocampus, which, when damaged, impairs the ability to explicitly report memory for an event. When the conditioning paradigm that we described for S.P. was conducted with patients who had bilateral damage to the hippocampus but an intact amygdala, the opposite pattern of performance emerged. These patients showed a normal skin conductance response to the blue square (the CS), indicating acquisition of the conditioned response. When asked what had occurred during conditioning, however, they were unable to report that the presentations of the blue square were paired with the shock, or even that a blue square was presented at all—just like Claparède’s patient.

This double dissociation between patients who have amygdala lesions and patients with hippocampal lesions is evidence that the amygdala is necessary for the implicit expression of emotional learning, but not for all forms of emotional learning and memory. The hippocampus is necessary for the acquisition of explicit or declarative knowledge of the emotional properties of a stimulus, whereas the amygdala is critical for the acquisition and expression of an implicitly conditioned fear response.
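
The logic of a double dissociation can be written out as a small check. The sketch below encodes only the pattern of findings reported above (intact versus impaired); the dictionary layout, key names, and function name are hypothetical and chosen for illustration.

```python
# True = ability intact, False = ability impaired, as reported in the text.
# The dictionary layout and key names are hypothetical.
FINDINGS = {
    "amygdala_lesion": {"conditioned_scr": False, "explicit_report": True},
    "hippocampal_lesion": {"conditioned_scr": True, "explicit_report": False},
}


def is_double_dissociation(group_a: dict, group_b: dict,
                           task_1: str, task_2: str) -> bool:
    """One group fails task 1 but passes task 2; the other shows the reverse."""
    a_pattern = (group_a[task_1], group_a[task_2])
    b_pattern = (group_b[task_1], group_b[task_2])
    return {a_pattern, b_pattern} == {(False, True), (True, False)}


result = is_double_dissociation(
    FINDINGS["amygdala_lesion"],
    FINDINGS["hippocampal_lesion"],
    "conditioned_scr",
    "explicit_report",
)
print(result)
```

A single dissociation (one group impaired on one task only) would not pass this check, which is why the hippocampal patients are needed to complete the argument.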

Explicit Emotional Learning

The double dissociation just described clearly indicates that the amygdala is necessary for implicit emotional learning, but not for explicit emotional learning. This does not mean that the amygdala is uninvolved with explicit learning and memory. How do we know? Let’s look at an example of explicit emotional learning.

Liz is walking down the street in her neighborhood and sees a neighbor’s dog, Fang, on the sidewalk. Even though she is a dog owner herself and likes dogs in general, Fang scares her. When she encounters him, she becomes nervous and fearful, so she decides to walk on the other side of the street. Why might Liz, who likes dogs, be afraid of this particular dog? There are a few possible reasons: For example, perhaps Fang bit her once. In this case, her fear response to Fang was acquired through fear conditioning. Fang (the CS) was paired with the dog bite (the US), resulting in pain and fear (the UR) and an acquired fear response to Fang in particular (the CR).

Liz may fear Fang for another reason, however. She has heard from her neighbor that this is a mean dog that might bite her. In this case she has no aversive experience linked to this particular dog. Instead, she learned about the aversive properties of the dog explicitly. Her ability to learn and remember this type of information depends on her hippocampal memory system. She likely did not experience a fear response when she learned this information during a conversation with her neighbor. She did not experience a fear response until she actually encountered Fang. Thus, her reaction is not based on actual experience with the dog, but rather is anticipatory and based on her explicit knowledge of the potential aversive properties of this dog. This type of learning, in which we learn to fear or avoid a stimulus because of what we are told (as opposed to actually having the experience), is a common example of emotional learning in humans.

The Amygdala’s Effect on Explicit Learning The question is this: Does the amygdala play a role in the indirect expression of the fear response in instructed fear? From what we know about patient S.M., what would you guess? Elizabeth Phelps of New York University and her colleagues (Funayama et al., 2001; Phelps et al., 2001) addressed this question using an instructed-fear paradigm, in which the participant was told that a blue square may be paired with a shock. They found that, even though explicit learning of the emotional properties of the blue square depends on the hippocampal memory system, the amygdala is critical for the expression of some fear responses to the blue square (Figure 10.13a). During the instructed-fear paradigm, patients with amygdala damage were able to learn and explicitly report that some presentations of the blue square might be paired with a shock to the wrist. In truth, though, none of the participants ever received a shock. Unlike normal control participants, however, patients with amygdala damage did not show a potentiated startle response when the blue square was presented. They knew consciously that they might receive a shock, but had no emotional response. Normal control participants showed an increase in skin conductance response to the blue square that was correlated with amygdala activity (Figure 10.13b). These results suggest that, in humans, the amygdala is sometimes critical for the indirect expression of a fear response when the emotional learning occurs explicitly. Similar deficits have been observed when patients with amygdala lesions respond to emotional scenes (Angrilli et al., 1996; Funayama et al., 2001).

Although animal models of emotional learning highlight the role of the amygdala in fear conditioning and the indirect expression of the conditioned fear response, human emotional learning can be much more complex. We can learn that stimuli in the world are linked to potentially aversive consequences in a variety of ways, including instruction, observation, and experience. In whatever way we learn the aversive or threatening nature of stimuli—whether explicit and declarative, implicit, or both—the amygdala may play a role in the indirect expression of the fear response to those stimuli.

Amygdala, Arousal, and Modulation of Memory The instructed-fear studies indicate that when an individual is taught that a stimulus is dangerous, amygdala activity can be influenced by a hippocampal-dependent declarative representation about the emotional properties of stimuli (in short, the memory that someone told you the dog was mean). The amygdala activity subsequently modulates some indirect emotional responses. But is it possible for the reverse to occur? Can the amygdala modulate the activity of the hippocampus? Put another way, can the amygdala influence what you learn and remember about an emotional event?

FIGURE 10.13 Responses to instructed fear.

(a) While performing a task in the instructed-fear protocol, participants showed an arousal response (measured by skin conductance response) consistent with fear to the blue square, which they were told might be linked to a shock. The presentation of the blue square also led to amygdala activation. (b) There is a correlation between the strength of the skin conductance response indicating arousal and the activation of the amygdala.

The types of things we recollect every day are things like where we left the keys, what we said to a friend the night before, or whether we turned the iron off before leaving the house. When we look back on our lives, however, we do not remember these mundane events. We remember a first kiss, being teased by a friend in school, opening our college acceptance letter, or hearing about a horrible accident. The memories that last over time are those of emotional (not just fearful) or important (i.e., arousing) events. These memories seem to have a persistent vividness that other memories lack.

James McGaugh and his colleagues (1992, 1996; Ferry & McGaugh, 2000) at the University of California, Irvine, investigated whether this persistence of emotional memories is related to the action of the amygdala during emotional arousal. An arousal response can influence people’s ability to store declarative or explicit memories. For example, investigators frequently use the Morris water maze task (see Chapter 9) to test a rat’s spatial abilities and memory. McGaugh found that a lesion to the amygdala does not impair the rats’ ability to learn this task under ordinary circumstances. If a rat with a normal amygdala is aroused immediately after training, by either a physical stressor or the administration of drugs that mimic an arousal response, then the rat will show improved retention of this task. The memory is enhanced by arousal. In rats with a lesion to the amygdala, however, this arousal-induced enhancement of memory, rather than memory acquisition itself, is blocked (McGaugh et al., 1996). Using pharmacological lesions to temporarily disable the amygdala immediately after learning also eliminates any arousal-enhanced memory effect (Teather et al., 1998).
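
The pattern of results in these rat studies can be summarized as a toy rule: acquisition does not depend on the amygdala, but the arousal-related boost does. In the sketch below the function name, the parameters, and the numeric values are all hypothetical placeholders, not data from the studies.

```python
def retention(baseline: float, aroused: bool, amygdala_intact: bool,
              boost: float = 0.2) -> float:
    """Toy rule: arousal enhances retention only when the amygdala is intact.

    `baseline` stands for retention from ordinary learning, and `boost`
    is an arbitrary illustrative increment, not a measured effect size.
    """
    bonus = boost if (aroused and amygdala_intact) else 0.0
    return baseline + bonus


BASELINE = 0.5  # arbitrary placeholder value

for aroused in (False, True):
    for intact in (True, False):
        score = retention(BASELINE, aroused, intact)
        print(f"aroused={aroused}, amygdala_intact={intact}: {score}")
```

Only the aroused, intact case rises above baseline, which mirrors the finding that a lesion blocks the enhancement but not the learning itself.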

Two important aspects of this work help us understand the mechanism underlying the role of the amygdala in enhancing declarative memory that has been observed with arousal. The first is that the amygdala’s role is modulatory. The tasks used in these studies depend on the hippocampus for acquisition. In other words, the amygdala is not necessary for learning this hippocampal-dependent task, but it is necessary for the arousal-dependent modulation of memory for this task.