
Any man who can drive safely while kissing a pretty girl is simply not giving the kiss the attention it deserves. ~ Albert Einstein

Chapter 7

Attention

OUTLINE

The Anatomy of Attention

The Neuropsychology of Attention

Models of Attention

Neural Mechanisms of Attention and Perceptual Selection

Attentional Control Networks

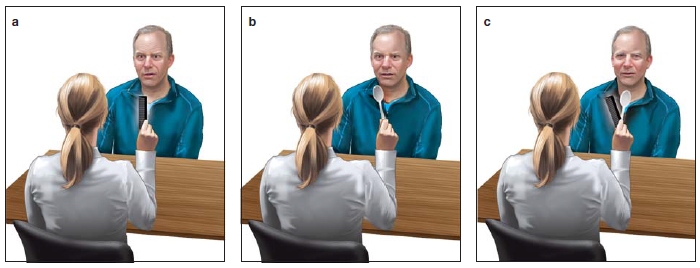

A PATIENT, WHO HAD a severe stroke several weeks earlier, sits with his wife as she talks with his neurologist. Although at first it seemed that the stroke had left him totally blind, his wife states that he can sometimes see things. They are hoping his vision will improve. The neurologist soon realizes that her patient’s wife is correct. The man does have serious visual problems, but he is not completely blind. Taking a comb from her pocket, the doctor holds it in front of her patient and asks him, “What do you see?” (Figure 7.1a).

“Well, I’m not sure,” he replies, “but... oh... it’s a comb, a pocket comb.”

“Good,” says the doctor. Next she holds up a spoon and asks the same question (Figure 7.1b).

After a moment the patient replies, “I see a spoon.”

The doctor nods and then holds up the spoon and the comb together. “What do you see now?” she asks.

He hesitantly replies, “I guess... I see a spoon.”

“Okay...,” she says as she overlaps the spoon and comb in a crossed fashion so they are both visible in the same location. “What do you see now?” (Figure 7.1c). Oddly enough, he sees only the comb. “What about a spoon?” she asks.

“Nope, no spoon,” he says, but then suddenly blurts out, “Yes, there it is, I see the spoon now.”

“Anything else?”

Shaking his head, the patient replies, “Nope.”

Shaking the spoon and the comb vigorously in front of her patient’s face, the doctor persists, “You don’t see anything else, nothing at all?”

He stares straight ahead, looking intently, and finally says, “Yes... yes, I see them now... I see some numbers.”

“What?” says the puzzled doctor. “Numbers?”

“Yes,” he squints and appears to strain his vision, moving his head ever so slightly, and replies, “I see numbers.” The doctor then notices that the man’s gaze is directed to a point beyond her and not toward the objects she is holding. Turning to glance over her own shoulder, she spots a large clock on the wall behind her!

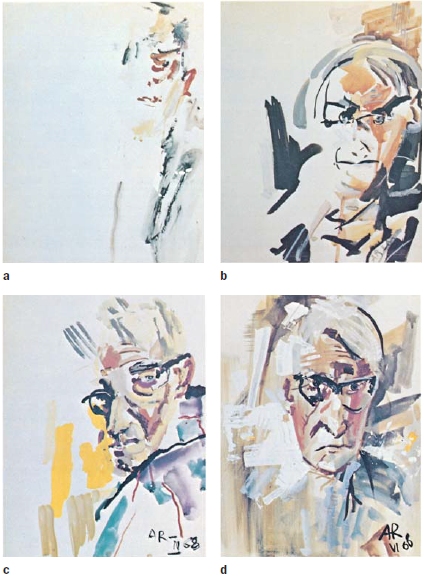

FIGURE 7.1 Examination of a patient recovering from a cortical stroke.

(a) The doctor holds up a pocket comb and asks the patient what he sees. The patient reports seeing the comb. (b) The doctor then holds up a spoon, and the patient reports seeing the spoon too. (c) But when the doctor holds up both the spoon and the comb at the same time, the patient says he can see only one object at a time. The patient has Bálint’s syndrome.

Even though the doctor is holding both objects in one hand directly in front of her patient, overlapping them in space and in good lighting, he sees only one item at a time. That one item may even be a different item altogether: one that merely lies in the direction of his gaze, such as the clock on the wall. The neurologist diagnoses the patient: He has Bálint’s syndrome, first described in the early 20th century by the Hungarian neurologist and psychiatrist Rezső Bálint. It is a severe disturbance of visual attention and awareness, caused by bilateral damage to regions of the posterior parietal and occipital cortex. As a result of this attention disturbance, only one object, or a small subset of the available objects, is perceived at any one time, and the perceived objects are mislocalized in space. The patient can “see” each of the objects presented by the doctor: the comb, the spoon, and even the numbers on the clock. He fails, however, to see them all together and cannot accurately describe their locations with respect to each other or to himself.

Bálint’s syndrome is an extreme pathological instance of what we all experience daily: We are consciously aware of only a small bit of the vast amount of information available to our sensory systems from moment to moment. By looking closely at patients with Bálint’s syndrome and the lesions that cause it, we have come to learn more about how, and upon what, our brain focuses attention. The central problem in the study of attention is how the brain is able to select some information at the expense of other information.

Robert Louis Stevenson wrote, “The world is so full of a number of things, I’m sure we should all be as happy as kings.” Although those things may make us happy, the sheer number of them presents a problem to our perceptual system: information overload. We know from experience that we are surrounded by more information than we can handle and comprehend at any given time. The nervous system, therefore, has to make “decisions” about what to process. Our survival may depend on which stimuli are selected and in what order they are prioritized for processing. Selective attention is the ability to prioritize and attend to some things while ignoring others. What determines the priority? Many things. In many situations, for instance, an optimal strategy is to attend to stimuli that are relevant to current behavior and goals: to survive this class, you need to attend to this chapter rather than your Facebook page. This is goal-driven control (also called top-down control), which is guided by an individual’s current behavioral goals and shaped by learned priorities based on personal experience and evolutionary adaptations. Still, if you hear a loud bang, even while dutifully attending to this book, you reflexively pop your head up and check it out. That is good survival behavior, because a loud noise may presage danger. Such a reaction is stimulus driven and is therefore termed stimulus-driven control (also known as bottom-up or reflexive control), which is much less dependent on current behavioral goals.

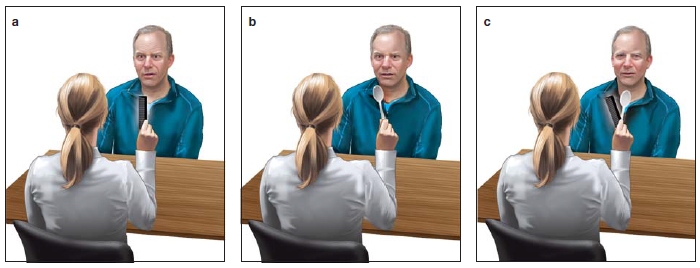

Attention grabbed the attention of William James (Figure 7.2). At the end of the 19th century, this great American psychologist made an astute observation:

Everyone knows what attention is. It is the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought. Focalization, concentration of consciousness are of its essence. It implies withdrawal from some things in order to deal effectively with others, and is a condition which has a real opposite in the confused, dazed, scatterbrain state. (James, 1890)

FIGURE 7.2

William James (1842–1910), the great American psychologist.

In this insightful quote, James has captured key characteristics of attentional phenomena that are under investigation today. For example, his statement “it is the taking possession by the mind” suggests that we can choose the focus of attention; that is, attention can be voluntary. His mention of “one out of what seem several simultaneously possible objects or trains of thought” refers to the inability to attend to many things at once, and hence to the selective aspects of attention. James also raises the idea that attention has limited capacity by noting that “it implies withdrawal from some things in order to deal effectively with others.”

As clear and articulate as James’s writings were, little was known about the behavioral, computational, or neural mechanisms of attention during his lifetime. Since then, knowledge about attention has blossomed, and researchers have identified multiple types and levels of attentive behavior. First, let’s distinguish selective attention from arousal. Arousal refers to the global physiological and psychological state of the organism. Our level of arousal is the point where we fall on the continuum from being hyperaroused (such as during periods of intense fear) to moderately aroused (which must describe your current state as you start to read about the intriguing subject of attention) to groggy (when you first got up this morning) to lightly sleeping to deeply asleep.

Selective attention, on the other hand, is not a global brain state. Instead, it is how—at any level of arousal—attention is allocated among relevant inputs, thoughts, and actions while simultaneously ignoring irrelevant or distracting ones. As shorthand, we will use the term attention when referring to the more specific concept of selective attention. Attention influences how people code sensory inputs, store that information in memory, process it semantically, and act on it to survive in a challenging world. This chapter focuses on the mechanisms of selective attention and its role in perception and awareness.

Mechanisms that determine where and on what our attention is focused are referred to as attentional control mechanisms. They involve widespread, but highly specific, brain networks. These attentional control mechanisms influence specific stages of information processing, where it is said that “selection” of inputs (or outputs) takes place—hence the term selective attention. In this chapter, we first review the anatomical structures involved in attention. Then, we consider how damage to the brain changes human attention and gives us insights into how attention is organized in the brain. Next, we discuss how attention influences sensation and perception. We conclude with a discussion of the brain networks used for attentional control.

TAKE-HOME MESSAGES

The Anatomy of Attention

Our attention system uses interacting subcortical and cortical networks that enable us to selectively process information.

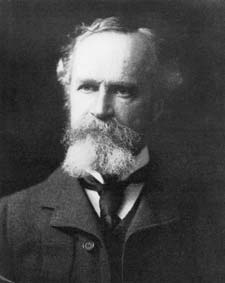

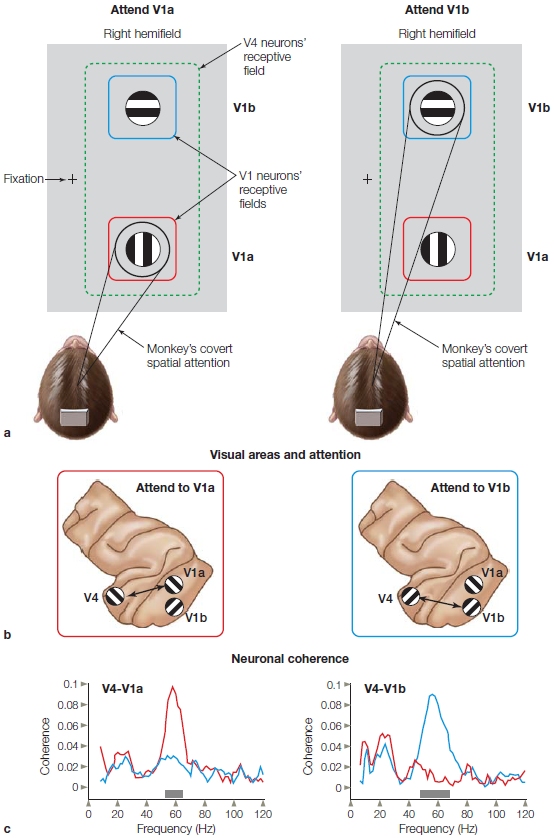

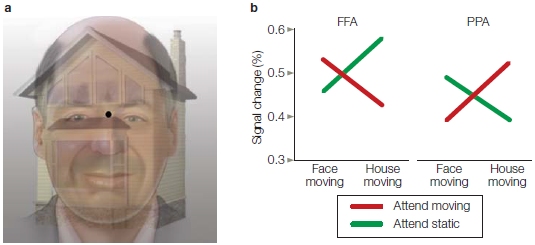

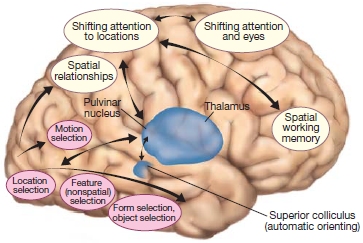

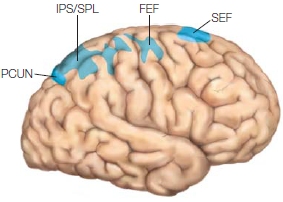

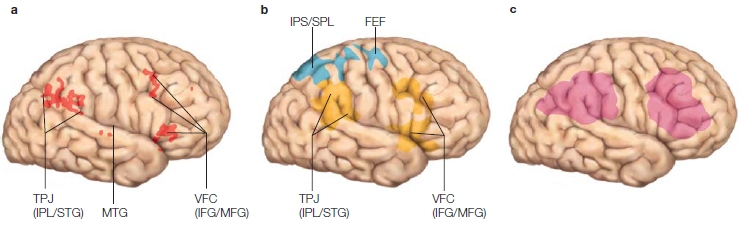

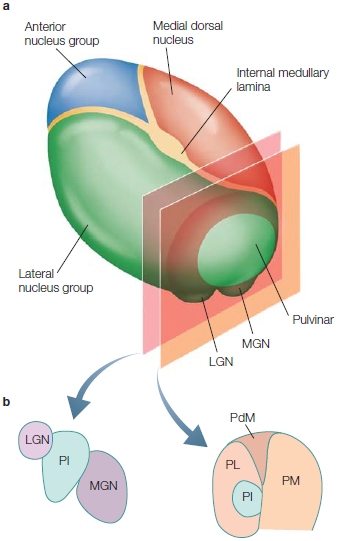

Several subcortical structures are relevant to both attentional control and selection. The superior colliculus in the midbrain and the pulvinar nucleus of the thalamus are involved in aspects of the control of attention. We know that damage to these structures can lead to deficits in the ability to orient overt attention (i.e., eye gaze) and covert attention (i.e., attention directed without changes in eye, head, or body orientation). Within the cortex, several areas are important in attention: portions of the frontal cortex, posterior parietal cortex, and posterior superior temporal cortex, as well as more medial brain structures including the anterior cingulate cortex, the posterior cingulate cortex, and the insula. Cortical and subcortical areas involved in controlling attention are shown in the Anatomical Orientation box. As we will learn, cortical sensory regions are also involved, because attention affects how sensory information is processed in the brain.

The Neuropsychology of Attention

Much of what neuroscientists know about brain attention systems has been gathered by examining patients who have brain damage that influences attentional behavior. Many disorders result in deficits in attention, but only a few provide clues to which brain systems are being affected. Some of the best-known disorders of attention (e.g., attention deficit/hyperactivity disorder, or ADHD) are the result of disturbances in neural processing within brain attention systems. The portions of the brain’s attention networks affected by ADHD have only recently begun to be identified.

In contrast, investigating classic syndromes like “unilateral spatial neglect” (described next) and Bálint’s syndrome has yielded important information about attentional mechanisms and the neuroanatomical systems that support attention. These disorders result from focal brain damage (e.g., stroke) that can be mapped in postmortem analyses and with brain imaging in the living human. Let’s consider how brain damage has helped us understand brain attention mechanisms.

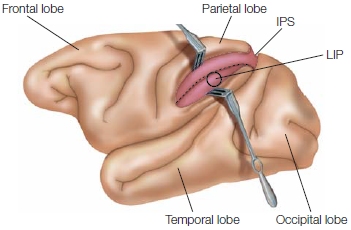

ANATOMICAL ORIENTATION

The anatomy of attention

The major regions of the brain involved in attention are portions of the frontal and parietal lobes, and subcortical structures, including parts of the thalamus and the superior colliculi.

Neglect

Unilateral spatial neglect, or simply neglect, results when the brain’s attention network is damaged in only one hemisphere. The damage typically results from a stroke, and, unfortunately, the condition is quite common. Although either hemisphere can be affected, the more severe and persistent effects occur when the right hemisphere is damaged. Depending on the severity of the damage, its location, and how much time has passed since it occurred, patients may have reduced arousal and slowed processing, as well as an attentional bias toward the side of their lesion (ipsilesional). For example, a right-hemisphere lesion would bias attention toward the right, resulting in neglect of what is going on in the left visual field. Careful testing can show that these symptoms are not the result of partial blindness, as we will describe later. A patient’s awareness of his lesion and deficit can be severely limited or lacking altogether. For instance, patients with right-hemisphere lesions may behave as though the left regions of space and the left parts of objects simply do not exist. If you were to visit a neglect patient and enter the room from the left, he might not notice you. He may have groomed only the right side of his body, leaving half his face unshaved and half his hair uncombed. If you were to serve him dinner, he might eat only what is on the right side of his plate; when handed a book, he might read only the right-hand page. What’s more, he may deny having any problems. Such patients are said to “neglect” the information.

FIGURE 7.3 Recovering from a stroke.

Self-portraits by the late German artist Anton Raederscheidt, painted at different times following a severe right-hemisphere stroke, which left him with neglect to contralesional space. © 2013 Artists Rights Society (ARS), New York/VG Bild-Kunst, Bonn.

A graphic example of neglect is seen in paintings by the late German artist Anton Raederscheidt. At age 67, Raederscheidt suffered a stroke in the right hemisphere, which left him with neglect. The pictures in Figure 7.3 are self-portraits that he painted at different times after the stroke occurred and during his partial recovery. The paintings show his failure to represent portions of contralateral space—including, remarkably, portions of his own face. Notice in the first painting (Figure 7.3a), done shortly after his stroke, that almost the entire left half of the canvas is untouched. The image he paints of himself, in addition to being poorly formed, is missing the left half. The subject has one eye, part of a nose, and one ear; toward the left, the painting fades away. In each of the next three paintings (Figure 7.3b–d), made over the following several weeks and months, Raederscheidt uses more and more of the canvas and includes more and more of his face, until in Figure 7.3d, he uses most of the canvas. He now has a bilaterally symmetrical face, although some minor asymmetries persist in his painting.

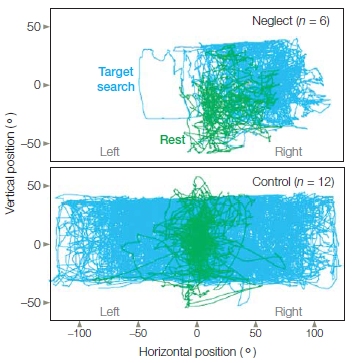

FIGURE 7.4 Gaze bias in neglect patients.

Neglect patients (top) show an ipsilesional gaze bias while searching for a target letter in a letter array (blue traces) and at rest (green traces). Non-neglect patients (bottom) show no bias.

Typically, patients show only a subset of these extreme signs of neglect, and indeed, neglect can manifest itself in different ways. The common thread is that, despite normal vision, neglect involves deficits in attending to and acting in the direction opposite the side of the unilateral brain damage. One way to observe this phenomenon is to look at the patterns of eye movements in patients with neglect. Figure 7.4 (top) shows eye movement patterns in a patient with a right-hemisphere lesion and neglect, both at rest and when searching a bilateral visual array for a target letter. The patient’s eye movements are compared to those of patients with right-hemisphere strokes who showed no signs of neglect (Figure 7.4, bottom). The neglect patient’s eye movements are biased toward the right visual field, whereas the patients without neglect search the entire array, moving their eyes equally to the left and right.

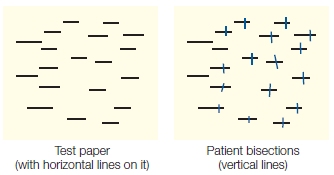

Neuropsychological Tests of Neglect

To diagnose neglect of contralesional space, neuropsychological tests are used. In the line cancellation test, patients are given a sheet of paper containing many horizontal lines and are asked to bisect the lines precisely in the middle by drawing a vertical line. Patients with lesions of the right hemisphere and neglect tend to bisect the lines to the right of the midline. They may also completely miss lines on the left side of the paper (Figure 7.5). In this example, the pattern of line cancellation is evidence of neglect at the level of object representations (each line) as well as visual space (the visual scene represented by the test paper).
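To make the rightward bias in a bisection task concrete, here is a minimal Python sketch of one way such marks could be scored. It assumes that each trial records the horizontal positions (in millimetres) of the line’s two ends and of the patient’s mark, and it expresses the signed error as a percentage of half the line length. The function names and the sample measurements are hypothetical and are not data from Figure 7.5.

```python
def percent_deviation(mark_x: float, left_x: float, right_x: float) -> float:
    """Signed deviation of a bisection mark from the true midpoint,
    as a percentage of half the line length.
    Positive values mean the mark lies to the right of center."""
    if right_x <= left_x:
        raise ValueError("right end must lie to the right of the left end")
    midpoint = (left_x + right_x) / 2
    half_length = (right_x - left_x) / 2
    return 100 * (mark_x - midpoint) / half_length


def mean_bias(trials):
    """Average signed deviation across (mark, left end, right end) trials."""
    scores = [percent_deviation(m, l, r) for m, l, r in trials]
    return sum(scores) / len(scores)


# Hypothetical measurements in millimetres: (mark, left end, right end)
trials = [(130.0, 0.0, 200.0), (125.0, 20.0, 180.0), (110.0, 60.0, 140.0)]
print(mean_bias(trials))  # 28.75
```

With these made-up trials, every mark falls to the right of center, so the mean deviation is positive, the pattern expected after a right-hemisphere lesion; a score near zero would indicate unbiased bisection. Normalizing by half the line length lets lines of different lengths be compared on one scale.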

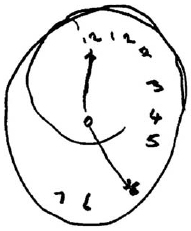

A related test is copying objects or scenes. When asked to copy a simple line drawing, such as a flower or clock face, patients with neglect have difficulty. Figure 7.6 shows an example from a patient with a right-hemisphere stroke who was asked to copy a clock. Like the artist Raederscheidt, the patient cannot draw the entire object and tends to neglect the left side. Even when such patients know and can state that clocks are round and include the numbers 1 to 12, they cannot properly copy the image or draw it from memory.

FIGURE 7.5 Patients with neglect are biased in the cancellation tasks.

Patients suffering from neglect are given a sheet of paper containing many horizontal lines and asked under free-viewing conditions to bisect the lines precisely in the middle with a vertical line. They tend to bisect the lines to the right (for a right-hemisphere lesion) of the midline of each page and/or each line, owing to neglect for contralesional space and the contralesional side of individual objects.

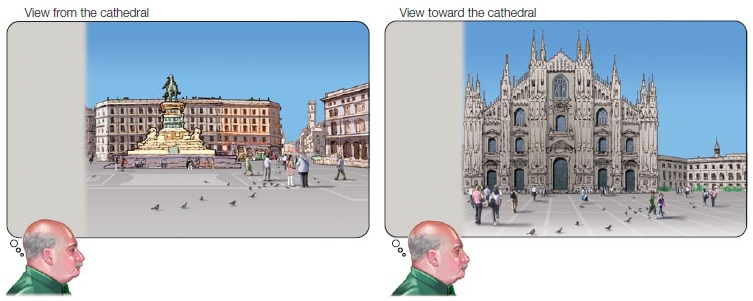

So far, we have considered neglect for items that are actually present in the visual world. But neglect can also affect imagination and memory. Edoardo Bisiach and Claudio Luzzatti (1978) studied patients with neglect caused by unilateral damage to the right hemisphere. They asked their patients, who were from Milan, to imagine themselves standing on the steps of the Milan Cathedral (the Duomo di Milano) and to describe from memory the piazza (church square) as seen from that viewpoint. Amazingly, the patients neglected things on the side of the piazza contralateral to their lesion, just as if they were actually standing there looking at it. When the researchers next asked the patients to imagine themselves standing across the piazza, facing the Duomo, they reported items from visual memory that they had previously neglected, and neglected the side of the piazza that they had just described (Figure 7.7).

FIGURE 7.6 Image drawn by a right-hemisphere stroke patient who has neglect.

See text for details.

Thus, neglect is found for items in visual memory during remembrance of a scene as well as for items in the external sensory world. The key point of the Bisiach and Luzzatti experiment is that the patients’ neglect could not be attributed to missing memories, because the patients reported the previously neglected items once their imagined viewpoint changed. Rather, it indicated that attention to parts of the recalled images was biased.

Extinction

How do we distinguish neglect from blindness in the contralesional visual hemifield? Well, visual field testing can show that neglect patients detect stimuli normally when those stimuli are salient and presented in isolation. For example, when simple flashes of light or the wiggling fingers of a neurologist are shown at different single locations within the visual field of a neglect patient, he can see all the stimuli, even those in the contralesional (neglected) hemifield. This result tells us that the patient does not have a primary visual deficit. The patient’s neglect becomes obvious when he is presented simultaneously with two stimuli, one in each hemifield. In that case, the patient fails to perceive or act on the contralesional stimulus. This result is known as extinction, because the presence of the competing stimulus in the ipsilesional hemifield prevents the patient from detecting the contralesional stimulus. With careful testing, doctors often can see residual signs of extinction even after the most obvious signs of neglect have remitted as a patient recovers. Figure 7.8 shows a neurologist testing the vision of a patient with right parietal damage and revealing his neglect by demonstrating extinction.

FIGURE 7.7 Visual recollections of two ends of an Italian piazza by a neglect patient.

The neglected side in visual memory (shaded gray) was contralateral to the side with cortical damage. The actual study was performed using the famous Piazza del Duomo in Milan.

FIGURE 7.8 Test of neglect and extinction.

A neurologist presented a visual stimulus (raised fingers) to a patient with a right-hemisphere lesion from a stroke, first in the left hemifield (a) and then in the right hemifield (b). The patient correctly detected and responded (by pointing) to the stimuli when they were presented one at a time, demonstrating an ability to see both stimuli and therefore no major visual field defects. When the stimuli were presented simultaneously in the left and right visual fields (c), however, the patient reported seeing only the one in the right visual field. This effect is called extinction because the simultaneous presence of the stimulus in the patient’s right field causes the stimulus on the patient’s left to be extinguished from awareness.

It’s important to realize that these biases against the contralesional sides of space and objects can be overcome if the patient’s attention is directed to the neglected locations or items. This is one reason the condition is described as a bias rather than as a loss of the ability to focus attention contralesionally.

One patient’s comments help us understand how these deficits might feel subjectively: “It doesn’t seem right to me that the word neglect should be used to describe it. I think concentrating is a better word than neglect. It’s definitely concentration. If I am walking anywhere and there’s something in my way, if I’m concentrating on what I’m doing, I will see it and avoid it. The slightest distraction and I won’t see it” (Halligan & Marshall, 1998).

Comparing Neglect and Bálint’s Syndrome

Let’s compare the pattern of deficits in neglect with those of the patient with Bálint’s syndrome, described at the beginning of this chapter. In contrast to the patient with neglect, a Bálint’s patient demonstrates three main deficits that are characteristic of the disorder: simultanagnosia, ocular apraxia, and optic ataxia.

Simultanagnosia is difficulty perceiving the visual field as a whole scene, such as when the patient saw only the comb or the spoon, but not both at the same time. Ocular apraxia is a deficit in making eye movements (saccades) to scan the visual field, resulting in the inability to guide eye movements voluntarily. When the physician overlapped the spoon and comb in space (see Figure 7.1), the Bálint’s patient should have been able, given his direction of gaze, to see both objects, but he could not. Lastly, Bálint’s patients also suffer from optic ataxia, a problem in making visually guided hand movements. If the doctor had asked the Bálint’s patient to reach out and grasp the comb, he would have had a difficult time reaching through space to grasp the object.

Both neglect and Bálint’s syndrome include severe disturbances in perception. The patterns of perceptual deficits are quite different, however, because different brain areas are damaged in each disorder. Neglect results from unilateral lesions of the parietal, posterior temporal, and frontal cortex; it can also be due to damage in subcortical areas, including the basal ganglia, thalamus, and midbrain. Bálint’s patients suffer from bilateral occipitoparietal lesions. Thus, researchers obtain clues about the organization of the brain’s attention system by considering the locations of the lesions that cause these disorders and their differing perceptual and behavioral consequences. Neglect shows us that damage to a network of cortical and subcortical areas, especially in the right hemisphere, results in disturbances of spatial attention. Bálint’s syndrome shows us that posterior parietal and occipital damage in both hemispheres leads to an inability to perceive multiple objects in space at once, which is necessary to create a scene.

What else can we understand about attention by contrasting patients with neglect to those with Bálint’s syndrome? From patients with neglect, we understand that the symptoms involve biases in attention based on spatial coordinates, and that these coordinates can be described in different reference frames. Put another way, neglect can be based on spatial coordinates either with respect to the patient (egocentric reference frame) or with respect to an object in space (allocentric reference frame). This finding tells us that attention can be directed within space and also within objects. Most likely these two types of neglect are guided by different processes. Indeed, the brain mechanisms involved in attending to objects can be affected even when no spatial biases are seen. This phenomenon is seen in patients with Bálint’s syndrome, who have relatively normal visual fields but cannot attend to more than one or a few objects at a time, even when the objects overlap in space.

The phenomenon of extinction in neglect patients suggests that sensory inputs are competitive, because when two stimuli presented simultaneously compete for attention, the one in the ipsilesional hemifield will win the competition and reach awareness. Extinction also demonstrates that after brain damage, patients experience reduced attentional capacity: When two competing stimuli are presented at once, the neglect patient is aware of only one of them.
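The competitive account of extinction can be illustrated with a toy model. The Python sketch below is an assumption-laden illustration, not a model proposed in this chapter: each stimulus’s “drive” is its strength multiplied by an attentional weight for its hemifield; each stimulus receives a share of a limited resource (its drive divided by the total drive plus a small constant, `sigma`); and a stimulus reaches awareness only if its share exceeds a threshold. The weights, `sigma`, and `threshold` values are hypothetical, chosen so that a lesion-induced bias against the left hemifield reproduces the pattern described above.

```python
from typing import Dict


def detected(stimuli: Dict[str, float], weights: Dict[str, float],
             sigma: float = 0.1, threshold: float = 0.4) -> Dict[str, bool]:
    """Toy competition for a limited resource.

    Each stimulus's share is its weighted strength divided by the summed
    weighted strength of all stimuli present (plus sigma). A stimulus is
    'detected' if its share exceeds the threshold.
    """
    drive = {side: weights[side] * strength for side, strength in stimuli.items()}
    total = sigma + sum(drive.values())
    return {side: d / total > threshold for side, d in drive.items()}


# Hypothetical weights: a right-hemisphere lesion halves the attentional
# weight given to the left (contralesional) hemifield.
patient = {"left": 0.5, "right": 1.0}
healthy = {"left": 1.0, "right": 1.0}

print(detected({"left": 1.0}, patient))                # single left stimulus
print(detected({"left": 1.0, "right": 1.0}, patient))  # bilateral: extinction
print(detected({"left": 1.0, "right": 1.0}, healthy))  # bilateral: both seen
```

Presented alone, the weakened left stimulus still wins a large enough share to be detected; when a right stimulus competes with it, the left stimulus falls below threshold while the right one survives. With equal weights, both stimuli are detected, so in this sketch extinction emerges only from the combination of competition and a biased, reduced capacity.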

It is important to note that none of these attentional deficits are the result of damage to the visual system per se, because the patient is not simply blind. These observations from brain damage and resultant attentional problems set the stage for us to consider several questions:

To answer these questions, let’s look next at the cognitive and neural mechanisms of attention.

TAKE-HOME MESSAGES

Models of Attention

When people turn their attention to something, the process is called orienting. The concept of orienting our selective attention can be divided into two categories: voluntary attention and reflexive attention. Voluntary attention is our ability to intentionally attend to something, such as this book. It is a goal-driven process, meaning that goals, knowledge, or expectations are used to guide information processing. Reflexive attention is a bottom-up, stimulus-driven process in which a sensory event—maybe a loud bang, the sting of a mosquito, a whiff of garlic, a flash of light or motion—captures our attention. As we will see later in this chapter, these two forms of attention differ in their properties and perhaps partly in their neural mechanisms.

Attentional orienting also can be either overt or covert. We all know what overt attention is—when you turn your head to orient toward a stimulus, whether it is for your eyes to get a better look, your ears to pick up a whisper, or your nose to sniff the frying bacon—you are exhibiting overt attention. You could appear to be reading this book, however, while actually paying attention to the two students whispering at the table behind you. This behavior is covert attention.

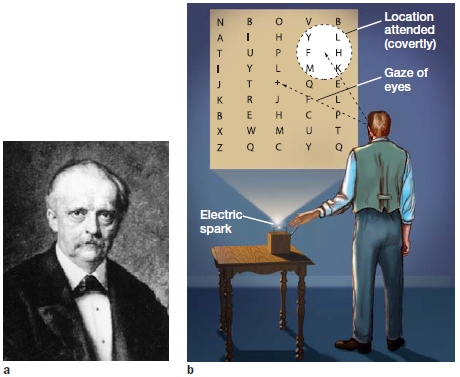

Hermann von Helmholtz and Covert Attention

In 1894, Hermann von Helmholtz (Figure 7.9a) performed a fascinating experiment in visual perception. He constructed a screen on which letters were painted at various distances from the center (Figure 7.9b). He hung the screen at one end of his lab and then turned off all the lights to create a completely dark environment. Helmholtz then used an electric spark to make a flash of light that briefly illuminated the screen. His goal was to investigate aspects of visual processing when stimuli were briefly perceived. As often happens in science, however, he stumbled on an interesting phenomenon.

FIGURE 7.9 Helmholtz’s visual attention experiment.

Helmholtz noted that the screen was too large to view in its entirety without moving his eyes. Nonetheless, even when he kept his eyes fixed right at the center of the screen, he could decide in advance where he would pay attention: He made use of his covert attention. As we noted in the introduction to this section, covert means that the location he directed his attention toward could be different from the location toward which he was looking. Through these covert shifts of attention, Helmholtz observed that during the brief period of illumination, he could perceive letters located within the focus of his attention better than letters that fell outside the focus of his attention, even when his eyes remained directed toward the center of the screen.

Try this yourself using Figure 7.9. Hold the textbook 12 inches in front of you and stare at the plus sign in the center of Helmholtz’s array of letters. Now, without moving your eyes from the plus sign, read out loud the letters closest to the plus sign in a clockwise order. You have covertly focused on the letters around the plus sign. As Helmholtz wrote in his Treatise on Physiological Optics (translated into English in 1924), “These experiments demonstrated, so it seems to me, that by a voluntary kind of intention, even without eye movements, and without changes of accommodation, one can concentrate attention on the sensation from a particular part of our peripheral nervous system and at the same time exclude attention from all other parts.”

In the mid-20th century, experimental psychologists began to develop methods for quantifying the influence of attention on perception and awareness. Models of how the brain’s attention system might work were built from these data, from observations like those of Helmholtz, and from everyday experiences, such as attending a Super Bowl party.

The Cocktail Party Effect

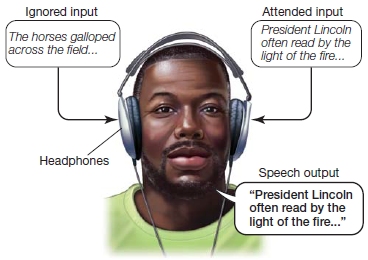

Imagine yourself at a Super Bowl party having a conversation with a friend. How can you focus on this single conversation while the TV is blasting and boisterous conversations are going on around you? British psychologist E. C. Cherry (1953) wondered the same thing while attending cocktail parties. His curiosity and subsequent research helped to found the modern era of attention studies, with what was dubbed the cocktail party effect.

Selective auditory attention allows you to participate in a conversation at a busy bar or party while ignoring the rest of the sounds around you. By selectively attending, you can perceive the signal of interest amid the other noises. If, however, the person you are conversing with is boring, then you can give covert attention to a conversation going on behind you while still seeming to focus on the conversation in front of you (Figure 7.10).

FIGURE 7.10 Auditory selective attention in a noisy environment.

The cocktail party effect of Cherry (1953), illustrating how, in the noisy, confusing environment of a cocktail party, people are able to focus attention on a single conversation, and, as the man in the middle right of the cartoon illustrates, to covertly shift attention to listen to a more interesting conversation than the one in which they continue to pretend to be engaged.

Cherry investigated this ability by designing a cocktail party in the lab. Normal participants wearing headphones listened to competing speech inputs to the two ears, a setup referred to as dichotic listening. Cherry then asked the participants to attend to and verbally “shadow” the speech (immediately repeat each word) coming into one ear, while simultaneously ignoring the input to the other ear. Cherry discovered that under such conditions, participants could report few, if any, details of the speech in the unattended ear (Figure 7.11). In fact, all they could reliably report from the unattended ear was whether the speaker was male or female. Attention, in this case voluntary attention, affected what was processed. This finding led Cherry and others to propose that attention to one ear results in better encoding of the inputs to the attended ear and loss or degradation of the unattended inputs to the other ear. You experience this type of thing when the person sitting next to you in lecture whispers a juicy tidbit in your ear. A moment later, you realize that you just missed what the lecturer said, although you could just as well have heard him with your other ear. As foreshadowed by William James, information processing bottlenecks seem to occur at stages of perceptual analysis that have a limited capacity. What gets processed are the high-priority inputs that you selected. Many processing stages take place between the time sound reaches your eardrum and the time you become aware of speech. At which of these stages do bottlenecks exist, such that attention is needed to favor the attended over the unattended signals?

FIGURE 7.11 Dichotic listening study setup.

Different auditory information (a different story) is presented to each ear of a participant. The participant is asked to “shadow” (immediately repeat) the auditory stimuli from one ear’s input (e.g., shadow the left-ear story and ignore the right-ear input).

This question has been difficult to answer. It has led to one of the most debated issues in psychology over the past five decades. Are the effects of selective attention evident early in sensory processing or only later, after sensory and perceptual processing are complete? Think about this question differently: Does the brain faithfully process all incoming sensory inputs to create a representation of the external world, or can processes like attention influence sensory processing? Is what you perceive a combination of what is in the external world and what is going on inside your brain? By “going on inside your brain,” we mean what your current goals may be, and what knowledge is stored in your brain. Consider the example in Figure 7.12. The first time you look at this image, you won’t see the Dalmatian dog in the black-and-white scene; you cannot perceive it easily. Once it is pointed out to you, however, you perceive the dog whenever you are shown the picture. Something has changed in your brain, and it is not simply the knowledge that this is a photo of a dog: the dog jumps out at you, even when you forget having seen the photo before. This is an example of the knowledge stored in your brain influencing your perception. Perhaps it is not either-or; it may be that attention affects processing at many steps along the way from sensory transduction to awareness.

FIGURE 7.12 Dalmatian illusion.

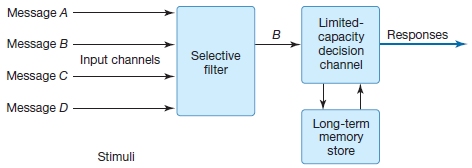

Early Versus Late Selection Models

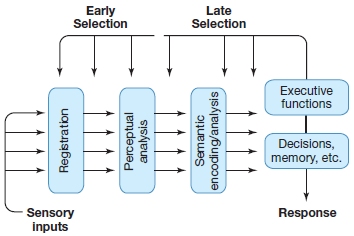

Cambridge University psychologist Donald Broadbent (1958) elaborated on the idea that the information processing system has a limited-capacity stage or stages through which only a certain amount of information can pass (Figure 7.13)—that is, a bottleneck, as hinted at by the writings of James and the experiments of Cherry. In Broadbent’s model, the sensory inputs that can enter higher levels of the brain for processing are screened so that only the “most important,” or attended, events pass through. Broadbent described this mechanism as a gate that could be opened for attended information and closed for ignored information. Broadbent argued for information selection early in the information processing stream. Early selection, then, is the idea that a stimulus can be selected for further processing, or it can be tossed out as irrelevant before perceptual analysis of the stimulus is complete.

FIGURE 7.13 Broadbent’s model of selective attention.

In this model, a gating mechanism determines what limited information is passed on for higher level analysis. The gating mechanism shown here takes the form of descending influences on early perceptual processing, under the control of higher order executive processes. The gating mechanism is needed at stages where processing has limited capacity.

In contrast, models of late selection hypothesize that all inputs are processed equally by the perceptual system. Selection follows to determine what will undergo additional processing, and perhaps what will be represented in awareness. The late-selection model implies that attentional processes cannot affect our perceptual analysis of stimuli. Instead, selection takes place at higher stages of information processing that involve internal decisions about whether the stimuli should gain complete access to awareness, be encoded in memory, or initiate a response. (The term decisions in this context refers to nonconscious processes, not conscious decisions made by the observer.) Figure 7.14 contrasts the stages at which early and late selection operate.

FIGURE 7.14 Early versus late selection of information processing.

This conceptualization is concerned with the extent of processing that an input signal might attain before it can be selected or rejected by internal attentional mechanisms. Early-selection mechanisms of attention would influence the processing of sensory inputs before the completion of perceptual analyses. In contrast, late-selection mechanisms of attention would act only after the complete perceptual processing of the sensory inputs, at stages where the information had been recoded as a semantic or categorical representation (e.g., “chair”).

The original “all or none” early-selection models, exemplified by gating models, quickly ran into a problem. Cherry observed in his cocktail party experiments that salient information from the unattended ear was sometimes consciously perceived, for example, when the listener’s own name or something very interesting was mentioned in a nearby conversation. A simple gating mechanism, which assumed that ignored information was completely lost, could not explain this finding. Anne Treisman (1969), now at Princeton University, proposed that unattended channel information was not completely blocked from higher analysis but was degraded or attenuated instead, a point Broadbent agreed with. Thus, early-selection and late-selection models were modified to make room for the possibility that information in the unattended channel could reach higher stages of analysis, but with greatly reduced signal strength. To test these competing models of attention, researchers employed increasingly sensitive methods for quantifying the effects of attention. Their methods included chronometric analysis, the analysis of the time course of information processing at millisecond-to-millisecond resolution, as described next.
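The difference between an all-or-none gate and an attenuator can be made concrete with a toy model. The Python sketch below is illustrative only: the gain values are hypothetical, and the idea that a salient word such as one’s own name needs less input to be recognized is an assumption added here (it is commonly associated with Treisman’s attenuation account but is not spelled out in this passage). With a gate, the unattended channel’s gain is zero, so nothing gets through; with an attenuator, a weakened signal can still cross a low threshold.

```python
# Toy comparison of a Broadbent-style all-or-none gate with a
# Treisman-style attenuator. All numbers are hypothetical.


def channel_gain(attended: bool, model: str) -> float:
    """Gain applied to a channel before higher-level analysis."""
    if attended:
        return 1.0
    if model == "gate":
        return 0.0  # unattended input is blocked completely
    if model == "attenuator":
        return 0.2  # unattended input is weakened, not blocked (assumed value)
    raise ValueError(f"unknown model: {model}")


# Hypothetical recognition thresholds: a personally salient word (the
# listener's own name) is assumed to need less input than an ordinary word.
THRESHOLDS = {"own_name": 0.1, "ordinary_word": 0.5}


def reaches_awareness(word: str, attended: bool, model: str, strength: float = 1.0) -> bool:
    """True if the input, after the channel gain, meets the word's threshold."""
    return strength * channel_gain(attended, model) >= THRESHOLDS[word]


for model in ("gate", "attenuator"):
    for word in ("own_name", "ordinary_word"):
        print(model, word, reaches_awareness(word, attended=False, model=model))
```

In this toy, only the attenuator lets the listener’s own name through from the unattended ear, which is the pattern the gating model could not explain.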

Quantifying the Role of Attention in Perception

One way of measuring the effect of attention on information processing is to examine how participants respond to target stimuli under differing conditions of attention. Various experimental designs have been used for these explorations, and we describe some of them later in this chapter. One popular method is to provide cues that direct the participant’s attention to a particular location or target feature before presenting the task-relevant target stimulus. In these so-called cuing tasks, the focus of attention is manipulated by the information in the cue.

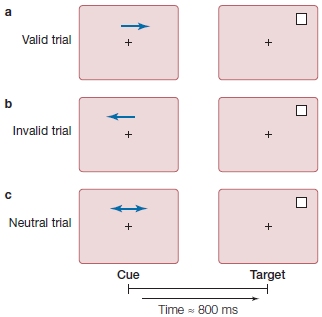

In cuing studies of voluntary spatial attention, participants are presented with a cue that directs their attention to one location on a video screen (Figure 7.15). Next, a target stimulus is flashed onto the screen at either the cued location or another location. Participants may be asked to press a button as fast as they can following the presentation of a target stimulus to indicate that it occurred; or they may be asked to respond to something about the stimulus, such as, “was it red or blue?” Such designs can provide information on how long it takes to perform the task (reaction time or response time), how accurately the participant performs the task, or both. In one version of this experiment, participants are instructed that although the cue, such as an arrow, will indicate the most likely location of the upcoming stimulus, they are to respond to the target wherever it appears. The cue, therefore, predicts the location of the target on most trials (a trial is one presentation of the cue and subsequent target, along with the required response). This form of cuing is known as endogenous cuing. Here, the orienting of attention to the cue is driven by the participant’s voluntary compliance with the instructions and the meaning of the cue, rather than merely by the cue’s physical features (see Reflexive Attention, later in this chapter, for a contrasting mechanism).

FIGURE 7.15 The spatial cuing paradigm popularized by Michael Posner and colleagues at the University of Oregon.

A participant sits in front of a computer screen, fixates on the central cross, and is told never to move their gaze from the cross. An arrow cue indicates which visual hemifield the participant should covertly attend to. The cue is then followed by a target (the white box) in either the correctly cued (a) or the incorrectly cued (b) location. On other trials (c), the cue (e.g., double-headed arrow) tells the participant that it is equally likely that the target will appear in the right or left location.

When a cue correctly predicts the location of the subsequent target, we say we have a valid trial (Figure 7.15a). If the relation between cue and target is strong (that is, the cue usually predicts the target location, say, 90% of the time), then participants learn to use the cue to predict the next target’s location. Sometimes, though, the target is presented at a location not indicated by the cue; the participant is then misled, and we call this an invalid trial (Figure 7.15b). Finally, the researcher may include some cues that give no information about the most likely location of the impending target; we call this situation a neutral trial (Figure 7.15c).
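A cuing experiment’s trial list can be sketched as follows, assuming 90% cue validity as in the example above. The function name `make_cuing_trials`, the left/right layout, and the trial counts are hypothetical choices for illustration, not a description of Posner’s actual protocol. The sketch fixes the exact number of valid and invalid trials rather than drawing each trial independently, so the stated validity holds exactly within every block.

```python
import random


def make_cuing_trials(n_cued, n_neutral, validity=0.9, seed=0):
    """Return a shuffled list of (cue, target_side, trial_type) tuples.

    Directional cues ("left"/"right") point to the target's side on a fixed
    proportion `validity` of cued trials; neutral cues ("both") carry no
    location information. All parameter values are hypothetical.
    """
    rng = random.Random(seed)
    n_valid = round(n_cued * validity)
    trials = []
    for i in range(n_cued):
        cue = rng.choice(("left", "right"))
        other = "right" if cue == "left" else "left"
        if i < n_valid:
            trials.append((cue, cue, "valid"))      # target where the cue points
        else:
            trials.append((cue, other, "invalid"))  # target on the uncued side
    for _ in range(n_neutral):
        trials.append(("both", rng.choice(("left", "right")), "neutral"))
    rng.shuffle(trials)  # interleave trial types so they cannot be anticipated
    return trials


trials = make_cuing_trials(n_cued=100, n_neutral=20)
```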

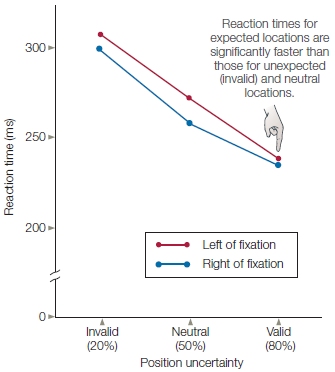

In cuing studies of voluntary attention, the time between the presentation of the attention-directing cue and the presentation of the subsequent target might be very brief or last up to a second or more. When participants are not permitted to move their eyes to the cued spot, but the cue correctly predicts the target’s location, participants respond faster than when neutral cues are given (Figure 7.16). This faster response demonstrates the benefits of attention. In contrast, reaction times are slower than on neutral trials when the target appears at an unexpected location, revealing the costs of attention. If the participants are asked to discriminate some feature of the target, then benefits and costs of attention can be expressed in terms of accuracy instead of, or in addition to, reaction time measures.
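Benefits and costs are typically expressed relative to the neutral condition. The minimal Python sketch below assumes that definition (benefit = neutral RT minus valid RT; cost = invalid RT minus neutral RT); the reaction times are made-up numbers, not data from Figure 7.16.

```python
from statistics import mean


def costs_and_benefits(rts):
    """Return (benefit, cost) in ms from per-condition reaction times.

    benefit = mean neutral RT - mean valid RT   (speed-up from a correct cue)
    cost    = mean invalid RT - mean neutral RT (slow-down from a misleading cue)
    """
    neutral = mean(rts["neutral"])
    benefit = neutral - mean(rts["valid"])
    cost = mean(rts["invalid"]) - neutral
    return benefit, cost


# Hypothetical reaction times (ms), invented for illustration only.
example = {
    "valid": [250, 260, 255],
    "neutral": [280, 285, 290],
    "invalid": [305, 310, 315],
}
print(costs_and_benefits(example))
```

With these invented values the cue speeds responses by 30 ms on valid trials and slows them by 25 ms on invalid trials.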

FIGURE 7.16 Quantification of spatial attention using behavioral measures.

Results of the study by Posner and colleagues illustrated in Figure 7.15, as shown by reaction times to unexpected, neutral, and expected location targets for the right and left visual hemifields.

Benefits and costs of attention have been attributed to the influence of covert attention on the efficiency of information processing. According to some theories, such effects result when the predictiveness of the cue induces the participants to direct their covert attention internally—a sort of mental “spotlight” of attention—to the cued visual field location. The spotlight is a metaphor to describe how the brain may attend to a spatial location. Because participants are typically required to keep their eyes on a central fixation spot on the viewing screen, internal or covert mechanisms must be at work.

Among others, University of Oregon professor Michael Posner and his colleagues (1980) suggested that this attentional spotlight affects reaction times by influencing sensory and perceptual processing: Stimuli that appear at an attended location are processed faster than stimuli that appear at an unattended location. This enhancement of attended stimuli, a type of early selection, suggests that changes in perceptual processing can happen when the participant is attending to a stimulus location. Now you might be thinking, “Ahhh, wait a minute there, fellas... responding more quickly to a target appearing at an attended location does not imply that the target was more efficiently processed in our visual cortex (early selection). These measures of reaction time, or behavioral measures more generally, are not measures of specific stages of neural processing. They provide only indirect measures. These time effects could solely reflect events going on in the motor system.” Exactly. Can we be sure that the perceptual system actually is responsible? To determine whether changes in attention truly affected perceptual processing, researchers turned to cognitive neuroscience methods in combination with the voluntary cuing paradigm.

TAKE-HOME MESSAGES

Neural Mechanisms of Attention and Perceptual Selection

Although most of the experiments discussed in this chapter focus on the visual system and, hence, on visual attention, this should not be taken to suggest that attention is only a visual phenomenon. Selective attention operates in all sensory modalities. In fact, it was investigations of the auditory system, spurred on by curiosity about the cocktail party effect, that led to the first round of cognitive neuroscience studies looking at the effect of attention on perceptual selection. These early studies made it clear that attention did affect early processing of perceptual stimuli, but not without some bumps in the road. Take a look at the How the Brain Works: Attention, Arousal, and Experimental Design box before we proceed to the land of visual attention.

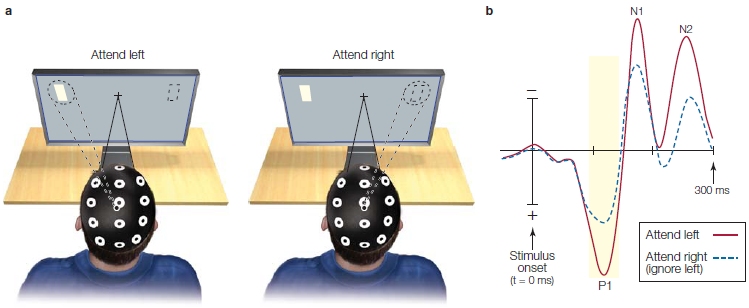

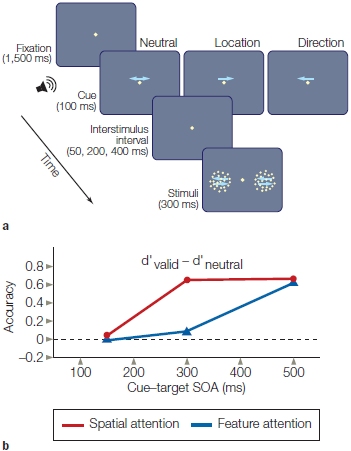

After these early auditory ERP studies were conducted (heeding Näätänen’s precautions discussed in the box), vision researchers became interested in studying the effects of attention on their favorite sense. They wanted to know whether attention affected visual processing and, if so, when and where during processing it occurred. We begin with research on voluntary visual-spatial attention. Visual spatial attention involves selecting a stimulus on the basis of its spatial location. It can be voluntary, such as when you look at this page, or it can be reflexive, as when you glance up after being distracted by motion or a flash of light.

Voluntary Spatial Attention

Cortical Attention Effects

Neural mechanisms of visual selective attention have been investigated using cuing paradigm methods, which we have just described. In a typical experiment, participants are given instructions to covertly (without diverting gaze from a central fixation spot) attend to stimuli presented at one location (e.g., right field) and ignore those presented at another (e.g., left field) while event-related potential (ERP) recordings are made (see Chapter 3, page 100).

HOW THE BRAIN WORKS

Attention, Arousal, and Experimental Design

Since the turn of the 19th century, scientists have known that the ascending auditory pathway carries two-way traffic. Each neural relay sends axons to the auditory cortex and also sends return axons back to the preceding processing stage, even out to the cochlea via the olivocochlear bundle (OCB). Because this appears to be a sign of top-down communication in the auditory system, researchers have investigated whether the behavioral effects of attention, like those revealed in dichotic listening studies, might be the result of gating that occurs very early in auditory processing, such as in the thalamus, brainstem, or even all the way back to the cochlea.

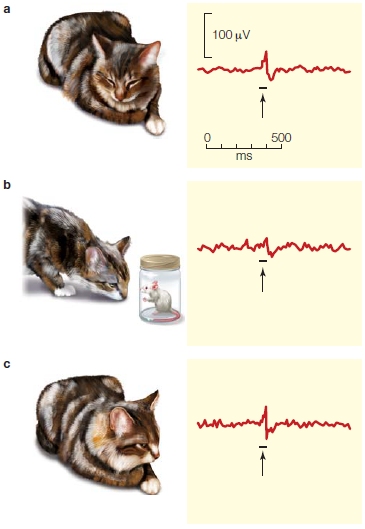

The esteemed Mexican neurophysiologist Raul Hernández-Peón and his colleagues (1956) attempted to determine whether phenomena like the cocktail party effect might result from a gating of auditory inputs in the ascending auditory pathways. They recorded the activity in neurons within the subcortical auditory pathway of a cat while it was passively listening to the sound from a speaker (Figure 1a). They compared those results with recordings from the same cat when it was ignoring the sound coming from the speaker. How did they know the cat was ignoring the sounds? They showed mice to the cat, thereby attracting its visual attention (Figure 1b). They found that the amplitude of activity of neurons in the cochlear nucleus was reduced when the animal attended to the mice—apparently strong evidence for early-selection theories of attention.

Unfortunately, these particular experiments suffered fatal flaws: factors other than selective attention could explain the results. The cat, being a cat, was more aroused once it spotted a mouse, and because a speaker was used to present the stimuli instead of little cat headphones, movements of the ears led to changes in the amplitudes of the signals between conditions. Hernández-Peón and his colleagues had failed to control for differences either in the state of arousal or in the amplitude of the sound at the cat’s ears.

FIGURE 1 Early study of the neurophysiology of attention.

A sound was played to a cat through a loudspeaker under three conditions while recordings from the cochlear nucleus in the brainstem were obtained. (a) While the animal sits passively in the cage listening to sounds, the evoked response from the cochlear nucleus is robust. (b) The animal’s attention is attracted away from the sounds that it is hearing to visual objects of interest (a mouse in a jar). (c) The animal is once again resting and passively hearing sounds. The arrows indicate the responses of interest, and the horizontal lines indicate the onsets and offsets of the sounds from the loudspeaker.

These problems have two solutions, and both are necessary. One solution is to introduce experimental controls that match arousal between conditions of attention. The other is to carefully control the stimulus properties by rigorously monitoring ear, head, and eye positions.

In 1969, a Finnish psychologist, Risto Näätänen, laid out the theoretical issues that have to be addressed to permit a valid neurophysiological test of selective attention. Among the issues he noted were that the experimental design had to be able to distinguish between simple behavioral arousal (low state of arousal vs. high state of arousal) and truly selective attention (e.g., attending one source of relevant sensory input while simultaneously ignoring distracting events).

Indeed, when Hernández-Peón’s students repeated the 1956 experiment and carefully avoided changes in the sound amplitude at the ear, they found no subcortical differences between the neural responses to attended and ignored sounds.

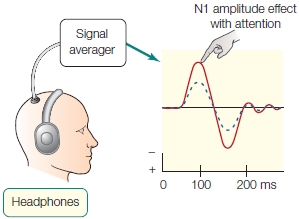

The first physiological studies to control for both adjustments of peripheral sensory organs and nonspecific effects of behavioral arousal were conducted on humans by Steven Hillyard and his colleagues (1973) at the University of California, San Diego. ERPs were recorded because they provide a precise temporal record of underlying neural activity, and the ERP waves are related to different aspects of sensory, cognitive, and motor processing. Hillyard presented streams of sounds through headphones worn by volunteers. Ten percent of the sounds were deviant tones that differed in pitch. During one condition, participants were asked to attend to and count the number of higher pitched tones in one ear while ignoring those in the other (e.g., attend to right-ear sounds and ignore left-ear sounds). In a second condition, they were asked to pay attention to the stimuli in the other ear (e.g., attend to left-ear sounds and ignore right-ear sounds). In this way the researchers separately obtained auditory ERPs to stimuli entering one ear when input to that ear was attended and when it was ignored (while attending the other ear). The significant design feature of the experiment was that, during the two conditions of attention, the participants were always engaged in a difficult attention task, thus controlling for differing arousal states. All that varied was the direction of covert attention: the ear to which participants directed their attention. Figure 2 shows that the auditory sensory ERPs had a larger amplitude for attended stimuli, providing evidence that sensory processing was modulated by attention. This result supported early-selection models and provided a physiological basis for the cocktail party effect. Note also that participants heard the sounds through headphones, which avoided the problem of differing sound strength at the eardrum that occurred in the cat studies of Hernández-Peón.
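An ERP is extracted by averaging many stimulus-locked epochs of EEG, so that activity unrelated to the stimulus tends to cancel while the time-locked response remains. The Python sketch below uses simulated data only and mimics the logic of Hillyard’s comparison: responses to the same tone are averaged separately for the attended and the ignored condition, and the N1 is measured as the mean voltage in a window around 100 ms. The waveform shape, noise level, trial counts, the 80 to 120 ms window, and the attended/ignored amplitudes are all assumptions chosen for illustration.

```python
import math
import random

TIMES_MS = list(range(300))  # assumed epoch: 0 to 299 ms after tone onset, 1 sample/ms


def simulated_epoch(n1_amplitude_uv, rng, noise_sd_uv=10.0):
    """One hypothetical single trial: an N1-like negative peak at ~100 ms
    buried in Gaussian noise that is larger than the response itself."""
    return [
        -n1_amplitude_uv * math.exp(-((t - 100) ** 2) / (2 * 15 ** 2))
        + rng.gauss(0.0, noise_sd_uv)
        for t in TIMES_MS
    ]


def average_erp(epochs):
    """Average stimulus-locked epochs sample by sample."""
    n = len(epochs)
    return [sum(epoch[i] for epoch in epochs) / n for i in range(len(TIMES_MS))]


def mean_amplitude(erp, start_ms=80, end_ms=120):
    """Mean voltage in a latency window (more negative = larger N1)."""
    window = [v for t, v in zip(TIMES_MS, erp) if start_ms <= t <= end_ms]
    return sum(window) / len(window)


rng = random.Random(1)
# Assumed effect: the same tone evokes a larger N1 when its ear is attended.
attended = average_erp([simulated_epoch(5.0, rng) for _ in range(400)])
ignored = average_erp([simulated_epoch(3.0, rng) for _ in range(400)])
print(f"N1 attended: {mean_amplitude(attended):.2f} uV, ignored: {mean_amplitude(ignored):.2f} uV")
```

A single simulated trial is dominated by noise; only after averaging does the attention-related difference in N1 amplitude become visible.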

FIGURE 2 Event-related potentials in a dichotic listening task.

The solid line represents the idealized average voltage response to an attended input over time; the dashed line, the response to an unattended input. Hillyard and colleagues found that the amplitude of the N1 component was enhanced when attending to the stimulus compared to ignoring the stimulus.

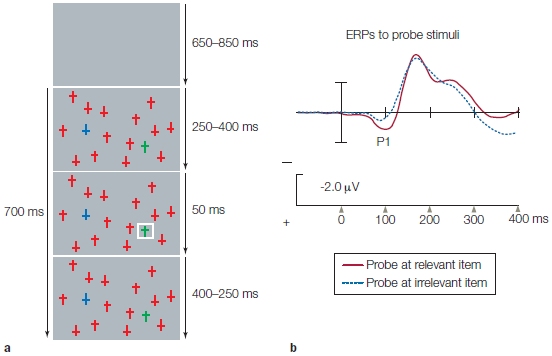

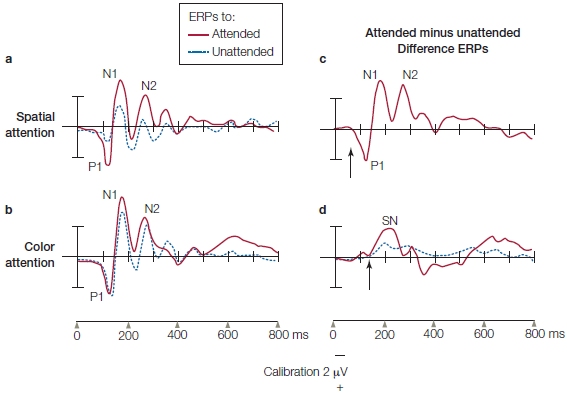

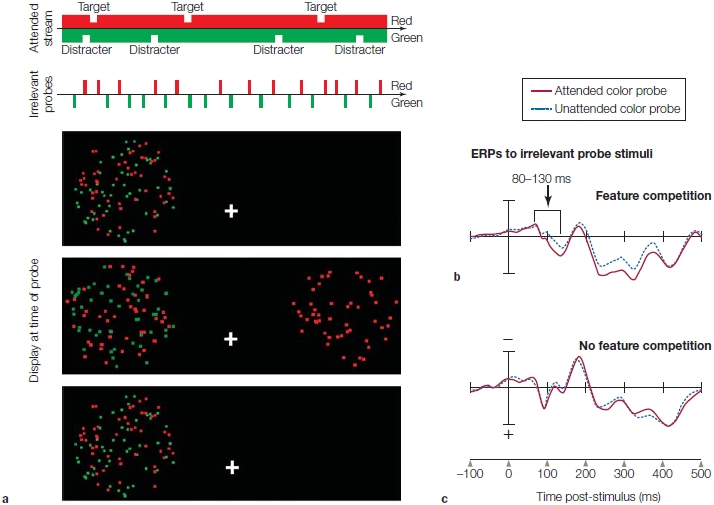

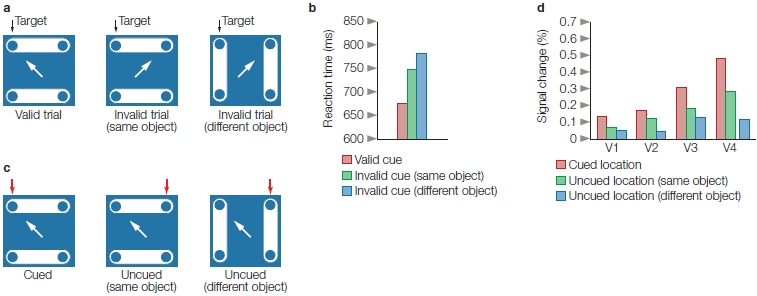

In a typical ERP recording from a stimulus in one visual field (Figure 7.17), the first big ERP wave is a positive one that begins at 60–70 ms and peaks at about 100 ms (P1; first trough in Figure 7.17b) over the contralateral occipital cortex. It is followed by a negative wave that peaks at 180 ms (N1; Figure 7.17b). Modulations in the visual ERPs due to attention begin as early as 70–90 ms after stimulus onset and thus affect the P1 wave (Eason et al., 1969; Van Voorhis & Hillyard, 1977). When a visual stimulus appears at a location to which a participant is attending, the P1 is larger in amplitude than when the same stimulus appears at the same location but attention is focused elsewhere (Figure 7.17b). This is consistent with the attention effects observed in studies of auditory and tactile selective attention, in which attention also modulates sensory responses.
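Component amplitudes and latencies are often measured by finding the largest deflection of the expected polarity within a latency window. The sketch below assumes a noise-free toy waveform (not real data) with a P1 peaking at 100 ms and an N1 at 180 ms, and a hypothetical 60 to 140 ms search window. The sign convention of the data does not depend on plotting: although Figure 7.17b plots positive voltage downward, the P1 is still the largest positive value in the data.

```python
import math


def peak_in_window(times_ms, erp_uv, start_ms, end_ms, polarity=+1):
    """Return (latency_ms, amplitude_uv) of the largest deflection of the given
    polarity (+1 for a positive component such as P1, -1 for N1) in a window."""
    candidates = [(polarity * v, t, v)
                  for t, v in zip(times_ms, erp_uv) if start_ms <= t <= end_ms]
    if not candidates:
        raise ValueError("no samples in window")
    _, latency, amplitude = max(candidates)
    return latency, amplitude


times = list(range(300))


def toy_erp(p1_gain):
    """Hypothetical noise-free waveform: P1 peaking at 100 ms, N1 at 180 ms."""
    return [p1_gain * 2.0 * math.exp(-((t - 100) ** 2) / (2 * 12 ** 2))
            - 3.0 * math.exp(-((t - 180) ** 2) / (2 * 20 ** 2)) for t in times]


p1_attended = peak_in_window(times, toy_erp(1.5), 60, 140)    # assumed larger P1
p1_unattended = peak_in_window(times, toy_erp(1.0), 60, 140)
print(p1_attended, p1_unattended)
```

In this toy, attention changes the P1’s amplitude but not its latency, which is how the spatial attention effect on the P1 is usually described.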

This effect of visual attention primarily occurs with manipulations of spatial attention and not when attention is focused selectively on the features (e.g., one color vs. another) or object properties (e.g., car keys vs. wallet) of stimuli alone. Attention effects for the more complex tasks of feature attention or object attention are observed later in the ERPs (greater than 120-ms latency—but see Figure 7.38 and related text). We describe these effects later in the chapter when we discuss attention to stimulus features. Thus, it seems that spatial attention has the earliest effect on stimulus processing. This early influence of spatial attention may be possible because retinotopic mapping of the visual system means that the brain encodes space very early—as early as at the retina—and space is a strong defining feature of relevant versus irrelevant environmental events.

Where, within the visual sensory hierarchy, are these earliest effects of selective visuospatial attention taking place and what do they represent? The P1 attention effect has a latency of about 70 ms from stimulus onset, and it is sensitive to changes in physical stimulus parameters, such as location in the visual field and stimulus luminance. We’ve learned from intracranial recordings that the first volleys of afferent inputs into striate cortex (V1) take place with a latency longer than 35 ms, and that early visual cortical responses are in the same latency range as the P1 response. Taken together, these clues suggest that the P1 wave is a sensory wave generated by neural activity in the visual cortex, and therefore, its sensitivity to spatial attention supports early selection models of attention. We know from Chapter 3, however, that ERPs represent the summed electrical responses of tens of thousands of neurons, not single neurons. This combined response produces a large enough signal to propagate through the skull to be recorded on the human scalp. Can the effect of attention be detected in the response of single visual neurons in the cortex? For example, let’s say your attention wanders from the book and you look out your window to see if it is still cloudy and WHAT??? You jerk your head to the right to get a double take. A brand new red Maserati Spyder convertible is sitting in your driveway. As a good neuroscientist, you immediately think, “I wonder how my spatial attention, focused on this Maserati, is affecting my neurons in my visual cortex right now?” rather than “What the heck is a Maserati doing in my driveway?”

FIGURE 7.17 Stimulus display used to reveal physiological effects of sustained, spatial selective attention.

(a) The participant fixates on the central crosshairs while stimuli are flashed to the left (shown in figure) and right fields. (left panel) The participant is instructed to covertly attend to the left stimuli, and ignore those on the right. (right panel) The participant is instructed to ignore the left stimuli and attend to the right stimuli. Then the responses to the same physical stimuli, such as the white rectangle flashed to the left visual hemifield in the figure, are compared when they are attended and ignored. (b) Sensory ERPs recorded from a single right occipital scalp electrode in response to the left field stimulus. The waveform shows a series of characteristic positive and negative voltage deflections over time, called ERP components. Notice that positive voltage is plotted downward. The component names reflect their voltage (P = positive; N = negative) and their order of appearance (e.g., 1 = first deflection). Attended stimuli (red trace) elicit ERPs with greater amplitude than do unattended stimuli (dashed blue trace).

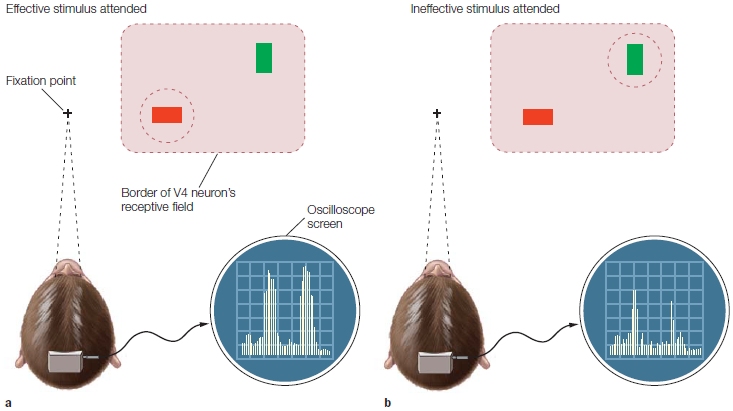

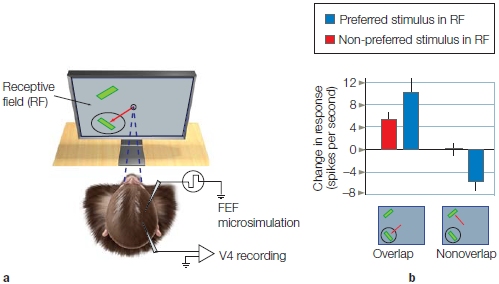

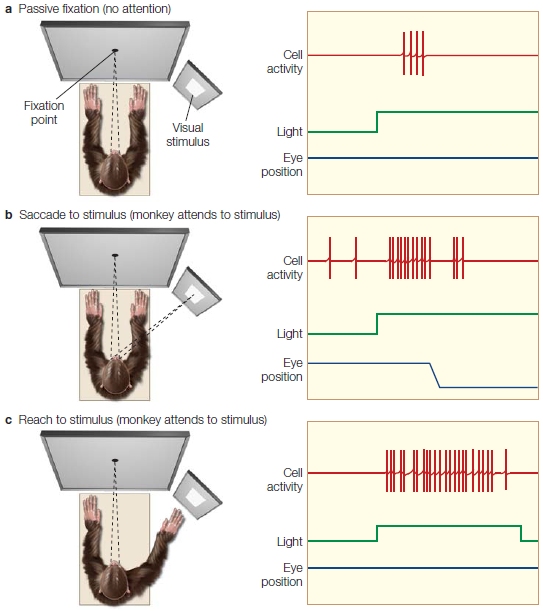

Jeff Moran and Robert Desimone (1985) revealed the answer to this question (the former, not the latter). The scientists investigated how visuospatial selective attention affected the firing rates of individual neurons in the visual cortex of monkeys. Using single-cell recording, they first recorded and characterized the responses of single neurons in extrastriate visual area V4 (a ventral stream area) to figure out what regions of the visual field they coded (receptive field location) and which specific stimulus features the neurons responded to most vigorously. The team found, for example, that neurons in V4 fired more robustly in response to one single-colored, oriented bar stimulus (e.g., a red horizontal bar) than to another (e.g., a green vertical bar). Next, they simultaneously presented the preferred (red horizontal) and non-preferred (green vertical) stimuli near each other in space, so that both stimuli were within the region of the visual field that defined the neuron’s receptive field. The researchers had previously trained the monkeys, over a period of several months, to fixate on a central spot on a monitor, to covertly attend to the stimulus at one location in the visual field, and to perform a task related to it while ignoring the other stimulus. Responses of single neurons were recorded and compared under two conditions: when the monkey attended the preferred (red horizontal bar) stimulus, and when it instead attended the non-preferred (green vertical bar) stimulus that was located a short distance away. Because the two stimuli (attended and ignored) were positioned in different locations, the task can be characterized as a spatial attention task. How did attention affect the firing rate of the neurons?

When the red stimulus was attended, it elicited a stronger response (more action potentials fired per second) in the V4 neuron that preferred red horizontal bars than when the red stimulus was ignored while the monkey attended the green vertical bar at another location. Thus, spatial selective attention affected the firing rates of V4 neurons (Figure 7.18). As with ERPs in humans, the activity of single visual cortical neurons is modulated by spatial attention.
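Firing rate, the measure used here, is the number of action potentials in an analysis window divided by the window’s duration, averaged over trials. A minimal sketch follows; the spike times are invented for illustration and the 0 to 200 ms analysis window is an assumption.

```python
def firing_rate(trials, start_s, end_s):
    """Mean firing rate (spikes/s) in [start_s, end_s) across trials.

    Each trial is a list of spike times in seconds relative to stimulus onset.
    """
    if end_s <= start_s or not trials:
        raise ValueError("need a positive window and at least one trial")
    n_spikes = sum(1 for spikes in trials for t in spikes if start_s <= t < end_s)
    return n_spikes / ((end_s - start_s) * len(trials))


# Hypothetical spike times (s) for one neuron, invented for illustration only.
attend_preferred = [[0.03, 0.06, 0.09, 0.12, 0.15, 0.24],
                    [0.05, 0.08, 0.11, 0.14, 0.17, 0.19]]
attend_nonpreferred = [[0.08, 0.16, 0.24], [0.11, 0.21]]

print(firing_rate(attend_preferred, 0.0, 0.2), firing_rate(attend_nonpreferred, 0.0, 0.2))
```

Spikes outside the window (such as those at 0.21 and 0.24 s) are excluded, so the result depends on the window chosen; comparisons between attention conditions should use the same window.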

Several studies have replicated the attention effects observed by Moran and Desimone in V4 and have extended this finding to other visual areas, including later stages of the ventral pathway in the inferotemporal region. In addition, work in dorsal-stream visual areas has demonstrated effects of attention in the motion processing areas MT and MST of the monkey. Researchers also investigated whether attention affected even earlier steps in visual processing—in primary visual cortex (V1), for example.

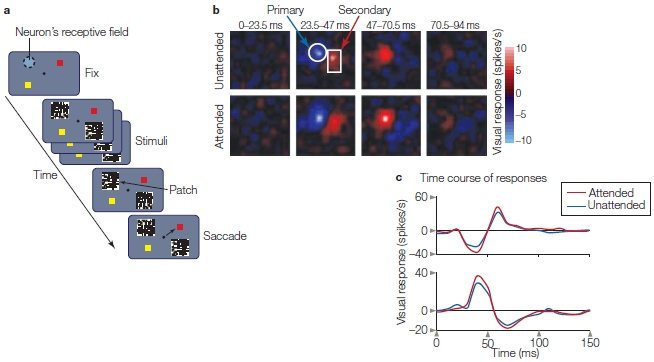

Carrie McAdams and Clay Reid (2005), at Harvard Medical School, carried out experiments to determine which level of processing within V1 was influenced by attention. Recall from Chapter 6 that many stages of neural processing take place within a visual area, and in V1 different neurons display characteristic receptive-field properties—some are called simple cells, others complex cells, and so on. Simple cells exhibit orientation tuning and respond to contrast borders (like those found along the edge of an object). Simple cells are also relatively early in the hierarchy of neural processing in V1—so, if attention were to affect them, this would be further evidence of how early in processing, and by what mechanism, spatial attention acts within V1.

McAdams and Reid trained monkeys to fixate on a central point and covertly attend a black-and-white flickering noise pattern in order to detect a small, colored pixel that could appear anywhere within the pattern (Figure 7.19a). When the monkeys detected the color, they were to signal this by making a rapid eye movement (a saccade) from fixation to the location on the screen that contained that color. The attended location would be positioned either over the receptive field of the V1 neuron they were recording or in the opposite visual field. Thus, the researchers could evaluate responses of the neuron when that region of space was attended and when it was ignored (in different blocks). They also could use the flickering noise pattern to create a spatiotemporal receptive-field map (Figure 7.19b) showing regions of the receptive field that were either excited or inhibited by light. In this way, the researchers could first determine whether the neuron had the properties of simple cells. They could also see whether attention affected the firing pattern and receptive-field organization. What did they come up with? They found that spatial attention enhanced the responses of the simple cells but did not affect the spatial or temporal organization of their receptive fields (Figure 7.19c).
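A spatiotemporal receptive-field map like the one in Figure 7.19b can be estimated by reverse correlation: correlating the neuron’s response with the noise stimulus that preceded it at each time lag. The Python sketch below is a simplified, hypothetical simulation, not McAdams and Reid’s analysis: a model simple cell (one row of pixels, three lags) with an excitatory and an inhibitory subregion is driven by flickering ±1 noise, and attention is modeled as a multiplicative gain on the output. That gain model is an assumption chosen to mirror the reported result. The map is divided by the number of frames (not by the summed response), so its amplitude keeps the response’s scale.

```python
import random

N_PIX, N_LAGS = 8, 3

# Hypothetical simple-cell filter, FILTER[lag][pixel]: pixels 2-3 excitatory,
# pixels 4-5 inhibitory, strongest one frame after the stimulus (lag 1).
PROFILE = (0, 0, 1, 1, -1, -1, 0, 0)
FILTER = [[w * p for p in PROFILE] for w in (0.3, 1.0, 0.5)]


def make_noise(n_frames, rng):
    """Flickering black/white noise: every pixel is -1 or +1 on each frame."""
    return [[rng.choice((-1, 1)) for _ in range(N_PIX)] for _ in range(n_frames)]


def responses(stim, gain):
    """Rectified linear model neuron; `gain` multiplies its output."""
    out = []
    for t in range(N_LAGS - 1, len(stim)):
        drive = sum(FILTER[lag][x] * stim[t - lag][x]
                    for lag in range(N_LAGS) for x in range(N_PIX))
        out.append((t, gain * max(0.0, drive)))
    return out


def receptive_field_map(stim, resp):
    """Reverse correlation: for each lag and pixel, average the product of the
    response and the stimulus that preceded it by that lag."""
    rf = [[0.0] * N_PIX for _ in range(N_LAGS)]
    for t, r in resp:
        for lag in range(N_LAGS):
            for x in range(N_PIX):
                rf[lag][x] += r * stim[t - lag][x]
    return [[v / len(resp) for v in row] for row in rf]


rng = random.Random(0)
stim = make_noise(5000, rng)
rf_ignored = receptive_field_map(stim, responses(stim, gain=1.0))
rf_attended = receptive_field_map(stim, responses(stim, gain=1.5))  # assumed gain
```

Under this gain model every entry of `rf_attended` is 1.5 times the matching entry of `rf_ignored`: the subregions sit in the same places and peak at the same lag, and only the amplitude differs. Reusing one noise sequence for both conditions is a simplification; in the experiment, attended and unattended conditions were run in different blocks.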

FIGURE 7.18 Spatial attention modulates activity of V4 neurons.

The areas circled by dashed lines indicate the attended locations for each trial. A red bar is an effective sensory stimulus, and a green bar is an ineffective sensory stimulus for this neuron. The neuronal firing rates are shown to the right of each monkey head. The first burst of activity is to the cue, and the second burst in each image is to the target array. (a) When the animal attended to the red bar, the V4 neuron gave a good response. (b) When the animal attended to the green bar, a poor response was generated.

FIGURE 7.19 Attention effects in V1 simple cells.

(a) The stimulus sequence began with a fixation point and two color locations that would serve as saccade targets. Then two flickering black-and-white patches appeared, one over the neuron’s receptive field and the other in the opposite visual field. Before the onset of the stimuli, the monkey was instructed which of the two patches to attend. The monkey had been trained to covertly attend the indicated patch to detect a small color pixel that would signal where a subsequent saccade of the eyes was to be made (to the matching color) for a reward. (b) The spatiotemporal receptive field of the neuron when unattended (attend opposite visual field patch) and when attended. Each of the eight panels corresponds to the same spatial location as that of the black-and-white stimulus over the neuron’s receptive field. The excitatory (red) and inhibitory (blue) regions of the receptive field are evident; they are largest from 23.5 to 70 ms after stimulus onset (middle two panels). Note that the amplitudes of the responses were larger when attended than when unattended. This difference can be seen in these receptive-field maps and is summarized as plots in (c).

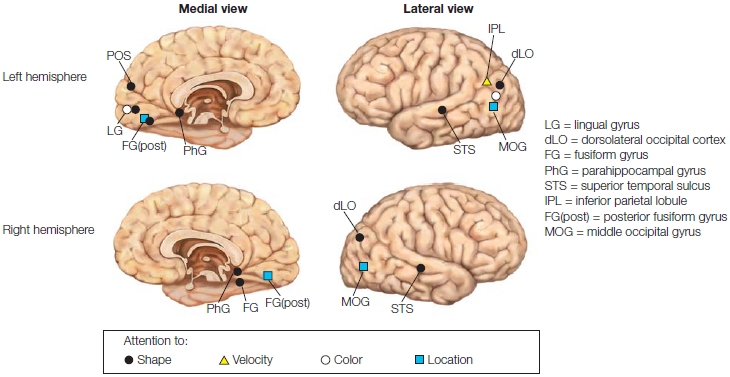

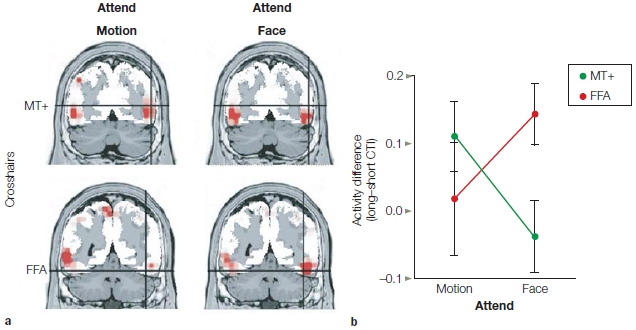

Does the same happen in humans? Yes, but different methods have to be used, since intracranial recordings are rarely done in humans. Neuroimaging studies of spatial attention show results consistent with those from cellular recordings in monkeys. Whole brain imaging studies have the advantage that one may investigate attention effects in multiple brain regions all in one experiment. Such studies have shown that spatial attention modulates the activity in multiple cortical visual areas. Hans-Jochen Heinze and his colleagues (1994) directly related ERP findings to functional brain neuroanatomy by combining positron emission tomography (PET) imaging with ERP recordings. They demonstrated that visuospatial attention results in modulation of blood flow (related to neuronal activity) in visual cortex. Subsequent studies using fMRI have permitted a more fine-grained analysis of the effects of spatial attention in humans.

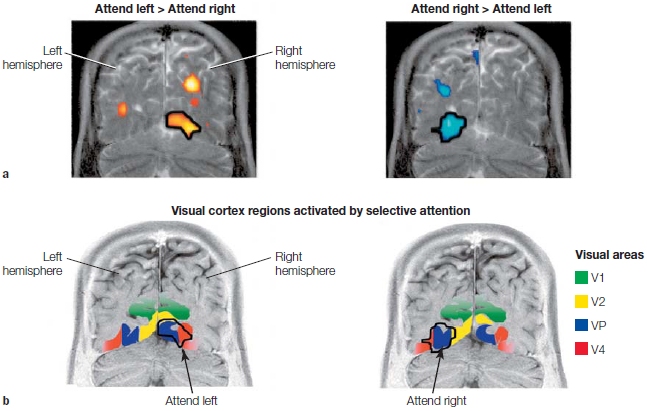

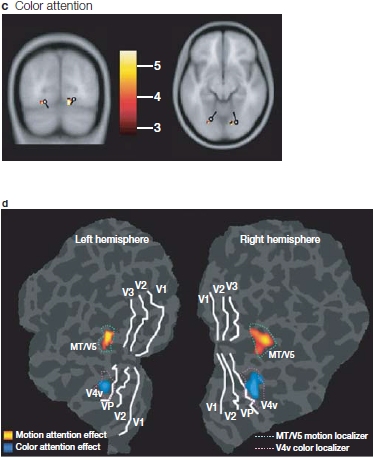

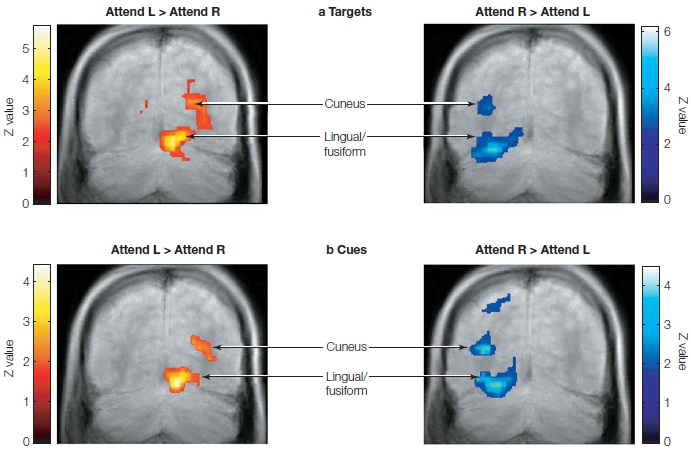

For example, Joseph Hopfinger and his colleagues (2000) used a modified version of a spatial cuing task combined with event-related fMRI. On each trial, an arrow cue was presented at the center of the display and indicated the side to which participants should direct their attention. Eight seconds later, the bilateral target display (flickering black-and-white checkerboards) appeared for 500 ms. The participants’ task was to press a button if some of the checks were gray rather than white, but only if this target appeared on the cued side. The 8-s gap between the arrow and the target display allowed the slow hemodynamic responses (see Chapter 3) linked to the attention-directing cues to be analyzed separately from the hemodynamic responses linked to the detection of and response to the target displays. The results are shown in Figure 7.20 in coronal sections through the visual cortex of a single participant in the Hopfinger study. As you can see in this figure, attention to one visual hemifield activated multiple regions of visual cortex in the contralateral hemisphere.
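Why does the 8-s gap matter? The following Python sketch is a toy illustration, not the analysis Hopfinger and colleagues performed. It assumes a standard double-gamma hemodynamic response function (peak near 5 s, undershoot near 15 s; common default parameters, not values reported in the study) and asks how much of the cue-related response is still present when the target-related response reaches its peak.

```python
import math

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for t <= 0 (no response before the event)."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)

def hrf(t):
    """Assumed double-gamma HRF: main peak near 5 s, undershoot near 15 s (ratio 1/6)."""
    return gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0

DT = 0.1                              # sampling step in seconds
TIMES = [i * DT for i in range(400)]  # 0 to 39.9 s

def bold_response(onset):
    """Predicted BOLD time course for a brief event at `onset` seconds."""
    return [hrf(t - onset) for t in TIMES]

def peak_time(series):
    """Time (s) at which a predicted response is largest."""
    i = max(range(len(series)), key=lambda k: series[k])
    return TIMES[i]

def cue_overlap(gap):
    """Size of the cue response at the target's peak, as a fraction of the cue's own peak."""
    cue = bold_response(0.0)
    target = bold_response(gap)
    i = max(range(len(target)), key=lambda k: target[k])
    return abs(cue[i]) / max(cue)

for gap in (2.0, 8.0):
    print(f"cue-target gap {gap:.0f} s: cue response at target peak = "
          f"{cue_overlap(gap):.2f} of its maximum")
```

With a 2-s gap, the cue response is still roughly three-quarters of its maximum when the target response peaks, so the two would be heavily confounded; with an 8-s gap it has fallen to a few percent of its maximum, which is what allows the cue-related and target-related responses to be analyzed separately.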

FIGURE 7.20 Selective attention activates specific regions of the visual cortex, as demonstrated by event-related fMRI.

(a) Areas of activation in a single participant were overlaid onto a coronal section through the visual cortex obtained by structural MRI. The statistical contrasts reveal where attention to the left hemifield produced more activity than attention to the right (reddish to yellow colors, left) and the reverse, where attention to the right hemifield elicited more activity than did attention to the left (bluish colors, right). As demonstrated in prior studies, spatial attention activated the visual cortex contralateral to the attended hemifield. (b) The regions activated by attention (shown in black outline) were found to span multiple early visual areas (shown as colored regions; refer to key).

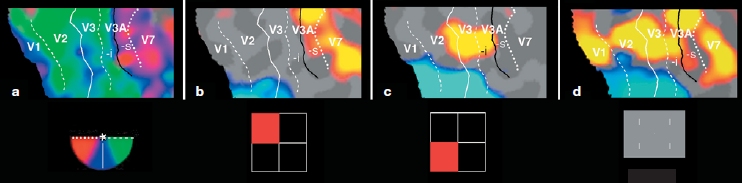

Roger Tootell and Anders Dale at Massachusetts General Hospital (R. Tootell et al., 1998) used retinotopic mapping to investigate how the attention-related activations in visual cortex corresponded to the multiple visual cortical areas in humans. That is, they wanted to determine on the scans which visual area each activation fell in. They combined high-resolution mapping of the borders of early visual cortical areas (retinotopic mapping; see Chapter 3) with a spatial attention task. Participants were required to selectively attend to stimuli located in one visual field quadrant while ignoring those in the other quadrants; different quadrants were attended in different conditions while the participants’ brains were scanned with fMRI. This allowed the researchers to map the attentional activations onto flattened computer maps of the visual cortex, so that the attention effects could be related directly to the multiple areas of human visual cortex.

They found that spatial attention produced robust modulations of activity in multiple extrastriate visual areas, as well as a smaller modulation of striate cortex (V1; Figure 7.21). This work provides a high-resolution view of the functional anatomy of multiple striate and extrastriate areas of human visual cortex during sustained spatial attention.

Now we know that spatial attention does influence the processing of visual inputs. Attended stimuli produce greater neural responses than do ignored stimuli, and this difference is observed in multiple visual cortical areas. Is the effect of spatial attention different in the different visual areas? It seems so. The Tootell fMRI work hints at this possibility, because attention-related modulation of activity in V1 appeared to be less robust than that in extrastriate cortex; also, work by Motter (1993) suggested a similar pattern in the visual cortex of monkeys. If so, what mechanisms might explain a hierarchical organization of attention effects as you move up the visual hierarchy from V1 through extrastriate cortical areas?

FIGURE 7.21 Spatial attention produced robust modulation of activity in multiple extrastriate visual areas, as demonstrated by fMRI.

Panel a shows the retinotopic mappings of the left visual field for each participant, corresponding to the polar angles in the key at right (which represents the left visual field). Panel b shows the attention-related modulations (attended versus unattended) of sensory responses to a target in the upper left quadrant (the quadrant to which attention was directed is shown at right in red). Panel c shows the same for stimuli in the lower left quadrant. In b and c, the yellow to red colors indicate areas where activity was greater when the stimulus was attended than when it was ignored; the bluish colors represent the opposite, where activity was greater when the stimulus was ignored than when it was attended. The attention effects in b and c can be compared to the pure sensory responses to the target bars when passively viewed (d).

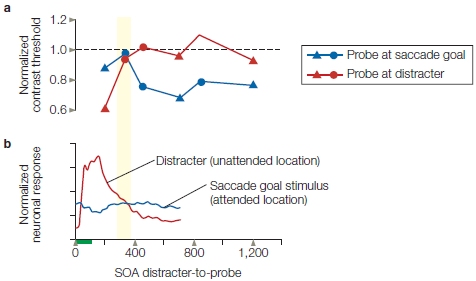

Robert Desimone and John Duncan (1995) proposed a biased competition model for selective attention. Their model may help answer two questions. First, why are the effects of attention larger when multiple competing stimuli fall within a neuron’s receptive field? And second, how does attention operate at different levels of the visual hierarchy as neuronal receptive fields change their properties? In the biased competition model, when different stimuli in a visual scene fall within the receptive field of a visual neuron, the bottom-up signals from the two stimuli compete like two snarling dogs to control the neuron’s firing. The model suggests that attention can help resolve this competition in favor of the attended stimulus. Given that the sizes of neuronal receptive fields increase as you go higher in the visual hierarchy, there is a greater chance for competition between different stimuli within a neuron’s receptive field, and therefore a greater need for attention to help resolve the competition (to read more about receptive fields, see Chapter 3).
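One common way to make the competition idea concrete, assumed here rather than taken from Desimone and Duncan’s paper, is to treat a neuron’s response to two stimuli in its receptive field as a weighted average of its responses to each stimulus alone, with attention increasing the weight of the attended stimulus. The firing rates and the attention gain in this Python sketch are hypothetical numbers chosen for illustration.

```python
def pair_response(r_a, r_b, w_a=1.0, w_b=1.0):
    """Response to two stimuli sharing one receptive field, modeled as a
    weighted average of the responses each evokes alone (an assumed simplification)."""
    return (w_a * r_a + w_b * r_b) / (w_a + w_b)

# Hypothetical firing rates (spikes/s) for each stimulus presented alone.
PREFERRED = 50.0       # stimulus that drives the neuron strongly
POOR = 10.0            # stimulus that barely drives it
ATTENTION_GAIN = 4.0   # hypothetical weight boost given to the attended stimulus

unattended = pair_response(PREFERRED, POOR)                            # equal weights
attend_preferred = pair_response(PREFERRED, POOR, w_a=ATTENTION_GAIN)  # bias toward preferred
attend_poor = pair_response(PREFERRED, POOR, w_b=ATTENTION_GAIN)       # bias toward poor

print(f"unattended pair:      {unattended:.0f} spikes/s")
print(f"attend preferred:     {attend_preferred:.0f} spikes/s")
print(f"attend poor stimulus: {attend_poor:.0f} spikes/s")
```

Without attention, the pair drives the neuron to a rate between the two solo responses: each stimulus interferes with the other. Attending to either stimulus pulls the response toward what that stimulus evokes alone, as though the competing stimulus had been muzzled.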

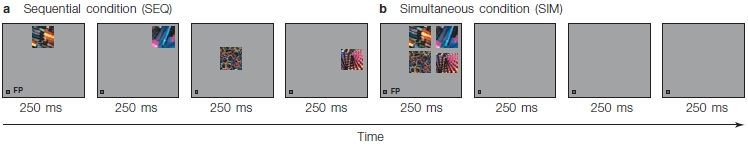

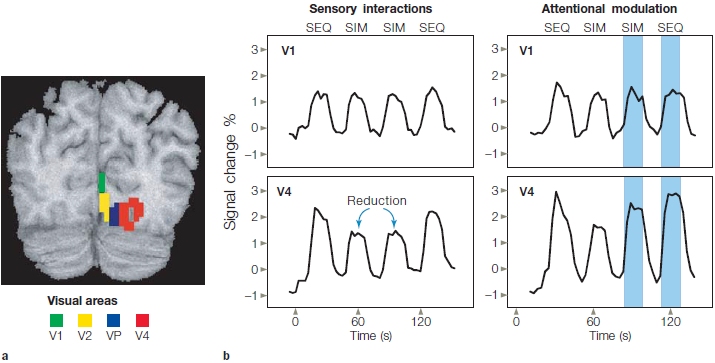

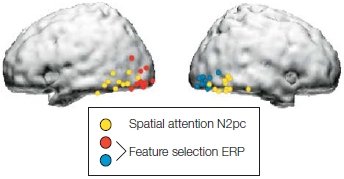

Sabine Kastner and her colleagues (1998) used fMRI to investigate the biased competition model during spatial attention in humans (Figure 7.22). To do this, they first asked whether, in the absence of focused spatial attention, nearby stimuli could interfere with one another. The answer was yes. They found that when they presented two nearby stimuli simultaneously, the stimuli interfered with each other, and the neural response evoked by each stimulus was reduced compared to when one stimulus was presented alone. When attention was directed to one stimulus in the display, however, simultaneous presentation of the competing stimulus no longer interfered (Figure 7.23), and this effect tended to be larger in area V4 than in V1. The attention focused on one stimulus attenuates the influence of the competing stimulus. To return to our analogy, one of the snarling dogs (the competing stimulus) is muzzled.

FIGURE 7.22 Design of the task for attention to competing stimuli used to test the biased competition model.

Competing stimuli were presented either sequentially (a) or simultaneously (b). During the attention condition, covert attention was directed to the stimulus closest to the point of fixation (FP), and the other stimuli were merely distracters.

FIGURE 7.23 Functional MRI signals in the study investigating the biased competition model of attention.

(a) Coronal MRI section in one participant, where the pure sensory responses in multiple visual areas are mapped with meridian mapping (similar to that used in Figure 7.20). (b) The percentage of signal changes over time in areas V1 and V4 as a function of whether the stimuli were presented in the sequential (SEQ) or simultaneous (SIM) condition, and as a function of whether they were unattended (left) or whether attention was directed to the target stimulus (right, shaded blue). In V4 especially, the amplitudes during the SEQ and SIM conditions were more similar when attention was directed to the target stimulus (shaded blue areas at right) than when it was not (unshaded areas).

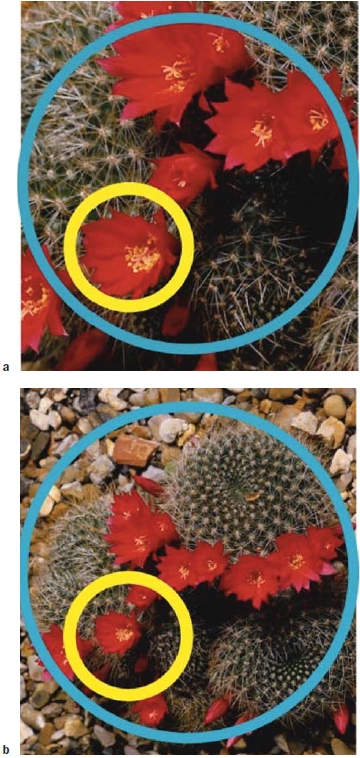

It appears that, for a given stimulus, spatial attention operates differently at early (V1) versus later (e.g., V4) stages of the visual cortex. Why? Perhaps because the neuronal receptive fields differ in size from one visual cortical area to the next. Thus, although smaller stimuli might fall within the receptive field of a single V1 neuron, larger stimuli would not; but these larger stimuli would fall within the larger receptive field of a V4 neuron. In addition, exactly the same stimulus can occupy different spatial scales depending on its distance from the observer. For example, look at the flowers in Figure 7.24. When viewed at a greater distance (panel b), the same flowers occupy less of the visual field (compare what you see in the yellow circles). All of the flowers could then fall into the receptive field of a single neuron at an earlier stage of the visual hierarchy. This observation raises the possibility that attention operates at different stages of vision, depending on the spatial scale of the attended and ignored stimuli. Does it? How would you design a study to answer this question?
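The distance effect follows from simple geometry: an object of fixed size subtends a visual angle of 2 × arctan(size / (2 × distance)), so moving it farther away shrinks its image and packs more such objects into a receptive field of fixed angular size. The Python sketch below illustrates this; the flower size and the 4° receptive-field diameter are hypothetical round numbers (real receptive-field sizes vary with eccentricity and visual area).

```python
import math

def visual_angle_deg(size_m, distance_m):
    """Visual angle (degrees) subtended by an object of a given size at a given distance."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))

def objects_per_field(rf_diameter_deg, size_m, distance_m):
    """Rough count of side-by-side objects spanning one receptive-field diameter."""
    return rf_diameter_deg / visual_angle_deg(size_m, distance_m)

FLOWER = 0.05  # hypothetical flower diameter in meters
V4_RF = 4.0    # hypothetical V4 receptive-field diameter in degrees

for d in (0.5, 3.0):
    print(f"at {d} m: one flower spans {visual_angle_deg(FLOWER, d):.1f} deg, "
          f"about {objects_per_field(V4_RF, FLOWER, d):.1f} flowers per receptive field")
```

Up close, a single flower overfills the hypothetical receptive field; at 3 m, about four flowers fit inside it, so they would now compete within a single neuron’s receptive field.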

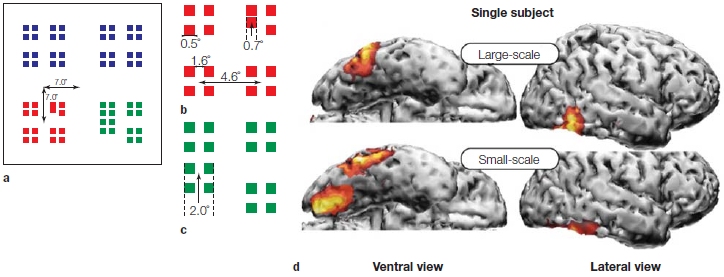

Max Hopf and colleagues (2006) combined recordings of ERPs, magnetoencephalography (MEG; see Chapter 3), and fMRI. The simple stimuli they used are shown in Figure 7.25a–c. On each trial, the target appeared as either a square or a group of squares, small or large, red or green, and shifted either up or down in the visual field. Participants were to attend to targets of one color as instructed and to push one of two buttons depending on whether the targets were shifted up or down. The study revealed that attention acted at earlier levels of the visual system for the smaller targets than it did for the large targets (Figure 7.25d). So, although attention does act at multiple levels of the visual hierarchy, it also optimizes its action to match the spatial scale of the visual task.

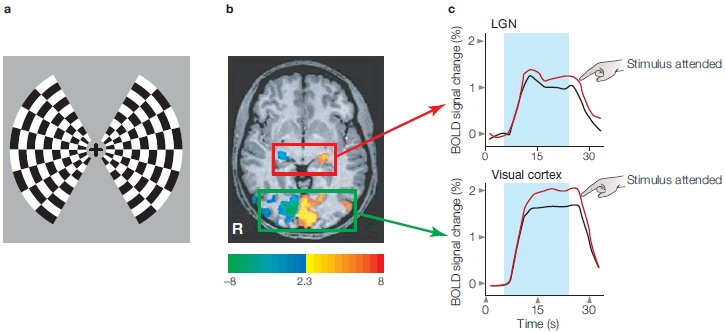

Now that we have seen the effects of attention on the cortical stages of the visual hierarchy, have you started to wonder whether attention might also change processing at the level of the subcortical visual relays? Well, others have also been curious, and this curiosity stretches back more than 100 years. Recall Helmholtz’s reflections, described earlier, on the possible mechanisms of covert spatial attention (see also How The Brain Works: Attention, Arousal, and Experimental Design). Contemporary researchers have been able to shed light on whether attention might influence subcortical processing.

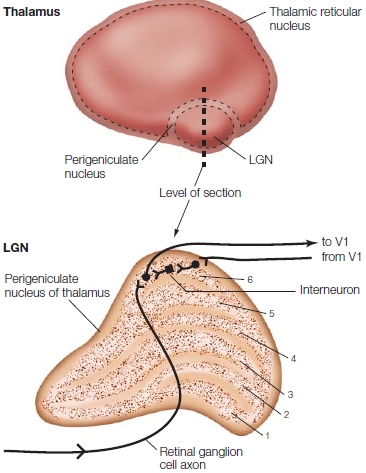

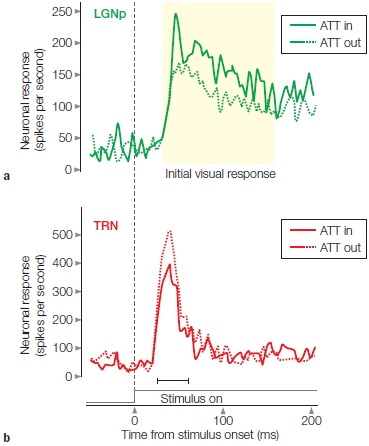

Subcortical Attention Effects

Could attentional filtering or selection occur even earlier along the visual processing pathways, in the thalamus or in the retina? Unlike the cochlea, the human retina contains no descending neural projections that could be used to modulate retinal activity by attention. But massive neuronal projections do extend from the visual cortex (layer 6 neurons) back to the thalamus. These projections synapse on neurons in what is known as the thalamic reticular nucleus (TRN; also known as the perigeniculate nucleus), which is the portion of the reticular nucleus that surrounds the lateral geniculate nucleus (LGN) (Figure 7.26).

FIGURE 7.24 Competition varies between objects depending on their scale.

The same stimulus can occupy a differently sized region of visual space depending on its distance from the observer. (a) Viewed from up close, a single flower may occupy all of the receptive field of a V4 neuron (yellow circles), whereas multiple flowers fit within the larger receptive field of higher-order inferotemporal (IT) neurons (blue circles). (b) Viewed from a greater distance, multiple flowers are present within both the smaller V4 receptive field and the larger IT receptive field.

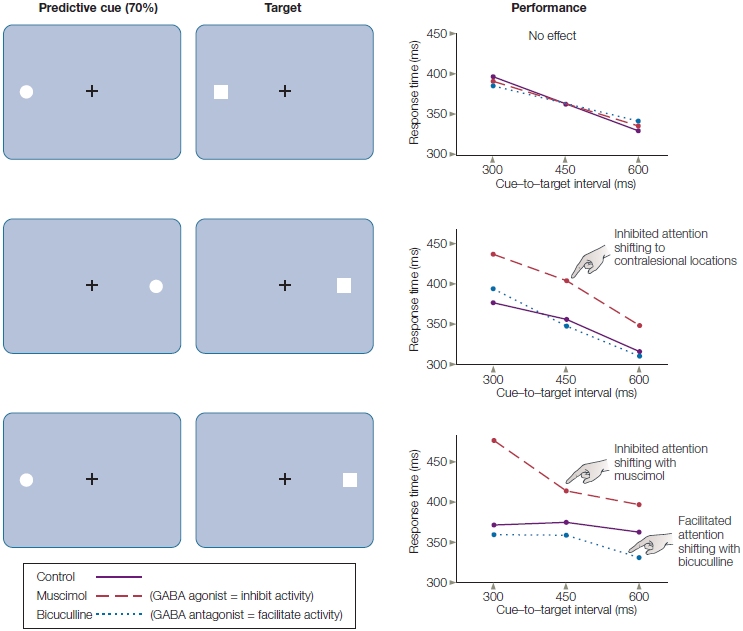

These neurons maintain complex interconnections with neurons in the thalamic relays and could, in principle, modulate information flow from the thalamus to the cortex. Such a process has been shown to take place in cats during intermodal (visual–auditory) attention (Yingling & Skinner, 1976). The TRN was also implicated in a model, proposed by Nobel laureate Francis Crick (1992), of how the visual field location for the current spotlight of attention is selected in perception. Is there support for such a mechanism?