|

I never forget a face, but in your case I’ll be glad to make an exception. ~ Groucho Marx |

Chapter 6

Object Recognition

OUTLINE

Principles of Object Recognition

Multiple Pathways for Visual Perception

Computational Problems in Object Recognition

Failures in Object Recognition: The Big Picture

Category Specificity in Agnosia: The Devil Is in the Details

Processing Faces: Are Faces Special?

Mind Reading

WHILE STILL IN HIS THIRTIES, patient G.S. suffered a stroke and nearly died. Although he eventually recovered most of his cognitive functions, G.S. continued to complain about one severe problem: He could not recognize objects. G.S.’s sensory abilities were intact, his language function was normal, and he had no problems with coordination. Most striking, he had no loss of visual acuity. He could easily judge which of two lines was longer, and he could describe the color and general shape of objects. Nonetheless, when shown household objects such as a candle or a salad bowl, he was unable to name them, even though he could describe the candle as long and thin, and the salad bowl as curved and brown. G.S.’s deficit, however, did not reflect an inability to retrieve verbal labels of objects. When asked to name a round, wooden object in which lettuce, tomatoes, and cucumbers are mixed, he responded “salad bowl.” He also could identify objects by using other senses, such as touch or smell. For example, after visually examining a candle, he reported that it was a “long object.” Upon touching it, he labeled it a “crayon”; but after smelling it, he corrected himself and responded “candle.” Thus, his deficit was modality specific, confined to his visual system.

G.S. had even more difficulty recognizing objects in photographs. When shown a picture of a combination lock and asked to name the object, he failed to respond at first. Then he noted the round shape. Interestingly, while viewing the picture, he kept twirling his fingers, pantomiming the actions of opening a combination lock. When asked about this, he reported that it was a nervous habit. Prompted by experimenters to provide more details or to make a guess, G.S. said that the picture was of a telephone (the patient was referring to a rotary dial telephone, which was commonly used in his day). He remained adamant about this guess, even after he was informed that it was not a picture of a telephone. Finally, the experimenter asked him if the object in the picture was a telephone, a lock, or a clock. By this time, convinced it was not a telephone, he responded “clock.” Then, after a look at his fingers, he proudly announced, “It’s a lock, a combination lock.”

G.S.’s actions were telling. Even though his eyes and optic nerve functioned normally, he could not recognize an object that he was looking at. In other words, sensory information was entering his visual system normally, and information about the components of an object in his visual field was being processed. He could differentiate and identify colors, lines, and shapes. He knew the names of objects and what they were for, so his memory was fine. Also, when viewing the image of a lock, G.S.’s choice of a telephone was not random. He had perceived the numeric markings around the lock’s circumference, a feature found on rotary dial telephones. G.S.’s finger twirling indicated that he knew more about the object in the picture than his erroneous statement that it was a telephone. In the end, his hand motion gave him the answer. G.S. had let his fingers do the talking. Although his visual system perceived the parts, and he understood the function of the object he was looking at, G.S. could not put all of that information together to recognize the object. G.S. had a type of visual agnosia.

Principles of Object Recognition

Failures of visual perception can happen even when the processes that analyze color, shape, and motion are intact. Similarly, a person can have a deficit in her auditory, olfactory, or somatosensory system even when her sense of hearing, smell, or touch is functioning normally. Such disorders are referred to as agnosias. The label was coined by Sigmund Freud, who derived it from the Greek a– (“without”) and gnosis (“knowledge”). To be agnosic means to experience a failure of knowledge, or recognition. When the disorder is limited to the visual modality, as with G.S., the syndrome is referred to as visual agnosia.

Patients with visual agnosia have provided a window into the processes that underlie object recognition. As we discover in this chapter, by analyzing the subtypes of visual agnosia and their associated deficits, we can draw inferences about the processes that lead to object recognition. Those inferences can help cognitive neuroscientists develop detailed models of these processes.

As with many neuropsychological labels, the term visual agnosia has been applied to a number of distinct disorders associated with different neural deficits. In some patients, the problem is one of developing a coherent percept—the basic components are there, but they can’t be assembled. It’s somewhat like going to Legoland and—instead of seeing the integrated percepts of buildings, cars, and monsters—seeing nothing but piles of Legos. In other patients, the components are assembled into a meaningful percept, but the object is recognizable only when observed from a certain angle—say from the side, but not from the front. In other instances, the components are assembled into a meaningful percept, but the patient is unable to link that percept to memories about the function or properties of the object. When viewing a car, the patient might be able to draw a picture of that car, but is still unable to tell that it is a car or describe what a car is for. Patient G.S.’s problem seems to be of this last form. Despite his relatively uniform difficulty in identifying visually presented objects, other aspects of his performance—in particular, the twirling fingers—indicate that he has retained knowledge of this object, but access to that information is insufficient to allow him to come up with the name of the object.

When thinking about object recognition, there are four major concepts to keep in mind. First, at a fundamental level, the case of patient G.S. forces researchers to be precise when using terms like perceive or recognize. G.S. can see the pictures, yet he fails to perceive or recognize them. Distinctions like these constitute a core issue in cognitive neuroscience, highlighting the limitations of the language used in everyday descriptions of thinking. Such distinctions are relevant in this chapter, and they will reappear when we turn to problems of attention and memory in Chapters 7 and 9.

Second, as we saw in Chapter 5, although our sensory systems use a divide-and-conquer strategy, our perception is of unified objects. Features like color and motion are processed along distinct neural pathways. Perception, however, requires more than simply perceiving the features of objects. For instance, when gazing at the northern coastline of San Francisco (Figure 6.1), we do not see just blurs of color floating among a sea of various shapes. Instead, our percepts are of the deep-blue water of the bay, the peaked towers of the Golden Gate Bridge, and the silver skyscrapers of the city.

Third, perceptual capabilities are enormously flexible and robust. The city vista looks the same whether people view it with both eyes or with only the left or the right eye. Changing our position may reveal Golden Gate Park in the distance or it may present a view in which a building occludes half of the city. Even so, we readily recognize that we are looking at the same city. The percept remains stable even if we stand on our head and the retinal image is inverted. We readily attribute the change in the percept to our viewing position. We do not see the world as upside down. We could move across the bay and gaze at the city from a different angle and still recognize it. Somehow, no matter if the inputs are partial, upside down, full face, or sideways, hitting varying amounts of the retina or all of it, the brain interprets it all as the same object and identifies it: “That, my friend, is San Francisco!” We take this constancy for granted, but it is truly amazing when we consider how the sensory signals are radically different with each viewing position. (Curiously, this stability varies for different classes of objects. If, while upside down, we catch sight of a group of people walking toward us, then we will not recognize a friend quite as readily as when seeing her face in the normal, upright position. As we shall see, face perception has some unique properties.)

|

|

FIGURE 6.1 Our view of the world depends on our vantage point.

These two photographs are taken of the same scene, but from two different positions and under two different conditions. Each vantage point reveals new views of the scene, including objects that were obscured from the other vantage point. Moreover, the colors change, depending on the time of day and weather. Despite this variability, we easily recognize that both photographs are of the Golden Gate Bridge, with San Francisco in the distance.

Fourth, the product of perception is also intimately interwoven with memory. Object recognition is more than linking features to form a coherent whole; that whole triggers memories. Those of us who have spent many hours roaming the hills around San Francisco Bay recognize that the pictures in Figure 6.1 were taken from the Marin headlands just north of the city. Even if you have never been to San Francisco, when you look at these pictures, there is interplay between perception and memory. For the traveler arriving from Australia, the first view of San Francisco is likely to evoke comparisons to Sydney; for the first-time tourist from Kansas, the vista may be so unusual that she recognizes it as such: a place unlike any other that she has seen.

In the previous chapter, we saw how objects and scenes from the external world are disassembled and input into the visual system in the form of lines, shapes, and colors. In this chapter, we explore how the brain processes those low-level inputs into the high-level, coherent, memory-invoking percepts of everyday life. We begin with a discussion of the cortical real estate that is involved in object recognition. Then, we look at some of the computational problems that the object recognition system has to solve. After that, we turn to patients with object recognition deficits and consider what their deficits tell us about perception. Next, we delve into the fascinating world of category-specific recognition problems and their implications for processing. Along the way, it will be useful to keep in mind the four concepts introduced earlier: Perception and recognition are two different animals; we perceive objects as unified wholes, and do so in a manner that is highly flexible; and our perception and memory are tightly bound. We close the chapter with a look at how researchers are putting theories of object recognition to the test by trying to predict what a person is viewing simply by looking at his fMRI scans—the 21stcentury version of mind reading.

TAKE-HOME MESSAGES

ANATOMICAL ORIENTATION

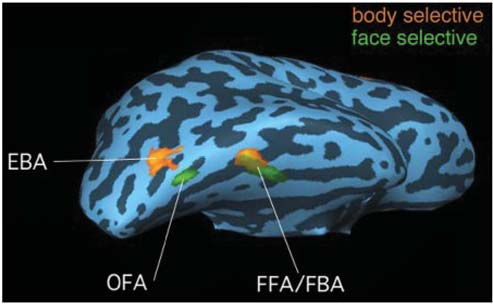

The anatomy of object recognition

Specific regions of the brain are used for distinct types of object recognition. The parahippocampal area and posterior parietal cortex process information about places and scenes. Multiple regions are involved in face recognition, including fusiform gyrus and superior temporal sulcus, while other body parts are recognized using areas within the lateral occipital and posterior inferior temporal cortex.

Multiple Pathways for Visual Perception

The pathways carrying visual information from the retina to the first few synapses in the cortex clearly segregate into multiple processing streams. Much of the information goes to the primary visual cortex (also called V1 or striate cortex; see Chapter 5 and Figures 5.23 and 5.24), located in the occipital lobe. Output from V1 is contained primarily in two major fiber bundles, or fasciculi. Figure 6.2 shows that the superior longitudinal fasciculus takes a dorsal path from the striate cortex and other visual areas, terminating mostly in the posterior regions of the parietal lobe. The inferior longitudinal fasciculus follows a ventral route from the occipital striate cortex into the temporal lobe. These two pathways are referred to as the ventral (occipitotemporal) stream and the dorsal (occipitoparietal) stream. This anatomical separation of information-carrying fibers from the visual cortex to two separate regions of the brain raises some questions. What are the different properties of processing within the ventral and dorsal streams? How do they differ in their representation of the visual input? How does processing within these two streams interact to support object perception?

|

FIGURE 6.2 The major object recognition pathways. |

HOW THE BRAIN WORKS

Now You See It, Now You Don’t

Gaze at the picture in Figure 1 for a couple of minutes. If you are like most people, you initially saw a vase. But surprise! After a while the vase changed to a picture of two human profiles staring at each other. With continued viewing, your perception changes back and forth, satisfied with one interpretation until suddenly the other asserts itself and refuses to yield the floor. This is an example of multistable perception.

How are multistable percepts resolved in the brain? The stimulus information does not change at the points of transition. Rather, the interpretation of the pictorial cues changes. When staring at the white region, you see the vase. If you shift attention to the black regions, you see the profiles. But here we run into a chicken-and-egg question. Did the representation of individual features change first and thus cause the percept to change? Or did the percept change and lead to a reinterpretation of the features?

To explore these questions, Nikos Logothetis of the Max Planck Institute in Tübingen, Germany, turned to a different form of multistable perception: binocular rivalry (Sheinberg & Logothetis, 1997). The exquisite focusing capability of our eyes (perhaps assisted by an optometrist) makes us forget that they provide two separate snapshots of the world. These snapshots are only slightly different, and they provide important cues for depth perception. With some technological tricks, however, it is possible to present radically different inputs to the two eyes. To accomplish this, researchers employ special glasses that have a shutter which alternately blocks the input to one eye and then the other at very rapid rates. Varying the stimulus in synchrony with the shutter allows a different stimulus to be presented to each eye.

FIGURE 1 Does your perception change over time as you continue to stare at this drawing?

Do we see two things simultaneously at the same location? The answer is no. As with the ambiguous vase–face profiles picture, only one object or the other is seen at any single point in time, although at transitions there is sometimes a period of fuzziness in which neither object is clearly perceived. Logothetis trained his monkeys to press one of two levers to indicate which object was being perceived. To make sure the animals were not responding randomly, he included nonrivalrous trials in which only one of the objects was presented. He then recorded from single cells in various areas of the visual cortex. Within each area he selected two objects, only one of which was effective in driving the cell. In this way he could correlate the activity of the cell with the animal’s perceptual experience.

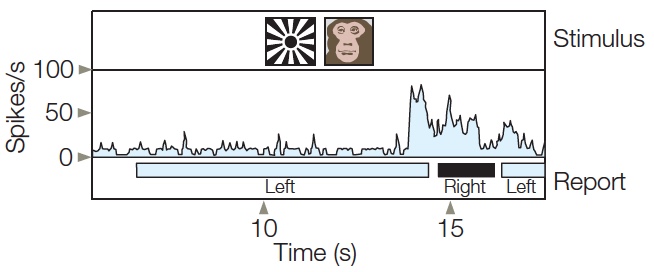

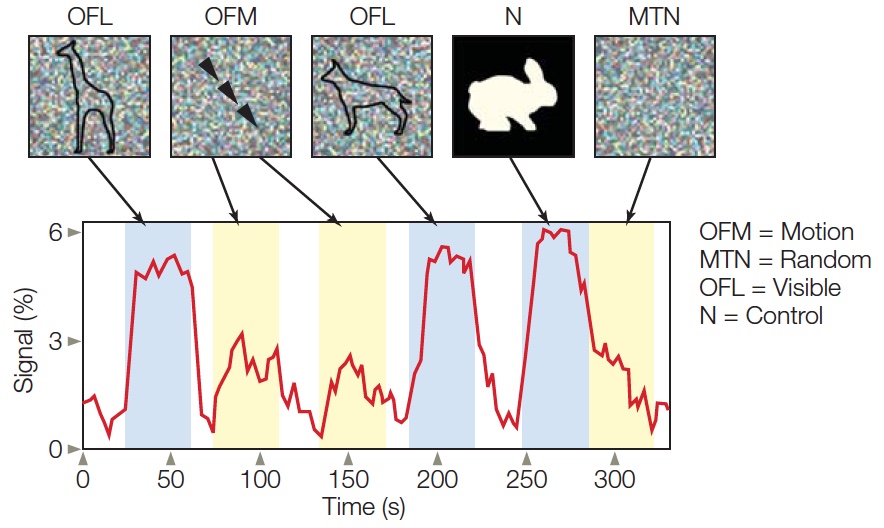

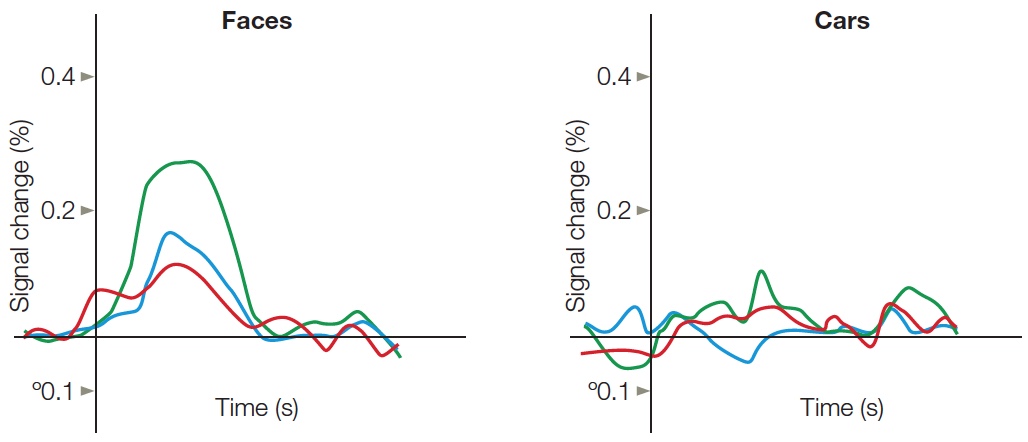

As his recordings moved up the ventral pathway, Logothetis found an increase in the percentage of active cells, with activity mirroring the animals’ perception rather than the stimulus conditions. In V1, the responses of less than 20% of the cells fluctuated as a function of whether the animal perceived the effective or ineffective stimulus. In V4, this percentage increased to over 33%. In contrast, the activity of all the cells in the visual areas of the temporal lobe was tightly correlated with the animal’s perception. Here the cells would respond only when the effective stimulus, the monkey face, was perceived (Figure 2). When the animal pressed the lever indicating that it perceived the ineffective stimulus (the starburst) under rivalrous conditions, the cells were essentially silent. In both V4 and the temporal lobe, the cell activity changed in advance of the animal’s response, indicating that the percept had changed. Thus, even when the stimulus did not change, an increase in activity was observed prior to the transition from a perception of the ineffective stimulus to a perception of the effective stimulus.

These results suggest a competition during the early stages of cortical processing between the two possible percepts. The activity of the cells in V1 and in V4 can be thought of as perceptual hypotheses, with the patterns across an ensemble of cells reflecting the strength of the different hypotheses. Interactions between these cells ensure that, by the time the information reaches the inferotemporal lobe, one of these hypotheses has coalesced into a stable percept. Reflecting the properties of the real world, the brain is not fooled into believing that two objects exist at the same place at the same time.

FIGURE 2 When the starburst or monkey face is presented alone, the cell in the temporal cortex responds vigorously to the monkey face but not to the starburst.

In the rivalrous condition, the two stimuli are presented simultaneously, one to the left eye and one to the right eye. The bottom bar shows the monkey’s perception, indicated by a lever press. About 1 s after the onset of the rivalrous stimulus, the animal perceives the starburst; the cell is silent during this period. About 7 s later, the cell shows a large increase in activity and, correspondingly, indicates that its perception has changed to the monkey face shortly thereafter. Then, 2 s later, the percept flips back to the starburst and the cell’s activity is again reduced.

The What and Where Pathways

To address the first of these questions, Leslie Ungerleider and Mortimer Mishkin, at the National Institutes of Health, proposed that processing along these two pathways is designed to extract fundamentally different types of information (Ungerleider & Mishkin, 1982). They hypothesized that the ventral stream is specialized for object perception and recognition—for determining what we’re looking at. The dorsal stream is specialized for spatial perception—for determining where an object is—and for analyzing the spatial configuration between different objects in a scene. “What” and “where” are the two basic questions to be answered in visual perception. To respond appropriately, we must (a) recognize what we’re looking at and (b) know where it is.

The initial data for the what–where dissociation of the ventral and dorsal streams came from lesion studies with monkeys. Animals with bilateral lesions to the temporal lobe that disrupted the ventral stream had great difficulty discriminating between different shapes—a “what” discrimination (Pohl, 1973). For example, they made many errors while learning that one object, such as a cylinder, was associated with a food reward when paired with another object (e.g., a cube). Interestingly, these same animals had no trouble determining where an object was in relation to other objects; this second ability depends on a “where” computation. The opposite was true for animals with parietal lobe lesions that disrupted the dorsal stream. These animals had trouble discriminating where an object was in relation to other objects (“where”) but had no problem discriminating between two similar objects (“what”).

More recent evidence indicates that the separation of what and where pathways is not limited to the visual system. Studies with various species, including humans, suggest that auditory processing regions are similarly divided. The anterior aspects of primary auditory cortex are specialized for auditory-pattern processing (what is the sound?), and posterior regions are specialized for identifying the spatial location of a sound (where is it coming from?). One particularly clever experiment demonstrated this functional specialization by asking cats to identify the where and what of an auditory stimulus (Lomber & Malhotra, 2008). The cats were trained to perform two different tasks: one task required the animal to locate a sound, and a second task required making discriminations between different sound patterns. The researchers then placed thin tubes over the anterior auditory region; through these tubes, a cold liquid could be passed to cool the underlying neural tissue. This procedure temporarily inactivates the targeted tissue, providing a transient lesion (akin to the logic of TMS studies conducted with people). Cooling resulted in selective deficits in the pattern discrimination task, but not in the localization task. In a second phase of the study, the tubes were repositioned over the posterior auditory region. This time there was a deficit in the localization task, but not in the pattern discrimination one—a neat double dissociation in the same animal.

Representational Differences Between the Dorsal and Ventral Streams

Neurons in both the temporal and parietal lobes have large receptive fields, but the physiological properties of the neurons within each lobe are quite distinct. Neurons in the parietal lobe may respond similarly to many different stimuli (Robinson et al., 1978). For example, a parietal neuron recorded in a fully conscious monkey might be activated when a stimulus such as a spot of light is restricted to a small region of space or when the stimulus is a large object that encompasses much of the hemifield. In addition, many parietal neurons are responsive to stimuli presented in the more eccentric parts of the visual field. Although 40 % of these neurons have receptive fields near the central region of vision (the fovea), the remaining cells have receptive fields that exclude the foveal region. These eccentrically tuned cells are ideally suited for detecting the presence and location of a stimulus, especially one that has just entered the field of view. Remember in Chapter 5 that, when examining subcortical visual processing, we suggested a similar role for the superior colliculus, which also plays an important role in visual attention (discussed in Chapter 7).

The response of neurons in the ventral stream of the temporal lobe is quite different (Ito et al., 1995). The receptive fields for these neurons always encompass the fovea, and most of these neurons can be activated by a stimulus that falls within either the left or the right visual field. The disproportionate representation of central vision appears to be ideal for a system devoted to object recognition. We usually look directly at things we wish to identify, thereby taking advantage of the greater acuity of foveal vision.

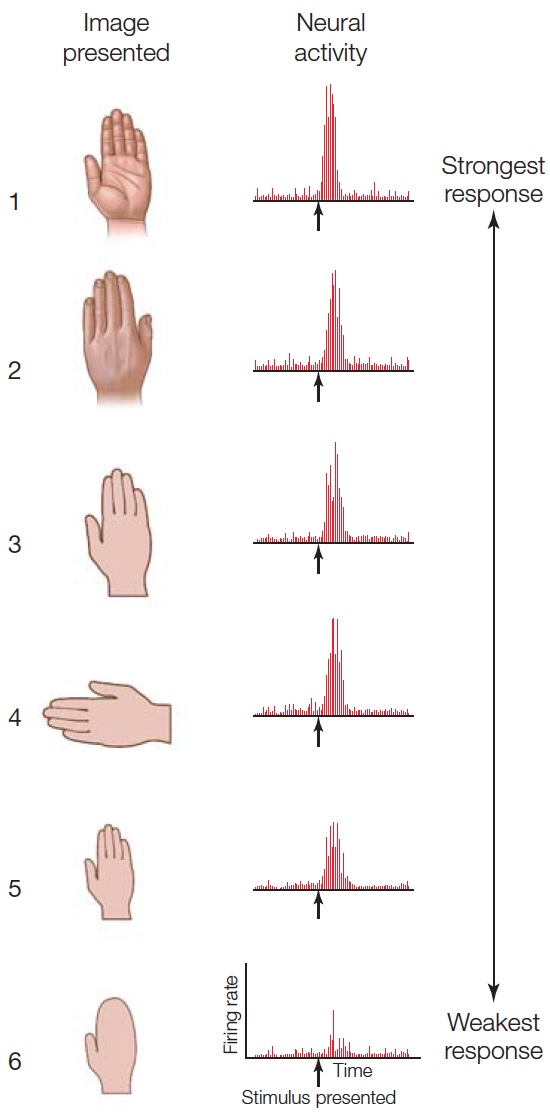

FIGURE 6.3 Single-cell recordings from a neuron in the inferior temporal cortex.

Neurons in the inferior temporal cortex rarely respond to simple stimuli such as lines or spots of light. Rather, they respond to more complex objects such as hands. This cell responded weakly when the image did not include the defining fingers (6).

Cells within the visual areas of the temporal lobe have a diverse pattern of selectivity (Desimone, 1991). In the posterior region, earlier in processing, cells show a preference for relatively simple features such as edges. Others, farther along in the processing stream, have a preference for much more complex features such as human body parts, apples, flowers, or snakes. Recordings from one such cell, located in the inferotemporal cortex, are shown in Figure 6.3. This cell is most highly activated by the human hand. The first five images in the figure show the response of the cell to various views of a hand. Activity is high regardless of the hand’s orientation and is only slightly reduced when the hand is considerably smaller. The sixth image, of a mitten, shows that the response diminishes if the same shape lacks defining fingers.

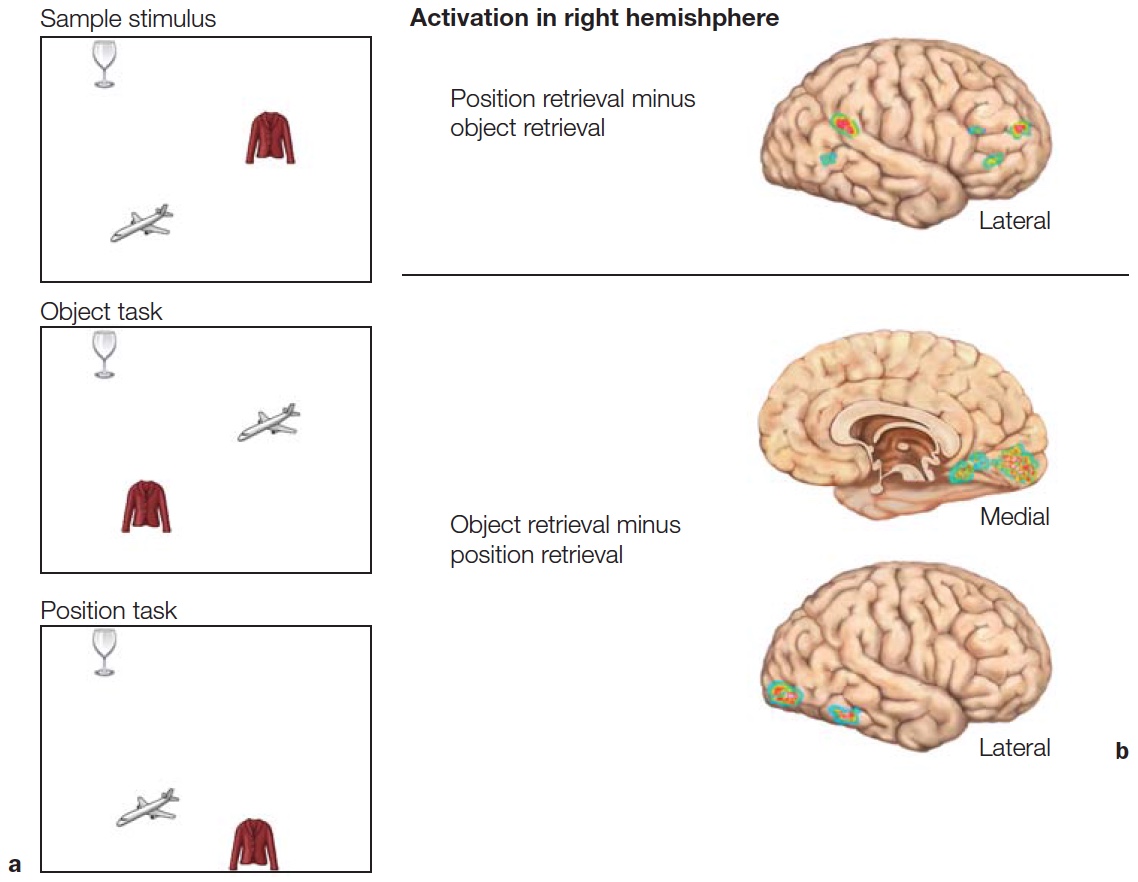

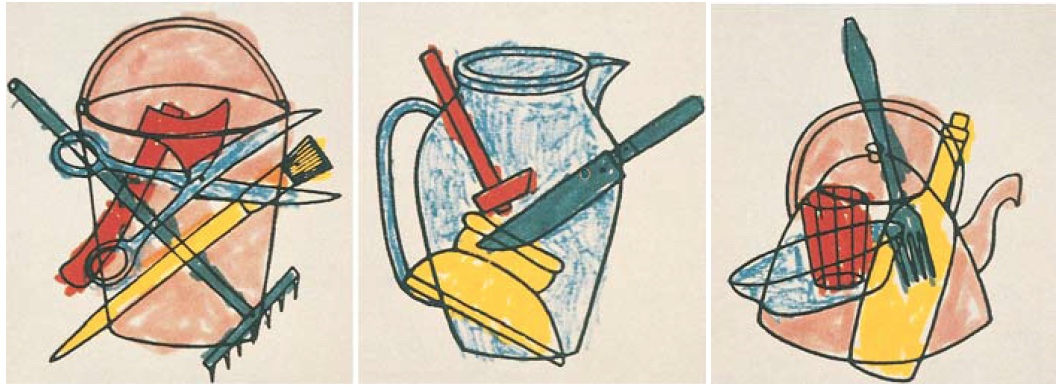

Neuroimaging studies with human participants have provided further evidence that the dorsal and ventral streams are activated differentially by “where” and “what” tasks. In one elegant study using positron emission tomography (S. Kohler et al., 1995), trials consisted of pairs of displays containing three objects each (Figure 6.4a). In the position task, the participants had to determine if the objects were presented at the same locations in the two displays. In the object task, they had to determine if the objects remained the same across the two displays. The irrelevant factor could remain the same or change: The objects might change on the position task, even though the locations remained the same; similarly, the same objects might be presented at new locations in the object task. Thus, the stimulus displays were identical for the two conditions; the only difference was the task instruction.

The PET data for the two tasks were compared directly to identify neural regions that were selectively activated by one task or the other. In this way, areas that were engaged similarly for both tasks—because of similar perception, decision, or response requirements—were masked. During the position task, regional cerebral blood flow was higher in the parietal lobe in the right hemisphere (Figure 6.4b, left panel). In contrast, the object task led to increased regional cerebral blood flow bilaterally at the junction of the occipital and temporal lobes (Figure 6.4b, right panel).

Perception for Identification Versus Perception for Action

Patient studies offer more support for a dissociation of “what” and “where” processing. As we shall see in Chapter 7, the parietal cortex is central to spatial attention. Lesions of this lobe can also produce severe disturbances in the ability to represent the world’s spatial layout and the spatial relations of objects within it.

More revealing have been functional dissociations in the performance of patients with visual agnosia. Mel Goodale and David Milner (1992) at the University of Western Ontario described a 34-year-old woman, D.F., who suffered carbon monoxide intoxication because of a leaky propane gas heater. For D.F., the event caused a severe object recognition disorder. When asked to name household items, she made errors such as labeling a cup an “ashtray” or a fork a “knife.” She usually gave crude descriptions of a displayed object; for example, a screwdriver was “long, black, and thin.” Picture recognition was even more disrupted. When shown drawings of common objects, D.F. could not identify a single one. Her deficit could not be attributed to anomia, a problem with naming objects, because whenever an object was placed in her hand, she identified it. Sensory testing indicated that D.F.’s agnosia could not be attributed to a loss of visual acuity. She could detect small gray targets displayed against a black background. Although her ability to discriminate small differences in hue was abnormal, she correctly identified primary colors.

FIGURE 6.4 Matching task used to contrast position and object discrimination.

(a) Object and position matching to sample task. The Study and Test displays each contain three objects in three positions. On object retrieval trials, the participant judges if the three objects were the same or different. On position retrieval trials, the participant judges if the three objects are in the same or different locations. In the examples depicted, the correct response would be “same” for the object task trial and “different” for the position task trial. (b) Views of the right hemisphere showing cortical regions that showed differential pattern of activation in the position and object retrieval tasks.

Most relevant to our discussion is the dissociation of D.F.’s performance on two tasks, both designed to assess her ability to perceive the orientation of a three-dimensional object. For these tasks, D.F. was asked to view a circular block into which a slot had been cut. The orientation of the slot could be varied by rotating the block. In the explicit matching task, D.F. was given a card and asked to orient her hand so that the card would fit into the slot. D.F. failed miserably, orienting the card vertically even when the slot was horizontal (Figure 6.5a). When asked to insert the card into the slot, however, D.F. quickly reached forward and inserted the card (Figure 6.5b). Her performance on this visuomotor task did not depend on tactile feedback that would result when the card contacted the slot; her hand was properly oriented even before she reached the block.

D.F.’s performance showed that the two processing systems make use of perceptual information from different sources. The explicit matching task showed that D.F. could not recognize the orientation of a three-dimensional object; this deficit is indicative of her severe agnosia. Yet when D.F. was asked to insert the card (the action task), her performance clearly indicated that she had processed the orientation of the slot. While shape and orientation information were not available to the processing system for objects, they were available for the visuomotor task. This dissociation suggests that the “what” and “where” systems may carry similar information, but they each support different aspects of cognition.

The “what” system is essential for determining the identity of an object. If the object is familiar, people will recognize it as such; if it is novel, we may compare the percept to stored representations of similarly shaped objects. The “where” system appears to be essential for more than determining the locations of different objects; it is also critical for guiding interactions with these objects. D.F.’s performance is an example of how information accessible to action systems can be dissociated from information accessible to knowledge and consciousness. Indeed, Goodale and Milner argued that the dichotomy should be between “what” and “how,” to emphasize that the dorsal visual system provides a strong input to motor systems to compute how a movement should be produced. Consider what happens when you grab a glass of water to drink. Your visual system has factored in where the glass is in relation to your eyes, your head, the table, and the path required to move the water glass directly to your mouth.

|

Explicit matching task

|

Action task

|

|

FIGURE 6.5 Dissociation between perception linked to awareness and perception linked to action. |

|

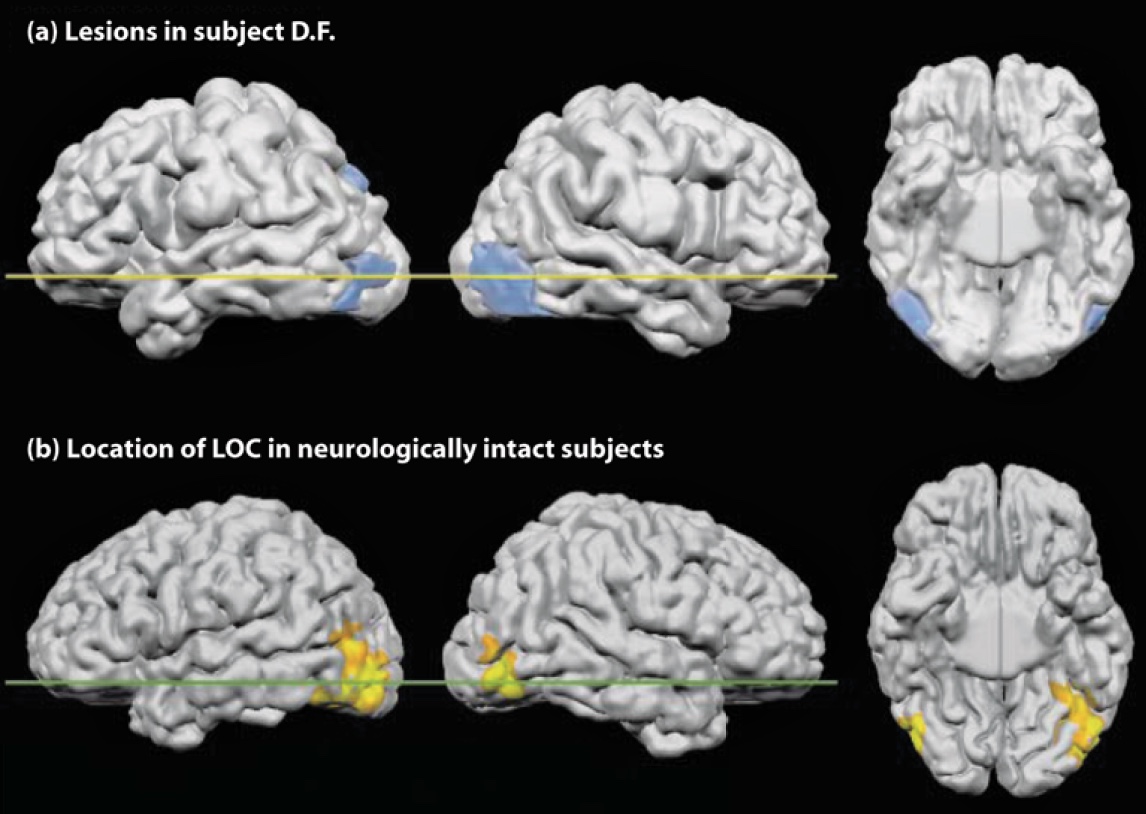

Goodale, Milner, and their colleagues have subsequently tested D.F. in many studies to explore the neural correlates of this striking dissociation between vision for recognition and vision for action (Goodale & Milner, 2004). Structural MRI scans showed that D.F. has widespread cortical atrophy with concentrated bilateral lesions in the ventral stream that encompass lateral occipital cortex (LOC) (Figure 6.6; T. James et al., 2003). Functional MRI scans show that D.F. does have some ventral activation in spared tissue when she was attempting to recognize objects, but it was more widespread than is normally seen in controls. In contrast, when asked to grasp objects, D.F. showed robust activity in anterior regions of the inferior parietal lobe. This activity is similar to what is observed in neurologically healthy individuals (Culham et al., 2003).

Patients who suffer from carbon monoxide intoxication typically have diffuse damage, so it is difficult to pinpoint the source of the behavioral deficits. Therefore, cognitive neuroscientists tend to focus their studies on patients with more focal lesions, such as those that result from stroke. One recent case study describes a patient, J.S., with an intriguing form of visual agnosia (Karnath et al., 2009). J.S. complained that he was unable to see objects, watch TV, or read. He could dress himself, but only if he knew beforehand exactly where his clothes were located. What’s more, he was unable to recognize familiar people by their faces, even though he could identify them by their voices. Oddly enough, however, he was able to walk around the neighborhood without a problem. He could easily grab objects presented to him at different locations, even though he could not identify the objects.

J.S. was examined using tests similar to those used in the studies with D.F. (see Figure 6.5). When shown an object, he performed poorly in describing its size; but he could readily pick it up, adjusting his grip size to match the object’s size. Or, if shown two flat and irregular shapes, J.S. found it very challenging to say if they were the same or different, yet could easily modify his hand shape to pick up each object. As with D.F., J.S. displays a compelling dissociation in his abilities for object identification, even though his actions indicate that he has “perceived” in exquisite detail the shape and orientation of the objects. MRIs of J.S.’s brain revealed damage limited to the medial aspect of the ventral occipitotemporal cortex (OTC). Note that J.S.’s lesions are primarily in the medial aspect of the OTC, but D.F.’s lesions were primarily in lateral occipital cortex. Possibly both the lateral and medial parts of the ventral stream are needed for object recognition, or perhaps the diffuse pathology associated with carbon monoxide poisoning in D.F. has affected function within the medial OTC as well.

FIGURE 6.6 Ventral-stream lesions in patient D.F. shown in comparison with the functionally-defined lateral occipital complex (LOC) in healthy participants.

(a) Reconstruction of D.F.’s brain lesion. Lateral views of the left and right hemispheres are shown, as is a ventral view of the underside of the brain. (b) The highlighted regions indicate activation in the lateral occipital cortex of neurologically healthy individuals when they are recognizing objects.

Patients like D.F. and J.S. offer examples of single dissociations. Each shows a selective (and dramatic) impairment in using vision to recognize objects while remaining proficient in using vision to perform actions. The opposite dissociation can also be found in the clinical literature: Patients with optic ataxia can recognize objects, yet cannot use visual information to guide their actions. For instance, when someone with optic ataxia reaches for an object, she doesn’t move directly toward it; rather, she gropes about like a person trying to find a light switch in the dark. Although D.F. had no problem avoiding obstacles when reaching for an object, patients with optic ataxia fail to take obstacles into account as they reach for something (Schindler et al., 2004). Their eye movements present a similar loss of spatial knowledge. Saccades, or directed eye movements, may be directed inappropriately and fail to bring the object within the fovea. When tested on the slot task used with D.F. (see Figure 6.5), these patients can report the orientation of a visual slot, even though they cannot use this information when inserting an object in the slot. In accord with what researchers expect on the basis of dorsal–ventral dichotomy, optic ataxia is associated with lesions of the parietal cortex.

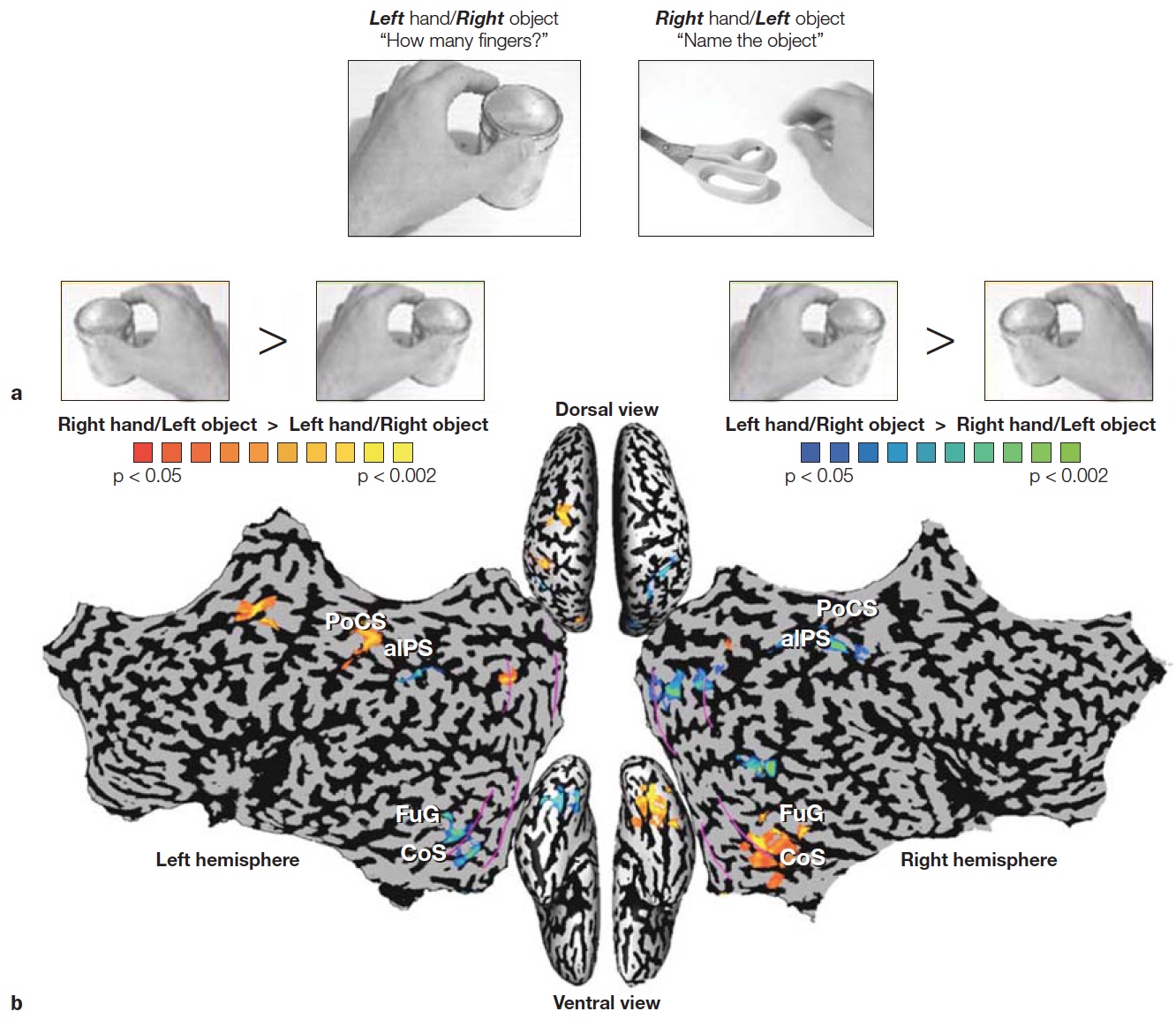

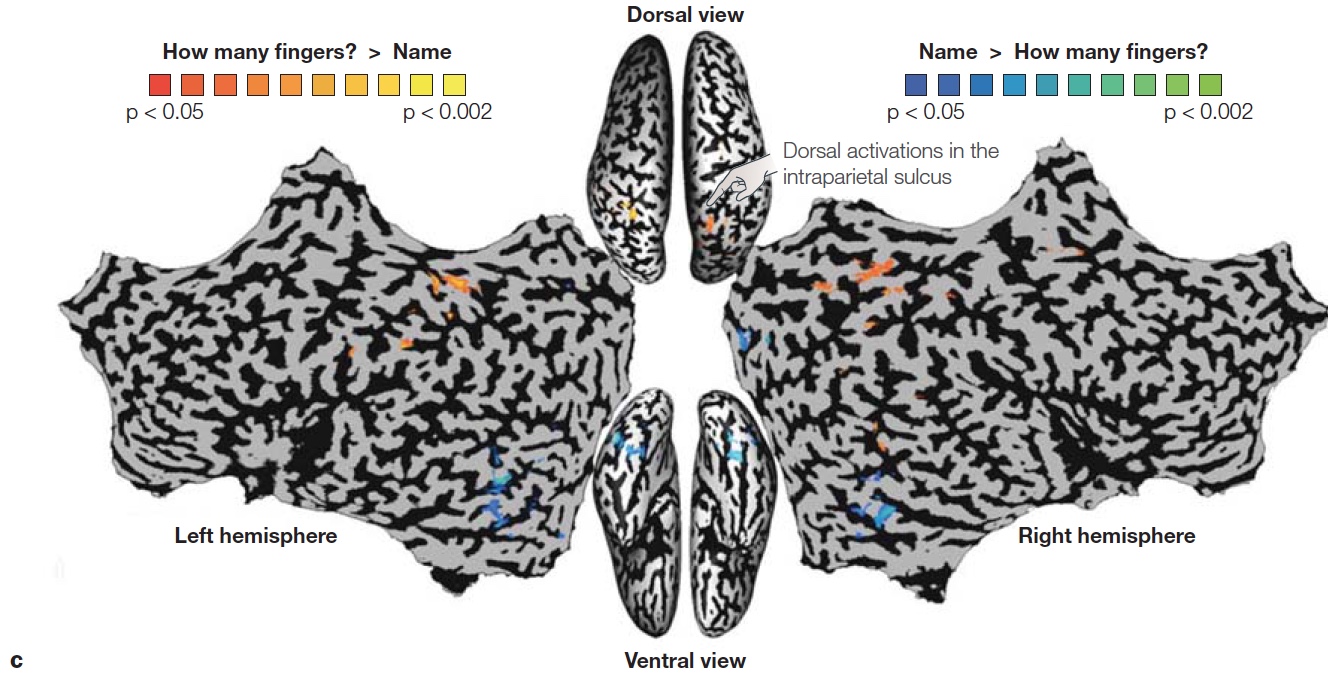

Although these examples are dramatic demonstrations of functional separation of “what” and “where” processing, do not forget that this evidence comes from the study of patients with rare disorders. It is also important to see if similar principles hold in healthy brains. Lior Shmuelof and Ehud Zohary designed a study to compare activity patterns in the dorsal and ventral streams in normal subjects (Shmuelof & Zohary, 2005). The participants viewed video clips of various objects that were being manipulated by a hand. The objects were presented in either the left or right visual field, and the hand approached the object from the opposite visual field (Figure 6.7a). Activation of the dorsal parietal region was driven by the position of the hand (Figure 6.7b). For example, when viewing a right hand reaching for an object in the left visual field, the activation was stronger in the left parietal region. In contrast, activation in ventral occipitotemporal cortex was correlated with the position of the object. In a second experiment, the participants were asked either to identify the object or judge how many fingers were used to grasp the object. Here again, ventral activation was stronger for the object identification task, but dorsal activation was stronger for the finger judgment task (Figure 6.7c).

In sum, the what–where or what–how dichotomy offers a functional account of two computational goals of higher visual processing. This distinction is best viewed as heuristic rather than absolute. The dorsal and ventral streams are not isolated from one another, but rather communicate extensively. Processing within the parietal lobe, the termination of the “where” pathway, serves many purposes. We have focused here on its guiding of action; in Chapter 7 we will see that the parietal lobe also plays a critical role in selective attention, the enhancement of processing at some locations instead of others. Moreover, spatial information can be useful for solving “what” problems. For example, depth cues help segregate a complex scene into its component objects. The rest of this chapter concentrates on object recognition—in particular, the visual system’s assortment of strategies that make use of both dorsal and ventral stream processing for perceiving and recognizing the world.

TAKE-HOME MESSAGES

Computational Problems in Object Recognition

Object perception depends primarily on an analysis of the shape of a visual stimulus. Cues such as color, texture, and motion certainly also contribute to normal perception. For example, when people look at the surf breaking on the shore, their acuity is not sufficient to see grains of sand, and water is essentially amorphous, lacking any definable shape. Yet the textures of the sand’s surface and the water’s edge, and their differences in color, enable us to distinguish between the two regions. The water’s motion is important too. Nevertheless, even if surface features like texture and color are absent or applied inappropriately, recognition is minimally affected: We can readily identify an elephant, an apple, and the human form in Figure 6.8, even though they are shown as pink, plaid, and wooden, respectively. Here object recognition is derived from a perceptual ability to match an analysis of shape and form to an object, regardless of color, texture, or motion cues.

FIGURE 6.7 Hemispheric asymmetries depend on location of object and hand used to reach the object.

(a) Video clips showed a left or right hand, being used to reach for an object on the left or right side of space. In the “Action” condition, participants judged the number of fingers used to contact the object. In the “Recognition” condition, participants named the object. (b) Laterality pattern in dorsal and ventral regions reveal preference for either the hand or object. Dorsal activation is related to the position of the hand, being greater in the hemisphere contralateral to the hand grasping the object. Ventral activation is related to the position of the object, being greater in the hemisphere contralateral to the object being grasped. (c) Combining across right hand and left hand pictures, dorsal activation in the intraparietal sulcus (orange) was stronger when judging how many fingers would be required to grasp the object, whereas ventral activation in occipitotemporal cortex (blue) was greater when naming the object.

FIGURE 6.8 Analyzing shape and form.

Despite the irregularities in how these objects are depicted, most people have little problem recognizing them. We may never have seen pink elephants or plaid apples, but our object recognition system can still discern the essential features that identify these objects as elephants and apples.

To account for shape-based recognition, we need to consider two problems. The first has to do with shape encoding. How is a shape represented internally? What enables us to recognize differences between a triangle and a square or between a chimp and a person? The second problem centers on how shape is processed, given that the position from which an object is viewed varies. We recognize shapes from an infinite array of positions and orientations, and our recognition system is not hampered by scale changes in the retinal image as we move close to or away from an object. Let’s start with the latter problem.

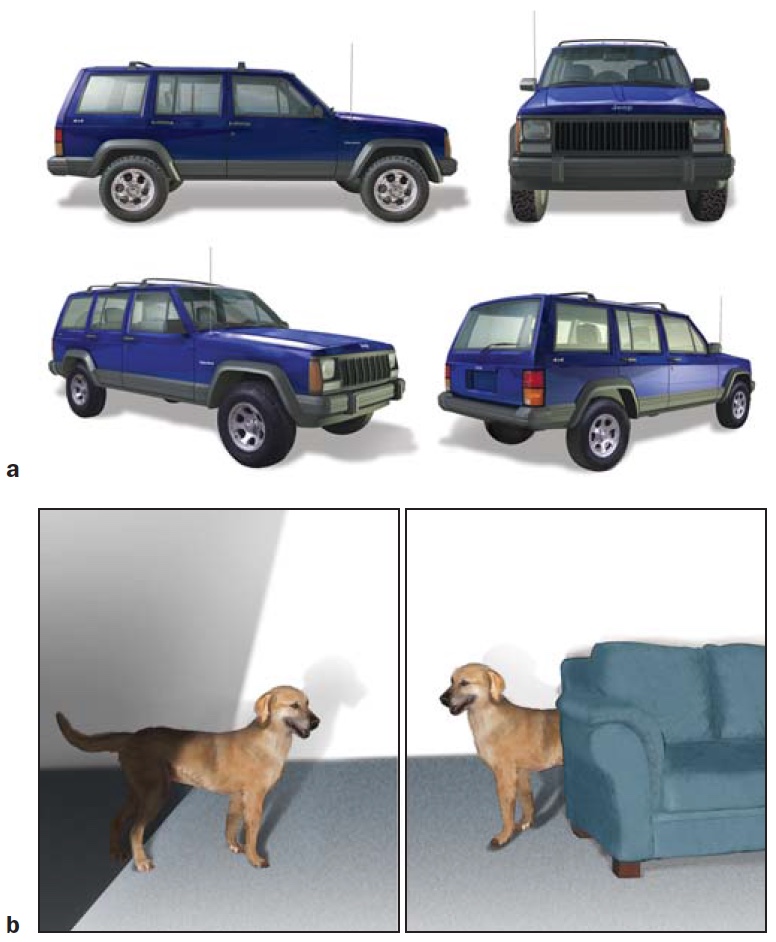

Variability in Sensory Information

Object constancy refers to our amazing ability to recognize an object in countless situations. Figure 6.9a shows four drawings of an automobile that have little in common with respect to sensory information reaching the eye. Yet we have no problem identifying the object in each picture as a car, and discerning that all four cars are the same model. The visual information emanating from an object varies for several reasons: viewing position, how it is illuminated, and the object’s surroundings. First, sensory information depends highly on viewing position. Viewpoint changes not only as you view an object from different angles, but when the object itself moves and thus changes its orientation relative to you. When a dog rolls over, or you walk around the room gazing at him, your interpretation of the object (the dog) remains the same despite the changes in how the image hits the retina and the retinal projection of shape. The human perceptual system is adept at separating changes caused by shifts in viewpoint from changes intrinsic to an object itself.

FIGURE 6.9 Object constancy.

(a) The image on the retina is vastly different for these four drawings of a car. (b) Other sources of variation in the sensory input include shadows and occlusion (where one object is in front of another). Despite this sensory variability, we rapidly recognize the objects and can judge if they depict the same object or different objects.

Moreover, while the visible parts of an object may differ depending on how light hits it and where shadows are cast (Figure 6.9b), recognition is largely insensitive to changes in illumination. A dog in the sun and dog in the shade still register as a dog.

Lastly, objects are rarely seen in isolation. People see objects surrounded by other objects and against varied backgrounds. Yet, we have no trouble separating a dog from other objects on a crowded city street, even when the dog is partially obstructed by pedestrians, trees, and hydrants. Our perceptual system quickly partitions the scene into components.

Object recognition must overcome these three sources of variability. But it also has to recognize that changes in perceived shape can actually reflect changes in the object. Object recognition must be general enough to support object constancy, and it must also be specific enough to pick out slight differences between members of a category or class.

View-Dependent Versus View-Invariant Recognition

A central debate in object recognition has to do with defining the frame of reference in which recognition occurs (D. Perrett et al., 1994). For example, when we look at a bicycle, we easily recognize it from its most typical view, from the side; but we also recognize it when looking down upon it or straight on. Somehow, we can take two-dimensional information from the retina and recognize a three-dimensional object from any angle. Various theories have been proposed to explain how we solve the problem of viewing position. These theories can be grouped into two categories: recognition is dependent on the frame of reference; or, recognition is independent of the frame of reference.

FIGURE 6.10 View-dependent object recognition.

View-dependent theories of object recognition posit that recognition processes depend on the vantage point. Recognizing that all four of these drawings depict a bicycle—one from a side view, one from an aerial view, and two viewed at an angle—requires matching the distinct sensory inputs to view-dependent representations.

Theories with a view-dependent frame of reference posit that people have a cornucopia of specific representations in memory; we simply need to match a stimulus to a stored representation. The key idea is that the stored representation for recognizing a bicycle from the side is different from the one for recognizing a bicycle viewed from above (Figure 6.10). Hence, our ability to recognize that two stimuli are depicting the same object is assumed to arise at a later stage of processing.

One shortcoming with view-dependent theories is that they seem to place a heavy burden on perceptual memory. Each object requires multiple representations in memory, each associated with a different vantage point. This problem is less daunting, however, if we assume that recognition processes are able to match the input to stored representations through an interpolation process. We recognize an object seen from a novel viewpoint by comparing the stimulus information to the stored representations and choosing the best match. When our viewing position of a bicycle is at a 41° angle, relative to vertical, a stored representation of a bicycle viewed at 45° is likely good enough to allow us to recognize the object. This idea is supported by experiments using novel objects—an approach that minimizes the contribution of the participants’ experience and the possibility of verbal strategies. The time needed to decide if two objects are the same or different increases as the viewpoints diverge, even when each member of the object set contains a unique feature (Tarr et al., 1997).

An alternative scheme proposes that recognition occurs in a view-invariant frame of reference. Recognition does not happen by simple analysis of the stimulus information. Rather, the perceptual system extracts structural information about the components of an object and the relationship between these components. In this scheme, the key to successful recognition is that critical properties remain independent of viewpoint (Marr, 1982). To stay with the bicycle example, the properties might be features such as an elongated shape running along the long axis, combined with a shorter, stick-like shape coming off of one end. Throw in two circularshaped parts, and we could recognize the object as a bicycle from just about any position.

As the saying goes, there’s more than one way to skin a cat. In fact, the brain may use both view-dependent and view-invariant operations to support object recognition. Patrick Vuilleumier and his colleagues at University College London explored this hypothesis in an fMRI study (Vuilleumier et al., 2002). The study was motivated by the finding from various imaging studies that, when a stimulus is repeated, the blood oxygen level– dependent (BOLD) response is lower in the second presentation compared to the first. This repetition suppression effect is hypothesized to indicate increased neural efficiency: The neural response to the stimulus is more efficient and perhaps faster when the pattern has been recently activated. To ask about view dependency, study participants were shown pictures of objects, and each picture was repeated over the course of the scanning session. The second presentation was either in the same orientation or from a different viewpoint.

Experimenters observed a repetition suppression effect in left ventral occipital cortex, regardless of whether the object was shown from the same or a different viewpoint (Figure 6.11a), consistent with a view-invariant representation. In contrast, activation in right ventral occipital cortex decreased only when the second presentation was from the original viewpoint (Figure 6.11b), consistent with a view-dependent representation. When the object was shown from a new viewpoint, the BOLD response was similar to that observed for the object in the initial presentation. Thus the two hemispheres may process information in different ways, providing two snapshots of the world (this idea is discussed in more detail in Chapter 4).

Shape Encoding

FIGURE 6.11 Asymmetry between left and right fusiform activation to repetition effects.

(a) A repetition suppression effect is observed in left ventral occipital cortex regardless of whether an object is shown from the same or a different viewpoint, consistent with a view-invariant representation. (b) In contrast, activation in the right ventral occipital cortex decreased relative to activity during the presentation of novel stimuli only when the second object was presented in the original viewpoint, consistent with a view-dependent representation.

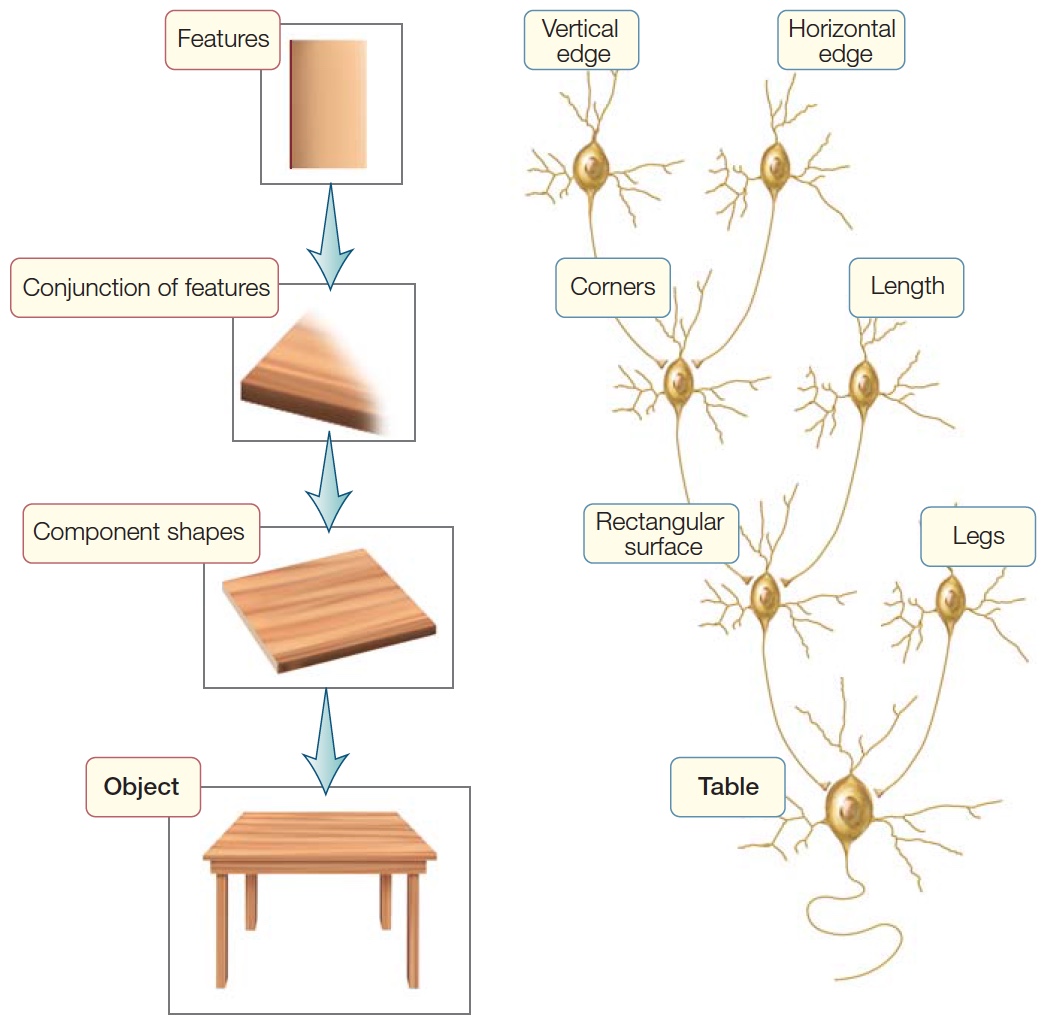

Now let’s consider how shape is encoded. In the last chapter, we introduced the idea that recognition may involve hierarchical representations in which each successive stage adds complexity. Simple features such as lines can be combined into edges, corners, and intersections, which—as processing continues up the hierarchy—are grouped into parts, and the parts grouped into objects.

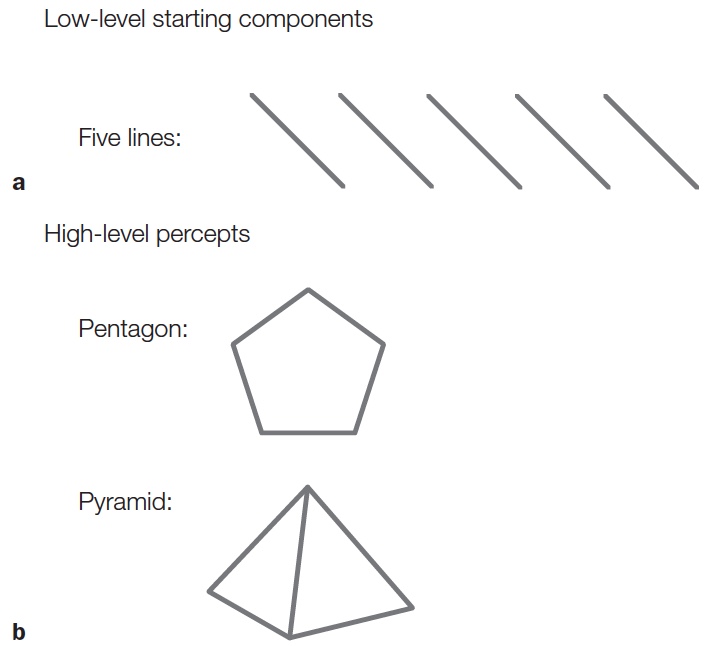

People recognize a pentagon because it contains five line segments of equal length, joined together to form five corners that define an enclosed region (Figure 6.12). The same five line segments can define other objects, such as a pyramid. With the pyramid, however, there are only four points of intersection, not five; and the lines define a more complicated shape that implies it is three-dimensional. The pentagon and the pyramid might activate similar representations at the lowest levels of the hierarchy, yet the combinations of these features into a shape produces distinct representations at higher levels of the processing hierarchy.

FIGURE 6.12 Basic elements and the different objects they can form.

The same basic components (five lines) can form different items (e.g., a pentagon or a pyramid) depending on their arrangement. Although the low-level components (a) are the same, the high-level percepts (b) are distinct.

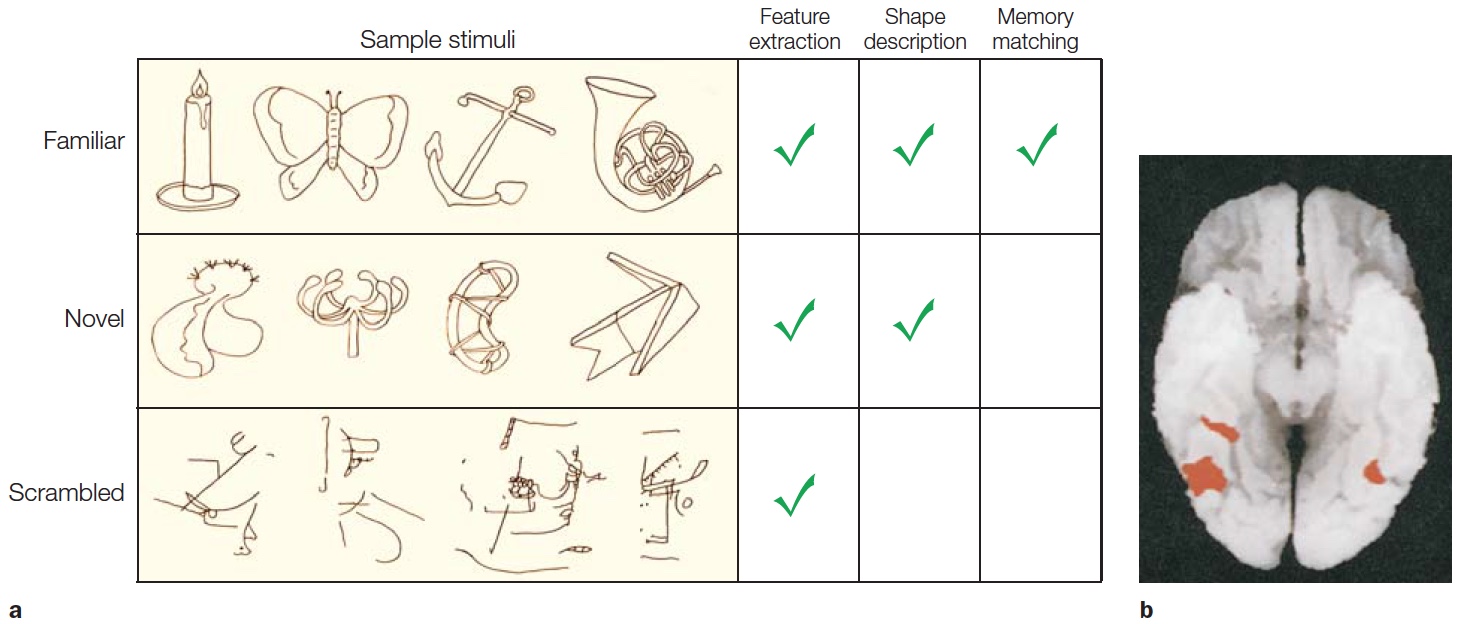

One way to investigate how we encode shapes is to identify areas of the brain that are active when comparing contours that form a recognizable shape versus contours that are just squiggles. How do activity patterns in the brain change when a shape is familiar? This question emphasizes the idea that perception involves a connection between sensation and memory (recall our four guiding principles of object recognition). Researchers explored this question in a PET study designed to isolate the specific mental operations used when people viewed familiar shapes, novel shapes, or stimuli formed by scrambling the shapes to form random drawings (Kanwisher et al., 1997a). All three types of stimuli should engage the early stages of visual perception, or what is called feature extraction (Figure 6.13a). To identify areas involved in object perception, a comparison can be made between responses to novel objects and responses to scrambled stimuli—as well as responses between familiar objects and scrambled stimuli—under the assumption that scrambled stimuli do not define objects per se. The memory retrieval contribution should be most evident when viewing novel or familiar objects. In the PET study, both novel and familiar stimuli led to increases in regional cerebral blood flow bilaterally in lateral occipital cortex (LOC, sometimes referred to as lateral occipital complex; Figure 6.13b). Since this study, many others have shown that the LOC is critical for shape and object recognition. Interestingly, no differences were found between the novel and familiar stimuli in these posterior cortical regions. At least within these areas, recognizing that something is unfamiliar may be as taxing as recognizing that something is familiar.

FIGURE 6.13 Component analysis of object recognition.

(a) Stimuli for the three conditions and the mental operations required in each condition. Novel objects are hypothesized to engage processes involved in perception even when verbal labels do not exist. (b) Activation was greater for the familiar and novel objects compared to the scrambled images along the ventral surface of the occipitotemporal cortex.

When we view an object such as a dog, it may be a real dog, a drawing of a dog, a statue of a dog, or an outline of a dog made of flashing lights. Still, we recognize each one as a dog. This insensitivity to the specific visual cues that define an object is known as cue invariance. Research has shown that, for the LOC, shape seems to be the most salient property of the stimulus. In one fMRI study, participants viewed stimuli in which shapes were defined by either lines (our normal percepts) or the coherent motion of dots. When compared to control stimuli with similar sensory properties, the LOC response was similar to the two types of object depictions (Grill-Spector et al., 2001; Figure 6.14). Thus the LOC can support the perception of the pink elephant or the plaid apple.

Grandmother Cells and Ensemble Coding

An object is more than just a shape, though. Somehow we also know that one dog shape is a real dog, and the other is a marble statue. How do people recognize specific objects? Some researchers have attempted to answer this question at the level of neurons by asking whether there are individual cells that respond only to specific integrated percepts. Furthermore, do these cells code for the individual parts that define the object? When you recognize an object as a tiger, does this happen because a neuron sitting at the top of the perceptual hierarchy, having combined all of the information that suggests a tiger, then becomes active? If the object had been a lion, would the same cell have been silent, despite the similarities in shape (and other properties) between a tiger and lion? Alternatively, does perception of an object depend on the firing of a collection of cells? In this case, when you see a tiger, a group of neurons that code for different features of the tiger might become active, but only some of them are also active when you see a lion.

|

FIGURE 6.14 BOLD response in lateral occipital |

Earlier in this chapter, we touched on the finding that cells in the inferotemporal lobe selectively respond to complex stimuli (e.g., objects, places, body parts, or faces; see Figure 6.3). This observation is consistent with hierarchical theories of object perception. According to these theories, cells in the initial areas of the visual cortex code elementary features such as line orientation and color. The outputs from these cells are combined to form detectors sensitive to higher order features such as corners or intersections—an idea consistent with the findings of Hubel and Wiesel (see Milestones in Cognitive Science: Pioneers in the Visual Cortex in Chapter 5). The process continues as each successive stage codes more complex combinations (Figure 6.15). The type of neuron that can recognize a complex object has been called a gnostic unit (from the Greek gnostikos, meaning “of knowledge”), referring to the idea that the cell (or cells) signals the presence of a known stimulus—an object, a place, or an animal that has been encountered in the past.

|

FIGURE 6.15 The hierarchical coding hypothesis. |

It is tempting to conclude that the cell represented by the recordings in Figure 6.3 signals the presence of a hand, independent of viewpoint. Other cells in the inferior temporal cortex respond preferentially to complex stimuli such as jagged contours or fuzzy textures. The latter might be useful for a monkey, in order to identify that an object has a fur-covered surface and therefore, might be the backside of another member of its group. Even more intriguing, researchers have discovered cells in the inferotemporal gyrus and the floor of the superior temporal sulcus that are selectively activated by faces. In a tongue-in-cheek manner, they coined the term grandmother cell to convey the notion that people’s brains might have a gnostic unit that becomes excited only when their grandmother comes into view. Other gnostic units would be specialized to recognize, for example, a blue Volkswagen or the Golden Gate Bridge.

Itzhak Fried and his colleagues at the University of California, Los Angeles, explored this question by making single-cell recordings in human participants (Quiroga et al., 2005). The participants in their study all had epilepsy; and, in preparation for a surgical procedure to alleviate their symptoms, they each had electrodes surgically implanted in their temporal lobe. In the study, participants were shown a wide range of pictures including animals, objects, landmarks, and individuals. The investigators’ first observation was that, in general, it was difficult to make these cells respond. Even when the stimuli were individually tailored to each participant based on an interview to determine that person’s visual history, the temporal lobe cells were generally inactive. Nonetheless, there were exceptions. Most notable, these exceptions revealed an extraordinary degree of stimulus specificity. Recall Figure 3.21, which shows the response of one temporal lobe neuron that was selectively activated in response to photographs of the actress Halle Berry. Ms. Berry could be wearing sunglasses, sporting dramatically different haircuts, or even be in costume as Catwoman from one of her movie roles—but in all cases, this particular neuron was activated. Other actresses or famous people failed to activate the neuron.

Let’s briefly return to the debate between grandmother-cell coding versus ensemble coding. Although you might be tempted to conclude that cells like these are gnostic units, it is important to keep in mind the limitations of such experiments. First, aside from the infinite number of possible stimuli, the recordings are performed on only a small subset of neurons. As such, this cell potentially could be activated by a broader set of stimuli, and many other neurons might respond in a similar manner. Second, the results also suggest that these gnostic-like units are not really “perceptual.” The same cell was also activated when the words Halle Berry were presented. This observation takes the wind out of the argument that this is a grandmother cell, at least in the original sense of the idea. Rather, the cell may represent the concept of “Halle Berry,” or even represent the name Halle Berry, a name that is likely recalled from memory for any of the stimuli relevant to Halle Berry.

Studies like this pose three problems for the traditional grandmother-cell hypothesis:

One alternative to the grandmother-cell hypothesis is that object recognition results from activation across complex feature detectors (Figure 6.16). Granny, then, is recognized when some of these higher order neurons are activated. Some of the cells may respond to her shape, others to the color of her hair, and still others to the features of her face. According to this ensemble hypothesis, recognition is not due to one unit but to the collective activation of many units. Ensemble theories readily account for why we can recognize similarities between objects (say, the tiger and lion) and may confuse one visually similar object with another: Both objects activate many of the same neurons. Losing some units might degrade our ability to recognize an object, but the remaining units might suffice. Ensemble theories also account for our ability to recognize novel objects. Novel objects bear a similarity to familiar things, and our percept results from activating units that represent their features.

FIGURE 6.16 The ensemble coding hypothesis.

Objects are defined by the simultaneous activation of a set of defining properties. “Granny” is recognized here by the co-occurrence of her wrinkles, face shape, hair color, and so on.

The results of single-cell studies of temporal lobe neurons are in accord with ensemble theories of object recognition. Although it is striking that some cells are selective for complex objects, the selectivity is almost always relative, not absolute. The cells in the inferotemporal cortex prefer certain stimuli to others, but they are also activated by visually similar stimuli. The cell represented in Figure 6.3, for instance, increases its activity when presented with a mitten-like stimulus. No cells respond to a particular individual’s hand; the hand-selective cell responds equally to just about any hand. In contrast, as people’s perceptual abilities demonstrate, we make much finer discriminations.

Summary of Computational Problems

We have considered several computational problems that must be solved by an object recognition system. Information is represented on multiple scales. Although early visual input can specify simple features, object perception involves intermediate stages of representation in which features are assembled into parts. Objects are not determined solely by their parts, though; they also are defined by the relationship between the parts. An arrow and the letter Y contain the same parts but differ in their arrangement. For object recognition to be flexible and robust, the perceived spatial relations among parts should not vary across viewing conditions.

TAKE-HOME MESSAGES

Failures in Object Recognition: The Big Picture

Now that we have some understanding of how the brain processes visual stimuli in order to recognize objects, let’s return to our discussion of agnosia. Many people who have suffered a traumatic neurological insult, or who have a degenerative disease such as Alzheimer’s, may experience problems recognizing things. This is not necessarily a problem of the visual system. It could be the result of the effects of the disease or injury on attention, memory, and language. Unlike someone with visual agnosia, for a person with Alzheimer’s disease, recognition failures persist even when an object is placed in their hands or if it is verbally described to them. As noted earlier, people with visual agnosia have difficulty recognizing objects that are presented visually or require the use of visually based representations. The key word is visual—these patients’ deficit is restricted to the visual domain. Recognition through other sensory modalities, such as touch or audition, is typically just fine.

Like patient G.S., who was introduced at the beginning of this chapter, visual agnostics can look at a fork yet fail to recognize it as a fork. When the object is placed in their hands, however, they will immediately recognize it (Figure 6.17a). Indeed, after touching the object, an agnosia patient may actually report seeing the object clearly. Because the patient can recognize the object through other modalities, and through vision with supplementary support, we know that the problem does not reflect a general loss of knowledge. Nor does it represent a loss of vision, for they can describe the object’s physical characteristics such as color and shape. Thus, their deficit reflects either a loss of knowledge limited to the visual system or a disruption in the connections between the visual system and modality-independent stores of knowledge. So, we can say that the label visual agnosia is restricted to individuals who demonstrate object recognition problems even though visual information continues to be registered at the cortical level.

FIGURE 6.17 Agnosia versus memory loss.

To diagnose an agnosic disorder, it is essential to rule out general memory problems. (a) The patient with visual agnosia is unable to recognize a fork by vision alone but immediately recognizes it when she picks it up. (b) The patient with a memory disorder is unable to recognize the fork even when he picks it up.

The 19th-century German neurologist Heinrich Lissauer was the first to suggest that there were distinct subtypes of visual object recognition deficits. He distinguished between recognition deficits that were sensory based and those that reflected an inability to access visually directed memory—a disorder that he melodramatically referred to as Seelenblindheit, or “soul blindness” (Lissauer, 1890). We now know that classifying agnosia as sensory based is not quite correct, at least not if we limit “sensory” to processes such as the detection of shape, features, color, motion, and so on. The current literature broadly distinguishes between three major subtypes of agnosia: apperceptive agnosia, integrative agnosia, and associative agnosia, roughly reflecting the idea that object recognition problems can arise at different levels of processing. Keep in mind, though, that specifying subtypes can be a messy business, because the pathology is frequently extensive and because a complex process such as object recognition, by its nature, involves a number of interacting component processes. Diagnostic categories are useful for clinical purposes, but generally have limited utility when these neurological disorders are used to build models of brain function. With that caveat in mind, we can now look at each of these forms of agnosia in turn.

Apperceptive Agnosia

Apperceptive agnosia can be a rather puzzling disorder. A standard clinical evaluation of visual acuity may fail to reveal any marked problems. The patient may perform normally on shape discrimination tasks and even have little difficulty recognizing objects, at least when presented from perspectives that make salient the most important features. The object recognition problems become evident when the patient is asked to identify objects based on limited stimulus information, either because the object is shown as a line drawing or seen from an unusual perspective.

Beginning in the late 1960s, Elizabeth Warrington embarked on a series of investigations of perceptual disabilities in patients possessing unilateral cerebral lesions caused by a stroke or tumor (Warrington & Rabin, 1970; Warrington, 1985). Warrington devised a series of tests to look at object recognition capabilities in one group of approximately 70 patients (all of whom were right-handed and had normal visual acuity). In a simple perceptual matching test, participants had to determine if two stimuli, such as a pattern of dots or lines, were the same or different. Patients with right-sided parietal lesions showed poorer performance than did either control subjects or patients with lesions of the left hemisphere. Left-sided damage had little effect on performance. This result led Warrington to propose that the core problem for patients with right-sided lesions involved the integration of spatial information (see Chapter 4).

To test this idea, Warrington devised the Unusual Views Object Test. Participants were shown photographs of 20 objects, each from two distinct views (Figure 6.18a). In one photograph, the object was oriented in a standard or prototypical view; for example, a cat was photographed with its head facing forward. The other photograph depicted an unusual or atypical view; for example, the cat was photographed from behind, without its face or feet in the picture. Participants were asked to name the objects shown. Although normal participants made few, if any, errors, patients with right posterior lesions had difficulty identifying objects that had been photographed from unusual orientations. They could name the objects photographed in the prototypical orientation, which confirmed that their problem was not due to lost visual knowledge.

HOW THE BRAIN WORKS

Auditory Agnosia

Other sensory modalities besides visual perception surely contribute to object recognition. Distinctive odors in a grocery store enable us to determine which bunch of greens is thyme and which is basil. Using touch, we can differentiate between cheap polyester and a fine silk garment. We depend on sounds, both natural and human-made, to cue our actions. A siren prompts us to search for a nearby police car or ambulance, or anxious parents immediately recognize the cries of their infant and rush to the baby’s aid. Indeed, we often overlook our exquisite auditory capabilities for object recognition. Have a friend rap on a wooden tabletop, or metal filing cabinet, or glass window. You will easily distinguish between these objects.

Numerous studies have documented failures of object recognition in other sensory modalities. As with visual agnosia, a patient has to meet two criteria to be labeled agnosic. First, a deficit in object recognition cannot be secondary to a problem with perceptual processes. For example, to be classified as having auditory agnosia, patients must perform within normal limits on tests of tone detection; that is, the loudness of a sound that’s required for the person to detect it must fall within a normal range. Second, the deficit in recognizing objects must be restricted to a single modality. For example, a patient who cannot identify environmental sounds such as the ones made by flowing water or jet engines must be able to recognize a picture of a waterfall or an airplane.

Consider a patient, C.N., reported by Isabelle Peretz and her colleagues (1994) at the University of Montreal. A 35-year-old nurse, C.N. had suffered a ruptured aneurysm in the right middle cerebral artery, which was repaired. Three months later, she was diagnosed with a second aneurysm, in the left middle cerebral artery which also required surgery. Postoperatively, C.N.’s abilities to detect tones and to comprehend and produce speech were not impaired. But she immediately complained that her perception of music was deranged. Her amusia, or impairment in music abilities, was verified by tests. For example, she could not recognize melodies taken from her personal record collection, nor could she recall the names of 140 popular tunes, including the Canadian national anthem.

C.N.’s deficit could not be attributed to a problem with long-term memory. She also failed when asked to decide if two melodies were the same or different. Evidence that the problem was selective to auditory perception was provided by her excellent ability to identify these same songs when shown the lyrics. Similarly, when given the title of a musical piece such as The Four Seasons, C.N. responded that the composer was Vivaldi and could even recall when she had first heard the piece.

Just as interesting as C.N.’s amusia was her absence of problems with other auditory recognition tests. C.N. understood speech, and she was able to identify environmental sounds such as animal cries, transportation noises, and human voices. Even within the musical domain, C.N. did not have a generalized problem with all aspects of music comprehension. She performed as well as normal participants when asked to judge if two-tone sequences had the same rhythm. Her performance fell to a level of near chance, however, when she had to decide if the two sequences were the same melody. This dissociation makes it less surprising that, despite her inability to recognize songs, she still enjoyed dancing!

Other cases of domain-specific auditory agnosia have been reported. Many patients have an impaired ability to recognize environmental sounds, and, as with amusia, this deficit is independent of language comprehension problems. In contrast, patients with pure word deafness cannot recognize oral speech, even though they exhibit normal auditory perception for other types of sounds and have normal reading abilities. Such category specificity suggests that auditory object recognition involves several distinct processing systems. Whether the operation of these processes should be defined by content (e.g., verbal versus nonverbal input) or by computations (e.g., words and melodies may vary with regard to the need for part-versus-whole analysis) remains to be seen... or rather heard.

This impairment can be understood by going back to our earlier discussion of object constancy. A hallmark of human perceptual systems is that from an infinite set of percepts, we readily extract critical features that allow us to identify objects. Certain vantage points are better than others, but the brain is designed to overcome variability in the sensory input to recognize both similarities and differences between different inputs. The ability to achieve object constancy is compromised in patients with apperceptive agnosia. Although these patients can recognize objects, this ability diminishes when the perceptual input is limited (as with shadows; Figure 6.18b) or does not include the most salient features (as with atypical views). The finding that this type of disorder is more common in patients with right-hemisphere lesions suggests that this hemisphere is essential for the operations required to achieve object constancy.

|

FIGURE 6.18 Tests used to identify apperceptive agnosia. |

|

Integrative Agnosia

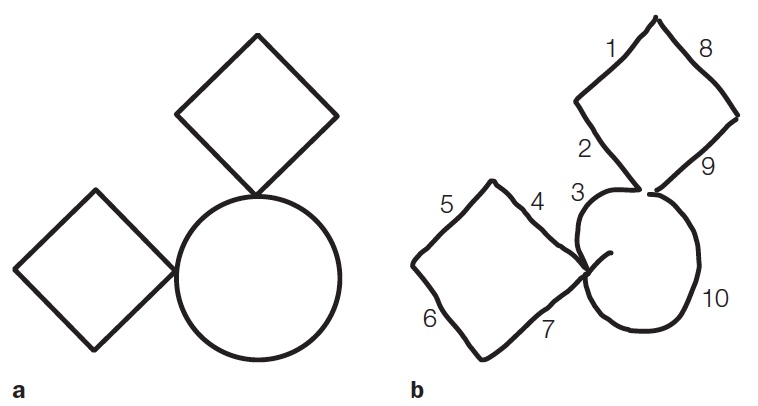

People with integrative agnosia are unable to integrate features into parts, or parts of an object into a coherent whole. This classification of agnosia was first suggested by Jane Riddoch and Glyn Humphreys following an intensive case study of one patient, H.J.A. The patient had no problem doing shape-matching tasks and, unlike with apperceptive agnosia, was successful in matching photographs of objects seen from unusual views. His object recognition problem, however, became apparent when he was asked to identify objects that overlapped one another (Humphreys & Riddoch, 1987; Humphreys et al., 1994). He was either at a loss to describe what he saw, or would build a percept only step-by-step. Rather than perceive an object at a glance, H.J.A. relied on recognizing salient features or parts. To recognize a dog, he would perceive each of the legs, the characteristic shape of the body and head, and then use these part representations to identify the whole object. Such a strategy runs into problems when objects overlap, because the observer must not only identify the parts but also correctly assign parts to objects.

FIGURE 6.19 Patients with integrative agnosia do not see objects holistically.

Patient C.K. was asked to copy the figure shown in (a). His overall performance (b) was quite good; the two diamonds and the circle can be readily identified. However, as noted in the text, the numbers indicate the order he used to produce the segments.

A telling example of this deficit is provided by the drawings of another patient with integrative agnosia—C.K., a young man who suffered a head injury in an automobile accident (Behrmann et al., 1994). C.K. was shown a picture consisting of two diamonds and one circle in a particular spatial arrangement and asked to reproduce the drawing (Figure 6.19). Glance at the drawing in Figure 6.19b—not bad, right? But now look at the numbers, indicating the order in which C.K. drew the segments to form the overall picture. After starting with the left-hand segments of the upper diamond, C.K. proceeded to draw the upper left-hand arc of the circle and then branched off to draw the lower diamond before returning to complete the upper diamond and the rest of the circle. For C.K., each intersection defined the segments of different parts. He failed to link these parts into recognizable wholes—the defining characteristic of integrative agnosia. Other patients with integrative agnosia are able to copy images perfectly, but cannot tell you what they are.

Object recognition typically requires that parts be integrated into whole objects. The patient described at the beginning of this chapter, G.S., exhibited some features of integrative agnosia. He was fixated on the belief that the combination lock was a telephone because of the circular array of numbers, a salient feature (part) on the standard rotary phones of his time. He was unable to integrate this part with the other components of the combination lock. In object recognition, the whole truly is greater than the sum of its parts.

Associative Agnosia

Associative agnosia is a failure of visual object recognition that cannot be attributed to a problem of integrating parts to form a whole, or to a perceptual limitation, such as a failure of object constancy. A patient with associative agnosia can perceive objects with his visual system, but cannot understand or assign meaning to the objects. Associative agnosia rarely exists in a pure form; patients often perform abnormally on tests of basic perceptual abilities, likely because their lesions are not highly localized. Their perceptual deficiencies, however, are not proportional to their object recognition problem.

For instance, one patient, F.R.A., awoke one morning and discovered that he could not read his newspaper—a condition known as alexia, or acquired alexia (R. McCarthy & Warrington, 1986). A CT scan revealed an infarct of the left posterior cerebral artery. The lesioned area was primarily in the occipital region of the left hemisphere, although the damage probably extended into the posterior temporal cortex. F.R.A. could copy geometric shapes and could point to objects when they were named. Notably, he could segment a complex drawing into its parts (Figure 6.20). Apperceptive and integrative agnosia patients fail miserably when instructed to color each object differently. In contrast, F.R.A. performed the task effortlessly. Despite this ability, though, he could not name the objects that he had colored. When shown line drawings of common objects, he could name or describe the function of only half of them. When presented with images of animals that were depicted to be the same size, such as a mouse and a dog, and asked to point to the larger one, his performance was barely above chance. Nonetheless, his knowledge of such properties was intact. If the two animal names were said aloud, F.R.A. could do the task perfectly. Thus his recognition problems reflected an inability to access that knowledge from the visual modality. Associative agnosia is reserved for patients who derive normal visual representations but cannot use this information to recognize things.

|

FIGURE 6.20 Alexia patient F.R.A.’s drawings. |

FIGURE 6.21 Matching-by-Function Test.

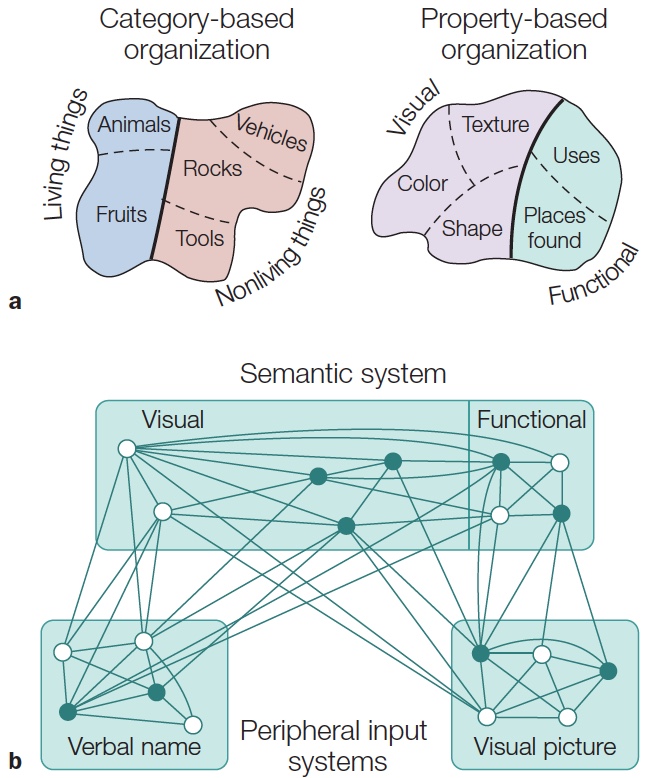

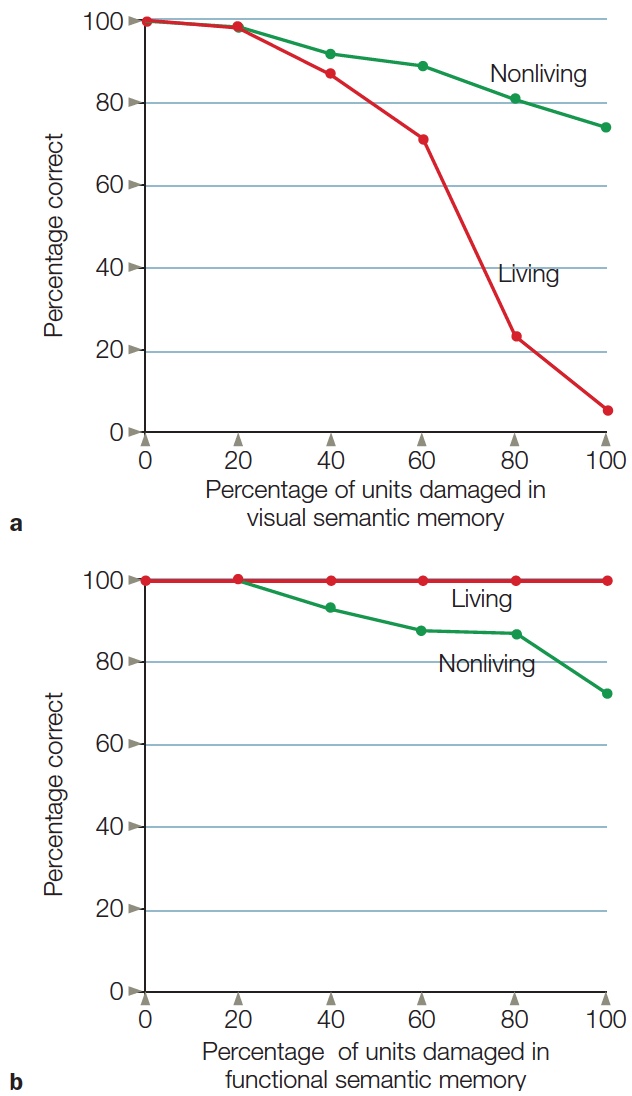

Participants are asked to choose the two objects that are most similar in function.