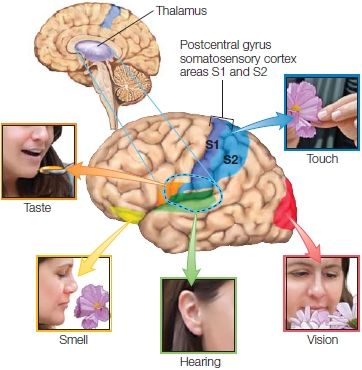

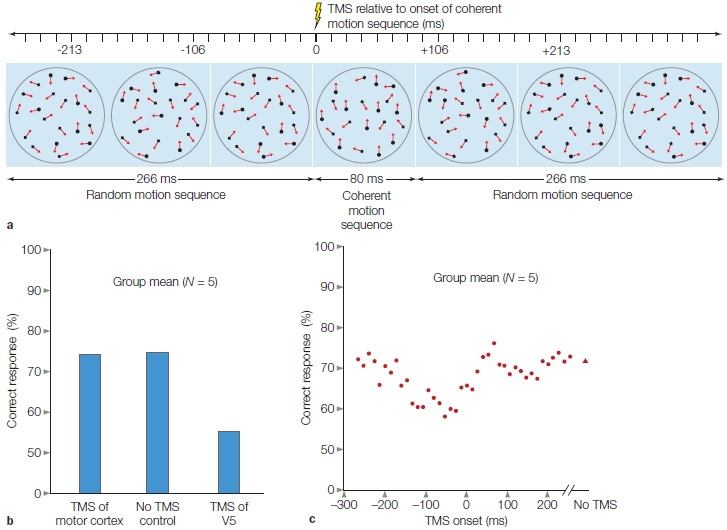

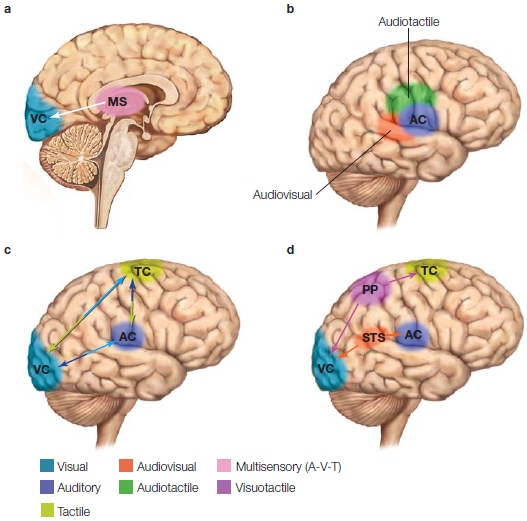

FIGURE 5.1 Major sensory regions of the cerebral cortex.

Monet is only an eye, but my God, what an eye! ~ Paul Cézanne

Chapter 5

Sensation and Perception

OUTLINE

Senses, Sensation, and Perception

Sensation: Early Perceptual Processing

Audition

Olfaction

Gustation

Somatosensation

Vision

From Sensation to Perception

Deficits in Visual Perception

Multimodal Perception: I See What You’re Sayin’

Perceptual Reorganization

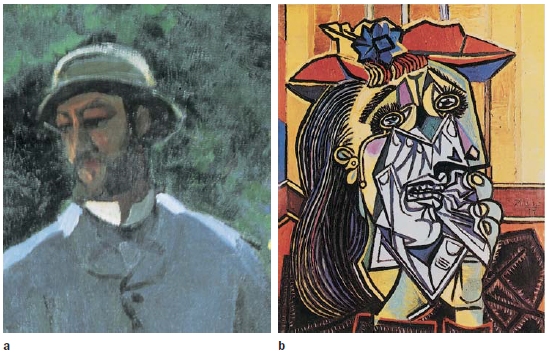

IN HOSPITALS ACROSS THE COUNTRY, Neurology Grand Rounds is a weekly event. There, staff neurologists, internists, and residents gather to review the most puzzling and unusual cases being treated on the ward. In Portland, Oregon, the head of neurology presented such a case. He was not puzzled about what had caused his patient’s problem. That was clear. The patient, P.T., had suffered a cerebral vascular accident, commonly known as a stroke. In fact, he had sustained two strokes. The first, suffered 6 years previously, had been a left hemisphere stroke. The patient had shown a nearly complete recovery from that stroke. P.T. had suffered a second stroke a few months before, however, and the CT scan showed that the damage was in the right hemisphere. This finding was consistent with the patient’s experience of left-sided weakness, although the weakness had mostly subsided after a month.

The unusual aspect of P.T.’s case was the collection of symptoms he continued to experience 4 months later. As he tried to resume the daily routines required on his small family farm, P.T. had particular difficulty recognizing familiar places and objects. While working on a stretch of fence, for example, he might look out over the hills and suddenly realize that he did not know the landscape. It was hard for him to pick out individual dairy cows—a matter of concern lest he attempt to milk a bull! Disturbing as this was, it was not the worst of his problems. Most troubling of all, he no longer recognized the people around him, including his wife. He had no trouble seeing her and could accurately describe her actions, but when it came to identifying her, he was at a complete loss. She was completely unrecognizable to him! He knew that her parts—body, legs, arms, and head—formed a person, but P.T. failed to see these parts as belonging to a specific individual. This deficit was not limited to P.T.’s wife; he had the same problem with other members of his family and friends from his small town, a place he had lived for 66 years.

A striking feature of P.T.’s impairment was that his inability to recognize objects and people was limited to the visual modality. As soon as his wife spoke, he immediately recognized her voice. Indeed, he claimed that, on hearing her voice, the visual percept of her would “fall into place.” The shape in front of him would suddenly morph into his wife. In a similar fashion, he could recognize specific objects by touching, smelling, or tasting them.

Senses, Sensation, and Perception

The overarching reason why you are sitting here reading this book today is that you had ancestors who successfully survived their environment and reproduced. One reason they were able to do this was their ability to sense and perceive things that could be threatening to their survival and then act on those perceptions. Pretty obvious, right? Less obvious is that most of these perceptions and behavioral responses never even reach people’s conscious awareness, and what does reach our awareness is not an exact replica of the stimulus. This latter phenomenon becomes more evident when we are presented with optical illusions (as we see later in the chapter). Perception begins with a stimulus from the environment, such as sound or light, which stimulates one of the sense organs such as the ear or eye. The input from the sound or light wave is transduced into neural activity by the sense organ and sent to the brain for processing. Sensation refers to the early processing that goes on. The mental representation of that original stimulus, which results from the various processing events, whether it accurately reflects the stimulus or not, is called a percept. Thus, perception is the process of constructing the percept.

Our senses are our physiological capacities to provide input from the environment to our neurological system. Hence, our sense of sight is our capacity to capture light waves on the retina, convert them into electrical signals, and ship them on for further processing. We tend to give most of the credit for our survival to our sense of sight, but it does not operate alone. For instance, the classic “we don’t have eyes in the back of our head” problem means we can’t see the bear sneaking up behind us. Instead, the rustling of branches or the snap of a twig warns us. We do not see particularly well in the dark either, as many people know after stubbing a toe when groping about to find the light switch. And though the milk may look fine, one sniff tells you to dump it down the drain. Although these examples illustrate the interplay of senses on the conscious level, neuroimaging studies have helped to reveal that extensive interaction takes place between the sensory modalities much earlier in the processing pathways than was previously imagined.

In normal perception, all of the senses are critical. Effectively and safely driving a car down a busy highway requires the successful integration of seeing, touch, hearing, and perhaps even smell (warning, for example, that you have been riding the brakes down a hill). Enjoying a meal also involves the interplay of the senses. We cannot enjoy food intensely without smelling its fragrance. The sense of touch is an essential part of our gastronomic experience also, even if we don’t think much about it. It gives us an appreciation for the texture of the food: the creamy smoothness of whipped cream or the satisfying crunch of an apple. Even visual cues enhance our gustatory experience—a salad of green, red, and orange hues is much more enticing than one that is brown and black.

In this chapter, we begin with an overview of sensation and perception and then turn to a description of what is known about the anatomy and function of the individual senses. Next we tackle the issue of how information from our different sensory systems is integrated to produce a coherent representation of the world. We end by discussing the interesting phenomenon of synesthesia—what happens when sensory information is more integrated than is usual.

Sensation: Early Perceptual Processing

Shared Processing From Acquisition to Anatomy

Before dealing with each sense individually, let’s look at the anatomical and processing features that the sensory systems have in common. Each system begins with some sort of anatomical structure for collecting, filtering, and amplifying information from the environment. For instance, the outer ear, the ear canal, and inner ear concentrate and amplify sound. In vision, the muscles of the eye direct the gaze, the pupil size is adjusted to filter the amount of light, and the cornea and lens refract light to focus it on the retina. Each system has specialized receptor cells that transduce the environmental stimulus, such as sound waves or light waves or chemicals, into neural signals. These neural signals are passed along their specific sensory nerve pathways: the olfactory signals via the olfactory nerve (first cranial nerve); visual signals via the optic nerve (second cranial nerve); auditory signals via the cochlear nerve (also called the auditory nerve, which joins with the vestibular nerve to form the eighth cranial nerve); taste via the facial and glossopharyngeal nerves (seventh and ninth cranial nerves); facial sensation via the trigeminal nerve (fifth cranial nerve); and sensation for the rest of the body via the sensory nerves that synapse in the dorsal roots of the spinal cord.

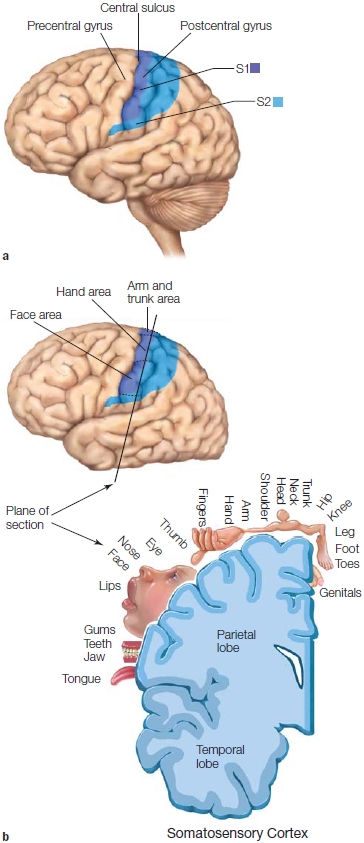

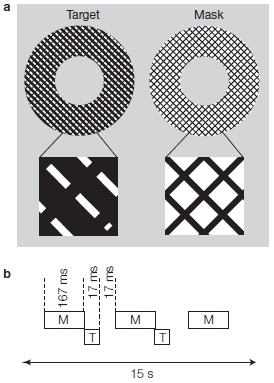

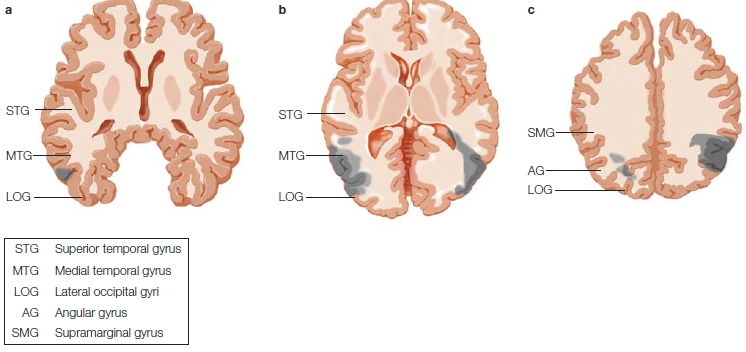

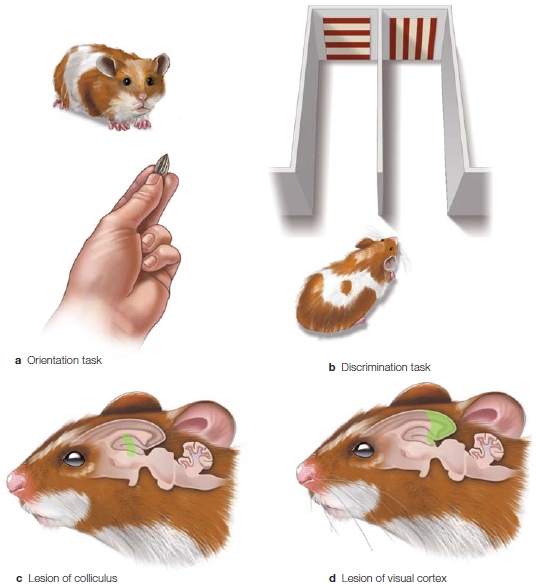

FIGURE 5.1 Major sensory regions of the cerebral cortex.

The sensory nerves from the body travel up the spinal cord and enter the brain through the medulla, where the glossopharyngeal and vestibulocochlear nerves also enter. The facial nerve enters the brainstem at the pontomedullary junction. The trigeminal nerve enters at the level of the pons. These nerves all terminate in different parts of the thalamus (Figure 5.1). The optic nerve travels from the eye socket to the optic chiasm, where fibers from the nasal half of each retina cross to the opposite side of the brain, and most (not all) of the newly combined fibers terminate in the thalamus. From the thalamus, neural connections from each of these pathways travel first to what is known as primary sensory cortex, and then to secondary sensory cortex (Figure 5.1). The olfactory nerve is a bit of a rogue. It is the shortest cranial nerve and follows a different course. It terminates in the olfactory bulb, and axons extending from here course directly to the primary and secondary olfactory cortices without going through the brainstem or the thalamus.

Receptors Share Responses to Stimuli

Across the senses, receptor cells share a few general properties. Receptor cells are limited in the range of stimuli that they respond to, and as part of this limitation, their capability to transmit information has only a certain degree of precision. Receptor cells do not become active until the stimulus exceeds some minimum intensity level. They are not fixed entities, but rather adapt as the environment changes.

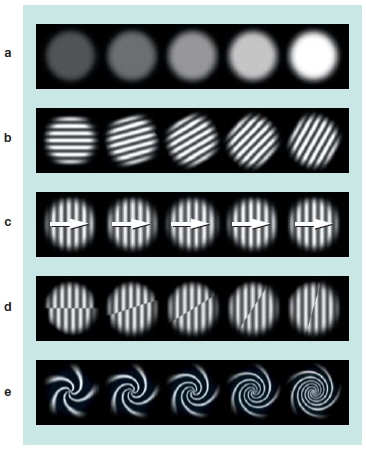

FIGURE 5.2 Vision and light.

(a) The electromagnetic spectrum. The small, colored section in the center indicates the part of the spectrum that is visible to the human eye. (b) The visible region of the electromagnetic spectrum varies across species. An evening primrose as seen by humans (left) and bees (right). Bees perceive the ultraviolet part of the spectrum.

Range. Each sensory modality responds to a limited range of stimuli. Most people’s impression is that human color vision is unlimited. However, there are many colors, or parts of the electromagnetic spectrum, that we cannot see (Figure 5.2). Our vision is limited to a small region of this spectrum, wavelengths of light in the range of 400 to 700 nanometers (nm). Individual receptor cells respond to just a portion of this range. This range is not the same for all species. For example, birds and insects have receptors that are sensitive to shorter wavelengths and thus can see ultraviolet light (Figure 5.2b, right). Some bird species actually exhibit sexual dichromatism (the male and female have different coloration) that is not visible to humans. Similar range differences are found in audition. We are reminded of this when we blow a dog whistle (invented by Francis Galton, Charles Darwin’s cousin). We immediately have the dog’s attention, but we cannot hear the high-pitched sound ourselves. Dogs can hear sound-wave frequencies of up to about 60 kilohertz (kHz), but we hear only sounds below about 20 kHz. Although a dog has better night vision than we do, we see more colors. Dogs cannot see the red–green spectrum. As limited as our receptor cells may be, we do respond to a wide range of stimulus intensities. The threshold stimulus value is the minimum stimulus that will activate a percept.

Adaptation. Adaptation refers to how sensory systems stay fine-tuned. It is the adjusting of the sensitivity of the sensory system to the current environment and to important changes in the environment. You will come to see that perception is mainly concerned with changes in sensation. This makes good survival sense. Adaptation happens quickly in the olfactory system. You smell the baking bread when you walk into the bakery, but the fragrance seems to evaporate quickly. Our auditory system also adapts rather quickly. When we first turn the key to start a car, the sound waves from the motor hit our ears, activating sensory neurons. But this activity soon stops, even though the stimulus continues as we drive along the highway. Some neurons continue to fire as long as the stimulus continues, but their rate of firing slows down: the longer the stimulus continues, the less frequent the action potentials are. The noise of the motor drops into the background, and we have “adapted” to it.
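The slowing of firing under a sustained stimulus can be sketched numerically. The short Python example below is only an illustration: the exponential form and every number in it (onset rate, steady rate, time constant) are assumptions chosen for readability, not measurements reported in this chapter.

```python
import math


def adapted_rate(t, onset_rate=100.0, steady_rate=20.0, tau=2.0):
    """Firing rate (spikes/s) t seconds after a constant stimulus begins.

    Hypothetical model: the rate starts at onset_rate and relaxes
    exponentially toward steady_rate with time constant tau (seconds).
    All three parameters are illustrative assumptions.
    """
    if t < 0:
        return 0.0  # no stimulus yet, so no driven response
    return steady_rate + (onset_rate - steady_rate) * math.exp(-t / tau)


if __name__ == "__main__":
    # The stimulus never changes, yet the response keeps shrinking.
    for t in (0, 1, 2, 5, 10):
        print(f"t = {t:>2} s   rate = {adapted_rate(t):6.1f} spikes/s")
```

In this sketch the neuron never falls silent; it settles at a lower rate, matching the description of cells that keep firing but less often the longer the stimulus lasts.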

ANATOMICAL ORIENTATION

Anatomy of the senses

Sensory inputs about taste, touch, smell, hearing, and seeing travel to specific regions of the brain for initial processing.

Visual system adaptation also occurs for changes in the light intensity in the environment. We frequently move between areas with different light intensities, for instance, when walking from a shaded area into the bright sunlight. It takes some time for the eyes to reset to the ambient light conditions, especially when going from bright light into darkness. When you go camping for the first time with veteran campers, one of the first things you are going to be told is not to shine your flashlight into someone’s eyes. It would take about 20–30 minutes for that person to regain her “night vision,” that is, to regain sensitivity to the low level of ambient light after being exposed to the bright light. We discuss how this works later, in the Vision section.

Acuity. Our sensory systems are tuned to respond to different sources of information in the environment. Light activates receptors in the retina, pressure waves produce mechanical and electrical changes in the eardrum, and odor molecules are absorbed by receptors in the nose. How good we are at distinguishing among stimuli within a sensory modality, or what we would call acuity, depends on a couple of factors. One is simply the design of the stimulus collection system. Dogs can adjust the position of their two ears independently to better capture sound waves. This design contributes to their ability to hear sounds that are up to four times farther away than humans are capable of hearing. Another factor is the number and distribution of the receptors. For instance, for touch, we have many more receptors on our fingers than we do on our back; thus, we can discern stimuli better with our fingers. Our visual acuity is better than that of most animals, but not better than that of an eagle. Our acuity is best in the center of our visual field, because the central region of the retina, the fovea, is packed with photoreceptors. The farther away from the fovea, the fewer the receptors. The same is true for the eagle, but it has two foveas in each eye.

In general, if a sensory system devotes more receptors to certain types of information (e.g., as in the sensory receptors of the hands), there is a corresponding increase in cortical representation of that information (see, for example, Figure 5.16). This finding is interesting, because many creatures carry out exquisite perception without a cortex. So what is our cortex doing with all of the sensory information? The expanded sensory capabilities in humans, and mammals in general, are probably not for better sensation per se; rather, they allow that information to support flexible behavior, due to greatly increased memory capacity and pathways linking that information to our action and attention systems.

Sensory Stimuli Share an Uncertain Fate. The physical stimulus is transduced into neural activity (i.e., electrochemical signals) by the receptors and sent through subcortical and cortical regions of the brain to be processed. Sometimes a stimulus may produce subjective sensory awareness. When that happens, the stimulus is not the only factor contributing to the end product. Each level of processing, including attention, memory, and emotional systems, contributes as well. Even with all of this activity going on, most of the sensory stimulation never reaches the level of consciousness. No doubt if you close your eyes right now, you will not be able to describe everything that is in front of you, although it has all been recorded on your retina.

Connective Similarities. Most people typically think of sensory processing as working in one direction; that is, information moves from the sensory organs to the brain. Neural activity, however, is really a two-way street. At all levels of the sensory pathways, neural connections are going in both directions. This feature is especially pronounced at the interface between the subcortex and cortex. Sensory signals from the visual, auditory, somatosensory, and gustatory (taste) systems all synapse within the thalamus before projecting onto specific regions within the cortex. The visual pathway passes through the lateral geniculate nucleus (LGN) of the thalamus, the auditory system through the medial geniculate nucleus (MGN), the somatic pathway through the ventral posterior nuclear complex, and the gustatory pathway through the ventral posteromedial nucleus. Just exactly what is going on in the thalamus is unclear. It appears to be more than just a relay station. Not only are there projections from these nuclei to the cortex, but the thalamic nuclei are interconnected, providing an opportunity for multisensory integration, an issue we turn to later in the chapter. The thalamus also receives descending, or feedback, connections from primary sensory regions of the cortex as well as other areas of the cortex, such as the frontal lobe. These connections appear to provide a way for the cortex to control, to some degree, the flow of information from the sensory systems (see Chapter 7).

Now that we have a general idea of what is similar about the anatomy of the various sensory systems and processing of sensory stimuli, let’s take a closer look at the individual sensory systems.

Audition

Imagine you are out walking to your car late at night, and you hear a rustling sound. Your ears (and heart!) are working on overdrive, trying to determine what is making the sound (or more troubling, who) and where the sound is coming from. Is it merely a tree branch blowing in the breeze, or is someone sneaking up behind you? The sense of hearing, or audition, plays an important role in our daily lives. Sounds can be essential for survival—we want to avoid possible attacks and injury—but audition also is fundamental for communication. How does the brain process sound? What happens as sound waves enter the ear? And how does our brain interpret these signals? More specifically, how does the nervous system figure out the what and the where of sound sources?

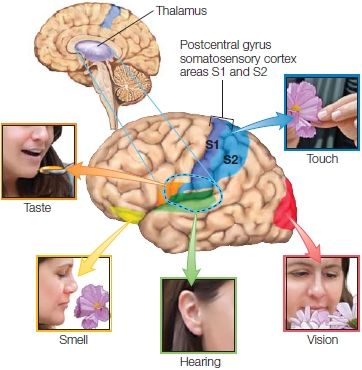

Neural Pathways of Audition

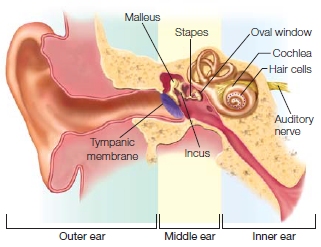

Figure 5.3 presents an overview of the auditory pathways. The complex structures of the inner ear provide the mechanisms for transforming sounds (variations in sound pressure) into neural signals. This is how hearing works: Sound waves arriving at the ear enter the auditory canal. Within the canal, the sound waves are amplified, similar to what happens when you honk your car’s horn in a tunnel. The waves travel to the far end of the canal, where they hit the tympanic membrane, or eardrum, and make it vibrate. These low-pressure vibrations then travel through the air-filled middle ear and rattle three tiny bones, the malleus, incus, and stapes, which cause a second membrane, the oval window, to vibrate.

FIGURE 5.3 Overview of the auditory pathway.

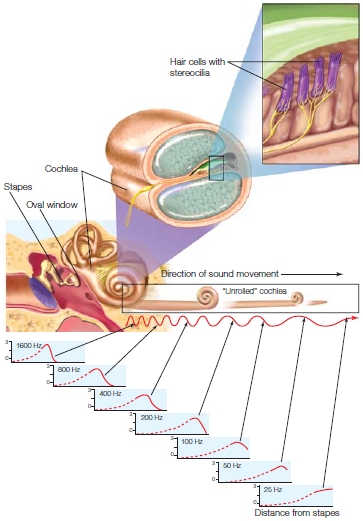

The oval window is the “door” to the fluid-filled cochlea, the critical auditory structure of the inner ear. Within the cochlea are tiny hair cells located along the inner surface of the basilar membrane. The hair cells are the sensory receptors of the auditory system. Each hair cell carries up to 200 tiny filaments known as stereocilia that float in the fluid. The vibrations at the oval window produce tiny waves in the fluid that move the basilar membrane, deflecting the stereocilia. The location of a hair cell on the basilar membrane determines its frequency tuning, the sound frequency that it responds to. This is because the thickness (and thus, the stiffness) of the basilar membrane varies along its length from the oval window to the apex of the cochlea. The thickness constrains how the membrane will move in response to the fluid waves. Near the oval window, the membrane is thick and stiff. Hair cells attached here can respond to high-frequency vibrations in the waves. At the other end, the apex of the cochlea, the membrane is thinner and less stiff. Hair cells attached here will respond only to low frequencies. This spatial arrangement of the sound receptors is known as tonotopy, and the arrangement of the hair cells along the cochlear canal forms a tonotopic map. Thus, even at this early stage of the auditory system, information about the sound source can be discerned.
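The place-to-frequency relationship described above can be made concrete with a short Python sketch. It uses Greenwood's place-frequency function, which is not introduced in this chapter; the function and the constants below (commonly quoted values for the human cochlea) are brought in here as assumptions for illustration only.

```python
def greenwood_frequency(x, A=165.4, a=2.1, k=0.88):
    """Approximate best frequency (Hz) at a position along the basilar membrane.

    x is the fractional distance from the apex (0.0) to the base at the
    oval window (1.0). A, a, and k are commonly quoted human constants for
    Greenwood's function; treat them as assumptions, not chapter data.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A * (10 ** (a * x) - k)


if __name__ == "__main__":
    # Low frequencies map to the apex, high frequencies to the base.
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"position {x:4.2f} -> about {greenwood_frequency(x):8.0f} Hz")
```

Under these assumed constants the map runs from roughly 20 Hz at the apex to roughly 20 kHz at the base, and equal steps along the membrane correspond to roughly equal frequency ratios rather than equal differences in hertz.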

The hair cells act as mechanoreceptors. When deflected by the membrane, mechanically gated ion channels open in the hair cells, allowing positively charged ions of potassium and calcium to flow into the cell. If the cell is sufficiently depolarized, it will release transmitter into a synapse between the base of the hair cell and an afferent nerve fiber. In this way, a mechanical event, the deflections of the hair cells, is converted into a neural signal (Figure 5.4).

FIGURE 5.4 Transduction of sound waves along the cochlea.

The cochlea is unrolled to show how the sensitivity to different frequencies varies with distance from the stapes.

Natural sounds like music or speech are made up of complex frequencies. Thus, a natural sound will activate a broad range of hair cells. Although we can hear sounds up to 20,000 hertz (Hz), our auditory system is most sensitive to sounds in the range of 1000 to 4000 Hz, a range that carries much of the information critical for human communication, such as speech or the cries of a hungry infant. Other species have sensitivity to very different frequencies. Elephants can hear very low-frequency sounds, allowing them to communicate over long distances (since such sounds are only slowly distorted by distance); mice communicate at frequencies well outside our hearing system. These species-specific differences likely reflect evolutionary pressures that arose from the capabilities of different animals to produce sounds. Our speech apparatus has evolved to produce changes in sound frequencies in the range of our highest sensitivity.

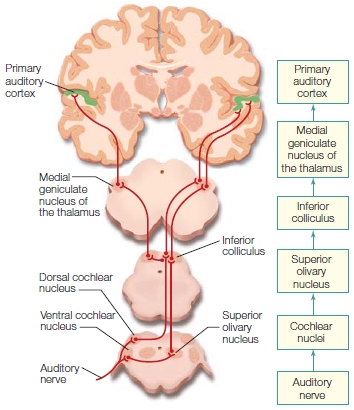

The auditory system contains several synapses between the hair cells and the cortex. The cochlear nerve, also called the auditory nerve, projects to the cochlear nucleus in the medulla. Axons from this nucleus travel up to the pons and split to innervate the left and right olivary nuclei, providing the first point within the auditory pathways where information is shared from both ears. Axons from the cochlear and olivary nuclei project to the inferior colliculus, higher up in the midbrain. At this stage, the auditory signals can access motor structures; for example, motor neurons in the colliculus can orient the head toward a sound. Some of the axons coursing through the pons branch off to the nucleus of the lateral lemniscus in the midbrain, where another important characteristic of sound, timing, is processed. From the midbrain, auditory information ascends to the MGN in the thalamus, which in turn projects to the primary auditory cortex (A1) in the superior part of the temporal lobe.

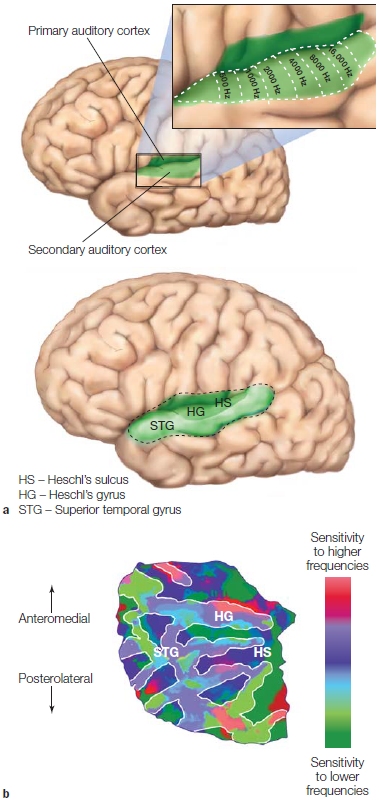

FIGURE 5.5 The auditory cortex and tonotopic maps.

(a) The primary auditory cortex is located in the superior portion of the temporal lobe (left and right hemispheres), with the majority of the region buried in the lateral sulcus on the transverse temporal gyrus and extending onto the superior temporal gyrus. (b) A flat map representation of primary and secondary auditory regions. Multiple tonotopic maps are evident, with the clearest organization evident in primary auditory cortex.

Neurons throughout the auditory pathway continue to have frequency tuning and maintain their tonotopic arrangement as they travel up to the cortex. As described in Chapter 2 (p. 56), the primary auditory cortex contains a tonotopic map, an orderly correspondence between the location of the neurons and their specific frequency tuning. Cells in the rostral part of A1 tend to be responsive to low-frequency sounds; cells in the caudal part of A1 are more responsive to high-frequency sounds. The tonotopic organization is evident in studies using single-cell recording methods, and thanks to the resolution provided by fMRI, it can also be seen in humans (Figure 5.5). Tonotopic maps are also found in secondary auditory areas of the cortex.

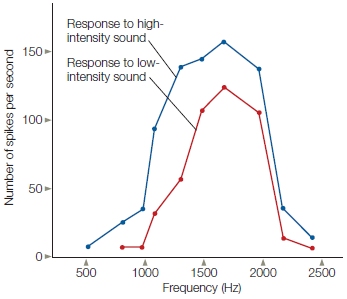

As Figure 5.6 shows, the tuning curves for auditory cells can be quite broad. The finding that individual cells do not give precise frequency information but provide only coarse coding may seem puzzling, because animals can differentiate between very small differences in sound frequencies. Interestingly, the tuning of individual neurons becomes sharper as we move through the auditory system. A neuron in the cat’s cochlear nucleus that responds maximally to a pure tone of 5000 Hz may also respond to tones ranging from 2000 to 10,000 Hz. A comparable neuron in the cat auditory cortex responds to a much narrower range of frequencies. The same principle is observed in humans. In one study, electrodes were placed in the auditory cortex of epileptic patients to monitor for seizure activity (Bitterman et al., 2008). Individual cells were exquisitely tuned, showing a strong response to, say, a tone at 1010 Hz but no response, or even a slight inhibition to tones just 20 Hz different. This fine resolution is essential for making the precise discriminations for perceiving sounds, including speech. Indeed, it appears that human auditory tuning is sharper than that of all other species except for the bat.
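The contrast between broad and sharp tuning can be illustrated with a toy tuning curve in Python. The Gaussian shape on a log-frequency axis and both width values are assumptions picked to echo the examples in the text; they are not fitted to the recordings cited above, and the sketch does not model inhibition.

```python
import math


def tuning_response(tone_hz, best_hz, width_octaves):
    """Normalized response (0 to 1) of a model cell to a pure tone.

    Assumes a Gaussian tuning curve on a log2 frequency axis.
    width_octaves is the curve's standard deviation in octaves.
    """
    offset = math.log2(tone_hz / best_hz)
    return math.exp(-0.5 * (offset / width_octaves) ** 2)


BROAD_WIDTH = 0.5   # hypothetical early-pathway cell (coarse coding)
SHARP_WIDTH = 0.01  # hypothetical cortical cell (fine tuning)

if __name__ == "__main__":
    # Two tones only 20 Hz apart, presented to cells tuned to 1010 Hz.
    for label, width in (("broad", BROAD_WIDTH), ("sharp", SHARP_WIDTH)):
        at_best = tuning_response(1010, 1010, width)
        nearby = tuning_response(1030, 1010, width)
        print(f"{label}: 1010 Hz -> {at_best:.3f}, 1030 Hz -> {nearby:.3f}")
```

With these assumed widths, the broadly tuned cell responds almost identically to the two tones, whereas the sharply tuned cell responds strongly to one and barely at all to the other.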

While A1 is, at a gross level, tonotopically organized, more recent studies using high-resolution imaging methods in the mouse suggest that, at a finer level of resolution, organization may be much messier. At this level, adjacent cells frequently show very different tuning. Thus, there is a large-scale tonotopic organization but with considerable heterogeneity at the local level (Bandyopadhyay et al., 2010; Rothschild et al., 2010). This mixture may reflect the fact that natural sounds contain information across a broad range of frequencies and that the local organization arises from experience with these sounds.

Computational Goals in Audition

FIGURE 5.6 Frequency-dependent receptive fields for a cell in the auditory nerve of the squirrel monkey.

This cell is maximally sensitive to a sound of 1600 Hz, and the firing rate falls off rapidly for either lower- or higher-frequency sounds. The cell is also sensitive to intensity differences, with stronger responses to louder sounds. Other cells in the auditory nerve would show tuning for different frequencies.

Frequency data are essential for deciphering a sound. Sound-producing objects have unique resonant properties that provide a characteristic signature. The same note played on a clarinet and a trumpet will sound different, because the resonant properties of each instrument will produce considerable differences in the note’s harmonic structure. Yet, we are able to identify a “G” from different instruments as the same note. This is because the notes share the same base frequency. In a similar way, we produce our range of speech sounds by varying the resonant properties of the vocal tract. Movements of our lips, tongue, and jaw change the frequency content of the acoustic stream produced during speech. Frequency variation is essential for a listener to identify words or music.

Auditory perception does not merely identify the content of an acoustic stimulus. A second important function of audition is to localize sounds in space. Consider the bat, which hunts by echolocation. High-pitched sounds are emitted by the bat and bounce back, as echoes from the environment. From these echoes, the bat’s brain creates an auditory image of the environment and the objects within it—preferably a tasty moth. But knowing that a moth (“what”) is present will not lead to a successful hunt. The bat also has to determine the moth’s precise location (“where”). Some very elegant work in the neuroscience of audition has focused on the “where” problem. In solving the “where” problem, the auditory system relies on integrating information from the two ears.

In developing animal models to study auditory perception, neuroscientists select animals with well-developed hearing. A favorite species for this work has been the barn owl, a nocturnal creature. Barn owls have excellent scotopia (night vision), which guides them to their prey. Barn owls, however, also must use an exquisitely tuned sense of hearing to locate food, because visual information can be unreliable at night. The low levels of illumination provided by the moon and stars fluctuate with the lunar cycle and clouds. Sound, such as the patter of a mouse scurrying across a field, offers a more reliable stimulus. Indeed, barn owls have little trouble finding prey in a completely dark laboratory.

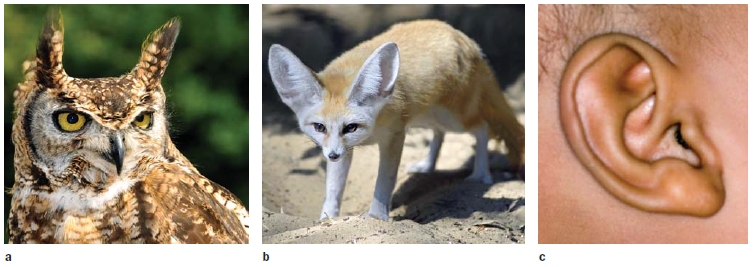

Barn owls rely on two cues to localize sounds: the difference in when a sound reaches each of the two ears, the interaural time, and the difference in the sound’s intensity at the two ears. Both cues exist because the sound reaching the two ears is not identical. Unless the sound source is directly parallel to the head’s orientation, the sound will reach one ear before the other. Moreover, because the intensity of a sound wave becomes attenuated over time, the magnitude of the signal at the two ears will not be identical. The time and intensity differences are minuscule. For example, if the stimulus is located at a 45° angle to the line of sight, the interaural time difference will be approximately 1/10,000 of a second. The intensity differences resulting from sound attenuation are even smaller, indistinguishable from variations due to “noise.” However, these small differences are amplified by a unique asymmetry of owl anatomy: The left ear is higher than eye level and points downward, and the right ear is lower than eye level and points upward. Because of this asymmetry, sounds coming from below are louder in the left ear than the right. Humans do not have this asymmetry, but the complex structure of the human outer ear, or pinna, amplifies the intensity difference between a sound heard at the two ears (Figure 5.7).
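The size of the interaural time difference can be checked with a back-of-the-envelope calculation. The Python sketch below assumes a simple path-length model (the extra distance to the far ear is the ear separation times the sine of the angle), an ear separation of about 5 cm for the owl, and a speed of sound of 343 m/s; none of these figures comes from the text, and the model ignores how sound bends around the head.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: air at roughly 20 degrees C


def interaural_time_difference(azimuth_deg, ear_separation_m=0.05):
    """Estimated arrival-time difference (seconds) between the two ears.

    azimuth_deg is the angle of the source from straight ahead.
    ear_separation_m defaults to a hypothetical 5 cm for a barn owl.
    Uses the simplified path difference d * sin(angle).
    """
    extra_path_m = ear_separation_m * math.sin(math.radians(azimuth_deg))
    return extra_path_m / SPEED_OF_SOUND_M_PER_S


if __name__ == "__main__":
    for angle in (0, 15, 45, 90):
        itd_us = interaural_time_difference(angle) * 1e6
        print(f"{angle:>2} degrees -> about {itd_us:5.1f} microseconds")
```

Under these assumptions a source at 45° gives a difference of about 100 microseconds, in line with the figure of approximately 1/10,000 of a second quoted above.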

Interaural time and intensity differences provide independent cues for sound localization. To show this, researchers use little owl headphones. Stimuli are presented over headphones, and the owl is trained to turn its head in the perceived direction of the sound. The headphones allow the experimenter to manipulate each cue separately. When amplitude is held constant, asynchronies in presentation times prompt the owl to shift its head in the horizontal plane. Variations in amplitude produce vertical head movements. Combining the two cues by fusing the inputs from the two ears provides the owl with a complete representation of three-dimensional space. If one ear is plugged, the owl’s response indicates that a sound has been detected, but it cannot localize the source.

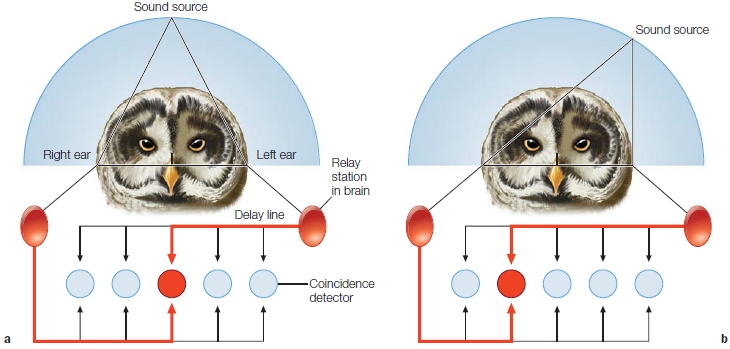

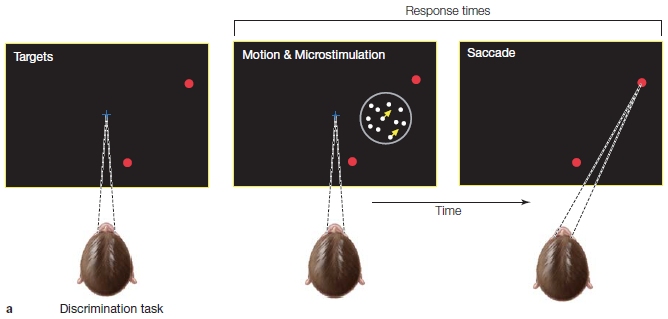

Mark Konishi of the California Institute of Technology has provided a well-specified neural model of how neurons in the brainstem of the owl code interaural time differences by operating as coincidence detectors (M. Konishi, 1993). To be activated, these neurons must simultaneously receive input from each ear. In computer science terms, these neurons act as AND operators. An input from either ear alone or in succession is not sufficient; the neurons will fire only if an input arrives at the same time from both ears.

To see how this model works, look at Figure 5.8. In Figure 5.8a, the sound source is directly in front of the animal. In this situation the coincidence detector in the middle is activated, because the stimulus arrives at each ear at the same time. In Figure 5.8b, the sound source is to the animal’s left. This gives the axon from the left ear a slight head start. Simultaneous activation now occurs in a coincidence detector to the left of center. This simple arrangement provides the owl with a complete representation of the horizontal position of the sound source.
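The delay-line idea can be illustrated with a toy simulation. This is a minimal sketch and not Konishi's actual model: the number of detectors, the per-segment conduction delay, and the coincidence window are all assumed values, and the function name is hypothetical. Detectors are identified only by index; which index corresponds to which anatomical side depends on how the delay lines are laid out, which is an assumption of the sketch rather than something taken from Figure 5.8.

```python
from typing import Optional


def firing_detector(itd_us: float,
                    n_detectors: int = 9,
                    segment_delay_us: float = 10.0,
                    window_us: float = 5.0) -> Optional[int]:
    """Index of the detector whose two inputs arrive (almost) together.

    itd_us > 0 means the left-ear spike leads by itd_us microseconds.
    ASSUMPTION: the left-ear axon enters at detector 0 and the right-ear
    axon enters at the last detector; each segment adds a fixed delay.
    """
    left_onset_us = 0.0
    right_onset_us = itd_us  # the right-ear spike starts later if itd_us > 0
    best_index, best_mismatch = None, None
    for i in range(n_detectors):
        left_arrival = left_onset_us + i * segment_delay_us
        right_arrival = right_onset_us + (n_detectors - 1 - i) * segment_delay_us
        mismatch = abs(left_arrival - right_arrival)
        if best_mismatch is None or mismatch < best_mismatch:
            best_index, best_mismatch = i, mismatch
    # AND-like rule: fire only if both inputs fall inside the coincidence window.
    return best_index if best_mismatch <= window_us else None


print(firing_detector(0.0))     # both ears at once: the central detector
print(firing_detector(40.0))    # left ear leads: coincidence shifts off center
print(firing_detector(1000.0))  # delay too large for any detector: None
```

With simultaneous input the central detector (index 4 of 9) is the one that fires; giving one ear a head start moves the point of coincidence along the array, so the identity of the active detector encodes the horizontal position of the source.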

FIGURE 5.7 Variation in pinnae.

The shape of the pinnae helps filter sounds and can amplify differences in the stimulus at the two ears. Considerable variation is seen across species. (a) Great Horned Owl, (b) Fennec Fox, and (c) human.

FIGURE 5.8 Slight asymmetries in the arrival times at the two ears can be used to locate the lateral position of a stimulus.

(a) When the sound source is directly in front of the owl, the stimulus will reach the two ears at the same time. As activation is transmitted across the delay lines, the coincidence detector representing the central location will be activated simultaneously from both ears. (b) When the sound source is located to the left, the sound reaches the left ear first. Now a coincidence detector offset to the opposite side receives simultaneous activation from the two ears.

A different coding scheme represents interaural intensities. For this stimulus dimension, the neural code is based on the input’s firing rate. The stronger the input signal, the more strongly the cell fires. Neurons sum the combined intensity signals from both ears to pinpoint the vertical position of the source.

In Konishi’s model, the problem of sound localization by the barn owl is solved at the level of the brainstem. To date, this theory has not explained higher stages of processing, such as in the auditory cortex. Perhaps cortical processing is essential for converting location information into action. The owl does not want to attack every sound it hears; it must decide if the sound is generated by potential prey. Another way of thinking about this is to reconsider the issues surrounding the computational goals of audition. Konishi’s brainstem system provides the owl with a way to solve “where” problems but has not addressed the “what” question. The owl needs a more detailed analysis of the sound frequencies to determine whether a stimulus results from the movement of a mouse or a deer.

TAKE-HOME MESSAGES

Olfaction

We have the greatest awareness of our senses of sight, sound, taste, and touch. Yet the more primitive sense of smell is, in many ways, equally essential for our survival. Although the baleen whale probably does not smell the tons of plankton it ingests, the sense of smell is essential for terrestrial mammals, helping them to recognize foods that are nutritious and safe. Olfaction may have evolved primarily as a mechanism for evaluating whether a potential food is edible, but it serves other important roles as well—for instance, in avoiding hazards, such as fire or airborne toxins. Olfaction also plays an important role in social communication. Pheromones are excreted or secreted chemicals perceived by the olfactory system that trigger a social response in another individual of the same species. Pheromones are well documented in some insects, reptiles, and mammals. It also appears that they play an important role in human social interactions. Odors generated by women appear to vary across the menstrual cycle, and we are all familiar with the strong smells generated by people coming back from a long run. The physiological responses to such smells may be triggered by pheromones. To date, however, no compounds or receptors have been identified in humans. Before discussing the functions of olfaction, let’s review the neural pathways of the brain that respond to odors.

Neural Pathways of Olfaction

Smell is the sensory experience that results from the transduction of neural signals triggered by odor molecules, or odorants. These molecules enter the nasal cavity, either during the course of normal breathing or when we sniff. They will also flow into the nose passively, because air pressure in the nasal cavity is typically lower than in the outside environment, creating a pressure gradient. Odorants can also enter the system through the mouth, traveling back up into the nasal cavity (e.g., during consumption of food).

How olfactory receptors actually “read” odor molecules is unknown. One popular hypothesis is that odorants attach to odor receptors, which are embedded in the mucous membrane of the roof of the nasal cavity, called the olfactory epithelium. There are over 1,000 types of receptors, and most of these respond to only a limited number of odorants, though a single odorant can bind to more than one type of receptor. Another hypothesis is that the molecular vibrations of groups of odorant molecules contribute to odor recognition (Franco et al., 2011; Turin, 1996). This model predicts that odorants with similar vibrational spectra should elicit similar olfactory responses, and it explains why similarly shaped molecules, but with dissimilar vibrations, have very different fragrances. For example, alcohols and thiols have almost exactly the same structure, but alcohols have a fragrance of, well, alcohol, and thiols smell like rotten eggs.

FIGURE 5.9 Olfaction.

The olfactory receptors lie within the nasal cavity, where they interact directly with odorants. The receptors then send information to the glomeruli in the olfactory bulb, the axons of which form the olfactory nerve that relays information to the primary olfactory cortex. The orbitofrontal cortex is a secondary olfactory processing area.

Figure 5.9 details the olfactory pathway. The olfactory receptor is called a bipolar neuron because appendages extend from opposite sides of its cell body. When an odorant triggers the neuron, whether by shape or vibration, a signal is sent to the neurons in the olfactory bulbs, called the glomeruli. Tremendous convergence and divergence take place in the olfactory bulb. One bipolar neuron may activate over 8,000 glomeruli, and each glomerulus, in turn, receives input from up to 750 receptors. The axons from the glomeruli then exit laterally from the olfactory bulb, forming the olfactory nerve. Their destination is the primary olfactory cortex, or pyriform cortex, located at the ventral junction of the frontal and temporal cortices. The olfactory pathway to the brain is unique in two ways. First, most of the axons of the olfactory nerve project to the ipsilateral cortex. Only a small number cross over to innervate the contralateral hemisphere. Second, unlike the other sensory nerves, the olfactory nerve arrives at the primary olfactory cortex without going through the thalamus. The primary olfactory cortex projects to a secondary olfactory area within the orbitofrontal cortex, as well as making connections with other brain regions including the thalamus, hypothalamus, hippocampus, and amygdala. With these wide-ranging connections, it appears that odor cues influence autonomic behavior, attention, memory, and emotions—something that we all know from experience.

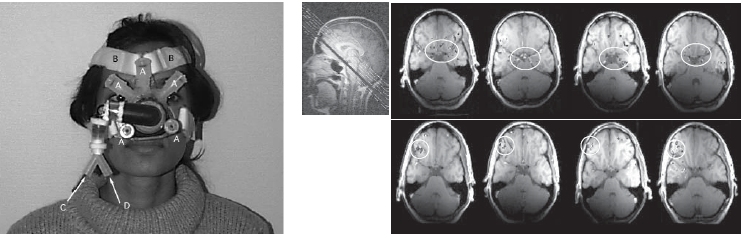

FIGURE 5.10 Sniffing and smelling.

(a) This special device was constructed to deliver controlled odors during fMRI scanning. (b, top) Regions activated during sniffing. The circled region includes the primary olfactory cortex and a posteromedial region of the orbitofrontal cortex. (b, bottom) Regions more active during sniffing when an odor was present compared to when the odor was absent.

The Role of Sniffing in Olfactory Perception

Olfaction has gotten short shrift from cognitive neuroscientists. This neglect reflects, in part, our failure to appreciate the importance of olfaction in people’s lives: We have handed the sniffing crown over to bloodhounds and their ilk. In addition, some thorny technical challenges must be overcome to apply tools such as fMRI to study the human olfactory system. First is the problem of delivering odors to a participant in a controlled manner (Figure 5.10a). Nonmagnetic systems must be constructed to allow the odorized air to be directed at the participant’s nostrils while he is in the fMRI magnet. Second, it is hard to determine when an odor is no longer present. The chemicals that carry the odor can linger in the air for a long time. Third, although some odors overwhelm our senses, most are quite subtle, requiring exploration through the act of sniffing to detect and identify. Whereas it is almost impossible to ignore a sound, we can exert considerable control over the intensity of our olfactory experience.

Noam Sobel of the Weizmann Institute in Israel developed methods to overcome these challenges, conducting neuroimaging studies of olfaction that have revealed an intimate relationship between smelling and sniffing (Mainland & Sobel, 2006; Sobel et al., 1998). Participants were scanned while being exposed to either nonodorized, clean air or one of two chemicals: vanillin or decanoic acid. The former has a fragrance like vanilla, the latter, like crayons. The odor-absent and odor-present conditions alternated every 40 seconds. Throughout the scanning session, the instruction, “Sniff and respond, is there an odor?” was presented every 8 seconds. In this manner, the researchers sought to identify areas in which brain activity was correlated with sniffing versus smelling (Figure 5.10b).

Surprisingly, smelling failed to produce consistent activation in the primary olfactory cortex. Instead, the presence of the odor produced a consistent increase in the fMRI response in lateral parts of the orbitofrontal cortex, a region typically thought to be a secondary olfactory area. Activity in the primary olfactory cortex was closely linked to the rate of sniffing. Each time the person took a sniff, the fMRI signal increased regardless of whether the odor was present. These results seemed quite puzzling and suggested that the primary olfactory cortex might be more a part of the motor system for olfaction.

Upon further study, however, the reason for the lack of activation in the primary olfactory cortex became clear. Neurophysiological studies of the primary olfactory cortex in the rat had shown that these neurons habituate (adapt) quickly. It was suggested that perhaps the primary olfactory cortex lacks a smell-related response because the hemodynamic response measured by fMRI exhibits a similar habituation. To test this idea, Sobel’s group modeled the fMRI signal by assuming a sharp increase followed by an extended drop after the presentation of an odor, an elegant example of how single-cell results can be used to interpret imaging data. When analyzed in this manner, the hemodynamic response in the primary olfactory cortex was found to be related to smell as well as to sniffing. These results suggest that the primary olfactory cortex might be essential for detecting a change in the external odor and that the secondary olfactory cortex plays a critical role in identifying the odor itself. Each sniff represents an active sampling of the olfactory environment, and the primary olfactory cortex plays a critical role in determining if a new odor is present.
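The difference between a sustained and a habituating predictor can be made concrete with a toy comparison. This is only a sketch of the general idea, not the analysis Sobel's group ran: the exponential form and the 8-second decay constant are assumptions, the function names are hypothetical, and no hemodynamic response function is applied. The 40-second odor block and the 8-second sniff interval are taken from the study description above.

```python
import math


def sustained_response(t_s: float, block_s: float = 40.0) -> float:
    """Boxcar predictor: constant response for the whole odor block."""
    return 1.0 if 0.0 <= t_s < block_s else 0.0


def habituating_response(t_s: float, block_s: float = 40.0,
                         decay_s: float = 8.0) -> float:
    """Sharp rise at odor onset followed by an extended decline.

    ASSUMPTION: exponential decay with an illustrative time constant.
    """
    if not 0.0 <= t_s < block_s:
        return 0.0
    return math.exp(-t_s / decay_s)


# One sample per sniff cue (every 8 s) within a 40 s odor-present block.
for t in range(0, 40, 8):
    print(t, sustained_response(t), round(habituating_response(t), 3))
```

A region whose response habituates would be tracked poorly by the boxcar, which predicts equal activity throughout the block, but well by the decaying predictor, which concentrates the expected signal near odor onset.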

One Nose, Two Odors

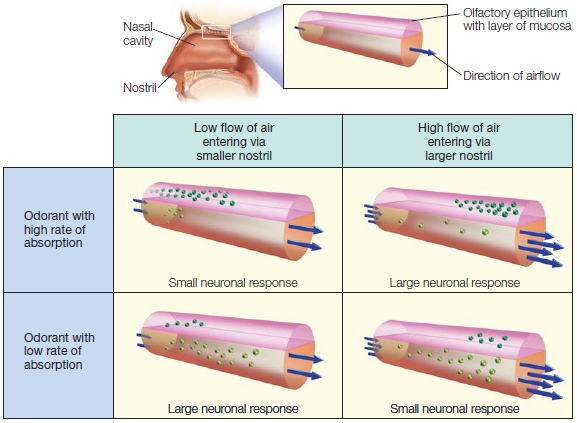

The importance of sniffing for olfactory perception is underscored by the fact that our ability to smell is continually being modulated by changes in the size of the nasal passages. In fact, the two nostrils appear to switch back and forth—one is larger than the other for a number of hours, and then the reverse. These changes have a profound effect on how smell is processed (Figure 5.11). Why might the nose behave this way?

The olfactory percept depends not only on how intense the odor is but also on how efficiently we sample it (Mozell et al., 1991). The presence of two nostrils of slightly different sizes provides the brain with slightly different images of the olfactory environment. To test the importance of this asymmetry, Sobel monitored which nostril was allowing high airflow and which nostril was allowing low airflow, while presenting odors with both high and low absorption rates to each nostril. As predicted (see Figure 5.11), when sniffed through the high-airflow nostril, the odorant with a high absorption rate was judged to be more intense compared to when the same odorant was presented to the low-airflow nostril. The opposite was true for the odorant with a low absorption rate; here, the odor with a low rate of absorption was judged to be more intense when sniffed through the low-airflow nostril. Some of the participants were monitored when the flow rate of their nostrils reversed. The perception of the odorant presented to the same nostril reversed with the change in airflow.

As we saw in Chapter 4, asymmetrical representations are the rule in human cognition, perhaps providing a more efficient manner of processing complex information. With the ancient sense of olfaction, this asymmetry appears to be introduced at the peripheral level by modulation of the rate of airflow through the nostrils.

FIGURE 5.11 Human nostrils have asymmetric flow rates.

Although the same odorants enter each nostril, the response across the epithelium will be different for the two nostrils because of variation in flow rates. One nostril always has a greater input airflow than the other, and the nostrils switch between the two rates every few hours. This system of having one low-flow and one high-flow nostril has evolved to give the nose optimal accuracy in perceiving odorants that have both high and low rates of absorption.

TAKE-HOME MESSAGES

Gustation

The sense of taste depends greatly on the sense of smell. Indeed, the two senses are often grouped together because they both begin with a chemical stimulus. Because these two senses interpret the environment by discriminating between different chemicals, they are referred to as the chemical senses.

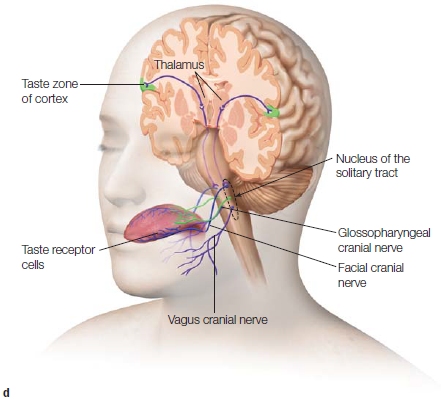

Neural Pathways of Gustation

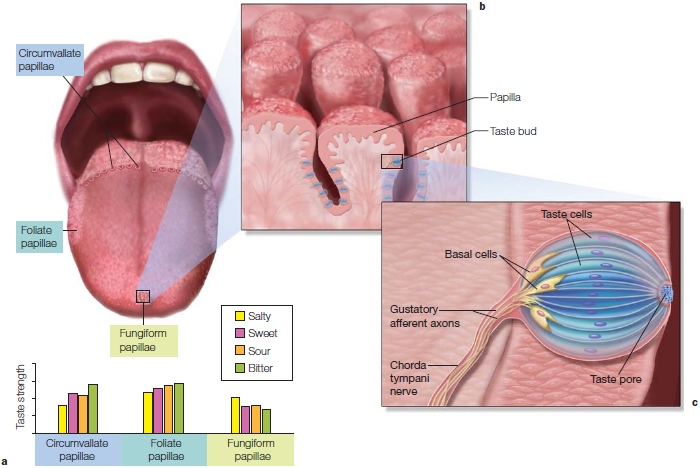

Gustation begins with the tongue. Strewn across the surface of the tongue in specific locations are different types of papillae, the little bumps you can feel on the surface. Papillae serve multiple functions. Some are concerned with gustation, others with sensation, and some with the secretion of lingual lipase, an enzyme that helps break down fats. The papillae in the anterior region and along the sides of the tongue contain several taste buds; those types found predominantly in the center of the tongue do not have taste buds. Taste pores are the conduits that lead from the surface of the tongue to the taste buds. Each taste bud contains many taste cells (Figure 5.12). Taste buds are also found in the cheeks and parts of the roof of the mouth. There are five basic tastes: salty, sour, bitter, sweet, and umami. Umami is the savory taste you experience when you eat steak or other protein-rich substances.

Sensory transduction in the gustatory system begins when a food molecule, or tastant, stimulates a receptor in a taste cell and causes the receptor to depolarize (Figure 5.12). Each of the basic taste sensations has a different form of chemical signal transduction. For example, the experience of a salty taste begins when the salt molecule (NaCl) breaks down into Na+ and Cl−, and the Na+ ion is absorbed by a taste receptor, leading the cell to depolarize. Other taste transduction pathways, such as those for sweet carbohydrate tastants, are more complex, involving receptor binding that does not lead directly to depolarization. Rather, the presence of certain tastants will initiate a cascade of chemical “messengers” that eventually leads to cellular depolarization. Synapsing with the taste cells in the taste buds are bipolar neurons. Their axons form the chorda tympani nerve.

The chorda tympani nerve joins other fibers to form the facial nerve (the 7th cranial nerve). This nerve projects to the gustatory nucleus, located in the rostral region of the nucleus of the solitary tract in the brainstem. Meanwhile, the caudal region of the solitary nucleus receives sensory neurons from the gastrointestinal tract. The integration of information at this level can provide a rapid reaction. For example, you might gag if you taste something that is “off,” a strong signal that the food should be avoided.

The next synapse in the gustatory system is on the ventral posterior medial nucleus (VPM) of the thalamus. Axons from the VPM synapse in the primary gustatory cortex. This is a region in the insula and operculum, structures at the intersection of the temporal and frontal lobes (Figure 5.12). Primary gustatory cortex is connected to secondary processing areas of the orbitofrontal cortex, providing an anatomical basis for the integration of tastes and smells. While there are only five types of taste cells, we are capable of experiencing a complex range of tastes. This ability must result from the integration of information conveyed from the taste cells and processed in areas like the orbitofrontal cortex.

The tongue does more than just taste. Some papillae contain nociceptive receptors, a type of pain receptor. These are activated by irritants such as capsaicin (contained in chili peppers), carbon dioxide (carbonated drinks), and acetic acid (vinegar). The output from these receptors follows a different path, forming the trigeminal nerve (cranial nerve V). This nerve not only carries pain information but also signals position and temperature information. You are well aware of the reflex response to activation by these irritants if you have ever eaten a hot chili: salivation, tearing, vasodilation (the red face), nasal secretion, bronchospasm (coughing), and decreased respiration. All these are meant to dilute that irritant and get it out of your system as quickly as possible.

FIGURE 5.12 The gustatory transduction pathway.

(a) Three different types of taste papillae span the surface of the tongue. Each cell is sensitive to one of five basic tastes: salty, sweet, sour, bitter, and umami. The bar graph shows how sensitivity for four taste sensations varies between the three papillae. (b) The papillae contain the taste buds. (c) Taste pores on the surface of the tongue open into the taste bud, which contains taste cells. (d) The chorda tympani nerve, formed by the axons from the taste cells, joins with the facial nerve to synapse in the nucleus of the solitary tract in the brain stem, as do the sensory nerves from the GI tract via the vagus nerve. The taste pathway projects to the ventral posterior medial nucleus of the thalamus and information is then relayed to the gustatory cortex in the insula.

Gustatory Processing

Taste perception varies from person to person because the number and types of papillae and taste buds vary considerably between individuals. In humans, the number of taste buds varies from 120 to 668 per cm². Interestingly, women generally have more taste buds than men (Bartoshuk et al., 1994). People with large numbers of taste buds are known as supertasters. They taste things more intensely, especially bitterness, and feel more pain from tongue irritants. You can spot the two ends of the tasting spectrum at the table. One is pouring on the salsa or drinking grapefruit juice while the other is cringing.

The basic tastes give the brain information about the types of food that have been consumed. The sensation of umami tells the body that protein-rich food is being ingested, sweet tastes indicate carbohydrate intake, and salty tastes give us information that is important for the balance between minerals or electrolytes and water. The tastes of bitter and sour likely developed as warning signals. Many toxic plants taste bitter, and a strong bitter taste can induce vomiting. Other evidence suggesting that bitterness is a warning signal is the fact that we can detect bitter substances 1,000 times better than, say, salty substances. Therefore, a significantly smaller amount of bitter tastant will yield a taste response, allowing toxic bitter substances to be avoided quickly. No wonder supertasters are especially sensitive to bitter tastes. Similarly, but to a lesser extent, sour indicates spoiled food (e.g., “sour milk”) or unripe fruits.

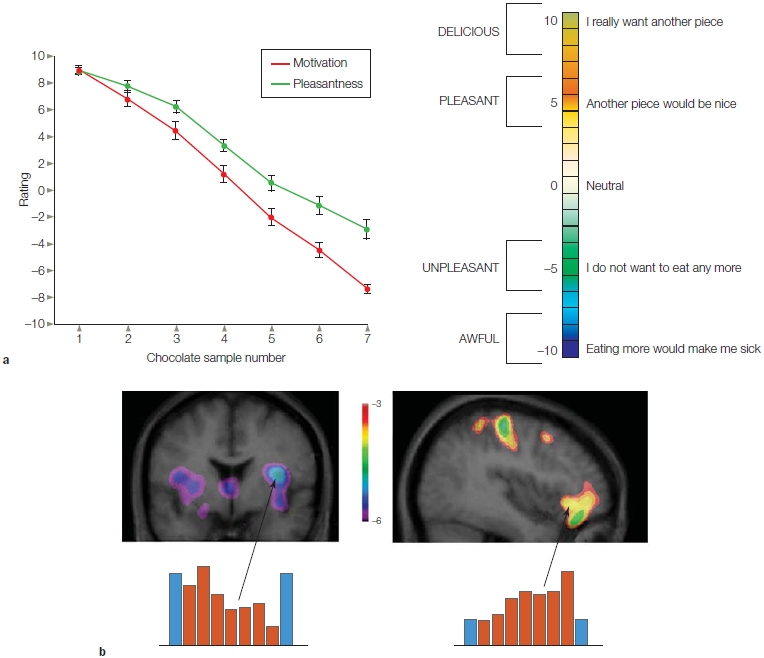

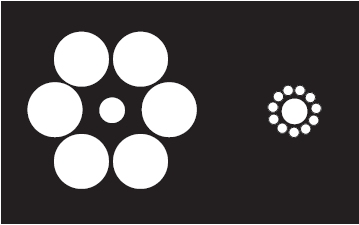

FIGURE 5.13 The neural correlates of satiation.

(a) Participants use a 10-point scale to rate the motivation and pleasantness of chocolate when offered a morsel seven times during the PET session. Desire and enjoyment declined over time. (b) Activation as measured during PET scanning during repeated presentations of chocolate (red). Water was presented during the first and last scans (blue). Across presentations, activity dropped in primary gustatory cortex (left) and increased in orbitofrontal cortex (right). The former could indicate an attenuated response to the chocolate sensation as the person habituates to the taste. The latter might correspond to a change in the participants’ desire (or aversion) to chocolate.

Humans can readily learn to discriminate similar tastes. Richard Frackowiak and his colleagues at University College London (Castriota-Scanderbeg et al., 2005) studied wine connoisseurs (sommeliers), asking how their brain response compared to that of nonexperts when tasting wines that varied in quite subtle ways. In primary gustatory areas, the two groups showed a very similar response. The sommeliers, however, exhibited increased activation in the insular cortex and parts of the orbitofrontal cortex in the left hemisphere, as well as greater activity bilaterally in dorsolateral prefrontal cortex. This region is thought to be important for high-level cognitive processes such as decision making and response selection (see Chapter 12).

The orbitofrontal cortex also appears to play an important role in processing the pleasantness and reward value of eating food. Dana Small and her colleagues (2001) at Northwestern University used positron emission tomography (PET) to scan the brains of people as they ate chocolate (Figure 5.13). During testing, the participants rated the pleasantness of the chocolate and their desire to eat more chocolate. Initially, the chocolate was rated as very pleasant and the participants expressed a desire to eat more. But as the participants became satiated, their desire for more chocolate dropped. Moreover, although the chocolate was still perceived as pleasant, the intensity of their pleasure ratings decreased.

By comparing the neural activation in the beginning trials with the trials at the end of the study, the researchers were able to determine which areas of the brain participated in processing the reward value of the chocolate (the pleasantness) and the motivation to eat (the desire to have more chocolate). The posteromedial portion of the orbitofrontal cortex was activated when the chocolate was highly rewarding and the motivation to eat more was strong. In contrast, the posterolateral portion of the orbitofrontal cortex was activated during the satiated state, when the chocolate was unrewarding and the motivation to eat more was low. Thus, the orbitofrontal cortex appears to be a highly specialized taste-processing region containing distinct areas able to process opposite ends of the reward value spectrum associated with eating.

TAKE-HOME MESSAGES

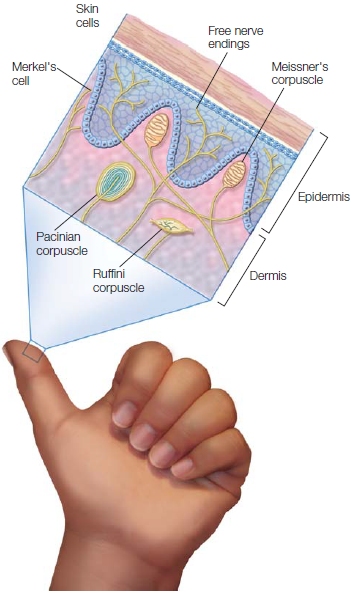

Somatosensation

Somatosensory perception is the perception of all mechanical stimuli that affect the body. This includes interpretation of signals that indicate the position of our limbs and the position of our head, as well as our sense of temperature, pressure, and pain. Perhaps to a greater degree than with our other sensory systems, the somatosensory system includes an intricate array of specialized receptors and vast projections to many regions of the central nervous system.

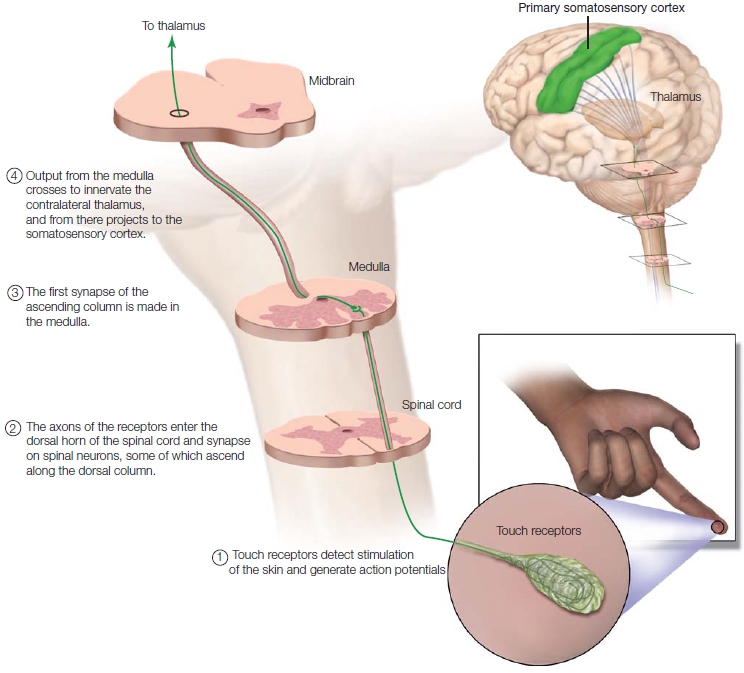

Neural Pathways of Somatosensation

FIGURE 5.14 Somatosensory receptors underneath the skin.

Merkel’s cells detect regular touch; Meissner’s corpuscles, light touch; Pacinian corpuscles, deep pressure; Ruffini corpuscles, temperature. Nociceptors (also known as free nerve endings) detect pain.

Somatosensory receptors lie under the skin (Figure 5.14) and at the musculoskeletal junctions. Touch is signaled by specialized receptors in the skin, including Meissner’s corpuscles, Merkel’s cells, Pacinian corpuscles, and Ruffini corpuscles. These receptors differ in how quickly they adapt and in their sensitivity to various types of touch, such as deep pressure or vibration. Pain is signaled by nociceptors, the least differentiated of the skin’s sensory receptors. Nociceptors come in three flavors: thermal receptors that respond to heat or cold, mechanical receptors that respond to heavy mechanical stimulation, and polymodal receptors that respond to a wide range of noxious stimuli including heat, mechanical insults, and chemicals. The experience of pain is often the result of chemicals, such as histamine, that the body releases in response to injury. Nociceptors are located on the skin, below the skin, and in muscles and joints. Afferent pain neurons may be either myelinated or unmyelinated. The myelinated fibers quickly conduct information about pain. Activation of these cells usually produces immediate action. For example, when you touch a hot stove, the myelinated nociceptors can trigger a response that will cause you to quickly lift your hand, possibly even before you are aware of the temperature. The unmyelinated fibers are responsible for the duller, longer-lasting pain that follows the initial burn and reminds you to care for the damaged skin.

Specialized nerve cells provide information about the body’s position, or what is called proprioception (proprius: Latin for “own,” –ception: “receptor”; thus, a receptor for the self). Proprioception allows the sensory and motor systems to represent information about the state of the muscles and limbs. Proprioceptive cues, for example, signal when a muscle is stretched and can be used to monitor whether that stretch is due to an external force or to our own actions (see Chapter 8).

Somatosensory receptors have their cell bodies in the dorsal-root ganglia (or equivalent cranial nerve ganglia). Their axons enter the spinal cord via the dorsal root (Figure 5.15). Some synapse on motor neurons in the spinal cord to form reflex arcs. Other axons synapse on neurons that send axons up the dorsal column of the spinal cord to the medulla. From here, information crosses over to the ventral posterior nucleus of the thalamus and then on to the cerebral cortex. As in vision (which is covered later in the chapter) and audition, the primary peripheral projections to the brain are cross-wired; that is, information from one side of the body is represented primarily in the opposite, or contralateral, hemisphere. In addition to the cortical projections, proprioceptive and somatosensory information is projected to many subcortical structures, such as the cerebellum.

FIGURE 5.15 The major somatosensory pathway (representative).

From skin to cortex, the primary pathway of the somatosensory system.

Somatosensory Processing

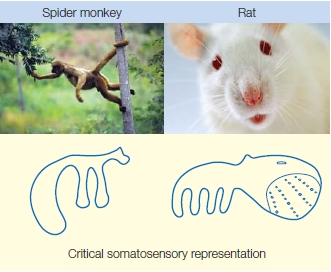

FIGURE 5.16 (a) Somatosensory cortex (S1) lies in the postcentral gyrus, the most anterior portion of the parietal lobe. The secondary somatosensory cortex (S2) is ventral to S1. (b) The somatosensory homunculus as seen along the lateral surface and in greater detail in the coronal section. Note that the body parts with the larger cortical representations are most sensitive to touch.

The initial cortical receiving area is called primary somatosensory cortex or S1 (Figure 5.16a), which includes Brodmann areas 1, 2, and 3. S1 contains a somatotopic representation of the body, called the sensory homunculus (Figure 5.16b). Recall from Chapter 2 that the relative amount of cortical representation in the sensory homunculus corresponds to the relative importance of somatosensory information for that part of the body. For example, the hands cover a much larger portion of the cortex than the trunk does. The larger representation of the hands is essential given the great precision we need in using our fingers to manipulate objects and explore surfaces. When blindfolded, we can readily identify an object placed in our hand, but we would have great difficulty in identifying an object rolled across our back.

Somatotopic maps show considerable variation across species. In each species, the body parts that are the most important for sensing the outside world through touch are the ones that have the largest cortical representation. A great deal of the spider monkey’s cortex is devoted to its tail, which it uses to explore objects that might be edible foods or for grabbing onto tree limbs. The rat, on the other hand, uses its whiskers to explore the world; so a vast portion of the rat somatosensory cortex is devoted to representing information obtained from the whiskers (Figure 5.17).

FIGURE 5.17 Variation in the organization of somatosensory cortex reflects behavioral differences across species.

The cortical area representing the tail of the spider monkey is large because this animal uses its tail to explore the environment as well as for support. The rat explores the world with its whiskers; clusters of neurons form whisker barrels in the rat somatosensory cortex.

Secondary somatosensory cortex (S2) builds more complex representations. From touch, for example, S2 neurons may code information about object texture and size. Interestingly, because of projections across the corpus callosum, S2 in each hemisphere receives information from both the left and the right sides of the body. Thus, when we manipulate an object with both hands, an integrated representation of the somatosensory information can be built up in S2.

Plasticity in the Somatosensory Cortex

Looking at the somatotopic maps may make you wonder just how much of that map is set in stone. What if you worked at the post office for many years sorting mail? Would you see changes in parts of the visual cortex that discriminate numbers? Or if you were a professional violinist, would your motor cortex be any bigger than that of the person who has never picked up a bow? Would anything happen to the part of your brain that represents your finger if you lost it in an accident? Would that part atrophy, or does the neighboring finger expand its representation and become more sensitive?

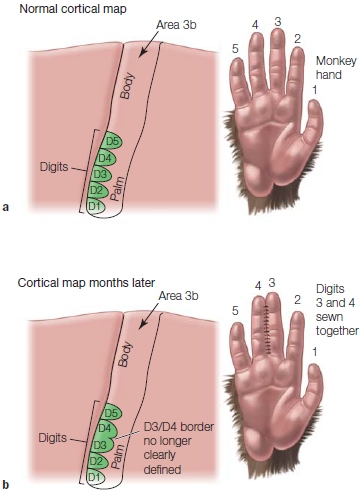

FIGURE 5.18 Reorganization of sensory maps in the primate cortex.

(a) In a mapping of the somatosensory hand area in normal monkey cortex, the individual digit representations can be revealed by single-unit recording. (b) If two fingers of one hand are sewn together, months later the cortical maps change such that the sharp border once present between the sewn fingers is now blurred.

In 1949, Donald Hebb bucked the assumption that the brain was set in stone after the early formative years. He suggested a theoretical framework for how functional reorganization, or what neuroscientists refer to as cortical plasticity, might occur in the brain through the remodeling of neuronal connections. Since then, more people have been looking for and observing brain plasticity in action. Michael Merzenich (Merzenich & Jenkins, 1995; Merzenich et al., 1988) at the University of California, San Francisco, and Jon Kaas (1995) at Vanderbilt University discovered that in adult monkeys, the size and shape of the cortical sensory and motor maps can be altered by experience. For example, when the nerve fibers from a finger to the spinal cord are severed (deafferented), the relevant part of the cortex no longer responds to the touch of that finger (Figure 5.18). Although this is no big surprise, the strange part is that the area of the cortex that formerly represented the denervated finger soon becomes active again. It begins to respond to stimulation from the finger adjacent to the denervated finger. The surrounding cortical area fills in and takes over the silent area. Similar changes are found when a particular finger is given extended sensory stimulation: It gains a little more acreage on the cortical map. This functional plasticity suggests that the adult cortex is a dynamic place where changes can still happen.

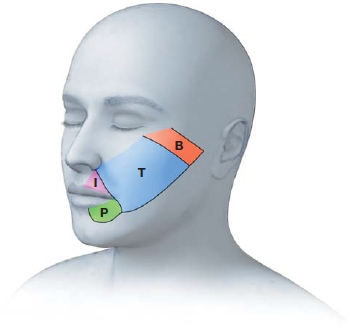

FIGURE 5.19 Perceived sensation of a phantom, amputated hand following stimulation of the face.

A Q-tip was used to lightly brush different parts of the face. The letters indicate the patient’s perceptual experience. The region labeled T indicates where the patient experienced touch on his phantom thumb; P indicates the pinkie, I the index finger, and B the ball of the thumb.

Extending these findings to humans, Vilayanur Ramachandran at the University of California, San Diego, studied the cortical mapping of human amputees. Look again at the human cortical somatosensory map in Figure 5.16b. What body part is represented next to the fingers and hand? Ramachandran reasoned that a cortical rearrangement ought to take place if an arm is amputated, just as had been found for the amputation of a digit in monkeys. Such a rearrangement might be expected to create bizarre patterns of perception, since the face area is next to the hand and arm area. Indeed, in one case study, Ramachandran (1993) examined a young man whose arm had been amputated just above the elbow a month earlier. When a cotton swab was brushed lightly against his face, he reported feeling his amputated hand being touched! The feeling of sensation in a missing limb is the well-known phenomenon of phantom limb sensation. The sensation in the missing limb is produced by touching a body part that has appropriated the missing limb’s old acreage in the cortex. In this case, the sensation was induced by stimulating the face. Indeed, with careful examination, a map of the young man’s hand could be demonstrated on his face (Figure 5.19).

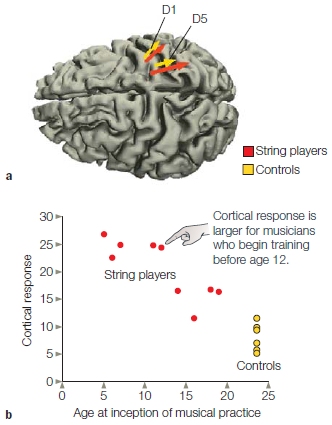

FIGURE 5.20 Increase in cortical representation of the fingers in musicians who play string instruments.

(a) Source of MEG activity for controls (yellow) and musicians (red) following stimulation of the thumb (D1) and fifth finger (D5). The length of the arrows indicates the extent of the responsive region. (b) The size of the cortical response, plotted as a function of the age at which the musicians began training. Responses were larger for those who began training before the age of 12 years; controls are shown at the lower right of the graph.

These examples of plasticity led researchers to wonder if changes in experience within the normal range (say, due to training and practice) also result in changes in the organization of the adult human brain. Thomas Elbert and his colleagues at the University of Konstanz used magnetoencephalography (MEG) to investigate the somatosensory representations of the hand area in violin players (Elbert et al., 1995). They found that the responses in the musicians’ right hemisphere, which controls the left-hand fingers that manipulate the violin strings, were stronger than those observed in nonmusicians (Figure 5.20). What’s more, they observed that the size of the effect (the enhancement in the response) correlated with the age at which the players began their musical training. These findings suggest that a larger cortical area was dedicated to representing the sensations from the fingers of the musicians, owing to their altered but otherwise normal sensory experience. Another study used a complex visuomotor task: juggling. After 3 months of training, the new jugglers had increased gray matter in the extrastriate motion-specific area in their visual cortex and in the left intraparietal sulcus, an area that is important in spatial judgments (Draganski et al., 2004). Indeed, there is evidence that cortical reorganization can occur after just 15 to 30 minutes of practice (Classen et al., 1998).

The kicker is, however, that when the jugglers stopped practicing, these areas of their brain returned to their pretraining size, demonstrating something that we all know from experience: Use it or lose it. The realization that plasticity is alive and well in the brain has fueled hopes that stroke victims who have damaged cortex with resultant loss of limb function may be able to structurally reorganize their cortex and regain function. Researchers are actively investigating how this process might be encouraged. One approach is to better understand the mechanisms involved.

Mechanisms of Cortical Plasticity

Most of the evidence for the mechanisms of cortical plasticity comes from animal studies. The results suggest a cascade of effects, operating across different timescales. Rapid changes probably reflect the unveiling of weak connections that already exist in the cortex. Longer-term plasticity may result from the growth of new synapses and/or axons.

Immediate effects are likely to be due to a sudden reduction in inhibition that normally suppresses inputs from neighboring regions. Reorganization in the motor cortex has been found to depend on the level of gamma-aminobutyric acid (GABA), the principal inhibitory neurotransmitter (Ziemann et al., 2001). When GABA levels are high, activity in individual cortical neurons is relatively stable. If GABA levels are lower, however, then the neurons may respond to a wider range of stimuli. For example, a neuron that responds to the touch of one finger will respond to the touch of other fingers if GABA is blocked. Interestingly, temporary deafferentation of the hand (by blocking blood flow to the hand) leads to a lowering of GABA levels in the brain. These data suggest that short-term plasticity may be controlled by a release of tonic inhibition on synaptic input (thalamic or intracortical) from remote sources.
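The idea that lowering inhibition unmasks inputs that were already present can be made concrete with a deliberately simple sketch. Everything numerical here is hypothetical: the finger labels, the input strengths, the inhibition values, and the assumption that inhibition simply subtracts from each input are illustrative choices, not measurements or a model taken from the studies cited above.

```python
# Toy sketch of "unmasking" by reduced tonic inhibition. Not a physiological model.
# Assumption: each input's net drive is its excitatory strength minus a shared
# tonic inhibition term, and the neuron responds to an input if net drive > 0.

def responsive_fingers(excitatory_inputs, tonic_inhibition):
    """Return the fingers whose net drive (excitation - inhibition) is positive."""
    return [
        finger
        for finger, strength in excitatory_inputs.items()
        if strength - tonic_inhibition > 0
    ]

# Hypothetical input strengths onto one cortical neuron: a strong input from
# its "own" finger (D2) and weak inputs from the neighboring fingers.
inputs = {"D2": 1.0, "D1": 0.3, "D3": 0.3}

high_gaba = responsive_fingers(inputs, tonic_inhibition=0.5)  # strong inhibition
low_gaba = responsive_fingers(inputs, tonic_inhibition=0.1)   # inhibition released

print(high_gaba)  # only the dominant finger
print(low_gaba)   # the weak neighboring inputs are unmasked as well
```

In this sketch no connection is created or strengthened; the receptive field widens only because the same weak inputs are no longer suppressed, which is the sense in which rapid plasticity may reflect existing circuitry.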

Changes in cortical mapping over a period of days probably involve changes in the efficacy of existing circuitry. After loss of normal sensory input (e.g., through amputation or peripheral nerve section), cortical neurons that previously responded to that input might undergo “denervation hypersensitivity.” That is, the strength of the responses to any remaining weak excitatory input is upregulated: Remapping might well depend on such modulations of synaptic efficacy. Strengthening of synapses is enhanced in the motor cortex by the neurotransmitters norepinephrine, dopamine, and acetylcholine; it is decreased in the presence of drugs that block the receptors for these transmitters (Meintzschel & Ziemann, 2005). These changes are similar to the forms of long-term potentiation and depression in the hippocampus that are thought to underlie the formation of spatial and episodic memories that we will discuss in Chapter 9.

Finally, some evidence in animals suggests that the growth of intracortical axonal connections and even sprouting of new axons might contribute to very slow changes in cortical plasticity.

TAKE-HOME MESSAGES

Vision

Now let’s turn to a more detailed analysis of the most widely studied sense: vision. Like most other diurnal creatures, humans depend on the sense of vision. Although other senses, such as hearing and touch, are also important, visual information dominates our perceptions and appears even to frame the way we think. Much of our language, even when used to describe abstract concepts with metaphors, makes reference to vision. For example, we say “I see” to indicate that something is understood, or “Your hypothesis is murky” to indicate confused thoughts.

Neural Pathways of Vision

One reason vision is so important is that it enables us to perceive information at a distance, to engage in what is called remote sensing or exteroceptive perception. We need not be in immediate contact with a stimulus to process it. Contrast this ability with the sense of touch. For touch, we must be in direct contact with the stimulus. The advantages of remote sensing are obvious. An organism surely can avoid a predator better when it can detect the predator at a distance. It is probably too late to flee once a shark has sunk its teeth into you, no matter how fast your neural response is to the pain.

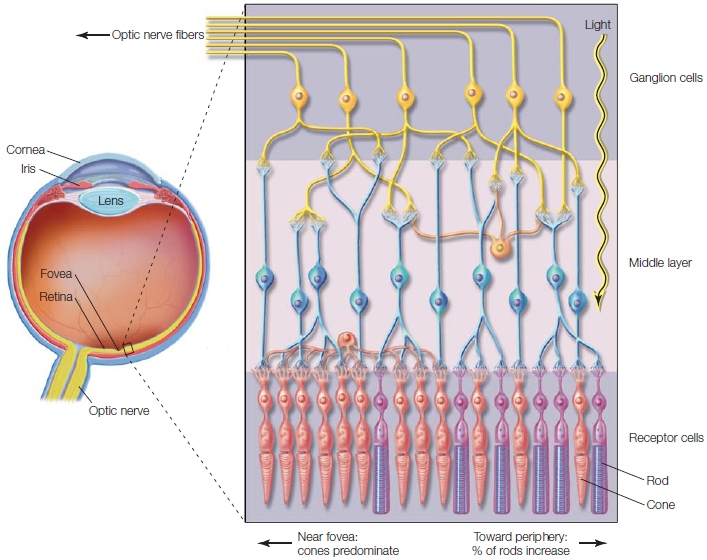

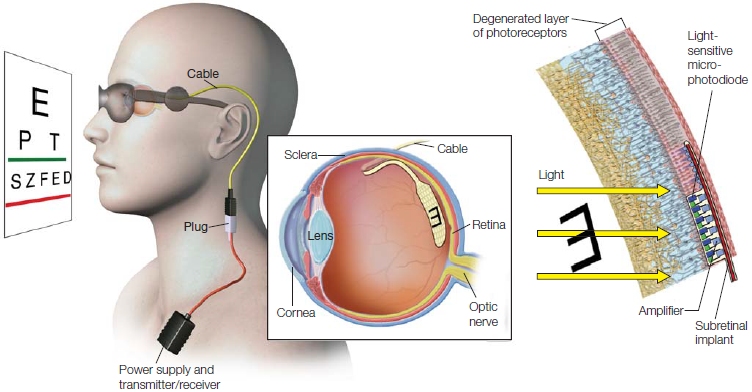

The Receptors Visual information is contained in the light reflected from objects. To perceive objects, we need sensory detectors that respond to the reflected light. As light passes through the lens of the eye, the image is inverted and focused to project on the back surface of the eye (Figure 5.21), the retina. The retina is only about 0.5 mm thick, but it is made up of 10 densely packed layers of neurons. The deepest layers are composed of millions of photoreceptors, the rods and cones. These contain photopigments, protein molecules that are sensitive to light. When exposed to light, the photopigments become unstable and split apart. Unlike most neurons, rods and cones do not fire action potentials. The decomposition of the photopigments alters the membrane potential of the photoreceptors and triggers action potentials in downstream neurons. Thus, photoreceptors provide for translation of the external stimulus of light into an internal neural signal that the brain can interpret.

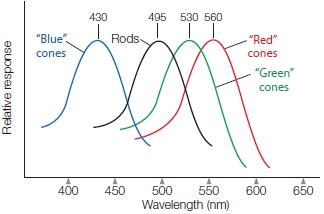

The rods contain the pigment rhodopsin, which is destabilized by low levels of light. Rods are most useful at night when light energy is reduced. Rods also respond to bright light, but the pigment quickly becomes depleted and the rods cease to function until it is replenished. Because this takes several minutes, they are of little use during the day. Cones contain a different type of photopigment, called a photopsin. Cones require more intense levels of light but can replenish their photopigments rapidly. Thus, cones are most active during daytime vision. There are three types of cones, defined by their sensitivity to different regions of the visible spectrum: (a) a cone that responds to short wavelengths, the blue part of the spectrum; (b) one that responds to medium wavelengths, the greenish region; and (c) one that responds to the long “reddish” wavelengths (Figure 5.22). The activity of these three different receptors ultimately leads to our ability to see color.

FIGURE 5.21 Anatomy of the eye and retina.

Light enters through the cornea and activates the receptor cells of the retina located along the rear surface. There are two types of receptor cells: rods and cones. The output of the receptor cells is processed in the middle layer of the retina and then relayed to the central nervous system via the optic nerve, the axons of the ganglion cells.

FIGURE 5.22 Spectral sensitivity functions for rods and the three types of cones.

The short-wavelength (“blue”) cones are maximally responsive to light with a wavelength of 430 nm. The peak sensitivities of the medium-wavelength (“green”) and long-wavelength (“red”) cones are shifted to longer wavelengths. White light, such as daylight, activates all three receptors because it contains all wavelengths.
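A small sketch can illustrate how three overlapping sensitivity curves let the pattern of activity across cone types carry wavelength information. Only the 430 nm peak for the short-wavelength cone comes from the caption above. The medium and long peaks (530 and 560 nm), the Gaussian shape of the curves, and the 40 nm width are simplifying assumptions made for illustration, not values taken from Figure 5.22.

```python
import math

# Toy model of cone spectral sensitivity. Not fitted to real data.
PEAKS_NM = {
    "S": 430.0,  # short-wavelength peak, as stated in the caption
    "M": 530.0,  # assumed approximate peak for medium-wavelength cones
    "L": 560.0,  # assumed approximate peak for long-wavelength cones
}
BANDWIDTH_NM = 40.0  # assumed width of each Gaussian curve


def cone_responses(wavelength_nm):
    """Relative response (0 to 1) of each cone type to a single wavelength."""
    return {
        name: math.exp(-((wavelength_nm - peak) ** 2) / (2 * BANDWIDTH_NM ** 2))
        for name, peak in PEAKS_NM.items()
    }


def most_active_cone(wavelength_nm):
    """Name of the cone type with the largest response at this wavelength."""
    responses = cone_responses(wavelength_nm)
    return max(responses, key=responses.get)


for wavelength in (430, 530, 600):
    print(wavelength, most_active_cone(wavelength), cone_responses(wavelength))
```

No single cone type identifies a wavelength on its own, because a weak response could mean dim light or a wavelength far from the peak. It is the ratio of activity across the three types that differs from one wavelength to the next.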

Rods and cones are not distributed equally across the retina. Cones are densely packed near the center of the retina, in a region called the fovea. Few cones are in the more peripheral regions of the retina. In contrast, rods are distributed throughout the retina. You can easily demonstrate the differential distribution of rods and cones by having a friend slowly bring a colored marker into your view from one side of your head. Notice that you see the marker and its shape well before you identify its color, because of the sparse distribution of cones in the retina’s peripheral regions.

The Retina to the Central Nervous System The rods and cones are connected to bipolar neurons that then synapse with the ganglion cells, the output layer of the retina. The axons of these cells form a bundle, the optic nerve, that transmits information to the central nervous system. Before any information is shipped down the optic nerve, however, extensive processing occurs within the retina, an elaborate convergence of information. Indeed, though humans have an estimated 260 million photoreceptors, we have only 2 million ganglion cells to telegraph information from the retina. Many rods feed into a single ganglion cell. By summing their outputs, the rods can activate a ganglion cell even in low light situations. For cones, however, the story is different: Each ganglion cell is innervated by only a few cones. Thus, they carry much more specific information from only a few receptors, ultimately providing a sharper image. The compression of information, as with the auditory system, suggests that higher-level visual centers should be efficient processors to unravel this information and recover the details of the visual world.
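The degree of convergence, and why summation helps rods in dim light, can be worked through with a few lines of arithmetic. The photoreceptor and ganglion cell counts are the estimates quoted above. The per-receptor signal, the firing threshold, and the numbers of receptors feeding each cell are hypothetical values chosen only to make the contrast visible.

```python
from typing import Sequence

# Estimates quoted in the text.
PHOTORECEPTORS = 260_000_000
GANGLION_CELLS = 2_000_000


def average_convergence(photoreceptors: int, ganglion_cells: int) -> float:
    """Average number of photoreceptors feeding each ganglion cell."""
    return photoreceptors / ganglion_cells


def ganglion_fires(receptor_signals: Sequence[float], threshold: float) -> bool:
    """Toy rule: the ganglion cell fires if its summed input reaches threshold."""
    return sum(receptor_signals) >= threshold


ratio = average_convergence(PHOTORECEPTORS, GANGLION_CELLS)

# Hypothetical values: in dim light each receptor contributes a weak signal,
# and a ganglion cell needs a summed input of 1.0 to fire.
DIM_SIGNAL = 0.1
THRESHOLD = 1.0

rod_pathway = ganglion_fires([DIM_SIGNAL] * 100, THRESHOLD)  # many rods converge
cone_pathway = ganglion_fires([DIM_SIGNAL] * 3, THRESHOLD)   # only a few cones

print(ratio, rod_pathway, cone_pathway)
```

On average, then, about 130 photoreceptors share each ganglion cell. The sketch also shows the trade-off described above: pooling many weak signals buys sensitivity in low light, whereas a cell fed by only a few receptors needs stronger light but reports on a much smaller patch of the retina.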

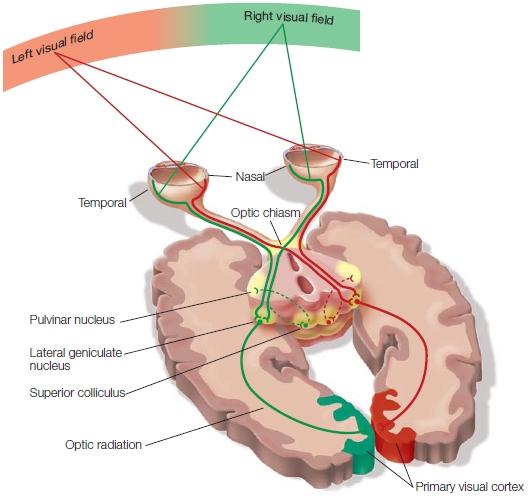

FIGURE 5.23 The primary projection pathways of the visual system.

The optic fibers from the temporal half of the retina project ipsilaterally, and the nasal fibers cross over at the optic chiasm. In this way, the input from each visual field is projected to the primary visual cortex in the contralateral hemisphere after the fibers synapse in the lateral geniculate nucleus (geniculocortical pathway). A small percentage of visual fibers of the optic nerve terminate in the superior colliculus and pulvinar nucleus.

Figure 5.23 diagrams how visual information is conveyed from the eyes to the central nervous system. As we discussed in the last chapter, before entering the brain, each optic nerve splits into two parts. The temporal (lateral) branch continues to traverse along the ipsilateral side. The nasal (medial) branch crosses over to project to the contralateral side; this crossover place is called the optic chiasm. Given the eye’s optics, the crossover of nasal fibers ensures that visual information from each side of external space will be projected to contralateral brain structures. Because of the retina’s curvature, the temporal half of the right retina is stimulated by objects in the left visual field. In the same fashion, the nasal hemiretina of the left eye is stimulated by this same region of external space. Because fibers from each nasal hemiretina cross, all information from the left visual field is projected to the right hemisphere, and information from the right visual field is projected to the left hemisphere.
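The routing rule described in this paragraph can be written out as a short sketch. The function names and the string labels are hypothetical conveniences; the logic encodes only the two facts stated above, namely which hemiretina each visual field falls on and that nasal fibers cross at the optic chiasm while temporal fibers do not.

```python
# Sketch of the routing rule for the geniculocortical pathway described above.

def opposite(side):
    """Return the other side: 'left' <-> 'right'."""
    return "right" if side == "left" else "left"


def hemiretina_for(eye, visual_field):
    """Which half of the given eye's retina is stimulated by a visual field.

    The left visual field falls on the nasal half of the left eye and the
    temporal half of the right eye; the right visual field is the mirror image.
    """
    return "nasal" if eye == visual_field else "temporal"


def target_hemisphere(eye, hemiretina):
    """Hemisphere receiving fibers from one hemiretina of one eye.

    Temporal fibers stay ipsilateral; nasal fibers cross at the optic chiasm.
    """
    return eye if hemiretina == "temporal" else opposite(eye)


for field in ("left", "right"):
    for eye in ("left", "right"):
        half = hemiretina_for(eye, field)
        print(field, "visual field,", eye, "eye,", half, "hemiretina ->",
              target_hemisphere(eye, half), "hemisphere")
```

Running through all four combinations shows that both eyes send information about a given visual field to the same, contralateral hemisphere, even though only the nasal fibers cross.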

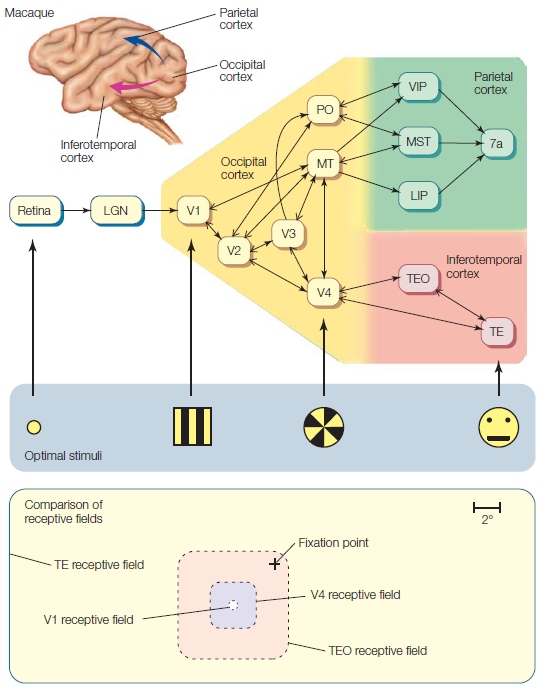

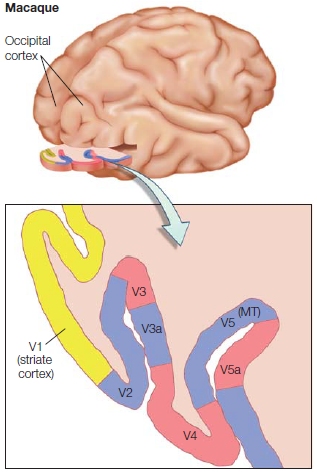

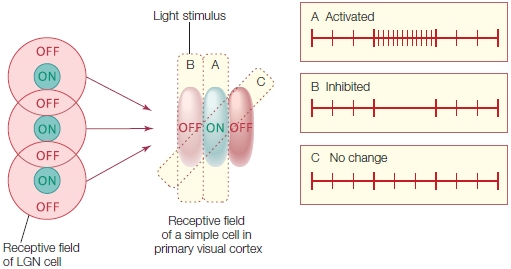

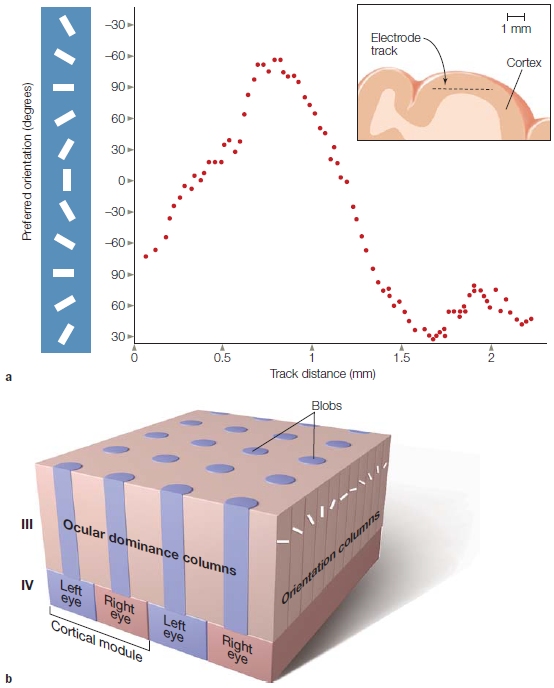

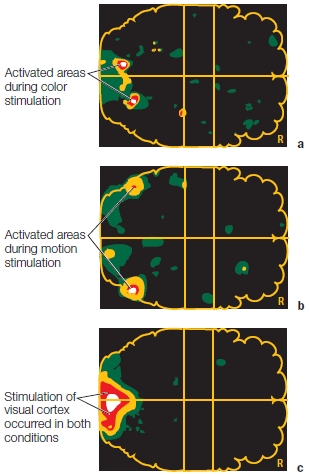

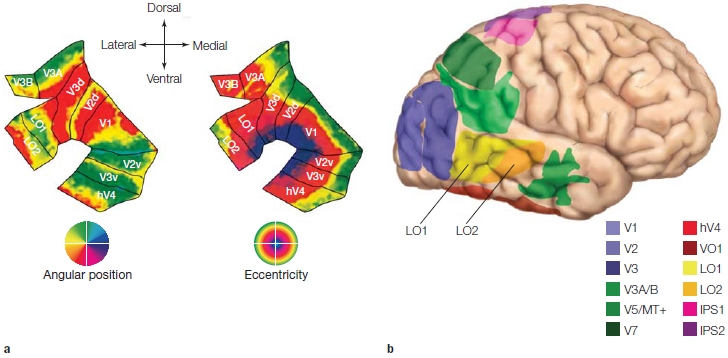

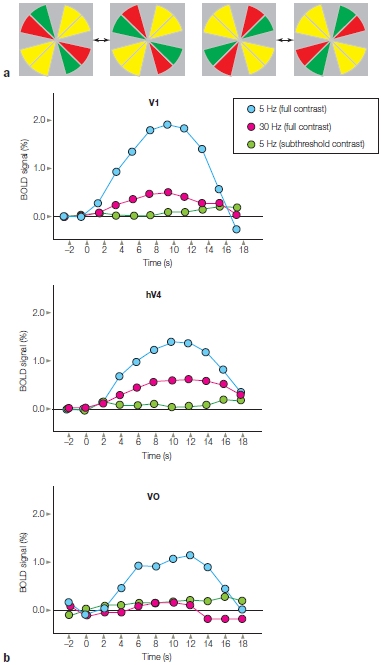

Each optic nerve divides into several pathways that differ with respect to where they terminate in the subcortex. Figure 5.23 focuses on the pathway that contains more than 90% of the axons in the optic nerve, the retinogeniculate pathway, the projection from the retina to the lateral geniculate nucleus (LGN) of the thalamus. The LGN is made up of six layers. One type of ganglion cell, the M cell, sends output to the bottom two layers. Another type of ganglion cell, the P cell, projects to the top four layers. The remaining 10% of the optic nerve fibers innervate other subcortical structures, including the pulvinar nucleus of the thalamus and the superior colliculus of the midbrain. Even though these other receiving nuclei are innervated by only 10% of the fibers, these pathways are still important. The human optic nerve is so large that 10% of it constitutes more fibers than are found in the entire auditory pathway. The superior colliculus and pulvinar nucleus play a large role in visual attention.