
Though this be madness, yet there is method in’t.

~ William Shakespeare


Chapter 3

Methods of Cognitive Neuroscience

OUTLINE

Cognitive Psychology and Behavioral Methods

Studying the Damaged Brain

Methods to Perturb Neural Function

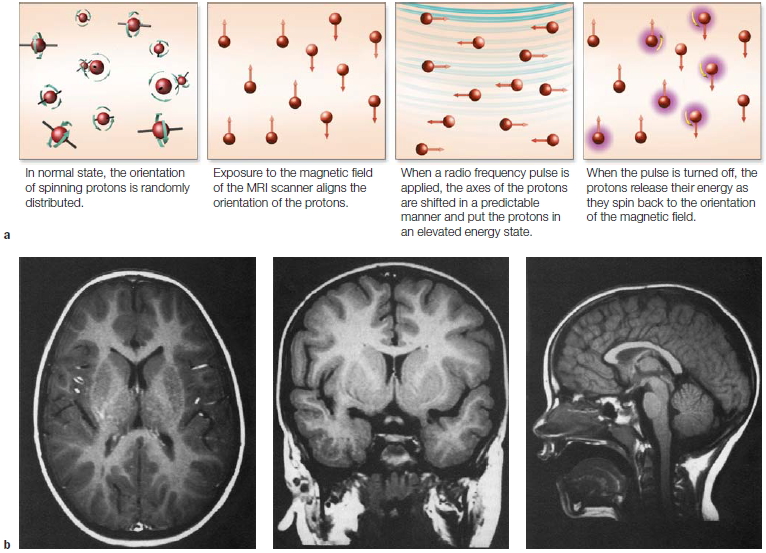

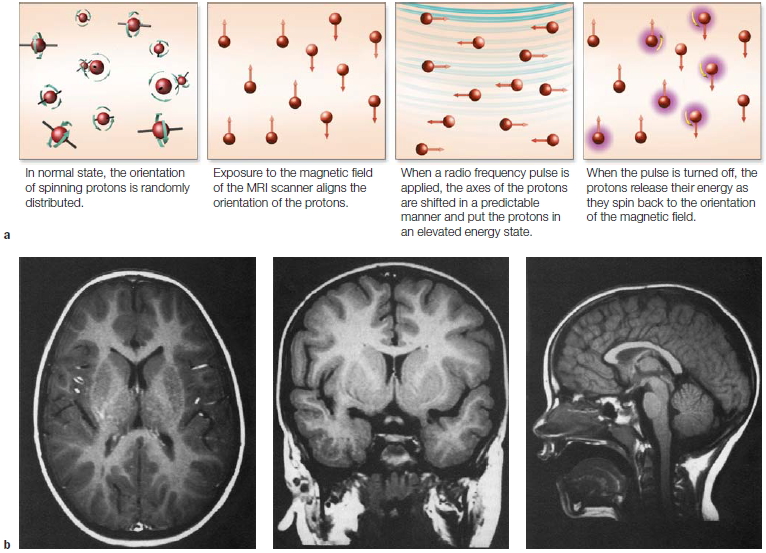

Structural Analysis of the Brain

Methods for the Study of Neural Function

The Marriage of Function and Structure: Neuroimaging

Brain Graphs

Computer Modeling

Converging Methods

IN THE YEAR 2010, Halobacterium halobium and Chlamydomonas reinhardtii made it to prime time as integral parts of the journal Nature’s “Method of the Year.” These microscopic creatures were hailed for their potential to treat a wide range of neurological and psychiatric conditions: anxiety disorder, depression, and Parkinson’s disease, just to name a few. Not bad for a bacterium that hangs out in warm brackish waters and an alga more commonly known as pond scum.

Such grand ambitions for these humble creatures likely never occurred to Dieter Oesterhelt and Walther Stoeckenius (1971), biochemists who wanted to understand why the salt-loving Halobacterium, when removed from its salty environment, would break up into fragments, and why one of these fragments took on an unusual purple hue. Their investigations revealed that the purple color was due to the interaction of retinal (a form of vitamin A) and a protein produced by a set of “opsin genes.” Thus they dubbed this new compound bacteriorhodopsin. The particular combination surprised them. Previously, the only place where the combined form of retinal and an opsin protein had been observed was in the mammalian eye, where it serves as the chemical basis for vision. In Halobacterium, bacteriorhodopsin functions as an ion pump, converting light energy into metabolic energy as it transfers ions across the cell membrane. Other members of this protein family were identified over the next 25 years, including channelrhodopsin from the green alga C. reinhardtii (Nagel et al., 2002). The light-sensitive properties of microbial rhodopsins turned out to provide just the mechanism that neuroscientists had been dreaming of.

In 1979, Francis Crick, a codiscoverer of the structure of DNA, made a wish list for neuroscientists. What neuroscientists really needed, he suggested, was a way to selectively switch neurons on and off, and to do so with great temporal precision. Assuming this manipulation did not harm the cell, a technique like this would enable researchers to directly probe how neurons functionally relate to each other in order to control behavior. Twenty years later, Crick (1999) proposed that light might somehow serve as the switch, because it could be precisely delivered in timed pulses. Unknown to him, and the neuroscience community in general, the key to developing this switch was moldering away in the back editions of plant biology journals, in the papers inspired by Oesterhelt and Stoeckenius’s work on the microbial rhodopsins.

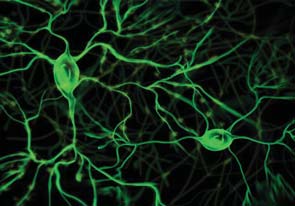

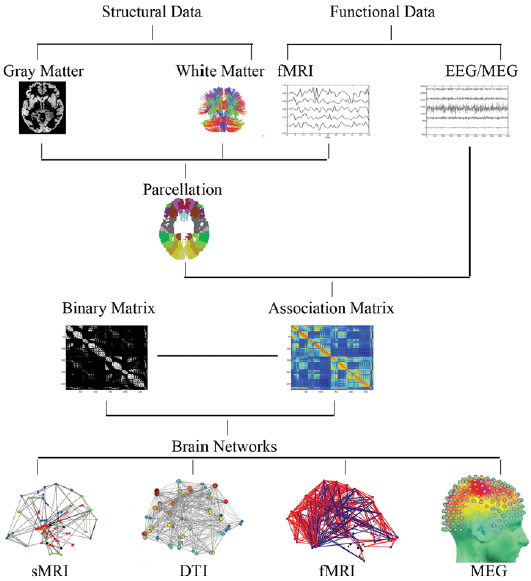

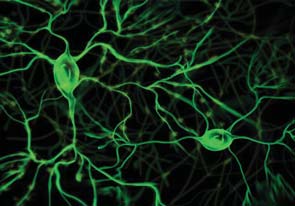

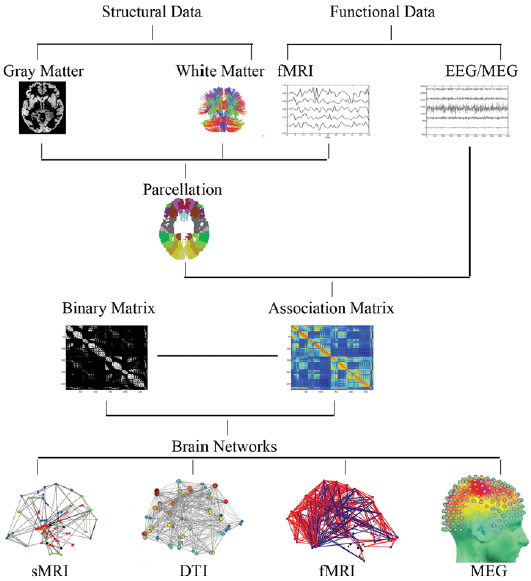

A few years later, Gero Miesenböck provided the first demonstration of how photoreceptor proteins could control neural activity. The key challenge was getting the proteins into the cell. Miesenböck accomplished this feat by inserting genes that, when expressed, made targeted cells light responsive (Zemelman et al., 2002). Expose the cell to light, and the neuron would fire. With this methodological breakthrough, optogenetics was born (Figure 3.1).

Miesenböck’s initial compound proved to have limited usefulness, however. But just a few years later, two graduate students at Stanford, Karl Deisseroth and Ed Boyden, became interested in the opsins as possible neuronal switches (Boyden, 2011). They focused on channelrhodopsin-2 (ChR-2), since a single gene encodes this opsin, making it easier to use molecular biology tools. Using Miesenböck’s technique, a method that has come to be called viral transduction, they spliced the gene for ChR-2 into a neutral virus and then added this virus to a culture of live nerve cells growing in a petri dish. The virus acted like a ferry, carrying the gene into the cell. Once the ChR-2 gene was inside the neurons and the protein had been expressed, Deisseroth and Boyden performed the critical test: They projected a light beam onto the cells. Immediately, the targeted cells began to respond. By pulsing the light, the researchers were able to do exactly what Crick had proposed: precisely control the neuronal activity. Each pulse of light stimulated the production of an action potential; and when the pulse was discontinued, the neuron shut down.

Emboldened by this early success, Deisseroth and Boyden set out to see if the process could be repeated in live animals, starting with a mouse model. Transduction methods were widely used in molecular biology, but it was important to verify that ChR-2 would be expressed in targeted tissue and that the introduction of this rhodopsin would not damage the cells. Another challenge these scientists faced was the need to devise a method of delivering light pulses to the transduced cells. For their initial in vivo study, they implanted a tiny optical fiber in the part of the brain containing motor neurons that control the mouse’s whiskers. When a blue light was pulsed, the whiskers moved (Aravanis et al., 2007). Archimedes, as well as Francis Crick, would have shouted, “Eureka!”


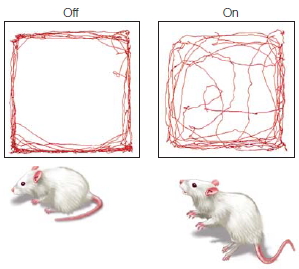

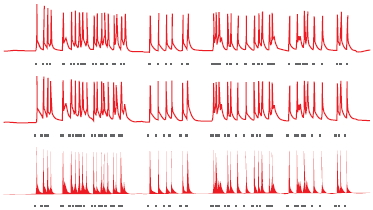

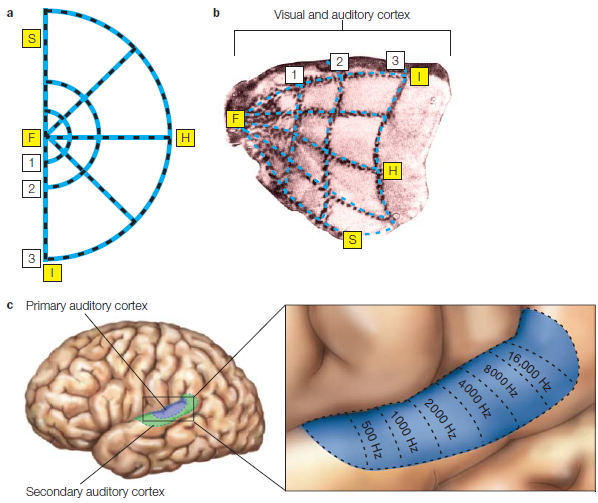

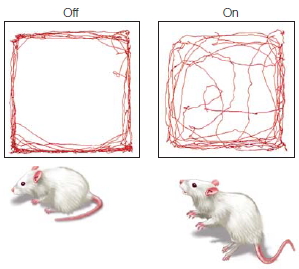

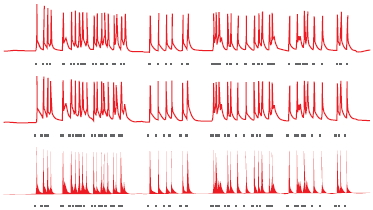

FIGURE 3.1 Optogenetic control of neural activity.

(a) Hippocampal neuron that has been genetically modified to express channelrhodopsin-2, a protein that forms light-gated ion channels. (b) Activity in three neurons when exposed to a blue light. The small grey dashes below each neuron indicate when the light was turned on (same stimulus for all three neurons). The firing pattern of the cells is tightly coupled to the light, indicating the experimenter can control, to a large extent, the activity of the cells. (c) Behavioral changes resulting from optogenetic stimulation of cells in a subregion of the amygdala. When placed in an open, rectangular arena, mice generally stay close to the walls. With amygdala activation, the mice become less fearful, venturing out into the open part of the arena.


The overarching method that neuroscientists use, of course, is the scientific method. This process begins with an observation of a phenomenon. Such an observation can come from various types of populations: animal or human, normally functioning or abnormally functioning. The scientist devises a hypothesis to explain an observation and makes predictions drawn from the hypothesis. The next step is designing experiments to test the hypothesis and its predictions. Such experiments employ the various methods that we discuss in this chapter. Experiments cannot prove that a hypothesis is true. Rather, they can provide support for a hypothesis. Even more important, experiments can be used to disprove a hypothesis, providing evidence that a prevailing idea must be modified. By documenting this process and having it repeated again and again, the scientific method allows our understanding of the world to progress.

Optogenetic techniques are becoming increasingly versatile (for a video on optogenetics, see http://spie.org/x48167.xml?ArticleID=x48167). Many new opsins have been discovered, including ones that respond to different colors of visible light. Others respond to infrared light. Infrared light is advantageous because it penetrates tissue, and thus, it may eliminate the need for implanting optical fibers to deliver the light pulse to the target tissue. Optogenetic methods have been used to turn on and off cells in many parts of the brain, providing experimenters with new tools to manipulate behavior. A demonstration of the clinical potential of this method comes from a recent study in which optogenetic methods were able to reduce anxiety in mice (Tye et al., 2011). After the researchers created light-sensitive neurons in the animals’ amygdala (see Chapter 10), a flash of light was sufficient to motivate the mice to move away from the wall of their home cage and boldly step out into the center. Interestingly, this effect worked only if the light was targeted at a specific subregion of the amygdala. If the entire structure was exposed to the light, the mice remained anxious and refused to explore their cages.

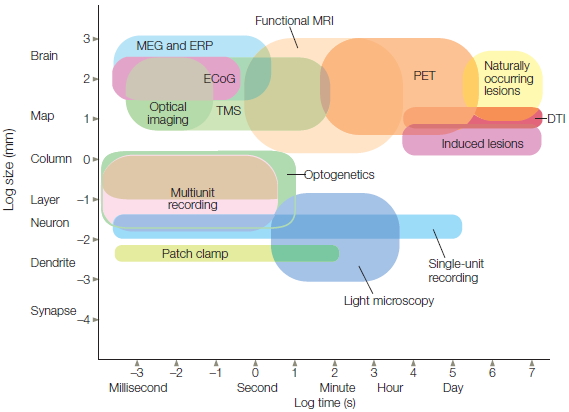

Theoretical breakthroughs in all scientific domains can be linked to the advent of new methods and the development of novel instrumentation. Cognitive neuroscience is no exception. It is a field that emerged in part because of the invention of new methods, some of which use advanced tools unavailable to scientists of previous generations (see Chapter 1; Sejnowski & Churchland, 1989). In this chapter, we discuss how these methods work, what information can be derived from them, and their limitations. Many of these methods are shared with other players in the neurosciences, from neurologists and neurosurgeons to physiologists and philosophers. Cognitive neuroscience endeavors to take advantage of the insights that each approach has to offer and combine them. By addressing a question from different perspectives and with a variety of techniques, the conclusions arrived at can be made with more confidence.

We begin the chapter with cognitive psychology and the behavioral methods it uses to gain insight into how the brain represents and manipulates information. We then turn to how these methods have been used to characterize the behavioral changes that accompany neurological insult or disorder, the subfield traditionally known as neuropsychology. While neuropsychological studies of human patients are dependent on the vagaries of nature, the basic logic of the approach is now pursued with methods in which neural function is deliberately perturbed. We review a range of methods used to perturb neural function. Following this, we turn to more observational methods, first reviewing ways in which cognitive neuroscientists measure neurophysiological signals in either human or animal models, and then examining methods in which neural structure and function are inferred through measurements of metabolic and hemodynamic processes. When studying an organ with 11 billion basic elements and gazillions of connections between these elements, we need tools that can be used to organize the data and yield simplified models to evaluate hypotheses. We provide a brief overview of computer modeling and how it has been used by cognitive neuroscientists, and we review a powerful analytical and modeling tool, brain graph theory, which transforms neuroimaging data into models that elucidate the network properties of the human brain. The interdisciplinary nature of cognitive neuroscience has depended on the clever ways in which scientists have integrated paradigms across all of these fields and methodologies. The chapter concludes with examples of this integration. Andiamo!

Cognitive Psychology and Behavioral Methods

Cognitive neuroscience has been informed by the principles of cognitive psychology, the study of mental activity as an information-processing problem. Cognitive psychologists are interested in describing human performance, the observable behavior of humans (and other animals). They also seek to identify the internal processing (the acquisition, storage, and use of information) that underlies this performance. A basic assumption of cognitive psychology is that we do not directly perceive and act in the world. Rather, our perceptions, thoughts, and actions depend on internal transformations or computations. Information is obtained by sense organs, but our ability to comprehend that information, to recognize it as something that we have experienced before, and to choose an appropriate response depends on a complex interplay of processes. Cognitive psychologists design experiments to test hypotheses about mental operations by adjusting what goes into the brain and then seeing what comes out. Put more simply, information is input into the brain, something secret happens to it, and out comes behavior. Cognitive psychologists are detectives trying to figure out what those secrets are.

For example, input this text into your brain and let’s see what comes out:

ocacdrngi ot a sehrerearc ta maccbriegd ineyurvtis, ti edost’n rttaem ni awth rreod eht tlteser ni a rwdo rea, eht ylon pirmtoatn gihtn si atth het rifts nda satl ttelre eb tat het ghitr clepa. eht srte anc eb a otlta sesm dan ouy anc itlls arde ti owtuthi moprbel. ihst si cebusea eth nuamh nidm sedo otn arde yrvee telrte yb stifle, tub eth rdow sa a lohew.

Not much, eh? Now take another shot at it:

Aoccdrnig to a rseheearcr at Cmabrigde Uinervtisy, it deosn’t mttaer in waht oredr the ltteers in a wrod are, the olny iprmoatnt tihng is taht the frist and lsat ltteer be at the rghit pclae. The rset can be a total mses and you can sitll raed it wouthit porbelm. Tihs is bcuseae the huamn mnid deos not raed ervey lteter by istlef, but the wrod as a wlohe.

Oddly enough, the second version makes sense. It is surprisingly easy to read the second passage, even though only a few words are correctly spelled. As long as the first and last letters of each word are in the correct position, we can accurately infer the correct spelling, especially when the surrounding context helps generate expectations for each word. Simple demonstrations like this one help us discern the content of mental representations, and thus, help us gain insight into how information is manipulated by the mind.
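The manipulation behind the second passage can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used to produce the passage above: the function names `scramble_word` and `scramble_text` and the `seed` parameter are hypothetical, and the sketch assumes that only runs of ASCII letters count as words. It shuffles the interior letters of each word while leaving the first and last letters, the spaces, and the punctuation in place.

```python
import random
import re


def scramble_word(word: str, rng: random.Random) -> str:
    """Shuffle the interior letters of a word, keeping first and last fixed."""
    if len(word) <= 3:
        return word  # at most one interior letter, so nothing can move
    interior = list(word[1:-1])
    rng.shuffle(interior)
    return word[0] + "".join(interior) + word[-1]


def scramble_text(text: str, seed: int = 0) -> str:
    """Scramble every run of letters in the text; leave everything else alone."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    return re.sub(r"[A-Za-z]+", lambda m: scramble_word(m.group(0), rng), text)


if __name__ == "__main__":
    print(scramble_text("The human mind does not read every letter by itself."))
```

Running the sketch on a sentence of your own choosing is a quick way to check how much the surrounding context contributes: long, unfamiliar words tend to be harder to recover than short, predictable ones.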

Cognitive neuroscience is distinctive in the study of the brain and behavior, because it combines paradigms developed in cognitive psychology with methods employed to study brain structure and function. Next, we introduce some of those paradigms.

Ferreting Out Mental Representations and Transformations

Two key concepts underlie the cognitive approach:

- Information processing depends on internal representations.

- These mental representations undergo transformations.

Mental Representations We usually take for granted the idea that information processing depends on internal representations. Consider the concept “ball.” Are you thinking of an image, a word description, or a mathematical formula? Each instance is an alternative form of representing the “circular” or “spherical” concept and depends on our visual system, our auditory system, our ability to comprehend the spatial arrangement of a curved drawing, our ability to comprehend language, or our ability to comprehend geometric and algebraic relations. The context would help dictate which representational format would be most useful. For example, if we wanted to show that the ball rolls down a hill, a pictorial representation is likely to be much more useful than an algebraic formula—unless you are doing your physics final, where you would likely be better off with the formula.

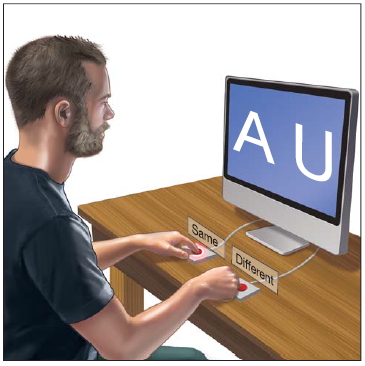

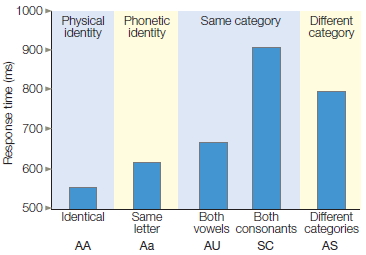

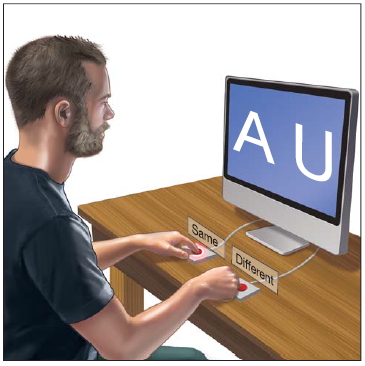

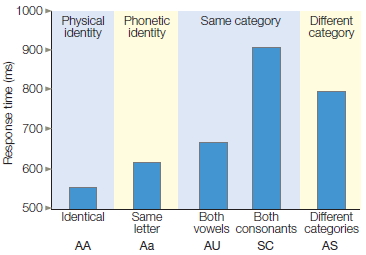

A letter-matching task, first introduced by Michael Posner (1986) at the University of Oregon, provides a powerful demonstration that even with simple stimuli, the mind derives multiple representations (Figure 3.2). Two letters are presented simultaneously in each trial. The participant’s task is to evaluate whether they are both vowels, both consonants, or one vowel and one consonant. The participant presses one button if the letters are from the same category, and the other button if they are from different categories.

One version of this experiment includes five conditions. In the physical-identity condition, the two letters are exactly the same. In the phonetic-identity condition, the two letters have the same identity, but one letter is a capital and the other is lowercase. There are two types of same-category conditions, conditions in which the two letters are different members of the same category. In one, both letters are vowels; in the other, both letters are consonants.

Experiments like the one represented in Figure 3.2 involve manipulating one variable and observing its effect on another variable. The variable that is manipulated is called the independent variable. It is what you (the researcher) have changed. In this example, the relationship of the two letters is the independent variable, defining the conditions of the experiment (e.g., Identical, Same letter, Both vowels, etc.). The dependent variable is the event being studied. In this example, it is the response time of the participant. When graphing the results of an experiment, the independent variable is displayed on the horizontal axis (Figure 3.2b) and the dependent variable is displayed on the vertical axis. Experiments can involve more than one independent and dependent variable.

Finally, in the different-category condition, the two letters are from different categories and can be either of the same type size or of different sizes. Note that the first four conditions (physical identity, phonetic identity, and the two same-category conditions) require the “same” response: On all four types of trials, the correct response is that the two letters are from the same category. Nonetheless, as Figure 3.2b shows, response latencies differ significantly. Participants respond fastest to the physical-identity condition, next fastest to the phonetic-identity condition, and slowest to the same-category conditions, especially when the two letters are both consonants.

The results of Posner’s experiment suggest that we derive multiple representations of stimuli. One representation is based on the physical aspects of the stimulus. In this experiment, it is a visually derived representation of the shape presented on the screen. A second representation corresponds to the letter’s identity. This representation reflects the fact that many stimuli can correspond to the same letter. For example, we can recognize that A and a, in whatever typeface they are printed, represent the same letter. A third level of abstraction represents the category to which a letter belongs. At this level, the letters A and E activate our internal representation of the category “vowel.” Posner maintains that different response latencies reflect the degrees of processing required to perform the letter-matching task. By this logic, we infer that physical representations are activated first, phonetic representations next, and category representations last.
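The five conditions of the letter-matching task can be written down as a small decision procedure, which makes the three levels of representation explicit. The following Python sketch is only an illustration: the names `category`, `classify_pair`, and `correct_response` are hypothetical, and it assumes that A, E, I, O, and U are the vowels and every other letter (including Y) is a consonant.

```python
VOWELS = set("AEIOU")  # assumption: Y is treated as a consonant


def category(letter: str) -> str:
    """Return the category ('vowel' or 'consonant') of a single letter."""
    return "vowel" if letter.upper() in VOWELS else "consonant"


def classify_pair(first: str, second: str) -> str:
    """Name the experimental condition that a pair of letters belongs to."""
    if first == second:
        return "physical identity"        # same shape, e.g. "A" and "A"
    if first.upper() == second.upper():
        return "phonetic identity"        # same letter, different case
    if category(first) == category(second):
        return f"same category ({category(first)}s)"
    return "different category"


def correct_response(first: str, second: str) -> str:
    """The button the participant should press for this pair."""
    is_different = classify_pair(first, second) == "different category"
    return "different" if is_different else "same"


if __name__ == "__main__":
    for pair in [("A", "A"), ("A", "a"), ("A", "U"), ("S", "C"), ("A", "S")]:
        print(pair, classify_pair(*pair), correct_response(*pair))
```

The order of the checks mirrors the inferred order of processing: a match at the physical level is tested before a match at the level of letter identity, and category membership is consulted last. Four of the five conditions map onto the same button press, which is why differences in reaction time among them are informative.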


FIGURE 3.2 Letter-matching task.

(a) Participants press one of two buttons to indicate if the letters are the same or different. The definition of “same” and “different” is manipulated across different blocks of the experiment. (b) The relationship between the two letters is plotted on the x-axis. This relationship is the independent variable, the variable that the experimenter is manipulating. Reaction time is plotted on the y-axis. It is the dependent variable, the variable that the experimenter is measuring.


As you may have experienced personally, experiments like these elicit as many questions as answers. Why do participants take longer to judge that two letters are consonants than they do to judge that two letters are vowels? Would the same advantage for identical stimuli exist if the letters were spoken? What about if one letter were visual and the other were auditory? Cognitive psychologists address questions like these and then devise methods for inferring the mind’s machinery from observable behaviors.

In the letter-matching task, the primary dependent variable was reaction (or response) time, the speed with which participants make their judgments. Reaction time experiments use the chronometric methodology. Chronometric comes from the Greek words chronos (“time”) and metron (“measure”). The chronometric study of the mind is essential for cognitive psychologists because mental events occur rapidly and efficiently. If we consider only whether a person is correct or incorrect on a task, we miss subtle differences in performance. Measuring reaction time permits a finer analysis of the brain’s internal processes.

Internal Transformations The second critical notion of cognitive psychology is that our mental representations undergo transformations. For instance, the transformation of mental representations is obvious when we consider how sensory signals are connected with stored information in memory. For example, a whiff of garlic may transport you to your grandmother’s house or to a back alley in Palermo, Italy. In this instance, an olfactory sensation has somehow been transformed by your brain, allowing this stimulus to call up a memory. Taking action often requires that perceptual representations be translated into action representations in order to achieve a goal. For example, you see and smell garlic bread on the table at dinner. These sensations are transformed into perceptual representations, which are then processed by the brain, allowing you to decide on a course of action and to carry it out—pick up the bread and place it in your mouth. Take note, though, that information processing is not simply a sequential process from sensation to perception to memory to action. Memory may alter how we perceive something. When you see a dog, do you reach out to pet it, perceiving it as cute, or do you draw back in fear, perceiving it as dangerous, having been bitten when you were a child? The manner in which information is processed is also subject to attentional constraints. Did you register that last sentence, or did all the talk about garlic cause your attention to wander as you made plans for dinner? Cognitive psychology is all about how we manipulate representations.

Characterizing Transformational Operations Suppose you arrive at the grocery store and discover that you forgot to bring your shopping list. You know for sure that you need coffee and milk, the main reason you came; but what else? As you cruise the aisles, scanning the shelves, you hope something will prompt your memory. Is the peanut butter gone? How many eggs are left?

This memory retrieval task draws on a number of cognitive capabilities. As we have just learned, the fundamental goal of cognitive psychology is to identify the different mental operations or transformations that are required to perform tasks such as this.

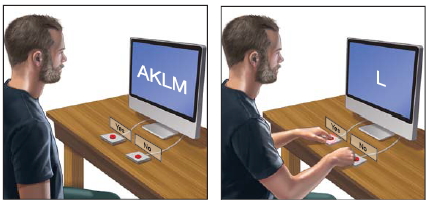

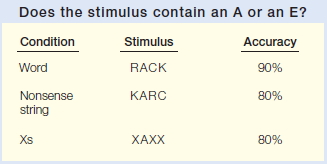

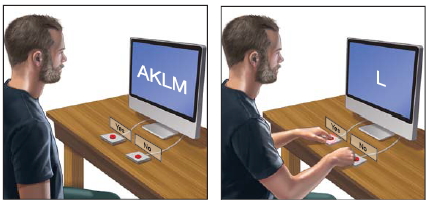

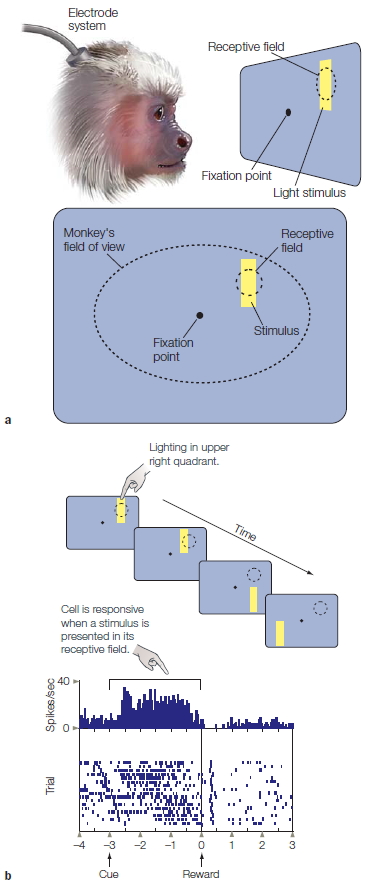

Saul Sternberg (1975) introduced an experimental task that bears some similarity to the problem faced by an absentminded shopper. In Sternberg’s task, however, the job is not recalling items stored in memory, but rather comparing sensory information with representations that are active in memory. In each trial, the participant is first presented with a set of letters to memorize (Figure 3.3a). The memory set could consist of one, two, or four letters. Then a single letter is presented, and the participant must decide if this letter was part of the memorized set. The participant presses one button to indicate that the target was part of the memory set (“yes” response) and a second button to indicate that the target was not part of the set (“no” response). Once again, the primary dependent variable is reaction time.

Sternberg postulated that, to respond on this task, the participant must engage in four primary mental operations:

1. Encode. The participant must identify the visible target.

2. Compare. The participant must compare the mental representation of the target with the representations of the items in memory.

3. Decide. The participant must decide whether the target matches one of the memorized items.

4. Respond. The participant must respond appropriately for the decision made in step 3.

By postulating a set of mental operations, we can devise experiments to explore how these putative mental operations are carried out.

A basic question for Sternberg was how to characterize the efficiency of recognition memory. Assuming that all items in the memory set are actively represented, the recognition process might work in one of two ways: A highly efficient system might simultaneously compare a representation of the target with all of the items in the memory set. On the other hand, the recognition process might be able to handle only a limited amount of information at any point in time. For example, it might require that each item in memory be compared successively to a mental representation of the target.


FIGURE 3.3 Memory comparison task.

(a) The participant is presented with a set of one, two, or four letters and asked to memorize them. After a delay, a single probe letter appears, and the participant indicates whether that letter was a member of the memory set. (b) Reaction time increases with set size, indicating that the target letter must be compared with the memory set sequentially rather than in parallel.


Sternberg realized that the reaction time data could distinguish between these two alternatives. If the comparison process can be simultaneous for all items (what is called a parallel process), then reaction time should be independent of the number of items in the memory set. But if the comparison process operates in a sequential, or serial, manner, then reaction time should increase as the memory set becomes larger, because more time is required to compare an item with a large memory list than with a small memory list. Sternberg’s results convincingly supported the serial hypothesis. In fact, reaction time increased in a constant, or linear, manner with set size, and the functions for the “yes” and “no” trials were essentially identical (Figure 3.3b).
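The contrast between the two hypotheses can be made concrete with a short Python sketch. It is a simplified illustration under stated assumptions, not a reconstruction of Sternberg's analysis: the function names are hypothetical, and the values of 400 ms for the combined encode, decide, and respond stages and 40 ms per comparison are made up for the example. The serial model predicts a line whose slope is the time per comparison; the parallel model predicts a flat line.

```python
def predicted_rt_serial(set_size: int, base_ms: float, per_item_ms: float) -> float:
    """Serial model: one comparison per memorized item, carried out in sequence."""
    return base_ms + per_item_ms * set_size


def predicted_rt_parallel(set_size: int, base_ms: float, per_item_ms: float) -> float:
    """Parallel model: all comparisons occur at once, so set size does not matter."""
    return base_ms + per_item_ms


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


SET_SIZES = [1, 2, 4]                 # memory set sizes used in the task
BASE_MS, PER_ITEM_MS = 400.0, 40.0    # illustrative values, not measured data

serial_rts = [predicted_rt_serial(n, BASE_MS, PER_ITEM_MS) for n in SET_SIZES]
parallel_rts = [predicted_rt_parallel(n, BASE_MS, PER_ITEM_MS) for n in SET_SIZES]

serial_slope, serial_intercept = fit_line(SET_SIZES, serial_rts)
parallel_slope, parallel_intercept = fit_line(SET_SIZES, parallel_rts)

if __name__ == "__main__":
    print(f"serial:   slope = {serial_slope:.1f} ms/item")
    print(f"parallel: slope = {parallel_slope:.1f} ms/item")
```

Applied to real data, the same fit gives a slope that estimates the time needed for a single comparison, and an intercept that estimates the combined duration of the stages that do not depend on set size.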

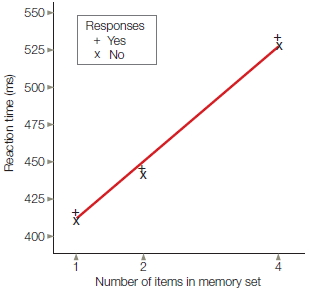

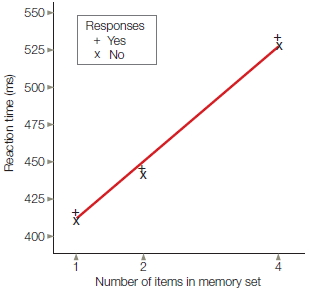

FIGURE 3.4 Word superiority effect.

Participants are more accurate in identifying the target vowel when it is embedded in a word. This result suggests that letter and word levels of representation are activated in parallel.

Although memory comparison appears to involve a serial process, much of the activity in our mind operates in parallel. A classic demonstration of parallel processing is the word superiority effect (Reicher, 1969). In this experiment, a stimulus is shown briefly and participants are asked which of two target letters (e.g., A or E) was presented. The stimuli can be composed of words, nonsense letter strings, or letter strings in which every letter is an X except for the target letter (Figure 3.4). Brief presentation times are used so that errors will be observed, because the critical question centers on whether context affects performance. The word superiority effect (see Figure 3.4 caption) refers to the fact that participants are most accurate in identifying the target letter when the stimuli are words. As we saw earlier, this finding suggests that we do not need to identify all the letters of a word before we recognize the word. Rather, when we are reading a list of words, representations corresponding to the individual letters and to the entire word are activated in parallel for each item. Performance is facilitated because both representations can provide information as to whether the target letter is present.

Constraints on Information Processing

In the memory search experiment, participants are not able to compare the target item to all items in the memory set simultaneously. That is, their processing ability is constrained. Whenever a constraint is identified, an important question to ask is whether the constraint is specific to the system that you are investigating (in this case, memory) or if it is a more general processing constraint. Obviously, people can do only a certain amount of internal processing at any one time, but we also experience task-specific constraints. Processing constraints are defined only by the particular set of mental operations associated with a particular task. For example, although the comparison (item 2 in Sternberg’s list) of a probe item to the memory set might require a serial operation, the task of encoding (item 1 in Sternberg’s list) might occur in parallel, so it would not matter whether the probe was presented by itself or among a noisy array of competing stimuli.

Exploring the limitations in task performance is a central concern for cognitive psychologists. Consider a simple color-naming task—devised in the early 1930s by J. R. Stroop, an aspiring doctoral student (1935; for a review, see MacLeod, 1991)—that has become one of the most widely employed tasks in all of cognitive psychology. We will refer to this task many times in this book. The Stroop task involves presenting the participant with a list of words and then asking her to name the color of each word as fast as possible. As Figure 3.5 illustrates, this task is much easier when the words match the ink colors.

FIGURE 3.5 Stroop task.

Time yourself as you work through each column, naming the color of the ink of each stimulus as fast as possible. Assuming that you do not squint to blur the words, it should be easy to read the first and second columns but quite difficult to read the third.

The Stroop effect powerfully demonstrates the multiplicity of mental representations. The stimuli in this task appear to activate at least two separable representations. One representation corresponds to the color of each stimulus; it is what allows the participant to perform the task. The second representation corresponds to the color concept associated with each word. Participants are slower to name the colors when the ink color and words are mismatched, thus indicating that the second representation is activated, even though it is irrelevant to the task. Indeed, the activation of a representation based on the word rather than the color of the word appears to be automatic.
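The size of the interference is usually summarized as a difference between mean color-naming times. The Python sketch below shows that calculation under loud assumptions: the trial data are invented for illustration, and the names `is_congruent` and `stroop_interference` are hypothetical.

```python
from statistics import mean

# Hypothetical trials: (printed word, ink color, color-naming time in ms).
# These numbers are made up for illustration and are not experimental data.
TRIALS = [
    ("RED", "red", 610),
    ("GREEN", "green", 590),
    ("BLUE", "blue", 600),
    ("RED", "green", 720),
    ("GREEN", "blue", 750),
    ("BLUE", "red", 690),
]


def is_congruent(word: str, ink: str) -> bool:
    """A trial is congruent when the word names its own ink color."""
    return word.lower() == ink.lower()


def stroop_interference(trials) -> float:
    """Mean naming time on mismatched trials minus mean time on matched trials."""
    congruent = [rt for word, ink, rt in trials if is_congruent(word, ink)]
    incongruent = [rt for word, ink, rt in trials if not is_congruent(word, ink)]
    return mean(incongruent) - mean(congruent)


if __name__ == "__main__":
    print(f"Interference: {stroop_interference(TRIALS)} ms")
```

A positive difference indicates that the irrelevant word slowed color naming, which is the signature of the automatically activated word-based representation.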

The Stroop effect persists even after thousands of trials of practice, because skilled readers have years of practice in analyzing letter strings for their symbolic meaning. On the other hand, the interference from the words is markedly reduced if the response requires a key press rather than a vocal response. Thus, the word-based representations are closely linked to the vocal response system and have little effect when the responses are produced manually.

TAKE-HOME MESSAGES

- Cognitive psychology focuses on understanding how objects or ideas are represented in the brain and how these representations are manipulated.

- Fundamental goals of cognitive psychology include identifying the mental operations that are required to perform cognitive tasks and exploring the limitations in task performance.

Studying the Damaged Brain

An integral part of cognitive neuroscience research methodology is choosing the population to be studied. Study populations fall into four broad groups: animals and humans that are neurologically intact, and animals and humans in which the neurological system is abnormal, either as a result of an illness or a disorder, or as a result of experimental manipulation. The population a researcher picks to study depends, at least in part, on the questions being asked. We begin this section with a discussion of the major natural causes of brain dysfunction. Then we consider the different study populations, their limitations, and the methods used with each group.

Causes of Neurological Dysfunction

Nature has sought to ensure that the brain remains healthy. Structurally, the skull provides a thick, protective encasement, engendering such comments as “hardheaded” and “thick as a brick.” The distribution of arteries is extensive, ensuring an adequate blood supply. Even so, the brain is subject to many disorders, and their rapid treatment is frequently essential to reduce the possibility of chronic, debilitating problems or death. We discuss some of the more common types of disorders.

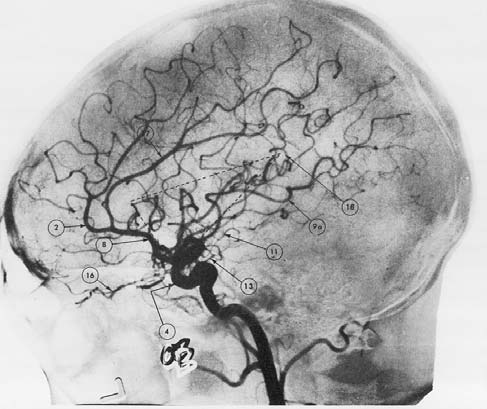

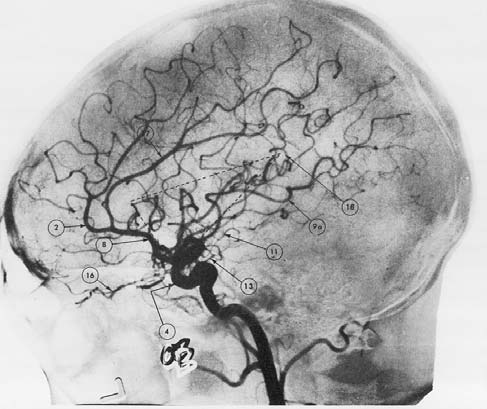

Vascular Disorders As with all other tissue, neurons need a steady supply of oxygen and glucose. These substances are essential for the cells to produce energy, fire action potentials, and make transmitters for neural communication. The brain, however, is a hog. It uses 20% of all the oxygen we breathe, an extraordinary amount considering that it accounts for only 2% of the total body mass. What’s more, a continuous supply of oxygen is essential: A loss of oxygen for as little as 10 minutes can result in neural death. Angiography is a clinical imaging method used to evaluate the circulatory system in the brain and diagnose disruptions in circulation. As Figure 3.6 shows, this method helps us visualize the distribution of blood by highlighting major arteries and veins. A dye is injected into the vertebral or carotid artery and then an X-ray study is conducted.

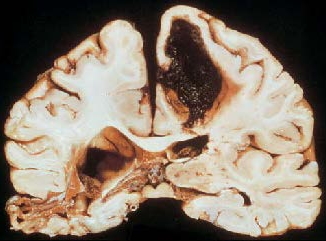

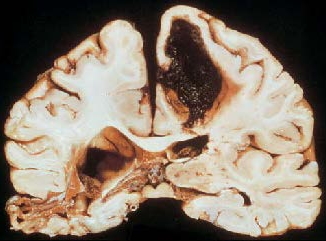

Cerebral vascular accidents, or strokes, occur when blood flow to the brain is suddenly disrupted. The most frequent cause of stroke is occlusion of the normal passage of blood by a foreign substance. Over years, atherosclerosis, the buildup of fatty tissue, occurs in the arteries. This tissue can break free, becoming an embolus that is carried off in the bloodstream. An embolus that enters the cranium may easily pass through the large carotid or vertebral arteries. As the arteries and capillaries reach the end of their distribution, however, their size decreases. Eventually, the embolus becomes stuck, or infarcted, blocking the flow of blood and depriving all downstream tissue of oxygen and glucose. Within a short time, this tissue will become dysfunctional. If the blood flow is not rapidly restored, the cells will die (Figure 3.7a).

FIGURE 3.6 The brain’s blood supply.

The angiogram provides an image of the arteries in the brain.

The onset of stroke can be quite varied, depending on the afflicted area. Sometimes the person may lose consciousness and die within minutes. In such cases the infarct is usually in the vicinity of the brainstem. When the infarct is cortical, the presenting symptoms may be striking, such as sudden loss of speech and comprehension. In other cases, the onset may be rather subtle. The person may report a mild headache or feel clumsy in using one of his or her hands. The vascular system is fairly consistent between individuals; thus, stroke of a particular artery typically leads to destruction of tissue in a consistent anatomical location. For example, occlusion of the posterior cerebral artery invariably leads to deficits in visual perception.

There are many other types of cerebral vascular disorders. Ischemia can be caused by partial occlusion of an artery or a capillary due to an embolus, or it can arise from a sudden drop in blood pressure that prevents blood from reaching the brain. A sudden rise in blood pressure can lead to cerebral hemorrhage (Figure 3.7b), or bleeding over a wide area of the brain due to the breakage of blood vessels. Spasms in the vessels can result in irregular blood flow and have been associated with migraine headaches.

Other disorders are due to problems in arterial structures. Cerebral arteriosclerosis is a chronic condition in which cerebral blood vessels become narrow because of thickening and hardening of the arteries. The result can be persistent ischemia. More acute situations can arise if a person has an aneurysm, a weak spot or distention in a blood vessel. An aneurysm may suddenly expand or even burst, causing a rapid disruption of the blood circulation.

Tumors Brain lesions also can result from tumors. A tumor, or neoplasm, is a mass of tissue that grows abnormally and has no physiological function. Brain tumors are relatively common; most originate in glial cells and other supporting white matter tissues. Tumors also can develop from gray matter or neurons, but these are much less common, particularly in adults. Tumors are classified as benign when they do not recur after removal and tend to remain in the area of their germination (although they can become quite large). Malignant, or cancerous, tumors are likely to recur after removal and are often distributed over several different areas. With brain tumors, the first concern is not usually whether the tumor is benign or malignant, but rather its location and prognosis. Concern is greatest when the tumor threatens critical neural structures. Neurons can be destroyed by an infiltrating tumor or become dysfunctional as a result of displacement by the tumor.

FIGURE 3.7 Vascular disorders of the brain.

(a) Strokes occur when blood flow to the brain is disrupted. This brain is from a person who had an occlusion of the middle cerebral artery. The person survived the stroke. After death, a postmortem analysis shows that almost all of the tissue supplied by this artery had died and been absorbed. (b) Coronal section of a brain from a person who died following a cerebral hemorrhage. The hemorrhage destroyed the dorsomedial region of the left hemisphere. The effects of a cerebrovascular accident 2 years before death can be seen in the temporal region of the right hemisphere.

Degenerative And Infectious Disorders Many neurological disorders result from progressive disease. Table 3.1 lists some of the more prominent degenerative and infectious disorders. In later chapters, we will review some of these disorders in detail, exploring the cognitive problems associated with them and how these problems relate to underlying neuropathologies. Here, we focus on the etiology and clinical diagnosis of degenerative disorders.

Degenerative disorders have been associated with both genetic aberrations and environmental agents. A prime example of a degenerative disorder that is genetic in origin is Huntington’s disease. The genetic link in degenerative disorders such as Parkinson’s disease and Alzheimer’s disease is weaker. Environmental factors are suspected to be important, perhaps in combination with genetic predispositions.

|

table 3.1 Prominent Degenerative and Infectious Disorders of the Central Nervous System

|

|

Disorder

|

Type

|

Most Common Pathology

|

| Alzheimer’s disease | Degenerative | Tangles and plaques in limbic and temporoparietal cortex |

| Parkinson’s disease | Degenerative | Loss of dopaminergic neurons |

| Huntington’s disease | Degenerative | Atrophy of interneurons in caudate and putamen nuclei of basal ganglia |

| Pick’s disease | Degenerative | Frontotemporal atrophy |

| Progressive supranuclear palsy (PSP) | Degenerative | Atrophy of brainstem, including colliculus |

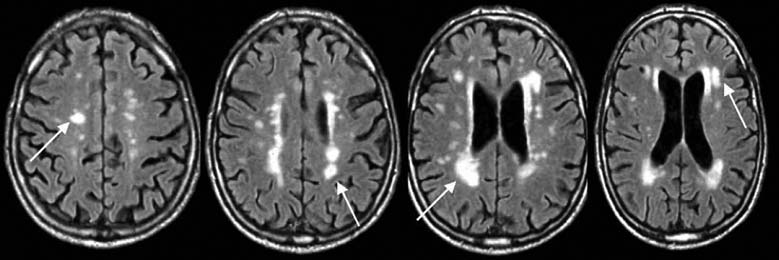

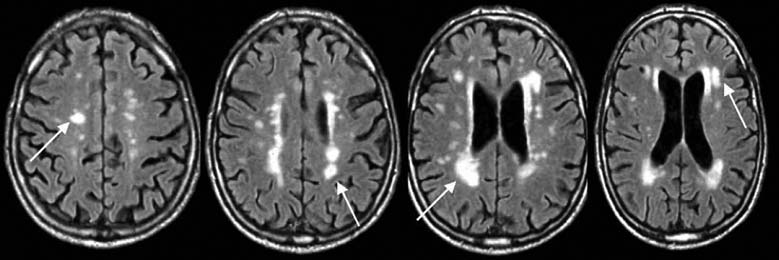

| Multiple sclerosis | Possibly infectious | Demyelination, especially of fibers near ventricles |

| AIDS dementia | Viral infection | Diffuse white matter lesions |

| Herpes simplex | Viral infection | Destruction of neurons in temporal and limbic regions |

| Korsakoff’s syndrome | Nutritional deficiency | Destruction of neurons in diencephalon and temporal lobes |

|

|

|

a

|

b

|

|

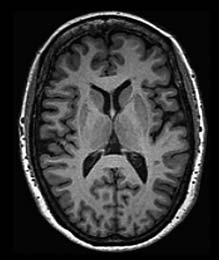

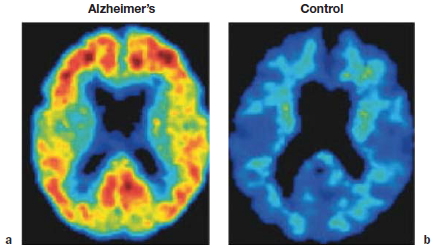

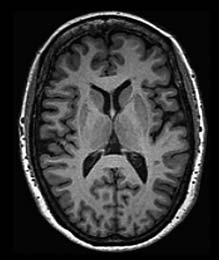

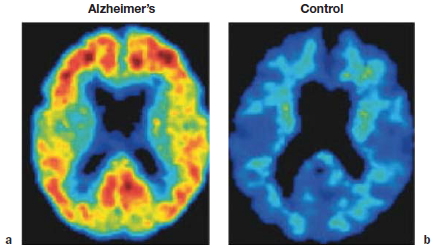

FIGURE 3.8 Degenerative disorders of the brain.

(a) Normal brain of a 60-year-old male. (b) Axial slices at four sections of the brain in a 79-year-old male with Alzheimer’s disease. Arrows show growth of white matter lesions.

|

Although neurologists were able to develop a taxonomy of degenerative disorders before the development of neuroimaging methods, diagnosis today is usually confirmed by MRI scans. The primary pathology resulting from Huntington’s disease or Parkinson’s disease is observed in the basal ganglia, a subcortical structure that figures prominently in the motor pathways (see Chapter 8). In contrast, Alzheimer’s disease is associated with marked atrophy of the cerebral cortex (Figure 3.8).

Progressive neurological disorders can also be caused by viruses. The human immunodeficiency virus (HIV) that causes dementia related to acquired immunodeficiency syndrome (AIDS) has a tendency to lodge in subcortical regions of the brain, producing diffuse lesions of the white matter by destroying axonal fibers. The herpes simplex virus, on the other hand, destroys neurons in cortical and limbic structures if it migrates to the brain. Viral infection is also suspected in multiple sclerosis, although evidence for such a link is indirect, coming from epidemiological studies. For example, the incidence of multiple sclerosis is highest in temperate climates, and some isolated tropical islands had not experienced multiple sclerosis until the population came in contact with Western visitors.

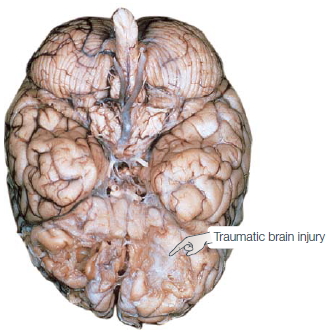

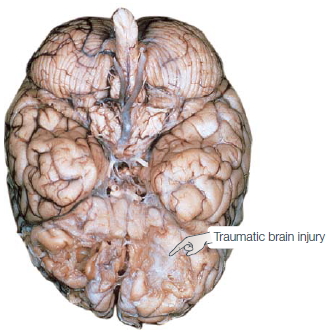

Traumatic Brain Injury Most patients arrive on a neurology ward not because of a disease such as stroke or tumor, but because of a traumatic event such as a car accident, a gunshot wound, or an ill-advised dive into a shallow swimming hole. Traumatic brain injury (TBI) can result from either a closed or an open head injury. In closed head injuries, the skull remains intact, but mechanical forces generated by a blow to the head damage the brain. Common causes of closed head injuries are car accidents and falls, although researchers are now recognizing that closed head TBI can be prevalent in people who have been near a bomb blast or who participate in contact sports. The damage may be at the site of the blow (for example, just below the forehead), in which case it is referred to as a coup. Reactive forces may also bounce the brain against the skull on the opposite side of the head, resulting in a countercoup. Certain regions are especially sensitive to the effects of coups and countercoups. The inside surface of the skull is markedly jagged above the eye sockets, and, as Figure 3.9 shows, this rough surface can produce extensive tearing of brain tissue in the orbitofrontal region.

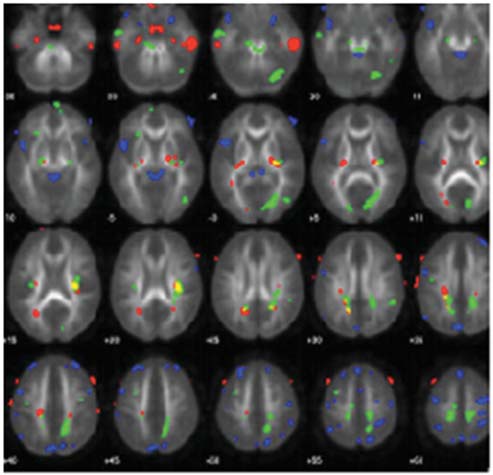

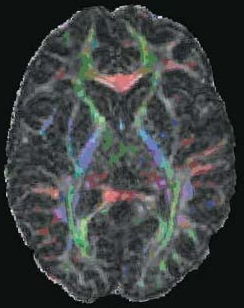

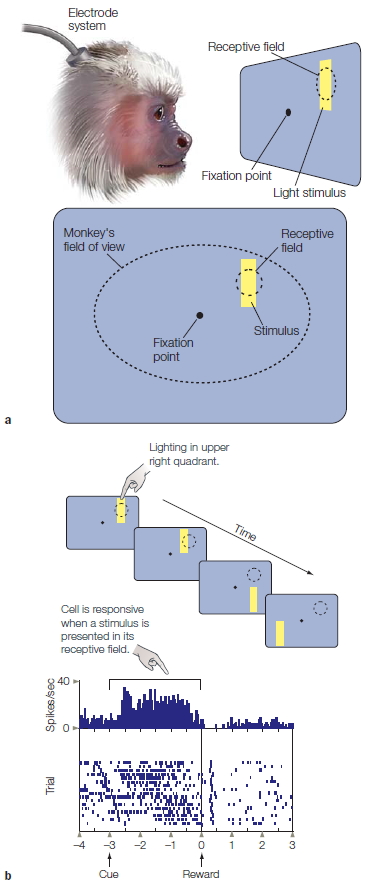

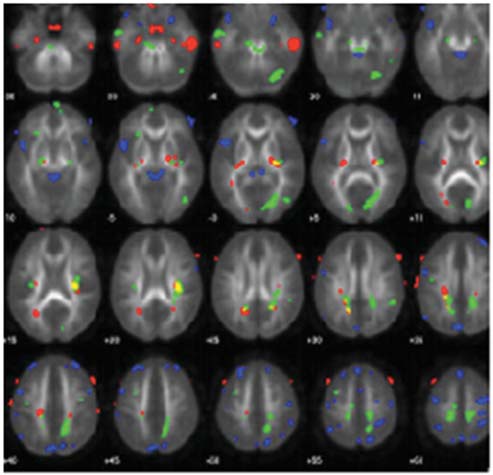

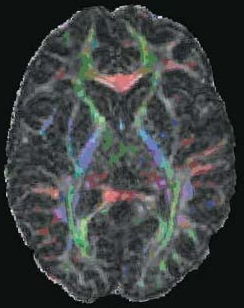

An imaging method, diffusion tensor imaging (discussed later in the chapter), can be used to identify anatomical damage that can result from TBI. For example, using this method, researchers have shown that professional boxers have sustained damage in white matter tracts, even if they never had a major traumatic event (Chappell et al., 2006; Figure 3.10). Similarly, evidence is mounting that the repeated concussions suffered by football and soccer players may cause changes in neural connectivity that produce chronic cognitive problems (Shi et al., 2009).

Open head injuries happen when an object like a bullet or shrapnel penetrates the skull. With these injuries, the penetrating object may directly damage brain tissue, and the impact of the object can also create reactive forces producing coup and countercoup.

Additional damage can follow a traumatic event as a result of vascular problems and increased risk of infection. Trauma can disrupt blood flow by severing vessels, or it can change intracranial pressure as a result of bleeding. People who have experienced a TBI are also at increased risk for seizure, further complicating their recovery.

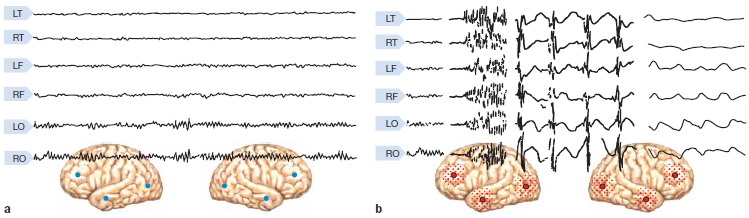

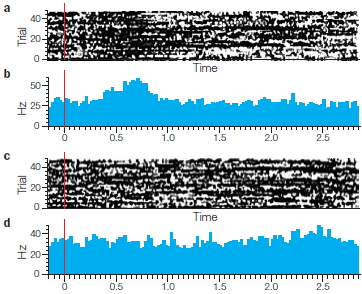

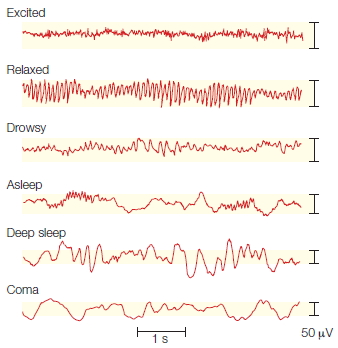

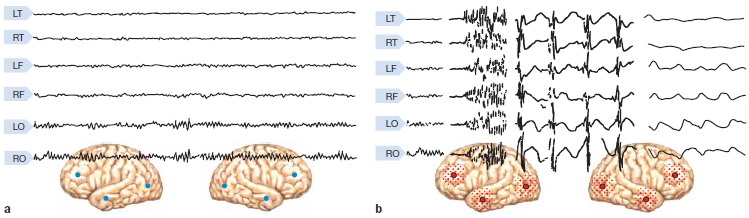

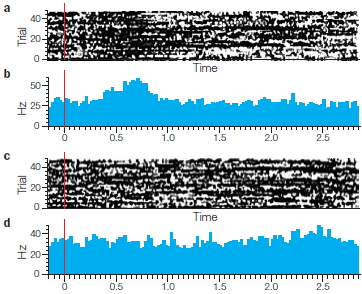

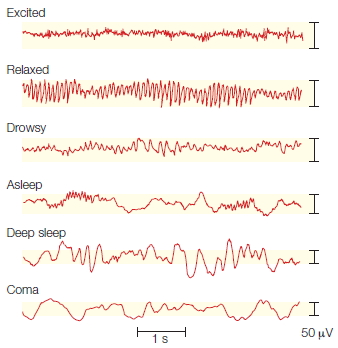

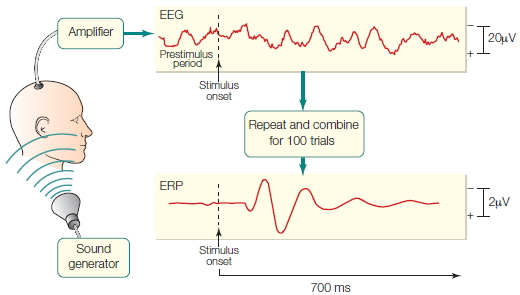

Epilepsy Epilepsy is a condition characterized by excessive and abnormally patterned activity in the brain. The cardinal symptom is a seizure, a transient loss of consciousness. The extent of other disturbances varies. Some epileptics shake violently and lose their balance. For others, seizures may be perceptible only to the most attentive friends and family. Seizures are confirmed by electroencephalography (EEG). During the seizure, the EEG profile is marked by large-amplitude oscillations (Figure 3.11).

FIGURE 3.9 Traumatic brain injury.

Trauma can cause extensive destruction of neural tissue. Damage can arise from the collision of the brain with the solid internal surface of the skull, especially along the jagged surface over the orbital region. In addition, accelerative forces created by the impact can cause extensive shearing of dendritic arbors. (a) In this brain of a 54-year-old man who had sustained a severe head injury 24 years before death, tissue damage is evident in the orbitofrontal regions and was associated with intellectual deterioration subsequent to the injury. (b) The susceptibility of the orbitofrontal region to trauma was made clear by A. Holbourn of Oxford, who in 1943 filled a skull with gelatin and then violently rotated the skull. Although most of the brain retains its smooth appearance, the orbitofrontal region has been chewed up.

The frequency of seizures is highly variable. The most severely affected patients have hundreds of seizures each day, and each seizure can disrupt function for a few minutes. Other epileptics suffer only an occasional seizure, but it may incapacitate the person for a couple of hours. Simply having a seizure, however, does not mean a person has epilepsy. Although 0.5% of the general population has epilepsy, it is estimated that 5% of people will have a seizure at some point during life, usually triggered by an acute event such as trauma, exposure to toxic chemicals, or high fever.

FIGURE 3.10 Sports-related TBI.

Colored regions show white matter tracts that are abnormal in the brains of professional boxers.

TAKE-HOME MESSAGES

- Brain lesions, either naturally occurring (in humans) or experimentally derived (in animals), allow experimenters to test hypotheses concerning the functional role of the damaged brain region.

- Cerebral vascular accidents, or strokes, occur when blood flow to the brain is suddenly disrupted. Angiography is used to evaluate the circulatory system in the brain.

- Tumors can cause neurological symptoms either by damaging neural tissue or by producing abnormal pressure on spared cortex and cutting off its blood supply.

- Degenerative disorders include Huntington’s disease, Parkinson’s disease, Alzheimer’s disease, and AIDS-related dementia.

- Neurological trauma can result in damage at the site of the blow (coup) or at the site opposite the blow because of reactive forces (countercoup). Certain brain regions such as the orbitofrontal cortex are especially prone to damage from trauma.

- Epilepsy is characterized by excessive and abnormally patterned activity in the brain.

FIGURE 3.11 Electrical activity in a normal and epileptic brain.

Electroencephalographic recordings from six electrodes, positioned over the temporal (T), frontal (F), and occipital (O) cortex on both the left (L) and the right (R) sides. (a) Activity during normal cerebral activity. (b) Activity during a grand mal seizure.

Studying Brain–Behavior Relationships Following Neural Disruption

The logic of using participants with brain lesions is straightforward. If a neural structure contributes to a task, then a structure that is dysfunctional through either surgical intervention or natural causes should impair performance of that task. Lesion studies have provided key insights into the relationship between brain and behavior. Fundamental concepts, such as the left hemisphere’s dominant role in language or the dependence of visual functions on posterior cortical regions, were developed by observing the effects of brain injury. This area of research was referred to as behavioral neurology, the province of physicians who chose to specialize in the study of diseases and disorders that affect the structure and function of the nervous system.

Studies of human participants with neurological dysfunction have historically been hampered by limited information on the extent and location of the lesion. Two developments in the past half-century, however, have led to significant advances in the study of neurological patients. First, with neuroimaging methods such as computed tomography and magnetic resonance imaging, we can precisely localize brain injury in vivo. Second, the paradigms of cognitive psychology have provided the tools for making more sophisticated analyses of the behavioral deficits observed following brain injury. Early work focused on localizing complex tasks such as language, vision, executive control, and motor programming. Since then, the cognitive revolution has shaken things up. We know that these complex tasks require integrated processing of component operations that involve many different regions of the brain. By testing patients with brain injuries, researchers have been able to link these operations to specific brain structures, as well as make inferences about the component operations that underlie normal cognitive performance.

The lesion method has a long tradition in research involving laboratory animals, in large part because the experimenter can control the location and extent of the lesion. Over the years, surgical and chemical lesioning techniques have been refined, allowing for ever greater precision. Most notable are neurochemical lesions. For instance, systemic injection of 1-methyl-4-phenyl-1,2,3,6-tetrahydropyridine (MPTP) destroys dopaminergic cells in the substantia nigra, producing an animal version of Parkinson’s disease (see Chapter 8). Other chemicals have reversible effects, allowing researchers to produce a transient disruption in nerve conductivity. As long as the drug is active, the exposed neurons do not function. When the drug wears off, function gradually returns. The appeal of this method is that each animal can serve as its own control. Performance can be compared during the “lesion” and “nonlesion” periods. We will discuss this work further when we address pharmacological methods.
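The within-animal comparison described above can be sketched in a few lines of Python. This is only an illustration: the function name `paired_lesion_effect` and the accuracy scores in the usage note are hypothetical, and a paired t statistic is one common way to summarize such data, not a method attributed to any particular study.

```python
import math
from statistics import mean, stdev

def paired_lesion_effect(nonlesion, lesion):
    """Compare each animal's score with and without the reversible "lesion".

    Both arguments are lists of scores, one per animal, in the same order.
    Returns the mean within-animal difference and a paired t statistic.
    """
    if len(nonlesion) != len(lesion) or len(lesion) < 2:
        raise ValueError("need matched scores from at least two animals")
    # Each animal serves as its own control: subtract its lesion score
    # from its nonlesion score.
    diffs = [a - b for a, b in zip(nonlesion, lesion)]
    d_mean = mean(diffs)
    sd = stdev(diffs)
    if sd == 0:
        # Every animal changed by exactly the same amount.
        t = 0.0 if d_mean == 0 else math.copysign(math.inf, d_mean)
    else:
        t = d_mean / (sd / math.sqrt(len(diffs)))
    return d_mean, t
```

For example, with made-up accuracy scores for four animals, `paired_lesion_effect([0.9, 0.85, 0.95, 0.88], [0.6, 0.55, 0.7, 0.58])` returns a positive mean difference, indicating poorer performance while the drug is active.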

There are some limitations in using animals as models for human brain function. Although humans and many animals have some similar brain structures and functions, there are notable differences. Because homologous structures do not always have homologous functions, broad generalizations and conclusions are suspect. As neuroanatomist Todd Preuss (2001) put it:

The discovery of cortical diversity could not be more inconvenient. For neuroscientists, the fact of diversity means that broad generalizations about cortical organization based on studies of a few “model” species, such as rats and rhesus macaques, are built on weak foundations.

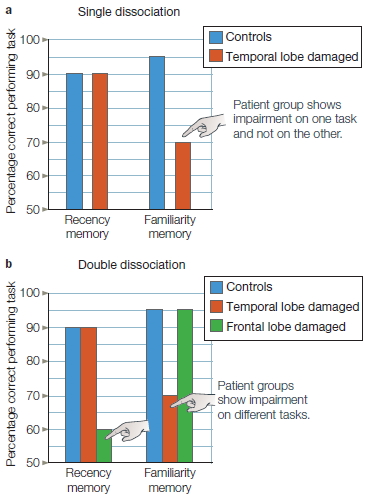

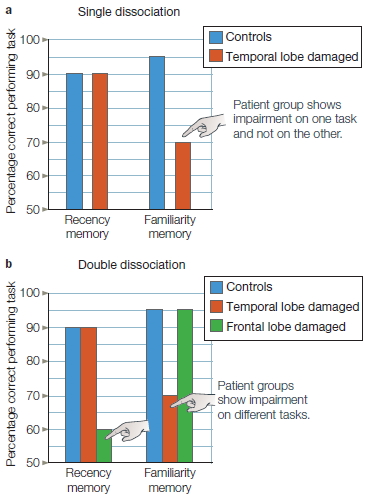

Consider a study designed to explore the relationship of two aspects of memory: when we learned something and how familiar it is. The study might be designed around the following questions: Is familiarity dependent on our knowledge of when we learned something? Do these two aspects of memory depend on the same brain structures? The working hypothesis could be that these two aspects of memory are separable, and that each is associated with a particular region of the brain. A researcher designs two memory tests: one to look at memory of when information was acquired—“Do you remember when you learned that the World Trade Center Towers had been attacked?” and the second to look at familiarity—“What events occurred and in what order?”

Assuming that the study participants were selectively impaired on only one of the two memory tests, our researcher would have observed a single dissociation (Figure 1a). In a single dissociation study, when two groups are each tested on two tasks, a between-group difference is apparent in only one task. Two groups are necessary so that the participants’ performance can be compared with that of a control group. Two tasks are necessary to examine whether a deficit is specific to a particular task or reflects a more general impairment. Many conclusions in neuropsychology are based on single dissociations. For example, compared to control participants, patients with hippocampal lesions cannot develop long-term memories even though their short-term memory is intact. In a separate example, patients with Broca’s aphasia have intact comprehension but struggle to speak fluently.

Single dissociations have unavoidable problems. In particular, although the two tasks are assumed to be equally sensitive to differences between the control and experimental groups, often this is not the case. One task may be more sensitive than the other because of differences in task difficulty or sensitivity problems in how the measurements are obtained. For example, a task that measures familiarity might require a greater degree of concentration than the one that measures when a memory was learned. If the experimental group has a brain injury, it may have produced a generalized problem in concentration and the patient may have difficulty with the more demanding task. The problem, however, would not be due to a specific memory problem.

A double dissociation identifies whether two cognitive functions are independent of each other, something that a single dissociation cannot do. In a double dissociation, group 1 is impaired on task X (but not task Y) and group 2 is impaired on task Y (but not task X; Figure 1b). Either the performances of the two groups are compared to each other, or more commonly, the patient groups are compared with a control group that shows no impairment in either task. With a double dissociation, it is no longer reasonable to argue that a difference in performance results merely from the unequal sensitivity of the two tasks. In our memory example, the claim that one group has a selective problem with familiarity would be greatly strengthened if it were shown that a second group of patients showed selective impairment on the temporal-order task. Double dissociations offer the strongest neuropsychological evidence that a patient or patient group has a selective deficit in a certain cognitive operation.

FIGURE 1 Single and double dissociations.

(a) In the single dissociation, the patient group shows impairment on one task and not on the other. (b) In the double dissociation, one patient group shows impairment on one task, and a second patient group shows impairment on the other task. Double dissociations provide much stronger evidence for a selective impairment.
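The logic of single and double dissociations can be made concrete with a short Python sketch. Everything here is an illustrative assumption: the function names, the score dictionaries, and especially the impairment rule (a patient mean below 80% of the control mean), which stands in for the statistical tests a real study would use.

```python
from statistics import mean

def impaired(patient_scores, control_scores, threshold=0.8):
    """Crude impairment flag: patient mean below a fraction of the control mean.

    The 0.8 threshold is arbitrary and only for illustration.
    """
    return mean(patient_scores) < threshold * mean(control_scores)

def classify_dissociation(group1, group2, controls):
    """Classify the outcome of a two-group, two-task design.

    Each argument maps the task names "X" and "Y" to a list of scores.
    """
    g1 = {task: impaired(group1[task], controls[task]) for task in ("X", "Y")}
    g2 = {task: impaired(group2[task], controls[task]) for task in ("X", "Y")}
    # Double dissociation: the two groups show opposite selective deficits.
    if g1["X"] and not g1["Y"] and g2["Y"] and not g2["X"]:
        return "double dissociation"
    if g1["Y"] and not g1["X"] and g2["X"] and not g2["Y"]:
        return "double dissociation"
    # Single dissociation: at least one group is impaired on only one task.
    if g1["X"] != g1["Y"] or g2["X"] != g2["Y"]:
        return "single dissociation"
    return "no dissociation"
```

In this sketch, a group that is impaired on both tasks never counts as showing a dissociation, which mirrors the concern that a general impairment (for example, in concentration) can masquerade as a specific deficit.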

In both human and animal studies, the lesion approach itself has limitations. For naturally occurring lesions associated with strokes or tumors, there is considerable variability among patients. Moreover, researchers cannot be confident that the effect of a lesion eliminates the contribution of only a single structure. The function of neural regions that are connected to the lesioned area might also be altered, either because they are deprived of their normal neural input or because their axons fail to make normal synaptic connections. The lesion might also cause the individual to develop a compensatory strategy to minimize the consequences of the lesion. For example, when monkeys are deprived of sensory feedback to one arm, they stop using the limb. However, if the sensory feedback to the other arm is eliminated later, the animals begin to use both limbs (Taub & Berman, 1968). The monkeys prefer to use a limb that has normal sensation, but the second surgery shows that they could indeed use the compromised limb.

The Lesion Approach in Humans Two methodological approaches are available when choosing a study population of participants with brain dysfunction. Researchers can either pick a population with similar anatomical lesions or assemble a population with a similar behavioral deficit. The choice will depend, among other things, on the question being asked. In the box “The Cognitive Neuroscientist’s Toolkit: Study Design,” we consider two possible experimental outcomes that might be obtained in neuropsychological studies, the single and double dissociation. Either outcome can be useful for developing functional models that inform our understanding of cognition and brain function. We also consider in that box the advantages and disadvantages of conducting such studies on an individual basis or by using groups of patients with similar lesions.

Lesion studies rest on the assumption that brain injury is eliminative: that brain injury disturbs or eliminates the processing ability of the affected structure. Consider this example. Suppose that damage to brain region A results in impaired performance on task X. One conclusion is that region A contributes to the processing required for task X. For example, if task X is reading, we might conclude that region A is critical for reading. But from cognitive psychology, we know that a complex task like reading has many component operations: fonts must be perceived, letters and letter strings must activate representations of their corresponding meanings, and syntactic operations must link individual words into a coherent stream. By merely testing reading ability, we will not know which component operation or operations are impaired when there are lesions to region A. What the cognitive neuropsychologist wants to do is design tasks that will be able to test specific hypotheses about brain–function relationships. If a reading problem stems from a general perceptual problem, then comparable deficits should be seen on a range of tests of visual perception. If the problem reflects the loss of semantic knowledge, then the deficit should be limited to tasks that require some form of object identification or recognition.

Associating neural structures with specific processing operations calls for appropriate control conditions. The most basic control is to compare the performance of a patient or group of patients with that of healthy participants. Poorer performance by the patients might be taken as evidence that the affected brain regions are involved in the task. Thus, if a group of patients with lesions in the frontal cortex showed impairment on our reading task, we might suppose that this region of the brain was critical for reading. Keep in mind, however, that brain injury can produce widespread changes in cognitive abilities. Besides having trouble reading, the frontal lobe patient might also demonstrate impairment on other tasks, such as problem solving, memory, or motor planning. Thus the challenge for the cognitive neuroscientist is to determine whether the observed behavioral problem results from damage to a particular mental operation or is secondary to a more general disturbance. For example, many patients are depressed after a neurological disturbance such as a stroke, and depression is known to affect performance on a wide range of tasks.

Functional Neurosurgery: Intervention to Alter or Restore Brain Function

Surgical interventions for treating neurological disorders provide a unique opportunity to investigate the link between brain and behavior. The best example comes from research involving patients who have undergone surgical treatment for the control of intractable epilepsy. The extent of tissue removal is always well documented, enabling researchers to investigate correlations between lesion site and cognitive deficits. But caution must be exercised in attributing cognitive deficits to surgically induced lesions. Because the seizures have spread beyond the epileptogenic tissue, other structurally intact tissue may be dysfunctional owing to the chronic effects of epilepsy. One method used with epilepsy patients compares their performance before and after surgery. The researcher can differentiate changes associated with the surgery from those associated with the epilepsy. An especially fruitful paradigm for cognitive neuroscience has involved the study of patients who have had the fibers of the corpus callosum severed. In these patients, the two hemispheres have been disconnected—a procedure referred to as a callosotomy operation or, more informally, the split-brain procedure. The relatively few patients who have had this procedure have been studied extensively, providing many insights into the roles of the two hemispheres on a wide range of cognitive tasks. These studies are discussed more extensively in Chapter 4.

In the preceding examples, neurosurgery was eliminative in nature, but it has also been used as an attempt to restore normal function. Examples are found in current treatments for Parkinson’s disease, a movement disorder resulting from basal ganglia dysfunction. Although the standard treatment is medication, the efficacy of the drugs can change over time and even produce debilitating side effects. Some patients who develop severe side effects are now treated surgically. One widely used technique is deep-brain stimulation (DBS), in which electrodes are implanted in the basal ganglia. These devices produce continuous electrical signals that stimulate neural activity. Dramatic and sustained improvements are observed in many patients (Hamani et al., 2006; Krack et al., 1998), although why the procedure works is not well understood. There are side effects, in part because more than one type of neuron is stimulated. Optogenetic methods promise to provide an alternative with which clinicians can control neural activity. While there are currently no human applications, this method has been used to explore treatments of Parkinson’s symptoms in a mouse model of the disease. Early work here suggests that the most effective treatments may not result from the stimulation of specific cells, but rather from the way in which stimulation changes the interactions between different types of cells (Kravitz et al., 2010). This finding underscores that many diseases of the nervous system are not usually related to problems with neurons per se, but rather with how the flow of information is altered by the disease process.

TAKE-HOME MESSAGES

- Research involving patients with neurological disorders is used to examine structure–function relationships. Single and double dissociations can provide evidence that damage to a particular brain region may result in a selective deficit of a certain cognitive operation.

- Surgical procedures have been used to treat neurological disorders such as epilepsy or Parkinson’s disease. Studies conducted in patients before and after surgery have provided unique opportunities to study brain–behavior relationships.

Methods to Perturb Neural Function

As mentioned earlier, patient research rests on the assumption that brain injury is an eliminative process. The lesion is believed to disrupt certain mental operations while having little or no impact on others. The brain is massively interconnected, however, so just as with lesion studies in animals, structural damage in one area might have widespread functional (i.e., behavioral) consequences; or, through disruption of neural connections, the functional impact might be associated with a region of the brain that was not itself directly damaged. There is also increasing evidence that the brain is a plastic device: Neural function is constantly being reshaped by our experiences, and such reorganization can be quite remarkable following neurological damage. Consequently, it is not always easy to analyze the function of a missing part by looking at the operation of the remaining system. You don’t have to be an auto mechanic to understand that cutting the spark plug wires or cutting the gas line will cause an automobile to stop running, but this does not mean that spark plug wires and the gas line do the same thing; rather, removing either one of these parts has similar functional consequences.

Many insights can be gleaned from careful observations of people with neurological disorders, but as we will see throughout this book, such methods are, in essence, correlational. Concerns like these point to the need for methods that involve the study of the normal brain.

The neurologically intact participant, both human and nonhuman, is used, as we have already noted, as a control when studying participants with brain injuries. Neurologically intact participants are also used to study intact function (discussed later in this chapter) and to investigate the effects of transient perturbations to the normal brain, which we discuss next.

One age-old method of perturbing function in both humans and animals is one you may have tried yourself: the use of drugs, whether it be coffee, chocolate, beer, or something stronger. Newer methods include transcranial magnetic stimulation and transcranial direct current stimulation. Genetic methods, used in animal models, provide windows into the molecular mechanisms that underpin brain function. Genomic analysis can also help identify the genetic abnormalities that contribute to certain diseases, such as Huntington’s. And of course, optogenetics, which opened this chapter, has enormous potential for understanding brain structure–function connections as well as managing or curing some devastating diseases.

We turn now to the methods used to perturb function, both at the neurologic and genetic levels, in normal participants.

Pharmacology

The release of neurotransmitters at neuronal synapses and the resultant responses are critical for information transfer from one neuron to the next. Though protected by the blood–brain barrier (BBB), the brain is not a locked compartment. Many different drugs, known as psychoactive drugs (e.g., caffeine, alcohol, and cocaine as well as the pharmaceutical drugs used to treat depression and anxiety), can disturb these interactions, resulting in changes in cognitive function. Pharmacological studies may involve the administration of agonist drugs, those that have a similar structure to a neurotransmitter and mimic its action, or antagonist drugs, those that bind to receptors and block or dampen neurotransmission.

For the researcher studying the impacts of pharmaceuticals on human populations, there are “native” groups to study, given the prevalence of drug use in our culture. For example, in Chapter 12 we examine studies of cognitive impairments associated with chronic cocaine abuse.

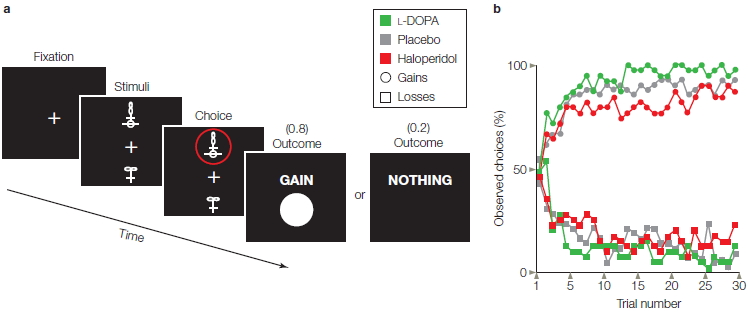

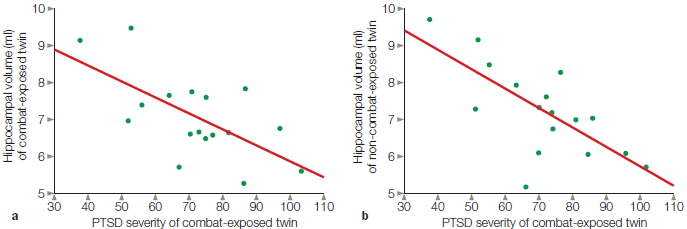

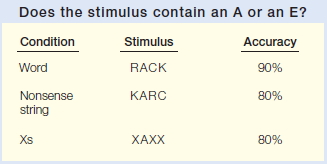

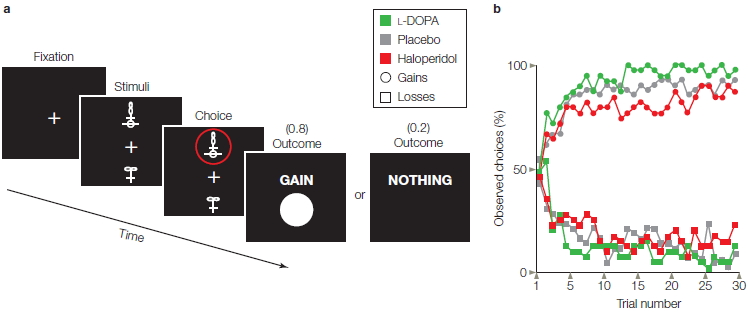

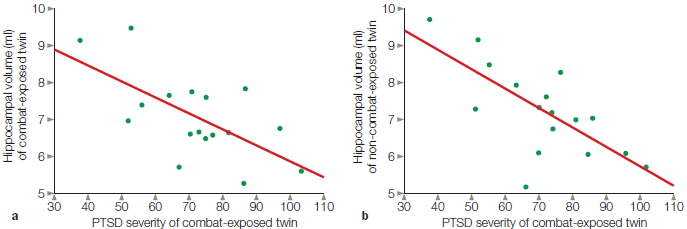

Besides being used in studies of chronic drug users, neurologically intact populations are used for studies in which researchers administer a drug in a controlled environment and monitor its effects on cognitive function. For instance, the neurotransmitter dopamine is known to be a key ingredient in reward-seeking behavior. One study looked at the effect of dopamine on decision making when a potential monetary reward or loss was involved. One group of participants received the dopamine receptor antagonist haloperidol; another received the receptor agonist L-DOPA, the metabolic precursor of dopamine (though dopamine itself is unable to cross the BBB, L-DOPA can and is then converted to dopamine). Each group performed a computerized learning task, in which they were presented with a choice of two symbols on each trial. They had to choose between the symbols with the goal of maximizing payoffs (Figure 3.12; Pessiglione et al., 2006). Each symbol was associated with a certain unknown probability of gain or no gain, loss or no loss, or no gain or loss. For instance, a squiggle stood an 80% chance of winning a pound and a 20% chance of winning nothing, but a figure eight stood an 80% chance of losing a pound and a 20% chance of no loss, and a circular arrow resulted in no win or loss. On gain trials, the L-DOPA-treated group won more money than the haloperidol-treated group, whereas on loss trials, the groups did not differ. These results are consistent with the hypothesis that dopamine has a selective effect on reward-driven learning.
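To make the payoff structure of this task concrete, the following minimal Python sketch computes the expected payoff of each example symbol from the probabilities given above and runs a simple delta-rule learner on sampled outcomes. The symbol names, the function names, and the learning rate are illustrative assumptions; the sketch is not the model or the parameters used by Pessiglione et al. (2006).

```python
import random

# Outcome distributions from the text: (probability, payoff in pounds).
SYMBOLS = {
    "squiggle": [(0.8, 1.0), (0.2, 0.0)],       # 80% win a pound, 20% nothing
    "figure_eight": [(0.8, -1.0), (0.2, 0.0)],  # 80% lose a pound, 20% no loss
    "circular_arrow": [(1.0, 0.0)],             # never a win or a loss
}


def expected_value(outcomes):
    """Long-run average payoff per choice of a symbol."""
    return sum(p * payoff for p, payoff in outcomes)


def sample_outcome(outcomes, rng):
    """Draw one payoff according to the symbol's outcome probabilities."""
    r = rng.random()
    cumulative = 0.0
    for p, payoff in outcomes:
        cumulative += p
        if r < cumulative:
            return payoff
    return outcomes[-1][1]


def learn_value(outcomes, n_trials, learning_rate, rng):
    """Delta-rule estimate of a symbol's value after n_trials choices.

    The learning rate is a hypothetical parameter chosen for illustration.
    """
    value = 0.0
    for _ in range(n_trials):
        outcome = sample_outcome(outcomes, rng)
        value += learning_rate * (outcome - value)  # move toward the outcome
    return value


if __name__ == "__main__":
    rng = random.Random(0)
    for name, outcomes in SYMBOLS.items():
        print(name, expected_value(outcomes),
              learn_value(outcomes, 30, 0.2, rng))
```

With these probabilities, the expected payoff per choice is 0.8 × 1 = 0.8 pounds for the squiggle, 0.8 × (−1) = −0.8 pounds for the figure eight, and 0 for the circular arrow. A learner that updates its estimates from feedback should come to prefer the first and avoid the second.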

A major drawback of drug studies in which the drug is injected into the bloodstream is the lack of specificity. The entire body and brain are awash in the drug, so it is unknown how much drug actually makes it to the site of interest in the brain. In addition, the potential impact of the drug on other sites in the body and the dilution effect confound data analysis. In some animal studies, direct injection of a study drug to specific brain regions helps obviate this problem. For example, Judith Schweimer (2006) examined the brain mechanisms involved in deciding how much effort an individual should expend to gain a reward. Do you stay on the couch and watch a favorite TV show, or get dressed up to go out to a party and perhaps make a new friend? Earlier work showed that rats depleted of dopamine are unwilling to make effortful responses that are highly rewarding (Schweimer et al., 2005) and that the anterior cingulate cortex (ACC), a part of the prefrontal cortex, is important for evaluating the cost versus benefit of performing an action (Rushworth et al., 2004). Knowing that there are two types of dopamine receptors in the ACC, called D1 and D2, Schweimer wondered which was involved. In one group of rats, she injected a drug into the ACC that blocked the D1 receptor; in another, she injected a D2 antagonist. The group that had their D1 receptors blocked turned out to act like couch potatoes, but the rats with blocked D2 receptors were willing to make the effort to pursue the high reward. This dissociation indicates that dopamine input to the D1 receptors within the ACC is critical for effort-based decision making.

FIGURE 3.12 Pharmacological manipulation of reward-based learning.

(a) Participants chose the upper or lower of two abstract visual stimuli and observed the outcome. The selected stimulus, circled in red, is associated with an 80% chance of winning £1 and a 20% chance of winning nothing. The probabilities are different for other stimuli. (b) Learning functions showing the probability of selecting stimuli associated with gains (circles) or avoiding stimuli associated with losses (squares) as a function of the number of times each stimulus was presented. Participants given L-DOPA (green), a dopamine agonist, were faster in learning to choose stimuli associated with gains, compared to participants given a placebo (gray). Participants given haloperidol (red), a dopamine antagonist, were slower in learning to choose the gain stimuli. The drugs did not affect how quickly participants learned to avoid the stimuli associated with a cost.

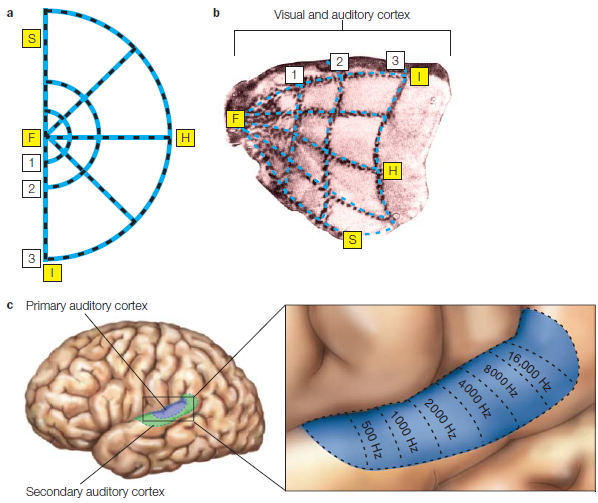

Transcranial Magnetic Stimulation

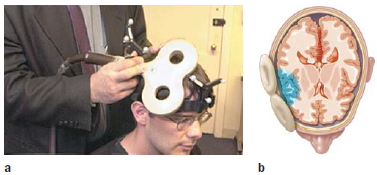

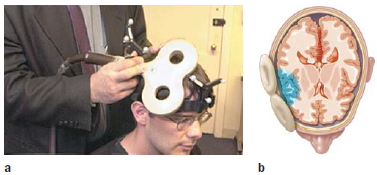

Transcranial magnetic stimulation (TMS) offers a method to noninvasively produce focal stimulation of the human brain. The TMS device consists of a tightly wrapped wire coil, encased in an insulated sheath and connected to a source of powerful electrical capacitors. Triggering the capacitors sends a large electrical current through the coil, generating a magnetic field. When the coil is placed on the surface of the skull, the magnetic field passes through the skin and scalp and induces a physiological current that causes neurons to fire (Figure 3.13a). The exact mechanism causing the neural discharge is not well understood. Perhaps the current leads to the generation of action potentials in the soma; alternatively, the current may directly stimulate axons. The area of neural activation will depend on the shape and positioning of the coil. With currently available coils, the area of primary activation can be constrained to about 1.0 to 1.5 cm³, although there are also downstream effects (see Figure 3.13b).

When the TMS coil is placed over the hand area of the motor cortex, stimulation will activate the muscles of the wrist and fingers. The sensation can be rather bizarre. The hand visibly twitches, yet the participant is aware that the movement is completely involuntary! Like many research tools, TMS was originally developed for clinical purposes. Direct stimulation of the motor cortex provides a relatively simple way to assess the integrity of motor pathways because muscle activity in the periphery can be detected about 20 milliseconds (ms) after stimulation.

TMS has also become a valuable research tool in cognitive neuroscience because of its ability to induce “virtual lesions” (Pascual-Leone et al., 1999). By stimulating the brain, the experimenter is disrupting normal activity in a selected region of the cortex. Similar to the logic in lesion studies, the behavioral consequences of the stimulation are used to shed light on the normal function of the disrupted tissue. This method is appealing because the technique, when properly conducted, is safe and noninvasive, producing only a relatively brief alteration in neural activity. Thus, performance can be compared between stimulated and nonstimulated conditions in the same individual. This, of course, is not possible with brain-injured patients.

FIGURE 3.13 Transcranial magnetic stimulation.

(a) The TMS coil is held by the experimenter against the participant’s head. Both the coil and the participant have affixed to them a tracking device to monitor the head and coil position in real time. (b) The TMS pulse directly alters neural activity in a spherical area of approximately 1 cm³.

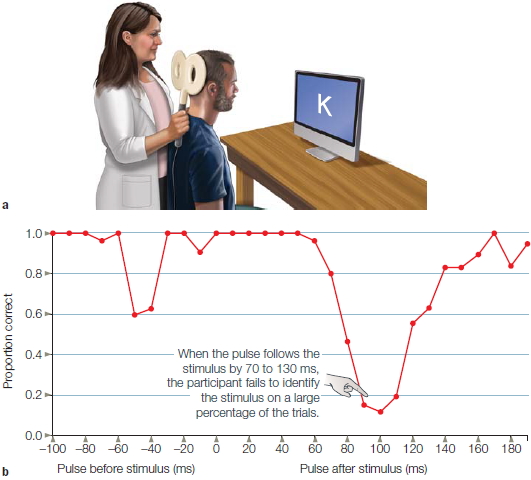

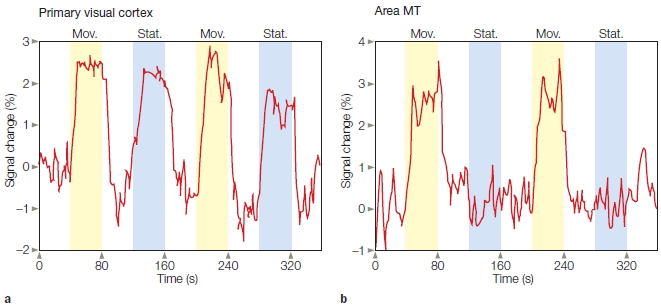

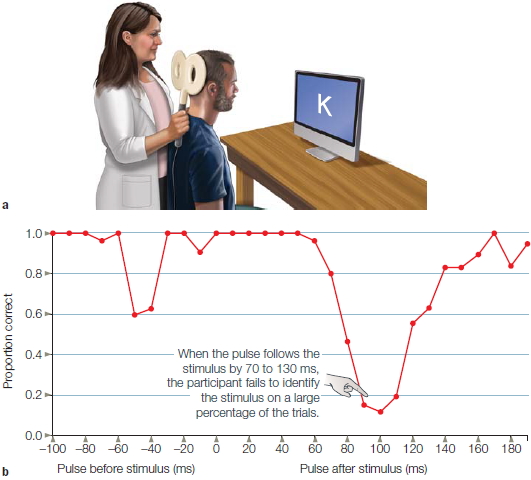

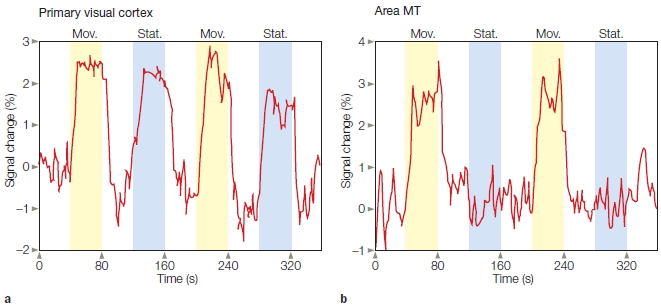

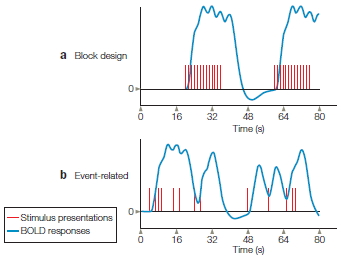

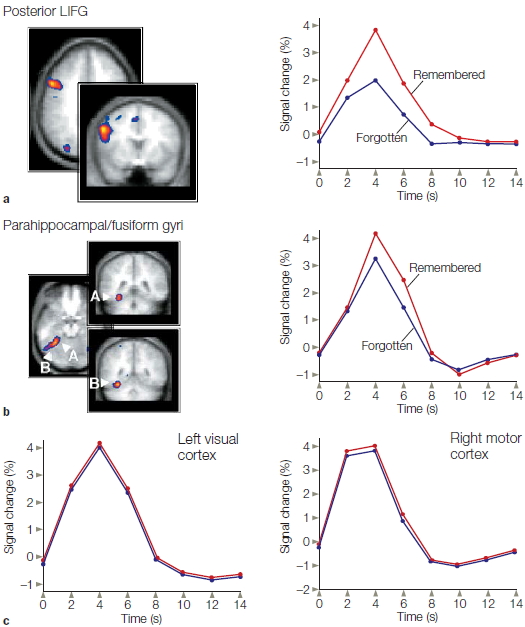

The virtual-lesion approach has been successfully employed even when the person is unaware of any effects from the stimulation. For example, stimulation over visual cortex (Figure 3.14) can interfere with a person’s ability to identify a letter (Corthout et al., 1999). The synchronized discharge of the underlying visual neurons interferes with their normal operation. The timing between the onset of the TMS pulse and the onset of the stimulus (e.g., presentation of a letter) can be manipulated to plot the time course of processing. In the letter identification task, the person will err only if the stimulation occurs between 70 and 130 ms after presentation of the letter. If the TMS is given before this interval, the neurons have time to recover; if the TMS is given after this interval, the visual neurons have already responded to the stimulus.
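The timing logic of this experiment can be sketched in a few lines of Python. The sketch below encodes the 70 to 130 ms window reported above and classifies pulse delays, measured from letter onset, as falling inside or outside it. The function names are hypothetical, and treating the window as having sharp edges is a simplifying assumption; in practice, performance changes gradually as the delay varies.

```python
# Disruptive window reported in the text for the letter identification task,
# in milliseconds after letter onset.
WINDOW_START_MS = 70
WINDOW_END_MS = 130


def tms_disrupts_identification(pulse_delay_ms):
    """Return True if a pulse at this delay falls inside the disruptive window.

    Negative delays mean the pulse came before the letter appeared.
    """
    return WINDOW_START_MS <= pulse_delay_ms <= WINDOW_END_MS


def classify_delays(delays_ms):
    """Map each tested delay to whether identification is expected to fail."""
    return {d: tms_disrupts_identification(d) for d in delays_ms}


if __name__ == "__main__":
    # Sweep the pulse delay to trace out the time course of processing.
    for delay, disrupted in classify_delays(range(-40, 201, 40)).items():
        print(f"{delay:>4} ms -> {'disrupted' if disrupted else 'intact'}")
```

Sweeping the delay in this way mirrors the experimental design: the delay between pulse and stimulus is the independent variable, and the range of delays that impairs performance marks when the stimulated region is needed for the task.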

Transcranial Direct Current Stimulation

Transcranial direct current stimulation (tDCS) is a brain stimulation procedure that has been around in some form for the last two thousand years. The early Greeks and Romans used electric torpedo fish, which can deliver from 8 to 220 volts of DC electricity, to stun and numb patients in an attempt to alleviate pain, such as during childbirth and migraine headache episodes. Today’s electrical stimulation uses a much smaller current (1–2 mA) that produces a tingling or itchy sensation when it is turned on or off. tDCS sends a current between two small electrodes, an anode and a cathode, placed on the scalp. Physiological studies show that neurons under the anode become depolarized. That is, they are put into an elevated state of excitability, making them more likely to initiate an action potential when a stimulus or movement occurs (see Chapter 2). Neurons under the cathode become hyperpolarized and are less likely to fire. tDCS will alter neural activity over a much larger area than is directly affected by a TMS pulse.

FIGURE 3.14 Transcranial magnetic stimulation over the occipital lobe.

(a) The center of the coil is positioned over the occipital lobe to disrupt visual processing. The participant attempts to name letters that are briefly presented on the screen. A TMS pulse is applied on some trials, either just before or just after the letter. (b) The independent variable is the time between the TMS pulse and letter presentation. Visual perception is markedly disrupted when the pulse occurs 80–120 ms after the letter due to disruption of neural activity in the visual cortex. There is also a drop in performance if the pulse comes before the letter. This is likely an artifact due to the participant blinking in response to the sound of the TMS pulse.

tDCS has been shown to produce changes in behavioral performance. The effects can sometimes be observed within a single experimental session. Anodal tDCS generally leads to improvements in performance, perhaps because the neurons are put into a more excitable state. Cathodal stimulation may hinder performance, akin to TMS, although the effects of cathodal stimulation are generally less consistent. tDCS has also been shown to produce beneficial effects for patients with various neurological conditions such as stroke or chronic pain. The effects tend to be short-lived, lasting for just a half hour beyond the stimulation phase. If repeatedly applied, however, the duration of the benefit can be prolonged from minutes to weeks (Boggio et al., 2007).

TMS and tDCS give cognitive neuroscientists safe methods for transiently disrupting the activity of the human brain. An appealing feature of these methods is that researchers can design experiments to test specific functional hypotheses. Unlike neuropsychological studies in which comparisons are usually between a patient group and matched controls, participants in TMS and tDCS studies can serve as their own controls, since the effects of these stimulation procedures are transient.

Genetic Manipulations

The start of the 21st century witnessed the climax of one of the great scientific challenges: the mapping of the human genome. Scientists now possess a complete record of the genetic sequence on our chromosomes. We have only begun to understand how these genes code for all aspects of human structure and function. In essence, we now have a map containing the secrets to many treasures: What causes people to grow old? Why are some people more susceptible to certain cancers than other people? What dictates whether embryonic tissue will become a skin cell or a brain cell? Deciphering this map is an imposing task that will take years of intensive study.

Genetic disorders are manifest in all aspects of life, including brain function. As noted earlier, diseases such as Huntington’s disease are clearly heritable. By analyzing individuals’ genetic codes, scientists can now predict whether the children of individuals carrying the HD gene will develop this debilitating disorder. Moreover, by identifying the genetic locus of this disorder, scientists hope to devise techniques to alter the aberrant genes, either by modifying them or by figuring out a way to prevent them from being expressed.

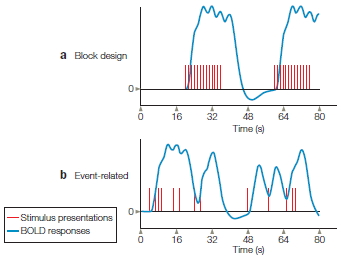

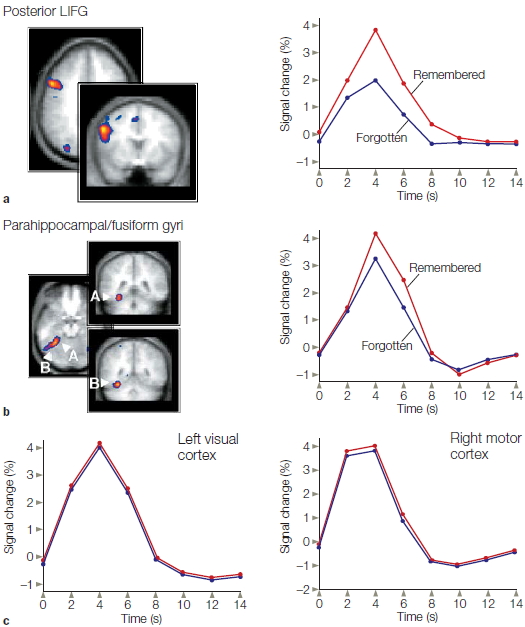

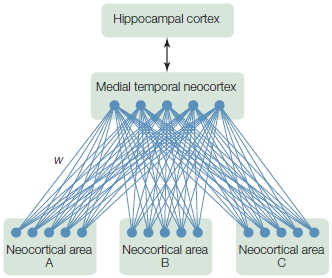

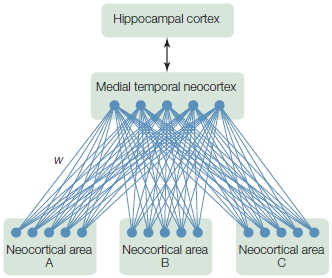

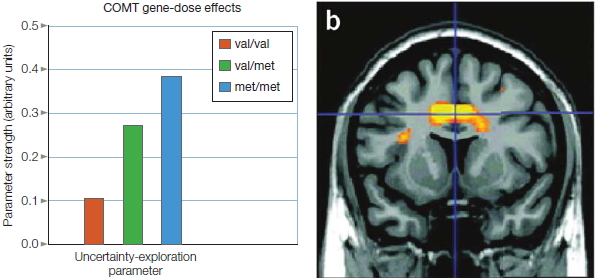

In a similar way, scientists have sought to understand other aspects of normal and abnormal brain function through the study of genetics. Behavioral geneticists have long known that many aspects of cognitive function are heritable. For example, controlling mating patterns on the basis of spatial-learning performance allows the development of “maze-bright” and “maze-dull” strains of rats. Rats that quickly learn to navigate mazes are likely to have offspring with similar abilities, even if the offspring are raised by rats that are slow to navigate the same mazes. Such correlations are also observed across a range of human behaviors, including spatial reasoning, reading speed, and even preferences in watching television (Plomin et al., 1990). This finding should not be taken to mean that our intelligence or behavior is genetically determined. Maze-bright rats perform quite poorly if raised in an impoverished environment. The truth surely reflects complex interactions between the environment and genetics (see “The Cognitive Neuroscientist’s Toolkit: Correlation and Causation”).