FIGURE 1.1 An artistic rendition of the miraculous resurrection of Anne Green in 1650.

In science it often happens that scientists say, “You know that’s a really good argument; my position is mistaken,” and then they actually change their minds and you never hear that old view from them again. They really do it. It doesn’t happen as often as it should, because scientists are human and change is sometimes painful. But it happens every day. I cannot recall the last time something like that happened in politics or religion. ~ Carl Sagan, 1987

Chapter 1

A Brief History of Cognitive Neuroscience

OUTLINE

A Historical Perspective

The Brain Story

The Psychological Story

The Instruments of Neuroscience

The Book in Your Hands

AS ANNE GREEN WALKED to the gallows in the castle yard of Oxford, England, in 1650, she must have been feeling scared, angry, and frustrated. She was about to be executed for a crime she had not committed: murdering her stillborn child. Many thoughts raced through her head, but “I am about to play a role in the founding of clinical neurology and neuroanatomy,” although accurate, certainly was not one of them. She proclaimed her innocence to the crowd, a psalm was read, and she was hanged. She hung there for a full half hour before she was taken down, pronounced dead, and placed in a coffin provided by Drs. Thomas Willis and William Petty. This was when Anne Green’s luck began to improve. Willis and Petty were physicians and had permission from King Charles I to dissect, for medical research, the bodies of any criminals killed within 21 miles of Oxford. So, instead of being buried, Anne’s body was carried to their office.

An autopsy, however, was not what took place. As if in a scene from Edgar Allan Poe, the coffin began to emit a grumbling sound. Anne was alive! The doctors poured spirits in her mouth and rubbed a feather on her neck to make her cough. They rubbed her hands and feet for several minutes, bled five ounces of her blood, swabbed her neck wounds with turpentine, and cared for her through the night. The next morning, able to drink fluids and feeling more chipper, Anne asked for a beer. Five days later, she was out of bed and eating normally (Molnar, 2004; Zimmer, 2004).

After her ordeal, the authorities wanted to hang Anne again. But Willis and Petty fought in her defense, arguing that her baby had been stillborn and its death was not her fault. They declared that divine providence had stepped in and provided her miraculous escape from death, thus proving her innocence. Their arguments prevailed. Anne was set free and went on to marry and have three more children.


This miraculous experience was well publicized in England (Figure 1.1). Thomas Willis (Figure 1.2) owed much to Anne Green and the fame brought to him by the events of her resurrection. With it came money he desperately needed and the prestige to publish his work and disseminate his ideas, and he had some good ones. An inquisitive neurologist, he actually coined the term neurology and became one of the best-known doctors of his time. He was the first anatomist to link abnormal human behaviors to changes in brain structure. He drew these conclusions after treating patients throughout their lives and autopsying them after their deaths. Willis was among the first to link specific brain damage to specific behavioral deficits, and to theorize how the brain transfers information in what would later be called neuronal conduction.

FIGURE 1.2 Thomas Willis (1621–1675), a founder of clinical neuroscience.

With his colleague and friend Christopher Wren (the architect who designed St. Paul’s Cathedral in London), Willis created drawings of the human brain that remained the most accurate representations for 200 years (Figure 1.3). He also coined names for a myriad of brain regions (Table 1.1; Molnar, 2004; Zimmer, 2004). In short, Willis set in motion the ideas and knowledge base that took hundreds of years to develop into what we know today as the field of cognitive neuroscience.

In this chapter, we discuss some of the scientists and physicians who have made important contributions to this field. You will discover the origins of cognitive neuroscience and how it has developed into what it is today: a discipline geared toward understanding how the brain works, how brain structure and function affect behavior, and ultimately how the brain enables the mind.

A Historical Perspective

The scientific field of cognitive neuroscience received its name in the late 1970s in the back seat of a New York City taxi. One of us (M.S.G.) was riding with the great cognitive psychologist George A. Miller on the way to a dinner meeting at the Algonquin Hotel. The dinner was being held for scientists from Rockefeller and Cornell universities, who were joining forces to study how the brain enables the mind—a subject in need of a name. Out of that taxi ride came the term cognitive neuroscience—from cognition, or the process of knowing (i.e., what arises from awareness, perception, and reasoning), and neuroscience (the study of how the nervous system is organized and functions). This seemed the perfect term to describe the question of understanding how the functions of the physical brain can yield the thoughts and ideas of an intangible mind. And so the term took hold in the scientific community.

When considering the miraculous properties of brain function, bear in mind that Mother Nature built our brains through the process of evolution; they were not designed by a team of rational engineers. While life first appeared on our 4.5-billion-year-old Earth approximately 3.8 billion years ago, human brains, in their present form, have been around for only about 100,000 years, a mere drop in the bucket. The primate brain appeared between 34 million and 23 million years ago, during the Oligocene epoch. It evolved into the progressively larger brains of the great apes in the Miocene epoch between roughly 23 million and 7 million years ago. The human lineage diverged from the last common ancestor that we shared with the chimpanzee somewhere in the range of 5–7 million years ago. Since that divergence, our brains have evolved into the present human brain, capable of all sorts of wondrous feats. Throughout this book, we will be reminding you to take the evolutionary perspective: Why might this behavior have evolved? How could it promote survival and reproduction? WWHGD? (What would a hunter-gatherer do?) The evolutionary perspective often helps us to ask more informed questions and provides insight into how and why the brain functions as it does.

FIGURE 1.3 The human brain (ventral view) drawn by Christopher Wren for Thomas Willis, published in Willis’s The Anatomy of the Brain and Nerves.

TABLE 1.1 A Selection of Terms Coined by Thomas Willis

| Term | Definition |
| --- | --- |
| Anterior commissure | Axonal fibers connecting the middle and inferior temporal gyri of the left and right hemispheres. |
| Cerebellar peduncles | Axonal fibers connecting the cerebellum and brainstem. |
| Claustrum | A thin sheath of gray matter located between two brain areas: the external capsule and the putamen. |
| Corpus striatum | A part of the basal ganglia consisting of the caudate nucleus and the lenticular nucleus. |
| Inferior olives | The part of the brainstem that modulates cerebellar processing. |
| Internal capsule | White matter pathways conveying information from the thalamus to the cortex. |
| Medullary pyramids | A part of the medulla that consists of corticospinal fibers. |
| Neurology | The study of the nervous system and its disorders. |
| Optic thalamus | The portion of the thalamus relating to visual processing. |
| Spinal accessory nerve | The 11th cranial nerve, which innervates the head and shoulders. |
| Stria terminalis | The white matter pathway that sends information from the amygdala to the basal forebrain. |
| Striatum | Gray matter structure of the basal ganglia. |
| Vagus nerve | The 10th cranial nerve, which, among other functions, has visceral motor control of the heart. |

During most of our history, humans were too busy to think about thought. Although there can be little doubt that the brains of our long-ago ancestors could engage in such activities, life was given over to more practical matters, such as surviving in tough environments, developing ways to live better by inventing agriculture or domesticating animals, and so forth. Nonetheless, the brain mechanisms that enable us to generate theories about the characteristics of human nature thrived inside the heads of ancient humans. As civilization developed to the point where day-to-day survival did not occupy every hour of every day, our ancestors began to spend time looking for causation and constructing complex theories about the motives of fellow humans. Examples of attempts to understand the world and our place in it include Oedipus Rex (the ancient Greek play that deals with the nature of the child–parent conflict) and Mesopotamian and Egyptian theories on the nature of religion and the universe. Although the pre-Socratic Greek philosopher, Thales, rejected supernatural explanations of phenomena and proclaimed that every event had a natural cause (presaging modern cognitive neuroscience), the early Greeks had one big limitation: They did not have the methodology to explore the mind systematically through experimentation.

It wasn’t until the 19th century that the modern tradition of observing, manipulating, and measuring became the norm, and scientists started to determine how the brain gets its jobs done. To understand how biological systems work, a laboratory is needed and experiments have to be performed to answer the questions under study and to support or refute the hypotheses and conclusions that have been made. This approach is known as the scientific method, and it is the only way that a topic can move along on sure footing. And in the case of cognitive neuroscience, there is no end to the rich phenomena to study.

The Brain Story

Imagine that you are given a problem to solve. A hunk of biological tissue is known to think, remember, attend, solve problems, tell jokes, want sex, join clubs, write novels, exhibit bias, feel guilty, and do a zillion other things. You are supposed to figure out how it works. You might start by looking at the big picture and asking yourself a couple of questions. “Hmmm, does the blob work as a unit with each part contributing to a whole? Or, is the blob full of individual processing parts, each carrying out specific functions, so the result is something that looks like it is acting as a whole unit?” From a distance the city of New York (another type of blob) appears as an integrated whole, but it is actually composed of millions of individual processors—that is, people. Perhaps people, in turn, are made of smaller, more specialized units.

This central issue—whether the mind is enabled by the whole brain working in concert or by specialized parts of the brain working at least partly independently—is what fuels much of modern research in cognitive neuroscience. As we will see, the dominant view has changed back and forth over the years, and it continues to change today.

FIGURE 1.4 Franz Joseph Gall (1758–1828), one of the founders of phrenology.

Thomas Willis foreshadowed cognitive neuroscience with the notion that isolated brain damage (biology) could affect behavior (psychology), but his insights slipped from view. It took another century for Willis’s ideas to resurface. They were expanded upon by a young Austrian physician and neuroanatomist, Franz Joseph Gall (Figure 1.4). After studying numerous patients, Gall became convinced that the brain was the organ of the mind and that innate faculties were localized in specific regions of the cerebral cortex. He thought that the brain was organized around some 35 or more specific functions, ranging from cognitive basics such as language and color perception to more ephemeral capacities such as affection and a moral sense, and each was supported by specific brain regions. These ideas were well received, and Gall took his theory on the road, lecturing throughout Europe.

Building on his theories, Gall and his disciple Johann Spurzheim hypothesized that if a person used one of the faculties with greater frequency than the others, the part of the brain representing that function would grow (Gall & Spurzheim, 1810–1819). This increase in local brain size would cause a bump in the overlying skull. Logically, then, Gall and his colleagues believed that a careful analysis of the skull could go a long way in describing the personality of the person inside the skull. Gall called this technique anatomical personology (Figure 1.5). The idea that character could be divined through palpating the skull was dubbed phrenology by Spurzheim and, as you may well imagine, soon fell into the hands of charlatans. Some employers even required job applicants to have their skulls “read” before they were hired.

Gall, apparently, was not politically astute. When asked to read the skull of Napoleon Bonaparte, Gall did not ascribe to his skull the noble characteristics that the future emperor was quite sure he possessed. When Gall later applied to the Academy of Sciences in Paris, Napoleon decided that phrenology needed closer scrutiny and ordered the Academy to obtain some scientific evidence of its validity. Although Gall was a physician and neuroanatomist, he was not a scientist. He observed correlations and sought only to confirm, not disprove, them. The Academy asked physiologist Marie-Jean-Pierre Flourens (Figure 1.6) to see if he could come up with any concrete findings that could back up this theory.

Flourens set to work. He destroyed parts of the brains of pigeons and rabbits and observed what happened. He was the first to show that indeed certain parts of the brain were responsible for certain functions. For instance, when he removed the cerebral hemispheres, the animal no longer had perception, motor ability, and judgment. Without the cerebellum, the animals became uncoordinated and lost their equilibrium. He could not, however, find any areas for advanced abilities such as memory or cognition and concluded that these were more diffusely scattered throughout the brain. Flourens developed the notion that the whole brain participated in behavior, a view later known as the aggregate field theory. In 1824, Flourens wrote, “All sensations, all perceptions, and all volitions occupy the same seat in these (cerebral) organs. The faculty of sensation, percept and volition is then essentially one faculty.” The theory of localized brain functions, known as localizationism, fell out of favor.

FIGURE 1.5 (a) An analysis of Presidents Washington, Jackson, Taylor, and McKinley by Jessie A. Fowler, from the Phrenological Journal, June 1898. (b) The phrenological map of personal characteristics on the skull, from the American Phrenological Journal, March 1848. (c) Fowler & Wells Co. publication on marriage compatibility in connection with phrenology, 1888.

FIGURE 1.6 (a) Marie-Jean-Pierre Flourens (1794–1867), who supported the idea later termed the aggregate field theory. (b) The posture of a pigeon deprived of its cerebral hemispheres, as described by Flourens.

FIGURE 1.7 John Hughlings Jackson (1835–1911), an English neurologist who was one of the first to recognize the localizationist view.

That state of affairs didn’t last for too long, however. New evidence obtained through clinical observations and autopsies started trickling in from across Europe, and it helped to swing the pendulum slowly back to the localizationist view. In 1836 a neurologist from Montpellier, Marc Dax, provided one of the first bits of evidence. He sent a report to the Academy of Sciences about three patients, noting that each had speech disturbances and similar left-hemisphere lesions found at autopsy. At the time, a report from the provinces got short shrift in Paris, and it would be another 30 years before anyone took much notice of this observation that speech could be disrupted by a lesion to one hemisphere only.

Meanwhile, in England, the neurologist John Hughlings Jackson (Figure 1.7) began to publish his observations on the behavior of persons with brain damage. A key feature of Jackson’s writings was the incorporation of suggestions for experiments to test his observations. He noticed, for example, that during the start of their seizures, some epileptic patients moved in such characteristic ways that the seizure appeared to be stimulating a set map of the body in the brain; that is, the clonic and tonic jerks in muscles, produced by the abnormal epileptic firings of neurons in the brain, progressed in the same orderly pattern from one body part to another. This phenomenon led Jackson to propose a topographic organization in the cerebral cortex: a map of the body was represented across a particular cortical area, where one part would represent the foot, another the lower leg, and so on. As we will see, this proposal was verified over a half century later by Wilder Penfield. Jackson was one of the first to realize this essential feature of brain organization.

Although Jackson was also the first to observe that lesions on the right side of the brain affect visuospatial processes more than do lesions on the left side, he did not maintain that specific parts of the right side of the brain were solely committed to this important human cognitive function. Being an observant clinical neurologist, Jackson noticed that it was rare for a patient to lose a function completely. For example, most people who lost their capacity to speak following a cerebral stroke could still say some words. Patients unable to direct their hands voluntarily to specific places on their bodies could still easily scratch those places if they itched. When Jackson made these observations, he concluded that many regions of the brain contributed to a given behavior.

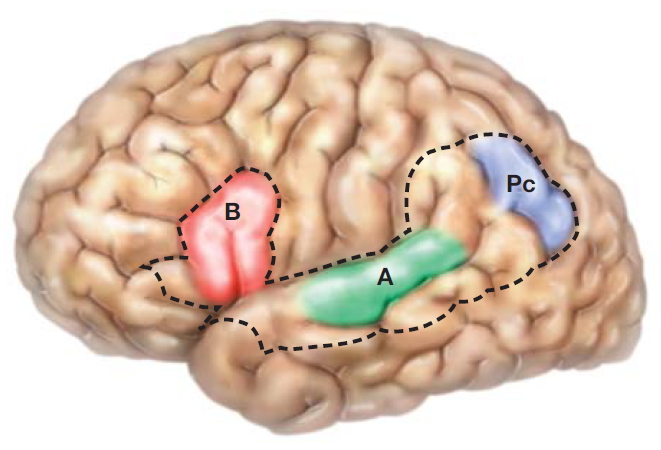

Meanwhile, the well-known and respected Parisian physician Paul Broca (Figure 1.8a) published, in 1861, the results of his autopsy on a patient who had been nicknamed Tan—perhaps the most famous neurological case in history. Tan had developed aphasia: He could understand language, but “tan” was the only word he could utter. Broca found that Tan (his real name was Leborgne) had a syphilitic lesion in his left hemisphere in the inferior frontal lobe. This region of the brain has come to be called Broca’s area. The impact of this finding was huge. Here was a specific aspect of language that was impaired by a specific lesion. Soon Broca had a series of such patients. This theme was picked up by the German neurologist Carl Wernicke. In 1876, Wernicke reported on a stroke victim who (unlike Broca’s patient) could talk quite freely but made little sense when he spoke. Wernicke’s patient also could not understand spoken or written language. He had a lesion in a more posterior region of the left hemisphere, an area in and around where the temporal and parietal lobes meet, which is now referred to as Wernicke’s area (Figure 1.8b).

FIGURE 1.8 (a) Paul Broca (1824–1880). (b) The connections between the speech centers, from Wernicke’s 1876 article on aphasia. A = Wernicke’s sensory speech center; B = Broca’s area for speech; Pc = Wernicke’s area concerned with language comprehension and meaning.

Today, differences in how the brain responds to focal disease are well known (H. Damasio et al., 2004; R. J. Wise, 2003), but a little over 100 years ago Broca’s and Wernicke’s discoveries were earth-shattering. (Note that people had largely forgotten Willis’s observations that isolated brain damage could affect behavior. Throughout the history of brain science, an unfortunate and oft repeated trend is that we fail to consider crucial observations made by our predecessors.) With the discoveries of Broca and Wernicke, attention was again paid to this startling point: Focal brain damage causes specific behavioral deficits.

As is so often the case, the study of humans leads to questions for those who work on animal models. Shortly after Broca’s discovery, the German physiologists Gustav Fritsch and Eduard Hitzig electrically stimulated discrete parts of a dog brain and observed that this stimulation produced characteristic movements in the dog. This discovery led neuroanatomists to more closely analyze the cerebral cortex and its cellular organization; they wanted support for their ideas about the importance of local regions. Because these regions performed different functions, it followed that they ought to look different at the cellular level.

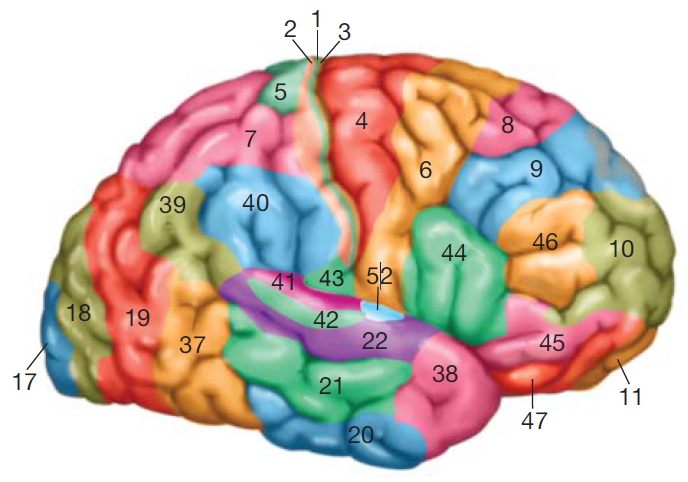

FIGURE 1.9 Sampling of the 52 distinct areas described by Brodmann on the basis of cell structure and arrangement.

Following this logic, German neuroanatomists began to analyze the brain by using microscopic methods to view the cell types in different brain regions. Perhaps the most famous of the group was Korbinian Brodmann, who analyzed the cellular organization of the cortex and characterized 52 distinct regions (Figure 1.9). He published his cortical maps in 1909. Brodmann used tissue stains, such as the one developed by Franz Nissl, that permitted him to visualize the different cell types in different brain regions. How cells differ between brain regions is called cytoarchitectonics, or cellular architecture.

Soon many now-famous anatomists, including Oskar Vogt, Vladimir Betz, Theodor Meynert, Constantin von Economo, Gerhardt von Bonin, and Percival Bailey, contributed to this work, and several subdivided the cortex even further than Brodmann had. To a large extent, these investigators discovered that various cytoarchitectonically described brain areas do indeed represent functionally distinct brain regions. For example, Brodmann first distinguished area 17 from area 18—a distinction that has proved correct in subsequent functional studies. The characterization of the primary visual area of the cortex, area 17, as distinct from surrounding area 18, remarkably demonstrates the power of the cytoarchitectonic approach, as we will consider more fully in Chapter 2.

FIGURE 1.10 (a) Camillo Golgi (1843–1926), cowinner of the Nobel Prize in 1906. (b) Golgi’s drawings of different types of ganglion cells in dog and cat.

FIGURE 1.11 (a) Santiago Ramón y Cajal (1852–1934), cowinner of the Nobel Prize in 1906. (b) Ramón y Cajal’s drawing of the afferent inflow to the mammalian cortex.

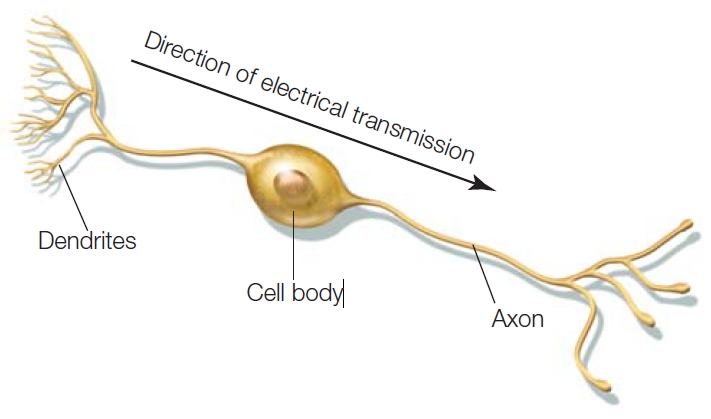

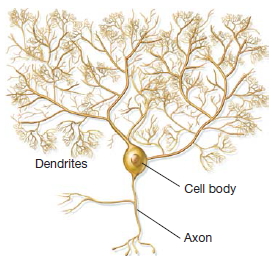

Despite all of this groundbreaking work in cytoarchitectonics, the truly huge revolution in our understanding of the nervous system was taking place elsewhere, in Italy and Spain. There, an intense struggle was going on between two brilliant neuroanatomists. Oddly, it was the work of one that led to the insights of the other. Camillo Golgi (Figure 1.10), an Italian physician, developed one of the most famous cell stains in the history of the world: the silver method for staining neurons, la reazione nera (“the black reaction”), which impregnated individual neurons with silver chromate. This stain permits visualization of individual neurons in their entirety. Using Golgi’s method, Santiago Ramón y Cajal (Figure 1.11) went on to find that, contrary to the view of Golgi and others, neurons were discrete entities. Golgi had believed that the whole brain was a syncytium, a continuous mass of tissue that shares a common cytoplasm. Ramón y Cajal, whom some call the father of modern neuroscience, was the first to identify the unitary nature of neurons and to articulate what came to be known as the neuron doctrine, the concept that the nervous system is made up of individual cells. He also recognized that the transmission of electrical information went in only one direction, from the dendrites down to the axonal tip (Figure 1.12).

FIGURE 1.12 A bipolar retinal cell, illustrating the dendrites and axon of the neuron.

Many gifted scientists were involved in the early history of the neuron doctrine (Shepherd, 1991). For example, Jan Evangelista Purkinje (Figure 1.13), a Czech, not only described the first nerve cell in the nervous system in 1837 but also invented the stroboscope, described common visual phenomena, and made a host of other major discoveries. Hermann von Helmholtz (Figure 1.14) figured out that electrical current in the cell was not a by-product of cellular activity, but the medium that was actually carrying information along the axon of a nerve cell. He was also the first to suggest that invertebrates would be good models for studying vertebrate brain mechanisms. British physiologist Sir Charles Sherrington vigorously pursued the neuron’s behavior as a unit and, indeed, coined the term synapse to describe the junction between two neurons.

FIGURE 1.13 (a) Jan Evangelista Purkinje (1787–1869), who described the first nerve cell in the nervous system. (b) A Purkinje cell of the cerebellum.

FIGURE 1.14 (a) Hermann Ludwig von Helmholtz (1821–1894). (b) Helmholtz’s apparatus for measuring the velocity of nerve conduction.

With Golgi, Ramón y Cajal, and these other bright minds, the neuron doctrine was born—a discovery whose importance was highlighted by the 1906 Nobel Prize in Physiology or Medicine shared by Golgi and Ramón y Cajal, and later by the 1932 Nobel Prize awarded to Sherrington.

As the 20th century progressed, the localizationist views were tempered by those who saw that, even though particular neuronal locations might serve independent functions, the network of these locations and the interaction between them are what yield the integrated, holistic behavior that humans exhibit. Once again, this neglected idea had been discussed nearly a century earlier by the French biologist Claude Bernard, who wrote in 1865:

If it is possible to dissect all the parts of the body, to isolate them in order to study them in their structure, form and connections it is not the same in life, where all parts cooperate at the same time in a common aim. An organ does not live on its own, one could often say it did not exist anatomically, as the boundary established is sometimes purely arbitrary. What lives, what exists, is the whole, and if one studies all the parts of any mechanisms separately, one does not know the way they work.

Thus, scientists have come to believe that the knowledge of the parts (the neurons and brain structures) must be understood in conjunction with the whole (i.e., what the parts make when they come together: the mind). Next we explore the history of research on the mind.

The Psychological Story

Physicians were the early pioneers studying how the brain worked. In 1869 a Dutch ophthalmologist, Franciscus Donders, was the first to propose the now-common method of using differences in reaction times to infer differences in cognitive processing. He suggested that the difference in the amount of time it took to react to a light and the amount of time needed to react to a particular color of light was the amount of time required for the process of identifying a color. Psychologists began to use this approach, claiming that they could study the mind by measuring behavior, and experimental psychology was born.
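The arithmetic behind Donders's proposal can be sketched in a few lines of Python. This is only an illustration of the subtraction logic described above: the function name `subtraction_estimate` and the reaction times are hypothetical and are not taken from Donders's data.

```python
from statistics import mean


def subtraction_estimate(simple_rts_ms, choice_rts_ms):
    """Estimate the duration (in ms) of the extra mental stage.

    Assumes the harder task equals the simpler task plus exactly one
    additional processing stage, so the difference between the mean
    reaction times is attributed to that stage.
    """
    return mean(choice_rts_ms) - mean(simple_rts_ms)


# Hypothetical reaction times in milliseconds (illustrative values only).
simple_rts = [200, 210, 190, 200]   # respond to any light
color_rts = [280, 290, 270, 280]    # respond to a particular color of light

color_identification_ms = subtraction_estimate(simple_rts, color_rts)
print(f"Estimated time to identify the color: {color_identification_ms} ms")
```

The estimate is only as good as the assumption that the two tasks differ by a single added stage and are otherwise identical.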

Before the start of experimental psychological science the mind had been the province of philosophers, who wondered about the nature of knowledge and how we come to know things. The philosophers had two main positions: rationalism and empiricism. Rationalism grew out of the Enlightenment period and held that all knowledge could be gained through the use of reason alone: Truth was intellectual, not sensory. Through thinking, then, rationalists would determine true beliefs and would reject beliefs that, although perhaps comforting, were unsupportable and even superstitious. Among intellectuals and scientists, rationalism replaced religion and became the only way to think about the world. In particular, this view, in one form or another, was supported by René Descartes, Baruch Spinoza, and Gottfried Leibniz.

MILESTONES IN COGNITIVE NEUROSCIENCE

Interlude

In textbook writing, authors use broad strokes to communicate milestones that have become important to people’s thinking over a long period of time. It would be folly, however, not to alert the reader that these scientific advances took place in a complex and intriguing cultural, intellectual, and personal setting. The social problems that besieged the world’s first scientists remain today, in full glory: Issues of authorship, ego, funding, and credit are all integral to the fabric of intellectual life. Much as teenagers never imagine that their parents once had the same interests, problems, and desires as they do, novitiates in science believe they are tackling new issues for the first time in human history. Gordon Shepherd (1991), in his riveting account Foundations of the Neuron Doctrine, detailed the variety of forces at work on the figures we now feature in our brief history.

Shepherd noted how the explosion of research on the nervous system started in the 18th century as part of the intense activity swirling around the birth of modern science. As examples, Robert Fulton launched the first commercially successful steamboat in 1807, and Hans Christian Ørsted discovered electromagnetism. Of more interest to our concerns, Leopoldo Nobili, an Italian physicist, invented a precursor to the galvanometer, a device that laid the foundation for studying electrical currents in living tissue. Many years before, in 1674, Anton van Leeuwenhoek in Holland had used a primitive microscope to view animal tissue (Figure 1). One of his first observations was of a cross section of a cow’s nerve in which he noted “very minute vessels.” This observation was consistent with René Descartes’s idea that nerves contained fluid or “spirits,” and these spirits were responsible for the flow of sensory and motor information in the body (Figure 2). To go further, however, this revolutionary work would have to overcome the technical problems with early microscopes, not the least of which was the quality of glass used in the lens. Chromatic aberrations made them useless at higher magnification. It was not until lens makers solved this problem that microscopic anatomy again took center stage in the history of biology.

FIGURE 1 (a) Anton van Leeuwenhoek (1632–1723). (b) One of the original microscopes used by Leeuwenhoek, composed of two brass plates holding the lens.

FIGURE 2 René Descartes (1596–1650). Portrait by Frans Hals.

Although rationalism is frequently equated with logical thinking, the two are not identical. Rationalism considers such issues as the meaning of life, whereas logic does not. Logic simply relies on inductive reasoning, statistics, probabilities, and the like. It does not concern itself with personal mental states like happiness, self-interest, and public good. Each person weighs these issues differently, and as a consequence, a rational decision is more problematic than a simple logical decision.

Empiricism, on the other hand, is the idea that all knowledge comes from sensory experience, that the brain began life as a blank slate. Direct sensory experience produces simple ideas and concepts. When simple ideas interact and become associated with one another, complex ideas and concepts are created in an individual’s knowledge system. The British philosophers—from Thomas Hobbes in the 17th century, through John Locke and David Hume, to John Stuart Mill in the 19th century—all emphasized the role of experience. It is no surprise, then, that a major school of experimental psychology arose from this associationist view. Psychological associationists believed that the aggregate of a person’s experience determined the course of mental development.

FIGURE 1.15 Edward L. Thorndike (1874–1949).

One of the first scientists to study associationism was Hermann Ebbinghaus, who, in the late 1800s, decided that complex processes like memory could be measured and analyzed. He took his lead from the great psychophysicists Gustav Fechner and Ernst Heinrich Weber, who were hard at work relating the physical properties of things such as light and sound to the psychological experiences that they produce in the observer. These measurements were rigorous and reproducible. Ebbinghaus was one of the first to understand that mental processes that are more internal, such as memory, also could be measured (see Chapter 9).

Even more influential to the shaping of the associationist view was the classic 1911 monograph Animal Intelligence: An Experimental Study of the Associative Processes in Animals, by Edward Thorndike (Figure 1.15). In this volume, Thorndike articulated his law of effect, which was the first general statement about the nature of associations. Thorndike simply observed that a response that was followed by a reward would be stamped into the organism as a habitual response. If no reward followed a response, the response would disappear. Thus, rewards provided a mechanism for establishing a more adaptive response.
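The law of effect can be illustrated with a toy simulation. The sketch below is only an illustration under stated assumptions: the update rule, the function names `update_strength` and `run_trials`, and the `gain` and `decay` parameters are hypothetical and do not come from Thorndike's monograph. It shows a response strength that climbs when every trial is rewarded and fades when no reward follows.

```python
def update_strength(strength, rewarded, gain=0.2, decay=0.2):
    """Return the new strength (between 0 and 1) of a response after one trial.

    Hypothetical rule, not Thorndike's own formulation: a reward moves the
    strength part of the way toward 1; no reward moves it part of the way
    toward 0.
    """
    if rewarded:
        return strength + gain * (1.0 - strength)
    return strength - decay * strength


def run_trials(outcomes, start=0.5):
    """Apply update_strength across a sequence of True/False reward outcomes."""
    strength = start
    history = [strength]
    for rewarded in outcomes:
        strength = update_strength(strength, rewarded)
        history.append(strength)
    return history


if __name__ == "__main__":
    stamped_in = run_trials([True] * 10)   # response always rewarded
    faded = run_trials([False] * 10)       # response never rewarded
    print(f"after 10 rewarded trials:   {stamped_in[-1]:.3f}")
    print(f"after 10 unrewarded trials: {faded[-1]:.3f}")
```

Starting from the same strength, the rewarded response ends close to 1 and the unrewarded response ends close to 0, which is the qualitative pattern the law describes.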

Associationism came to be dominated by American behavioral psychologist John B. Watson (Figure 1.16), who proposed that psychology could be objective only if it were based on observable behavior. He rejected Ebbinghaus’s methods and declared that all talk of mental processes, which cannot be publicly observed, should be avoided. Associationism became committed to an idea widely popularized by Watson that he could turn any baby into anything. Learning was the key, he proclaimed, and everybody had the same neural equipment on which learning could build. Appealing to the American sense of equality, American psychology was giddy with this idea of the brain as a blank slate upon which to build through learning and experience, and every prominent psychology department in the country was run by people who held this view.

Behaviorist–associationist psychology went on despite the already well-established position—first articulated by Descartes, Leibniz, Kant, and others—that complexity is built into the human organism. Sensory information is merely data on which preexisting mental structures act. This idea, which dominates psychology today, was blithely asserted in that golden age, and later forgotten or ignored.

Although American psychologists were focused on behaviorism, the psychologists in Britain and Canada were not. Montreal became a hot spot for new ideas on how biology shapes cognition and behavior. In 1928, Wilder Penfield (Figure 1.17), an American who had studied neuropathology with Sir Charles Sherrington at Oxford, became that city’s first neurosurgeon. In collaboration with Herbert Jasper, he invented the Montreal procedure for treating epilepsy, in which he surgically destroyed the neurons in the brain that produced the seizures. To determine which cells to destroy, Penfield stimulated various parts of the brain with electrical probes and observed the results on the patients—who were awake, lying on the operating table under local anesthesia only. From these observations, he was able to create maps of the sensory and motor cortices in the brain (Penfield & Jasper, 1954) that Hughlings Jackson had predicted over half a century earlier.

FIGURE 1.16 (a) John B. Watson (1878–1958). (b) Watson and “Little Albert” during one of Watson’s fear-conditioning experiments.

FIGURE 1.17 Wilder Penfield (1891–1976).

FIGURE 1.18 Donald O. Hebb (1904–1985).

FIGURE 1.19 Brenda Milner (1918–).

FIGURE 1.20 George A. Miller (1920–2012).

Soon he was joined by a Nova Scotian psychologist, Donald Hebb (Figure 1.18), who spent time working with Penfield studying the effects of brain surgery and injury on the functioning of the brain. Hebb became convinced that the workings of the brain explained behavior and that the psychology and biology of an organism could not be separated. Although this idea—which kept popping up only to be swept under the carpet again and again over the past few hundred years—is well accepted now, Hebb was a maverick at the time. In 1949 he published a book, The Organization of Behavior: A Neuropsychological Theory (Hebb, 1949), that rocked the psychological world. In it he postulated that learning had a biological basis. The well-known neuroscience mantra “cells that fire together, wire together” is a distillation of his proposal that neurons can combine together into a single processing unit and the connection patterns of these units make up the ever-changing algorithms determining the brain’s response to a stimulus. He pointed out that the brain is active all the time, not just when stimulated by an impulse, and that inputs from the outside can only modify the ongoing activity. Hebb’s theory was subsequently used in the design of artificial neural networks.
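In artificial neural networks, the "fire together, wire together" idea is often written as a weight change proportional to the product of the activity on the two sides of a connection. The following is a minimal sketch of that simplified rule, not Hebb's own formulation; the function name `hebbian_update`, the `learning_rate` parameter, and the two-unit example are assumptions made for illustration.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1):
    """Return new weights after one simplified Hebbian step.

    weights[i][j] is the connection from input unit j to output unit i.
    Each weight grows by learning_rate * post[i] * pre[j], so a connection
    is strengthened only when both of its units are active together.
    """
    return [
        [w + learning_rate * post[i] * pre[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


if __name__ == "__main__":
    weights = [[0.0, 0.0], [0.0, 0.0]]
    pre = [1.0, 0.0]    # only input unit 0 is active
    post = [1.0, 0.0]   # only output unit 0 is active
    for _ in range(5):
        weights = hebbian_update(weights, pre, post)
    print(weights)      # only weights[0][0] has grown
```

This bare rule only ever increases weights between co-active units; practical network models usually add some form of normalization or decay, which is omitted here.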

Hebb’s British graduate student, Brenda Milner (Figure 1.19), continued the behavioral studies on Penfield’s patients, both before and after their surgery. When patients began to complain about mild memory loss after surgery, she became interested in memory and was the first to provide anatomical and physiological proof that there are multiple memory systems. Brenda Milner, 60 years later, is still associated with the Montreal Neurological Institute and has seen a world of change sweep across the study of brain, mind, and behavior. She was in the vanguard of cognitive neuroscience as well as one of the first in a long line of influential women in the field.

The true end of the dominance of behaviorism and stimulus–response psychology in America did not come until the late 1950s. Psychologists began to think in terms of cognition, not just behavior. George Miller (Figure 1.20), who had been a confirmed behaviorist, had a change of heart in the 1950s. In 1951, Miller wrote an influential book entitled Language and Communication and noted in the preface, “The bias is behavioristic.” Eleven years later he wrote another book, called Psychology, the Science of Mental Life—a title that signals a complete rejection of the idea that psychology should study only behavior.

Upon reflection, Miller determined that the exact date of his rejection of behaviorism and his cognitive awakening was September 11, 1956, during the second Symposium on Information Theory, held at the Massachusetts Institute of Technology (MIT). That year had been a rich one for several disciplines. In computer science, Allen Newell and Herbert Simon successfully introduced Information Processing Language I, a powerful program that simulated the proof of logic theorems. The computer guru John von Neumann wrote the Silliman lectures on neural organization, in which he considered the possibility that the brain’s computational activities were similar to a massively parallel computer. A famous meeting on artificial intelligence was held at Dartmouth College, where Marvin Minsky, Claude Shannon (known as the father of information theory), and many others were in attendance.

FIGURE 1.21 Noam Chomsky (1928–).

FIGURE 1.22 Patricia Goldman-Rakic (1937–2003).

Big things were also happening in psychology. Signal detection and computer techniques, developed in World War II to help the U.S. Department of Defense detect submarines, were now being applied by psychologists James Tanner and John Swets to study perception. In 1956, Miller wrote his classic and entertaining paper, “The Magical Number Seven, Plus or Minus Two,” in which he showed that there is a limit to the amount of information that can be apprehended in a brief period of time. Attempting to reckon this amount of information led Miller to Noam Chomsky’s work (Figure 1.21; for a review see Chomsky, 2006), where he came across perhaps the most important development in the field. Chomsky showed him how the sequential predictability of speech follows from adherence to grammatical, not probabilistic, rules. A preliminary version of Chomsky’s ideas on syntactic theories, published in September 1956 in an article titled “Three Models for the Description of Language,” transformed the study of language virtually overnight. The deep message that Miller gleaned was that learning theory (that is, associationism, then heavily championed by B. F. Skinner) could in no way explain how language was learned. The complexity of language was built into the brain, and it ran on rules and principles that transcended all people and all languages. It was innate and it was universal. Thus, on September 11, 1956, after a year of great development and theory shifting, Miller realized that, although behaviorism had important theories to offer, it could not explain all learning. He then set out to understand the psychological implications of Chomsky’s theories by using psychological testing methods. His ultimate goal was to understand how the brain works as an integrated whole: to understand the workings of the brain and the mind. Many followed his new mission, and a few years later a new field was born: cognitive neuroscience.

What has come to be a hallmark of cognitive neuroscience is that it is made up of an insalata mista (“mixed salad”) of different disciplines. Miller had stuck his nose into the worlds of linguistics and computer science and come out with revelations for psychology and neuroscience. In the same vein, in the 1970s Patricia Goldman-Rakic (Figure 1.22) put together a multidisciplinary team of people working in biochemistry, anatomy, electrophysiology, pharmacology, and behavior. She was curious about one of Milner’s memory systems, working memory, and chose to ignore the behaviorists’ claim that the prefrontal cortex’s higher cognitive function could not be studied. As a result, she produced the first description of the circuitry of the prefrontal cortex and how it relates to working memory (Goldman-Rakic, 1987). Later she discovered that individual cells in the prefrontal cortex are dedicated to specific memory tasks, such as remembering a face or a voice. She also performed the first studies on the influence of dopamine on the prefrontal cortex. Her findings caused a phase shift in the understanding of many mental illnesses—including schizophrenia, which previously had been thought to be the result of bad parenting.

The Instruments of Neuroscience

Changes in electrical impulses, fluctuations in blood flow, and shifts in utilization of oxygen and glucose are the driving forces of the brain’s business. They are also the parameters that are measured and analyzed in the various methods used to study how mental activities are supported by brain functions. The advances in technology and the invention of these methods have provided cognitive neuroscientists the tools to study how the brain enables the mind. Without these instruments, the discoveries made in the past 40 years would not have been possible. In this section, we provide a brief history of the people, ideas, and inventions behind some of the noninvasive techniques used in cognitive neuroscience. Many of these methods and their current applications are discussed in greater detail in Chapter 3.

The Electroencephalograph

In 1875, shortly after Hermann von Helmholtz figured out that it was actually an electrical impulse wave that carried messages along the axon of a nerve, British scientist Richard Caton used a galvanometer to measure continuous spontaneous electrical activity from the cerebral cortex and skull surface of live dogs and apes. A fancier version, the “string galvanometer,” designed by a Dutch physician, Willem Einthoven, was able to make photographic recordings of the electrical activity. Using this apparatus, the German psychiatrist Hans Berger published a paper describing recordings of a human brain’s electrical currents in 1929. He named the recording an electroencephalogram. Electroencephalography remained the sole technique for noninvasive brain study for a number of years.

Measuring Blood Flow in the Brain

FIGURE 1.23 Angelo Mosso’s experimental setup was used to measure the pulsations of the brain at the site of a skull defect.

Angelo Mosso, a 19th-century Italian physiologist, was interested in blood flow in the brain and studied patients who had skull defects as the result of neurosurgery. During these studies, he recorded pulsations as blood flowed around and through their cortex (Figure 1.23) and noticed that the pulsations of the brain increased locally during mental activities such as mathematical calculations. He inferred that blood flow followed function. These observations, however, slipped from view and were not pursued until a few decades later when in 1928 John Fulton presented the case of patient Walter K., who was evaluated for a vascular malformation that resided above his visual cortex (Figure 1.24). The patient mentioned that at the back of his head he heard a noise that increased when he used his eyes, but not his other senses. This noise was a bruit, the sound that blood makes when it rushes through a narrowing of its channel. Fulton concluded that blood flow to the visual cortex varied with the attention paid to surrounding objects.

Another 20 years slipped by, and Seymour Kety (Figure 1.25), a young physician at the University of Pennsylvania, realized that if you could perfuse arterial blood with an inert gas, such as nitrous oxide, then the gas would circulate through the brain and be absorbed independently of the brain’s metabolic activity. Its accumulation would be dependent only on physical parameters that could be measured, such as diffusion, solubility, and perfusion. With this idea in mind, he developed a method to measure the blood flow and metabolism of the human brain as a whole. Using more drastic methods in animals (they were decapitated; their brains were then removed and analyzed), Kety was able to measure the blood flow to specific regions of the brain (Landau et al., 1955). His animal studies provided evidence that blood flow was related directly to brain function. Kety’s method and results were used in developing positron emission tomography (described later in this section), which uses radiotracers rather than an inert gas.

FIGURE 1.24 Walter K.’s head with a view of the skull defect over the occipital cortex.

FIGURE 1.25 Seymour S. Kety (1915–2000).

Computerized Axial Tomography

Although blood flow was of interest to those studying brain function, having good anatomical images in order to locate tumors was motivating other developments in instrumentation. Investigators needed to be able to obtain three-dimensional views of the inside of the human body. In the 1930s, Alessandro Vallebona developed tomographic radiography, a technique in which a series of transverse sections are taken. Improving upon these initial attempts, UCLA neurologist William Oldendorf (1961) wrote an article outlining the first description of the basic concept later used in computerized tomography (CT), in which a series of transverse X-rays could be reconstructed into a three-dimensional picture. His concept was revolutionary, but he could not find any manufacturers willing to capitalize on his idea. It took insight and cash, which was provided by four lads from Liverpool, the company EMI, and Godfrey Newbold Hounsfield, a computer engineer who worked at the Central Research Laboratories of EMI, Ltd. EMI was an electronics firm that also owned Capitol Records and the Beatles’ recording contract. Hounsfield, using mathematical techniques and multiple two-dimensional X-rays to reconstruct a three-dimensional image, developed his first scanner, and as the story goes, EMI, flush with cash from the Beatles’ success, footed the bill. Hounsfield performed the first computerized axial tomography (CAT) scan in 1972.

FIGURE 1.26 Irene Joliot-Curie (1897–1956).

FIGURE 1.27 Michel M. Ter-Pogossian (1925–1996).

FIGURE 1.28 Michael E. Phelps (1939–).

Positron Emission Tomography and Radioactive Tracers

While CAT was great for revealing anatomical detail, it revealed little about function. Researchers at Washington University, however, used CAT as the basis for developing positron emission tomography (PET), a noninvasive sectioning technique that could provide information about function. Observations and research by a huge number of people over many years have been incorporated into what ultimately is today’s PET. Its development is interwoven with that of the radioactive isotopes, aka “tracers,” that it employs. We previously noted the work of Seymour Kety done in the 1940s and 1950s. A few years earlier, in 1934, Irene Joliot-Curie (Figure 1.26) and Frederic Joliot-Curie discovered that some originally nonradioactive nuclides emitted penetrating radiation after being irradiated. This observation led Ernest O. Lawrence (the inventor of the cyclotron) and his colleagues at the University of California, Berkeley to realize that the cyclotron could be used to produce radioactive substances. If radioactive forms of oxygen, nitrogen, or carbon could be produced, then they could be injected into the blood circulation and would become incorporated into biologically active molecules. These molecules would concentrate in an organ, where the radioactivity would begin to decay. The concentration of the tracers could then be measured over time, allowing inferences about metabolism to be made.

In 1950, Gordon Brownell at Harvard University realized that positron decay (of a radioactive tracer) was associated with two gamma rays being emitted in opposite directions, 180 degrees apart. Using this handy discovery, a simple positron scanner with a pair of sodium iodide detectors was designed and built, and it was scanning patients for brain tumors in a matter of months (Sweet & Brownell, 1953). In 1959, David E. Kuhl, a radiology resident at the University of Pennsylvania, who had been dabbling with radiation since high school (did his parents know?), and Roy Edwards, an engineer, combined tomography with gamma-emitting radioisotopes and obtained the first emission tomographic image.

The problem with most radioactive isotopes of nitrogen, oxygen, carbon, and fluorine is that their half-lives are measured in minutes. Anyone who was going to use them had to have their own cyclotron and be ready to roll as the isotopes were created. It happened that Washington University had both a cyclotron that produced radioactive oxygen-15 (¹⁵O) and two researchers, Michel Ter-Pogossian and William Powers, who were interested in using it. They found that when injected into the bloodstream, ¹⁵O-labeled water could be used to measure blood flow in the brain (Ter-Pogossian & Powers, 1958). Ter-Pogossian (Figure 1.27) was joined in the 1970s by Michael Phelps (Figure 1.28), a graduate student who had started out his career as a Golden Gloves boxer. Excited about X-ray CT, they thought that they could adapt the technique to reconstruct the distribution within an organ of a short-lived “physiological” radionuclide from its emissions. They designed and constructed the first positron emission tomograph, dubbed PETT (positron emission transaxial tomography; Ter-Pogossian et al., 1975), which later was shortened to PET.
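To see why a half-life measured in minutes forces the cyclotron to sit next to the scanner, it helps to work through the decay arithmetic. The sketch below assumes simple exponential decay; the half-life values (roughly 2 minutes for oxygen-15 and roughly 110 minutes for fluorine-18) are approximate, commonly cited figures that are not given in this chapter, and the function name `fraction_remaining` is hypothetical.

```python
def fraction_remaining(elapsed_minutes, half_life_minutes):
    """Fraction of a radioactive tracer still present after elapsed_minutes.

    Uses the standard half-life relation: N(t) / N(0) = 0.5 ** (t / half_life).
    """
    return 0.5 ** (elapsed_minutes / half_life_minutes)


if __name__ == "__main__":
    # Approximate half-lives in minutes (assumed values, not from the chapter).
    half_lives = {"oxygen-15": 2.0, "fluorine-18": 110.0}
    delay = 10.0  # minutes between production and use
    for isotope, half_life in half_lives.items():
        left = fraction_remaining(delay, half_life)
        print(f"{isotope}: {left:.1%} remains after {delay:.0f} minutes")
```

With these assumed values, a ten-minute delay leaves only a few percent of the oxygen-15 but most of the fluorine-18, which is why the shortest-lived tracers must be produced on site and used immediately.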

Another metabolically important molecule in the brain is glucose. Under the direction of Joanna Fowler and Al Wolf, using Brookhaven National Laboratory’s powerful cyclotron, ¹⁸F-labeled 2-fluorodeoxy-D-glucose (2FDG) was created (Ido et al., 1978). ¹⁸F has a half-life that is amenable to PET imaging and can give precise values of energy metabolism in the brain. The first work using PET to look for neural correlates of human behavior began when Phelps joined Kuhl at the University of Pennsylvania and together, using 2FDG, they established a method for imaging the tissue consumption of glucose. Phelps, in a leap of insight, invented the block detector, a device that eventually increased spatial resolution of PET from 3 centimeters to 3 millimeters.

Magnetic Resonance Imaging

Magnetic resonance imaging (MRI) is based on the principle of nuclear magnetic resonance, which was first described and measured by Isidor Rabi in 1938. Discoveries made independently in 1946 by Felix Bloch at Stanford University and Edward Purcell at Harvard University expanded the understanding of nuclear magnetic resonance to liquids and solids. For example, the protons in a water molecule line up like little bar magnets when placed in a magnetic field. If the equilibrium of these protons is disturbed by zapping them with radio frequency pulses, then a measurable voltage is induced in a receiver coil. The voltage changes over time as a function of the proton’s environment. By analyzing the voltages, information about the examined tissue can be deduced.

FIGURE 1.29 Paul Lauterbur (1929–2007).

In 1971, while Paul Lauterbur (Figure 1.29) was on sabbatical, he was thinking grand thoughts as he ate a fast-food hamburger. He scribbled his ideas on a nearby napkin, and from these humble beginnings he developed the theoretical model that led to the invention of the first magnetic resonance imaging scanner, located at The State University of New York at Stony Brook (Lauterbur, 1973). (Lauterbur won the 2003 Nobel Prize in Physiology or Medicine, but his first attempt at publishing his findings was rejected by the journal Nature. He later quipped, “You could write the entire history of science in the last 50 years in terms of papers rejected by Science or Nature” [Wade, 2003]). It was another 20 years, however, before MRI was used to investigate brain function. This happened when researchers at Massachusetts General Hospital demonstrated that following the injection of contrast material into the bloodstream, changes in the blood volume of a human brain, produced by physiological manipulation of blood flow, could be measured using MRI (Belliveau et al., 1990). Not only were excellent anatomical images produced, but they could be combined with physiology germane to brain function.

Functional Magnetic Resonance Imaging

When PET was introduced, the conventional wisdom was that increased blood flow to differentially active parts of the brain was driven by the brain’s need for more oxygen. An increase in oxygen delivery permitted more glucose to be metabolized, and thus more energy would be available for performing the task. Although this idea sounded reasonable, little data were available to back it up. In fact, if this proposal were true, then increases in blood flow induced by functional demands should be equivalent to the increase in oxygen consumption. This would mean that the ratio of oxygenated to deoxygenated hemoglobin should stay constant. PET data, however, did not back this up (Raichle, 2008). Instead, Peter Fox and Marc Raichle, at Washington University, found that although functional activity induced increases in blood flow, there was no corresponding increase in oxygen consumption (Fox & Raichle, 1986). In addition, more glucose was being used than would be predicted from the amount of oxygen consumed (Fox et al., 1988). What was up with that? Raichle (2008) relates that oddly enough, a random scribble written in the margin of Michael Faraday’s lab notes in 1845 (Faraday, 1933) provided the hint that led to the solution of this puzzle. It was Linus Pauling and Charles Coryell who somehow happened upon this clue.

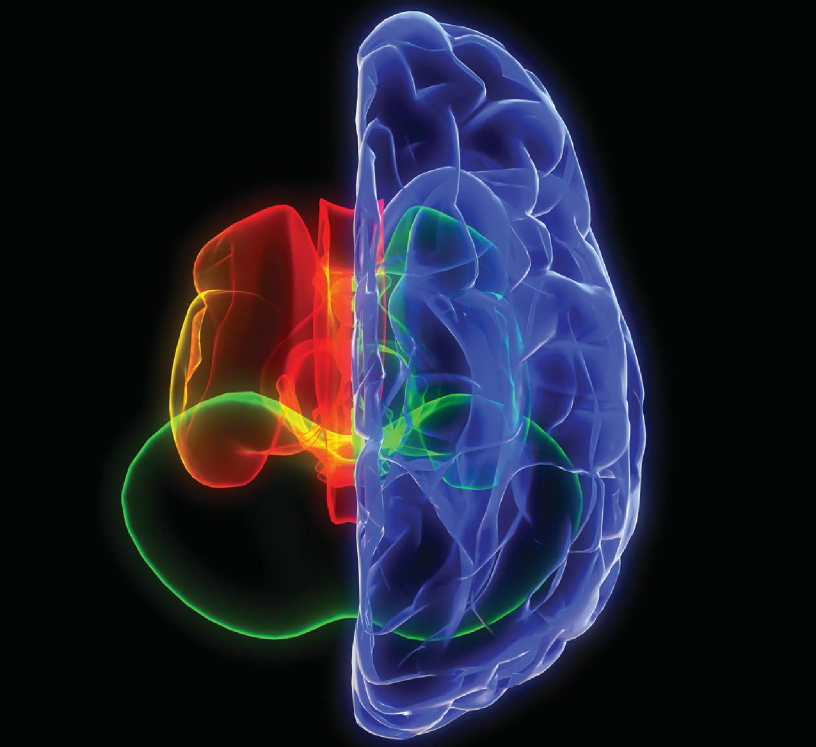

Faraday had noted that dried blood was not magnetic and in the margin of his notes had written that he must try fluid blood. He was puzzled because hemoglobin contains iron. Ninety years later, Pauling and Coryell (1936), after reading Faraday’s notes, became curious too. They found that indeed oxygenated and deoxygenated hemoglobin behaved very differently in a magnetic field. Deoxygenated hemoglobin is weakly magnetic due to the exposed iron in the hemoglobin molecule. Years later, Kerith Thulborn (1982) remembered and capitalized on this property described by Pauling and Coryell, realizing that it was feasible to measure the state of oxygenation in vivo. Seiji Ogawa (1990) and his colleagues at AT&T Bell Laboratories tried manipulating oxygen levels by administering 100% oxygen alternated with room air (21% oxygen) to rodents that were undergoing MRI. They discovered that on room air, the structure of the venous system was visible due to the contrast provided by the deoxygenated hemoglobin that was present. On 100% O2, however, the venous system completely disappeared (Figure 1.30). Thus contrast depended on the blood oxygen level. BOLD (blood oxygen level–dependent) contrast was born. This technique led to the development of functional magnetic resonance imaging (fMRI). MRI does not use ionizing radiation, it combines beautifully detailed images of the body with physiology related to brain function, and it is sensitive (Figure 1.31). With all of these advantages, it did not take long for MRI and fMRI to be adopted by the research community, resulting in explosive growth of functional brain imaging.

FIGURE 1.30 Images of a mouse brain under varying oxygen conditions (panels labeled Air and O2).

Machines are useful, however, only if you know what to do with them and what their limitations are. Raichle understood the potential of these new scanning methods, but he also realized that some basic problems had to be solved. If generalized information about brain function and anatomy were to be obtained, then the scans from different individuals performing the same tasks under the same circumstances had to be comparable. This was proving difficult, however, since no two brains are precisely the same size and shape. Furthermore, early data were yielding a mishmash of results that varied in anatomical location from person to person. Eric Reiman, a psychiatrist working with Raichle, suggested that averaging blood flow across subjects might solve this problem. The results of this approach were clear and unambiguous (Fox, 1988). This landmark paper presented the first integrated approach for the design, execution, and interpretation of functional brain images.

But what can be learned about the brain and the behavior of a human when a person is lying supine in a scanner? Cognitive psychologists Michael Posner, Steve Petersen, and Gordon Shulman, at Washington University, developed innovative experimental paradigms, including the cognitive subtraction method (first proposed by Donders), for use while PET scanning. The methodology was soon applied to fMRI. This joining together of cognitive psychology’s experimental methods with brain imaging was the beginning of human functional brain mapping. Throughout this book, we will draw from the wealth of brain imaging data that has been amassed in the last 30 years in our quest to learn about how the brain enables the mind.
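The two ideas described above, subtracting a control condition from a task condition and averaging across subjects, can be combined in a few lines. This is a minimal sketch rather than the method used in the cited studies: the function names `subtract` and `average_across_subjects` are hypothetical, the blood-flow numbers are invented for illustration, and the sketch assumes each subject's measurements have already been aligned to the same four regions.

```python
def subtract(task, control):
    """Cognitive subtraction: task-condition value minus control-condition value, per region."""
    return [t - c for t, c in zip(task, control)]


def average_across_subjects(maps):
    """Average a list of per-subject maps, region by region."""
    n = len(maps)
    return [sum(values) / n for values in zip(*maps)]


if __name__ == "__main__":
    # Invented blood-flow values for 3 subjects x 4 regions (arbitrary units).
    subjects = [
        {"task": [52, 61, 50, 48], "control": [50, 50, 51, 47]},
        {"task": [49, 63, 47, 52], "control": [50, 51, 48, 50]},
        {"task": [51, 58, 53, 49], "control": [49, 49, 51, 51]},
    ]
    differences = [subtract(s["task"], s["control"]) for s in subjects]
    mean_difference = average_across_subjects(differences)
    for region, value in enumerate(mean_difference):
        print(f"region {region}: mean task-minus-control change = {value:+.2f}")
```

In this made-up data set the small changes that differ in sign from subject to subject largely cancel in the average, while the one region that changes consistently across subjects stands out.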

FIGURE 1.31 An early set of fMRI images showing activation of the human visual cortex.

The Book in Your Hands

Our goals in this book are to introduce you to the big questions and discussions in cognitive neuroscience and to teach you how to think, ask questions, and approach those questions like a cognitive neuroscientist. In the next chapter, we introduce the biological foundations of the brain by presenting an overview of its cellular mechanisms and neuroanatomy. In Chapter 3 we discuss the methods that are available to us for observing mind–brain relationships, and we introduce how scientists go about interpreting and questioning those observations. Building on this foundation, we launch into the core processes of cognition: hemispheric specialization, sensation and perception, object recognition, attention, the control of action, learning and memory, emotion, and language, devoting a chapter to each. These are followed by chapters on cognitive control, social cognition, and a new chapter for this edition on consciousness, free will, and the law.

Each chapter begins with a story that illustrates and introduces the chapter’s main topic. Beginning with Chapter 4, the story is followed by an anatomical orientation highlighting the portions of the brain that we know are involved in these processes, and a description of what a deficit of that process would result in. Next, the heart of the chapter focuses on a discussion of the cognitive process and what is known about how it functions, followed by a summary and suggestions for further reading for those whose curiosity has been aroused.

Summary

Thomas Willis first introduced us, in the mid 1600s, to the idea that damage to the brain could influence behavior and that the cerebral cortex might indeed be the seat of what makes us human. Phrenologists expanded on this idea and developed a localizationist view of the brain. Patients like those of Broca and Wernicke later supported the importance of specific brain locations on human behavior (like language). Ramón y Cajal, Sherrington, and Brodmann, among others, provided evidence that although the microarchitecture of distinct brain regions could support a localizationist view of the brain, these areas are interconnected. Soon scientists began to realize that the integration of the brain’s neural networks might be what enables the mind.

At the same time that neuroscientists were researching the brain, psychologists were studying the mind. Out of the philosophical theory of empiricism came the idea of associationism, that any response followed by a reward would be maintained and that these associations were the basis of how the mind learned. Associationism was the prevailing theory for many years, until Hebb emphasized the biological basis of learning, and Chomsky and Miller realized that associationism couldn’t explain all learning or all actions of the mind.

Neuroscientists and psychologists both reached the conclusion that there is more to the brain than just the sum of its parts, that the brain must enable the mind—but how? The term cognitive neuroscience was coined in the late 1970s because fields of neuroscience and psychology were once again coming together. Neuroscience was in need of the theories of the psychology of the mind, and psychology was ready for a greater understanding of the working of the brain. The resulting marriage is cognitive neuroscience.

The last half of the 20th century saw a blossoming of interdisciplinary research that produced both new approaches and new technologies resulting in noninvasive methods of imaging brain structure, metabolism, and function.

So welcome to cognitive neuroscience. Whatever your background is, you’re welcome here.

Key Terms

aggregate field theory

associationism

behaviorism

cognitive neuroscience

cytoarchitectonics

empiricism

Montreal procedure

neuron doctrine

phrenology

rationalism

syncytium

Thought Questions

Suggested Reading

Kass-Simon, G., & Farnes, P. (1990). Women of science: Righting the record. Bloomington: Indiana University Press.

Lindzey, G. (Ed.). (1936). History of psychology in autobiography (Vol. 3). Worcester, MA: Clark University Press.

Miller, G. (2003). The cognitive revolution: A historical perspective. Trends in Cognitive Sciences, 7, 141–144.

Raichle, M. E. (1998). Behind the scenes of functional brain imaging: A historical and physiological perspective. Proceedings of the National Academy of Sciences, USA, 95, 765–772.

Shepherd, G. M. (1991). Foundations of the neuron doctrine. New York: Oxford University Press.

Zimmer, C. (2004). Soul made flesh: The discovery of the brain—and how it changed the world. New York: Free Press.